First, import the requests module:

import requests

1. Sending requests

r = requests.get('https://api.github.com/events')  # GET request

r = requests.post('http://httpbin.org/post', data={'key': 'value'})  # POST request

r = requests.put('http://httpbin.org/put', data={'key': 'value'})  # PUT request

r = requests.delete('http://httpbin.org/delete')  # DELETE request

r = requests.head('http://httpbin.org/get')  # HEAD request

r = requests.options('http://httpbin.org/get')  # OPTIONS request

type(r)  # every call above returns a requests.models.Response
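Each helper above builds a request with the matching HTTP method and sends it immediately. To see what would actually go over the wire without sending anything, you can construct a `requests.Request` and call `prepare()`. A minimal offline sketch; the helper name `build` is hypothetical and only for illustration:

```python
import requests

# Hypothetical helper: build and prepare a request without sending it.
def build(method, url, **kwargs):
    return requests.Request(method, url, **kwargs).prepare()

get_req = build("GET", "http://httpbin.org/get")
post_req = build("POST", "http://httpbin.org/post", data={"key": "value"})

print(get_req.method, get_req.url, get_req.body)
# GET http://httpbin.org/get None
print(post_req.method, post_req.body, post_req.headers["Content-Type"])
# POST key=value application/x-www-form-urlencoded
```

A dict passed as `data=` is form-encoded, which is why the POST carries `key=value` rather than JSON.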

2. Passing URL parameters

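URL parameters take the form httpbin.org/get?key=val. Building such URLs by hand is tedious; instead, pass a dict to the params argument and requests will construct the query string for you.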

payload = {'key1': 'value1', 'key2': 'value2'}

r = requests.get("http://httpbin.org/get", params=payload)

print(r.url)


http://httpbin.org/get?key1=value1&key2=value2
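Because requests encodes params itself, you don't need to escape values by hand. A small offline check (the payload values are made up for illustration): keys whose value is None are left out of the query string, and spaces and `&` are escaped so they cannot break it.

```python
import requests

payload = {"q": "python requests", "lang": None, "tag": "a&b"}

# Prepare (but do not send) the GET request to inspect the final URL.
prepared = requests.Request("GET", "http://httpbin.org/get", params=payload).prepare()
print(prepared.url)  # http://httpbin.org/get?q=python+requests&tag=a%26b
```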


A single key can also take multiple values; pass them as a list:

payload = {'key1': 'value1', 'key2': ['value2', 'value3']}

r = requests.get('http://httpbin.org/get', params=payload)

print(r.url)

http://httpbin.org/get?key1=value1&key2=value2&key2=value3
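params also accepts a list of (key, value) tuples, which repeats a key just as a list value does and makes the parameter order explicit. A sketch comparing the two forms offline; `build_url` is a hypothetical helper:

```python
import requests

as_dict = {"key1": "value1", "key2": ["value2", "value3"]}
as_pairs = [("key1", "value1"), ("key2", "value2"), ("key2", "value3")]

# Hypothetical helper: return the URL requests would request for these params.
def build_url(params):
    return requests.Request("GET", "http://httpbin.org/get", params=params).prepare().url

print(build_url(as_dict) == build_url(as_pairs))  # True
```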

3. Custom headers

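Custom headers are one way to get past anti-scraping checks. The server sees the headers sent with every request and can use them (the User-Agent in particular) to tell a script from a browser, so for sites that block scrapers you can send browser-like headers to make the request look like an ordinary browser visit.

To customize headers, just pass a dict to the headers parameter: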

url = 'https://api.github.com/some/endpoint'

headers = {'user-agent': 'my-app/0.0.1'}

r = requests.get(url, headers=headers)
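Without custom headers, requests identifies itself with a default User-Agent of the form `python-requests/<version>`, which is one of the things a site can use to spot a script. The sketch below uses a Session to show, without sending anything, how per-request headers are merged over those defaults. Header names are case-insensitive, so `user-agent` replaces `User-Agent`:

```python
import requests

session = requests.Session()
print(session.headers["User-Agent"])  # python-requests/<version>

# Per-request headers are merged over the session defaults when preparing.
req = requests.Request("GET", "https://api.github.com/some/endpoint",
                       headers={"user-agent": "my-app/0.0.1"})
prepared = session.prepare_request(req)
print(prepared.headers["User-Agent"])  # my-app/0.0.1
```

`requests.get(url, headers=headers)` goes through the same merge, since the module-level helpers use a Session internally.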

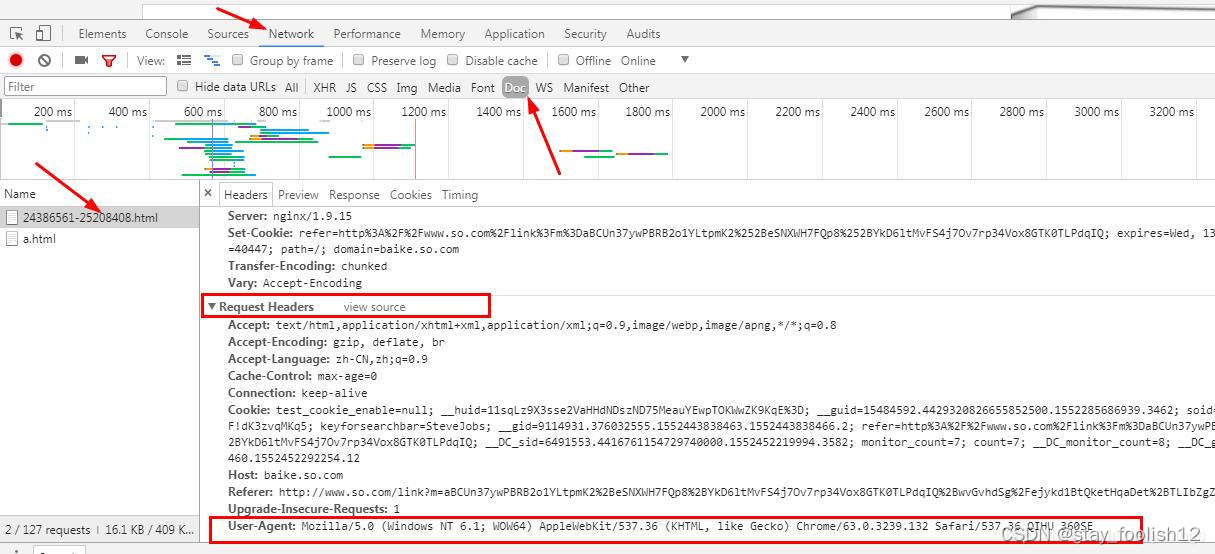

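To find the headers your browser sends in Chrome or Firefox, right-click the page and choose Inspect, then follow the steps shown in the screenshot. Press F5 (Fn+F5 on some keyboards) to reload the page so its requests appear.

In some browsers the menu item is called Inspect Element; open it and refresh the page the same way.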
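Headers copied from the browser's developer tools usually arrive as `Name: value` lines. The hypothetical helper below (not part of requests) turns such text into a dict you can pass as `headers=`. It assumes one pair per line and skips HTTP/2 pseudo-headers such as `:authority`, which cannot be sent as ordinary headers. The sample header values are made up:

```python
# Hypothetical helper: parse "Name: value" lines into a headers dict.
def parse_raw_headers(raw):
    headers = {}
    for line in raw.strip().splitlines():
        # Split at the first colon only, so values may contain colons.
        name, sep, value = line.partition(":")
        # Skip lines without a colon and pseudo-headers like ":authority".
        if not sep or not name.strip():
            continue
        headers[name.strip()] = value.strip()
    return headers

copied = """
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64)
Accept: text/html,application/xhtml+xml
Accept-Language: en-US,en;q=0.9
"""
print(parse_raw_headers(copied))
```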

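Headers can also carry authentication and content-type information. The request below POSTs a JSON document to what appears to be an Elasticsearch index endpoint (the server address is masked), with custom Authorization and Content-Type headers:

import json
data = {"title": "example"}  # hypothetical document body; replace with your own dict
# The Authorization value is HTTP Basic auth for admin:admin (base64-encoded).
# verify=False skips TLS certificate verification; use it for testing only.
response = requests.post(
    'https://17*.**.**.***:9200/archives_original/_doc/952893014813904896?pretty',
    data=json.dumps(data, ensure_ascii=False).encode("utf-8"),
    headers={"Authorization": "Basic YWRtaW46YWRtaW4=", "Content-Type": "application/json"},
    verify=False)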
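requests can build the same two headers for you: `json=` serializes the body and sets `Content-Type: application/json`, and `auth=(user, password)` produces the Basic Authorization header. A sketch checked offline with `prepare()`; the localhost URL and document are hypothetical placeholders. Note that `json=` serializes with the default settings, so non-ASCII text is sent as `\uXXXX` escapes; keep the explicit `json.dumps(..., ensure_ascii=False)` form if the server must receive raw UTF-8.

```python
import json
import requests

data = {"title": "example", "year": 2020}  # hypothetical document

prepared = requests.Request(
    "POST",
    "http://localhost:9200/archives_original/_doc/1",  # hypothetical URL
    json=data,                # serialized to JSON; Content-Type set automatically
    auth=("admin", "admin"),  # becomes "Authorization: Basic ..." header
).prepare()

print(prepared.headers["Content-Type"])   # application/json
print(prepared.headers["Authorization"])  # Basic YWRtaW46YWRtaW4=
print(json.loads(prepared.body) == data)  # True
```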