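I. Kubernetes

- Kubernetes is abbreviated K8s, where the 8 stands for the eight characters "ubernete" between the K and the s. It is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and powerful, and it provides mechanisms for application deployment, scheduling, updating, and maintenance.

- Traditionally, applications were installed with plugins or scripts. The drawback is that the application's runtime, configuration, management, and entire lifecycle are tied to the host operating system, which makes upgrades and rollbacks difficult. Virtual machines can provide some of the same functionality, but they are heavyweight and not very portable.

- The newer approach is to deploy containers. Containers are isolated from one another, each has its own filesystem, processes in different containers do not interfere with each other, and compute resources can be partitioned between them. Compared with virtual machines, containers deploy quickly, and because they are decoupled from the underlying infrastructure and the host filesystem, they can be migrated across clouds and operating system versions.

- Containers use few resources and deploy quickly. Each application can be packaged as a container image, and this one-to-one relationship between application and container is a further advantage: the image can be created at build or release time, and because an application does not need to be combined with the rest of the application stack and does not depend on the production infrastructure, development, testing, and production all get a consistent environment. Containers are also lighter and more "transparent" than virtual machines, which makes them easier to monitor and manage.

- Kubernetes is a container orchestration engine open-sourced by Google. It supports automated deployment, large-scale scaling, and management of containerized applications. When an application is deployed to production, several instances are usually run so that requests can be load-balanced across them.

- In Kubernetes, we can create multiple containers, each running one application instance, and then use the built-in load-balancing strategy to manage, discover, and access this group of instances, without operators having to configure any of this by hand.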
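As a rough illustration of the last two points, the sketch below uses two kubectl commands to run several replicas of one application and put a single load-balanced Service in front of them. The helper name deploy_replicated and the example names web and nginx are hypothetical placeholders, and the commands assume a working cluster such as the one built in the next section.

```shell
# Sketch: run N replicas of an app and expose them behind one Service.
# deploy_replicated and its arguments are illustrative, not part of Kubernetes.
deploy_replicated() {
    local name="$1" image="$2" replicas="$3" port="$4"
    # Create a Deployment that keeps $replicas Pods of $image running.
    kubectl create deployment "$name" --image="$image" --replicas="$replicas" || return 1
    # Create a Service that load-balances traffic across those Pods.
    kubectl expose deployment "$name" --port="$port"
}

# Example (hypothetical names): deploy_replicated web nginx 3 80
```

Wrapping the two commands in one function keeps the Deployment and its Service together, which mirrors the idea that the group of instances is managed and accessed as one unit.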

二、安装配置k8s

实验前提:

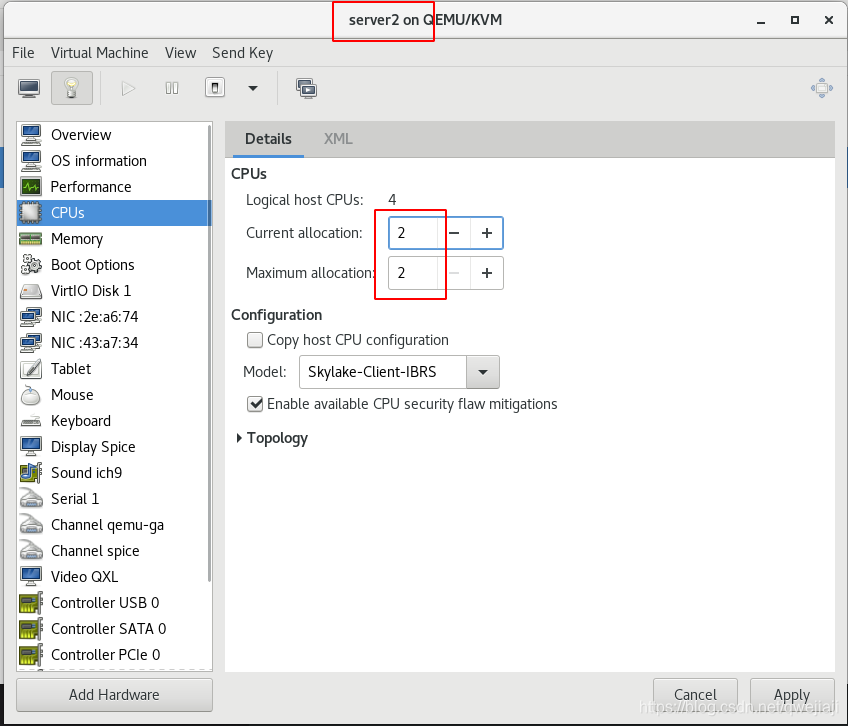

四台虚拟机,server1、server2、server3、server4都已经安装docker,server1中部署harbor仓库,server2做k8s集群的管理(注意k8s的管理端的这个虚拟机必须是cpu大于等于2),server3和server4做k8s集群的node。

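1. Environment setup

Run on server2, server3, and server4:

docker image prune # remove dangling images

docker service rm fbx15qromxax nvl5q273y41l ca1i2kjolag7 # remove leftover swarm services (these IDs are specific to this environment)

docker network prune # remove unused networks

docker container prune # remove stopped containers

docker volume prune # remove unused volumes

docker swarm leave # leave the swarm cluster (a manager node needs --force)

cd /etc/systemd/system/docker.service.d

rm -fr 10-machine.conf # remove the drop-in left behind by docker-machine

systemctl daemon-reload

systemctl restart docker # restart Docker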

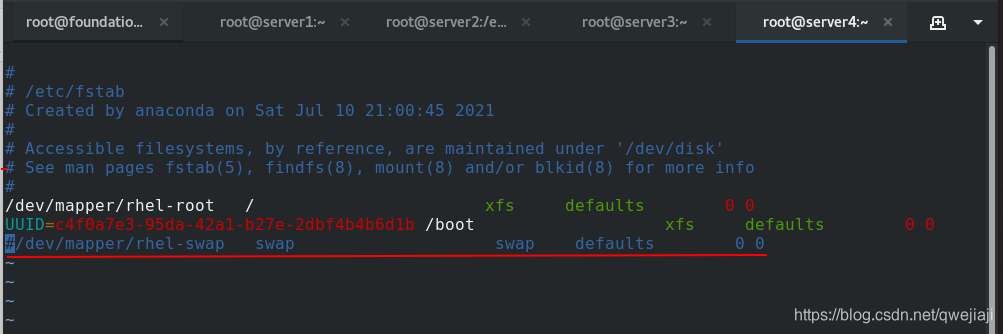

2. Disable swap on all nodes

Run on server2, server3, and server4:

swapoff -a

vim /etc/fstab # comment out the swap entry in /etc/fstab
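Editing /etc/fstab by hand on three nodes is easy to get wrong, so the same change can be scripted. The following is a minimal sketch, assuming a standard fstab layout where the filesystem type field is the word swap; the helper name disable_swap_entries is hypothetical.

```shell
# Sketch: comment out active swap entries in an fstab-style file.
# disable_swap_entries is a hypothetical helper; pass /etc/fstab on a real node.
disable_swap_entries() {
    local fstab="$1"
    # Match uncommented lines that contain "swap" as a whitespace-delimited
    # field and prefix them with "#". A .bak copy of the original is kept.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Example (as root): swapoff -a && disable_swap_entries /etc/fstab
```

Lines that are already commented out are left alone, so the helper can be run more than once.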

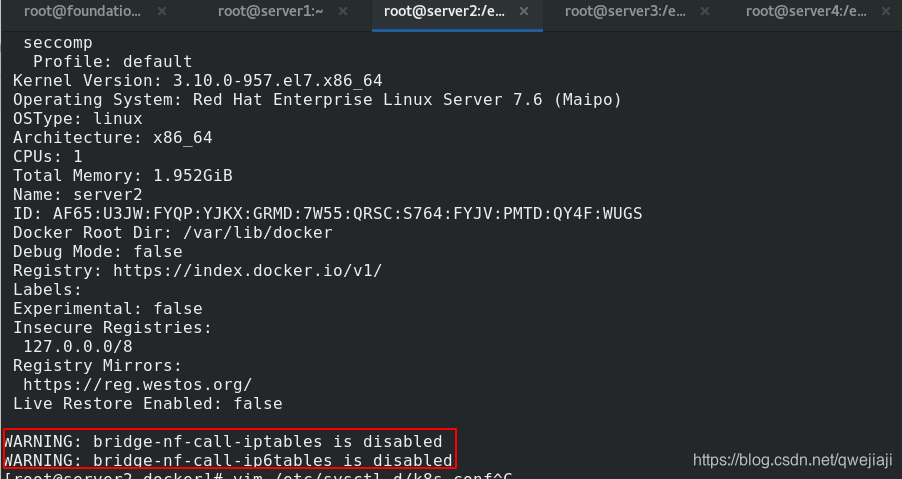

3. Set the Docker cgroup driver to systemd

Run on server2, server3, and server4:

vim /etc/docker/daemon.json

///

{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

///

systemctl restart docker # restart Docker

docker info # confirm the change (Cgroup Driver should now be systemd)
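Rather than reading through the full docker info output on every node, the relevant line can be extracted. This is a small sketch that parses docker info text from standard input; the helper name cgroup_driver is hypothetical.

```shell
# Sketch: extract the cgroup driver from `docker info` text on stdin.
# cgroup_driver is a hypothetical helper name.
cgroup_driver() {
    # docker info prints a line such as " Cgroup Driver: systemd".
    awk -F': ' '/Cgroup Driver/ { print $2; exit }'
}

# Example: docker info 2>/dev/null | cgroup_driver
```

On a correctly configured node the example should print systemd.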

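4. Configure bridge networking

This resolves the bridge-related warnings that docker info printed in the previous step.

Run on server2, server3, and server4:

vim /etc/sysctl.d/k8s.conf

///

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1

///

sysctl --system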
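The two net.bridge keys only exist while the br_netfilter kernel module is loaded, so sysctl --system may report them as missing on a fresh boot. The sketch below writes the same file non-interactively; the helper name write_bridge_sysctl is hypothetical, and loading br_netfilter first is an assumption about the node rather than a step from the original walkthrough.

```shell
# Sketch: write the bridge netfilter settings to a sysctl drop-in file.
# write_bridge_sysctl is a hypothetical helper; the default path matches the step above.
write_bridge_sysctl() {
    local conf="${1:-/etc/sysctl.d/k8s.conf}"
    cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# On a real node (as root), assuming the br_netfilter module is available:
#   modprobe br_netfilter && write_bridge_sysctl && sysctl --system
```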

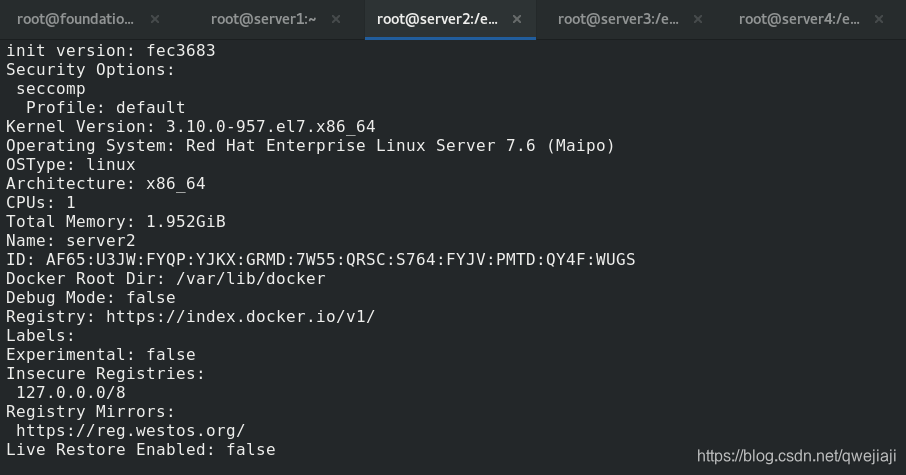

docker info

The warnings are gone.

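5. Installation

Run on server2, server3, and server4:

If you have the package archive, extract it, enter the directory, and install everything in it:

tar zxf kubeadm-1.21.3.tar.gz

cd packages/

yum install -y *

systemctl enable --now kubelet.service # start kubelet now and enable it at boot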

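6. Generate an SSH key

To make later steps easier, run ssh-keygen on server2 to generate a key pair, then use ssh-copy-id to copy the public key to server3 and server4 for passwordless login.

On server2:

ssh-keygen

ssh-copy-id server3

ssh-copy-id server4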

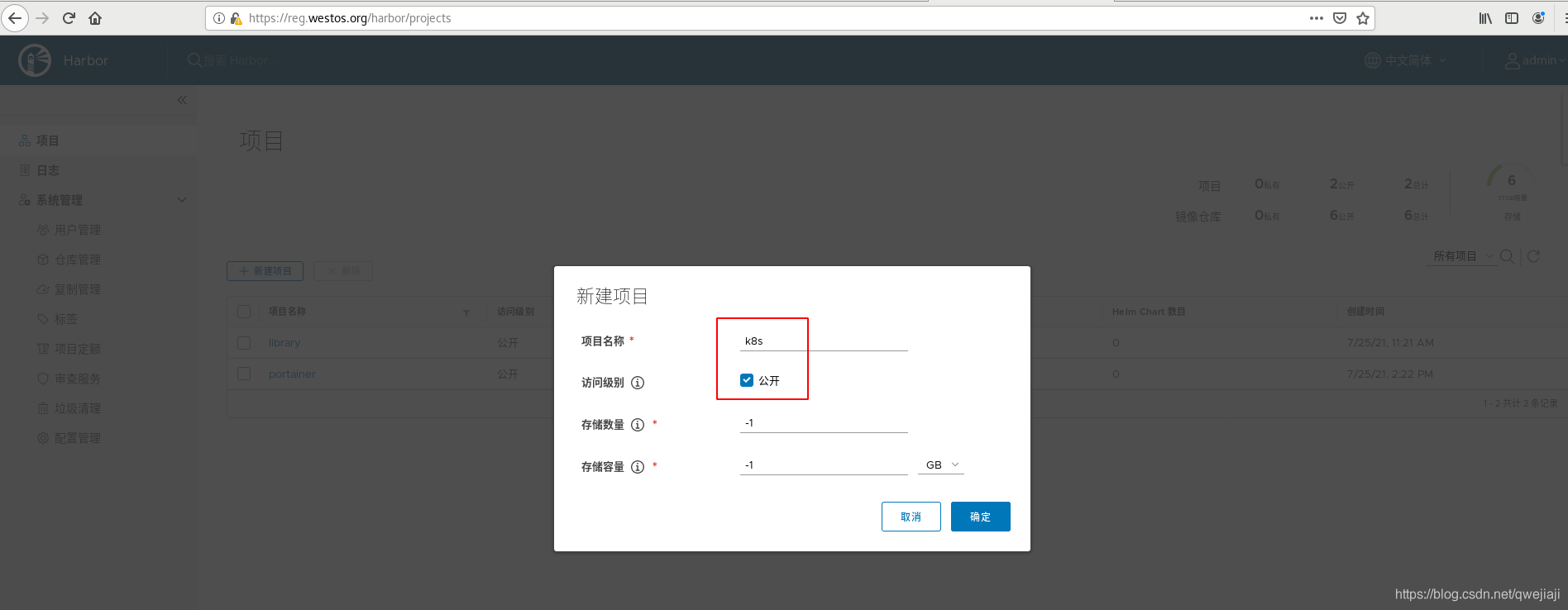

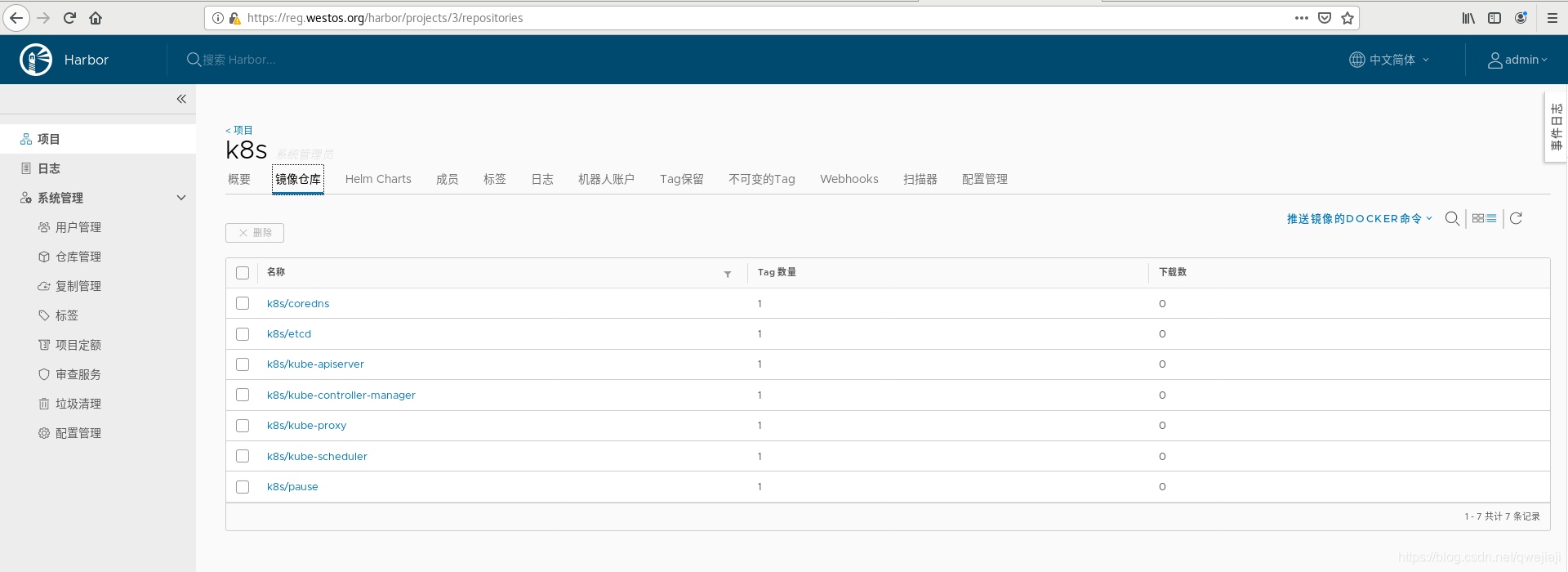

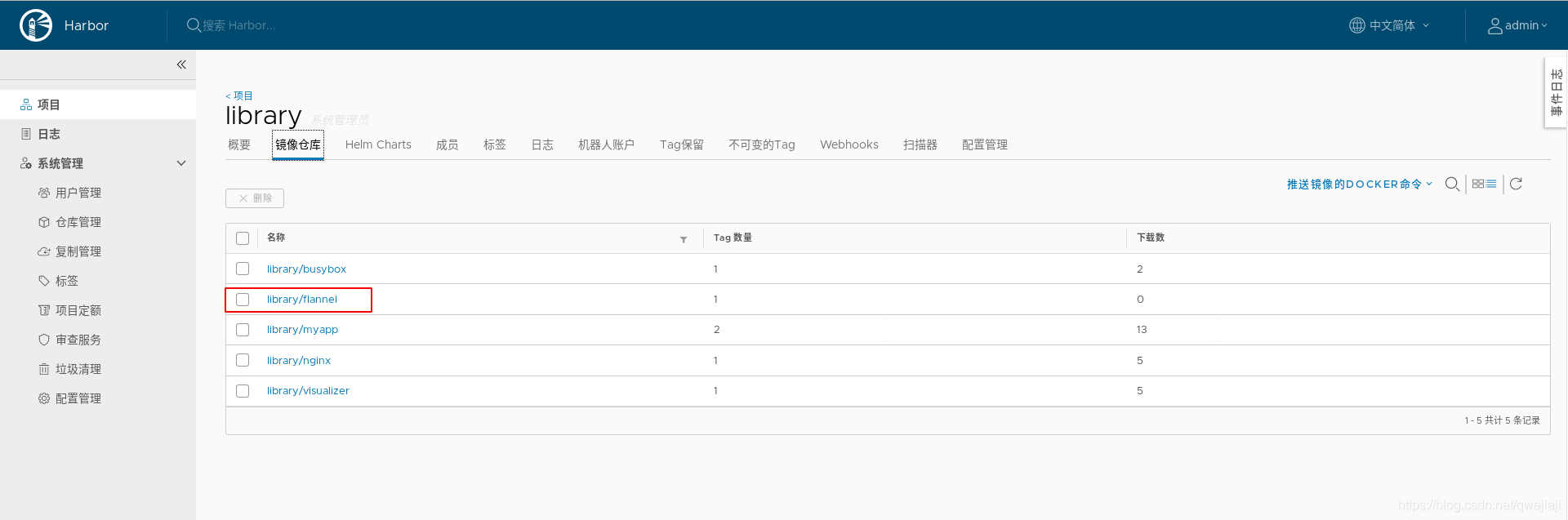

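7. Create a k8s project in Harbor

To keep things organized, create a project named k8s in Harbor dedicated to the K8s images, and make it public so that anonymous users can pull from it.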

8、镜像上传到仓库

网上下载会很慢,而且容易无法连接,这里我提前把所需镜像放到server1的harbor仓库里,server2、3、4可以直接用仓库的,无需上网拉取。

kubeadm config print init-defaults %查看默认配置信息

kubeadm config images list --image-repository registry.aliyuncs.com/google_containers %列出所需镜像

kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers %拉取镜像

server1里执行:

docker load -i k8s-1.21.3.tar

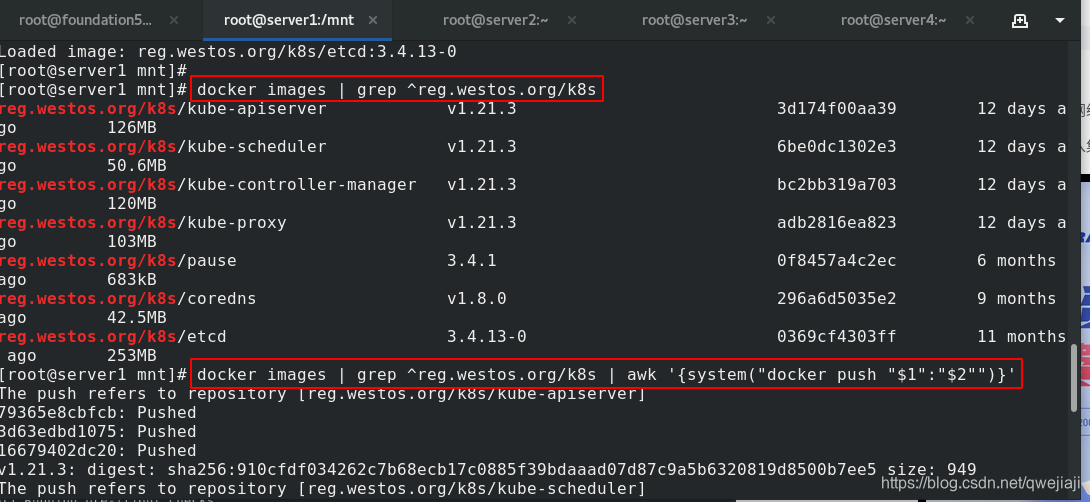

docker images | grep ^reg.westos.org/k8s #有7个相关镜像

docker images | grep ^reg.westos.org/k8s | awk '{system("docker push "$1":"$2"")}' #批量上传镜像到reg.westos.org/k8s
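Calling system() from awk runs each push immediately, which makes it hard to check what will be pushed. As an alternative sketch, the helper below only prints the push commands so they can be reviewed before being piped to a shell. The name push_commands and its prefix argument are hypothetical.

```shell
# Sketch: turn `docker images` output on stdin into one push command per image.
# push_commands and its prefix argument are hypothetical.
push_commands() {
    local prefix="$1"
    # Column 1 is the repository and column 2 the tag; keep only
    # repositories that start with the given prefix.
    awk -v p="$prefix" 'index($1, p) == 1 { print "docker push " $1 ":" $2 }'
}

# Review first:  docker images | push_commands reg.westos.org/k8s
# Then execute:  docker images | push_commands reg.westos.org/k8s | sh
```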

9、初始化

server2里执行:

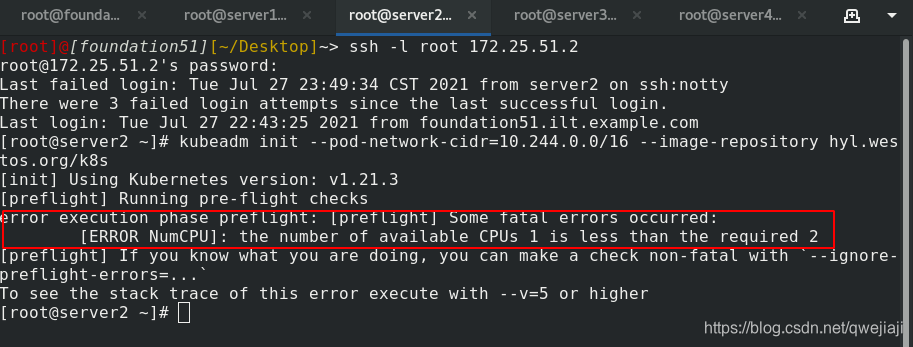

kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository reg.westos.org/k8s #初始化k8s集群

出现以上报错则在server2的cpu配置里,加大cpu到2!
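The CPU requirement can be checked before running kubeadm init. This is a minimal sketch using nproc; the helper name has_enough_cpus is hypothetical, and its second argument exists only so the check can be exercised without changing the VM.

```shell
# Sketch: preflight check for the CPU requirement before running kubeadm init.
# has_enough_cpus is a hypothetical helper; the second argument is for testing only.
has_enough_cpus() {
    local required="${1:-2}"
    local actual="${2:-$(nproc)}"
    # Succeeds when the CPU count is at least the required number.
    [ "$actual" -ge "$required" ]
}

# Example: has_enough_cpus 2 || echo "add CPUs to this VM before kubeadm init" >&2
```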

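On success, kubeadm prints follow-up instructions and the command that other nodes use to join the cluster.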

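10. Configure the environment variable and kubectl completion

Run on server2:

Follow the instructions printed at the end of initialization:

export KUBECONFIG=/etc/kubernetes/admin.conf

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile # persist the environment variable

echo "source <(kubectl completion bash)" >> ~/.bashrc # enable kubectl tab completion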
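Appending with >> adds a duplicate line every time the step is repeated, for example after rebuilding the cluster. A small sketch of an idempotent alternative follows; the helper name append_once is hypothetical.

```shell
# Sketch: append a line to a file only if it is not already present.
# append_once is a hypothetical helper name.
append_once() {
    local line="$1" file="$2"
    # -x matches whole lines only, -F treats the line as a fixed string.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example:
#   append_once 'export KUBECONFIG=/etc/kubernetes/admin.conf' ~/.bash_profile
#   append_once 'source <(kubectl completion bash)' ~/.bashrc
```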

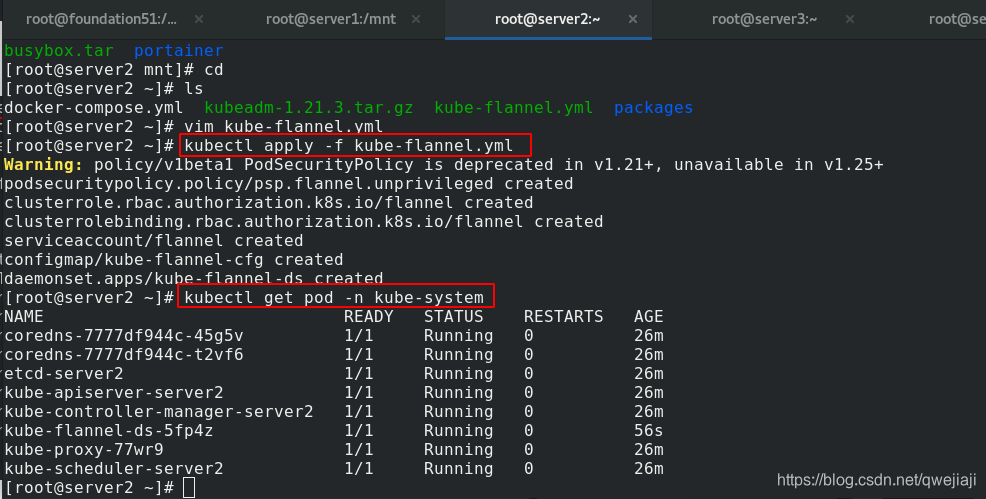

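11. Install the flannel network add-on

Install flannel from the control-plane node. If you do not have the flannel image locally, pull it from the internet:

docker pull quay.io/coreos/flannel:v0.14.0 # pull the flannel image from the public registry

docker tag quay.io/coreos/flannel:v0.14.0 reg.westos.org/library/flannel:v0.14.0 # retag it for the Harbor registry

docker push reg.westos.org/library/flannel:v0.14.0 # push it to the Harbor registry

Run on server2: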

[root@server2 ~]# kubectl get pod -n kube-system # check pod status; the two coredns pods are Pending because the flannel network add-on is not installed yet
NAME                              READY   STATUS    RESTARTS   AGE
coredns-7777df944c-45g5v          0/1     Pending   0          9m33s
coredns-7777df944c-t2vf6          0/1     Pending   0          9m34s
etcd-server2                      1/1     Running   0          9m41s
kube-apiserver-server2            1/1     Running   0          9m53s
kube-controller-manager-server2   1/1     Running   0          9m53s
kube-proxy-77wr9                  1/1     Running   0          9m34s
kube-scheduler-server2            1/1     Running   0          9m41s

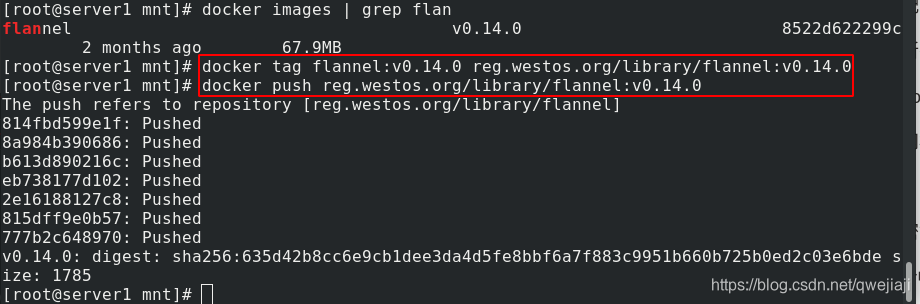

Run on server1:

docker tag flannel:v0.14.0 reg.westos.org/library/flannel:v0.14.0

docker push reg.westos.org/library/flannel:v0.14.0

12. Write the kube-flannel.yml manifest

Run on server2:

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: flannel:v0.14.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: flannel:v0.14.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

kubectl apply -f kube-flannel.yml # apply the manifest

kubectl get pod -n kube-system # check pod status again; if every pod is Running, the network is working

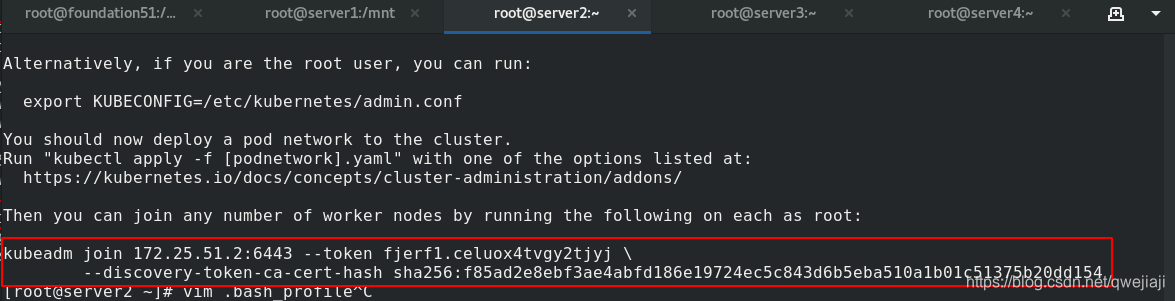

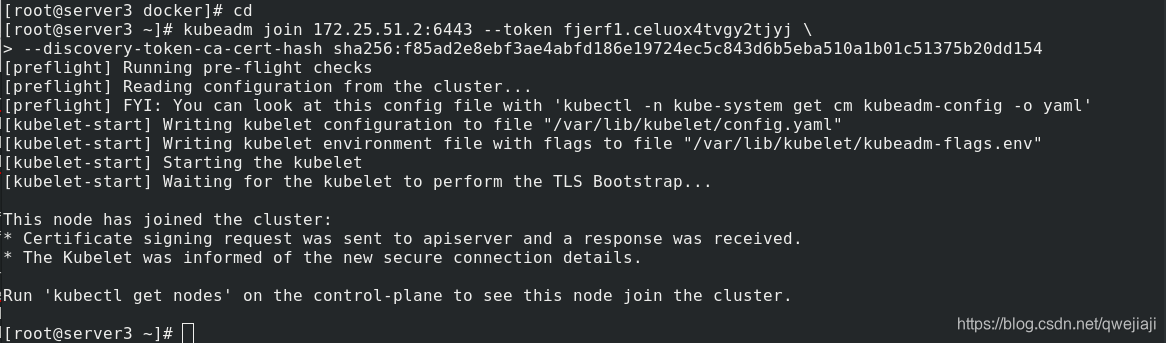

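13. Scaling out (joining the other nodes to the cluster)

On each worker node, join the cluster using the token returned when the cluster was initialized.

The join command is part of the output that kubeadm init printed on server2.

Run on server3 and server4 (to join server2's cluster):

kubeadm join 172.25.51.2:6443 --token fjerf1.celuox4tvgy2tjyj \
    --discovery-token-ca-cert-hash sha256:f85ad2e8ebf3ae4abfd186e19724ec5c843d6b5eba510a1b01c51375b20dd154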
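Bootstrap tokens expire (after 24 hours by default), so the command printed by kubeadm init may no longer work when a node is added later. Running kubeadm token create --print-join-command on the control-plane node prints a fresh one. The sketch below combines that with the passwordless SSH set up in step 6; the helper name join_workers is hypothetical, and it assumes it is run as root on server2.

```shell
# Sketch: generate a fresh join command and run it on each worker over SSH.
# join_workers is a hypothetical helper; run it on the control-plane node.
join_workers() {
    local join_cmd host
    # Ask kubeadm for a new token and the matching full join command.
    join_cmd="$(kubeadm token create --print-join-command)" || return 1
    for host in "$@"; do
        ssh "$host" "$join_cmd"
    done
}

# Example: join_workers server3 server4
```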

14. Check the nodes

Run on server2 to confirm that server3 and server4 have joined the cluster:

[root@server2 ~]# kubectl get nodes
NAME      STATUS   ROLES                  AGE    VERSION
server2   Ready    control-plane,master   38m    v1.21.3
server3   Ready    <none>                 113s   v1.21.3
server4   Ready    <none>                 49s    v1.21.3
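New nodes typically show NotReady for a short time while the flannel pod starts on them. To check the result in a script rather than by eye, the node table can be filtered. This is a minimal sketch that reads kubectl get nodes output from standard input; the helper name not_ready_nodes is hypothetical.

```shell
# Sketch: list nodes whose STATUS column is not exactly "Ready".
# not_ready_nodes is a hypothetical helper reading `kubectl get nodes` output on stdin.
not_ready_nodes() {
    # Skip the header row, then print the name of every node that is not Ready.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example: kubectl get nodes | not_ready_nodes
```

An empty result means every node reports Ready. Nodes with a compound status such as Ready,SchedulingDisabled are also listed, since they cannot accept new pods.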