Environment Preparation

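Prepare three Ubuntu Server 18.04 (x64) virtual machines as follows:

| IP address | CPU / memory | Disk | Purpose | Hostname | Role |
| --- | --- | --- | --- | --- | --- |
| 192.168.90.31 | 4 cores / 2 GB | 40 GB | Kubernetes | server1 | Master |
| 192.168.90.32 | 4 cores / 2 GB | 40 GB | Kubernetes | server2 | Slave1 |
| 192.168.90.33 | 4 cores / 2 GB | 40 GB | Kubernetes | server3 | Slave2 |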

During installation you can use the Alibaba Cloud (Aliyun) mirror:

http://mirrors.aliyun.com/ubuntu

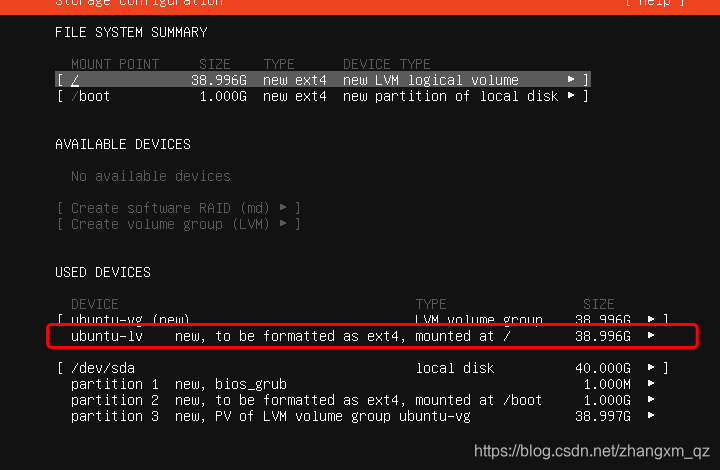

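While installing Ubuntu, remember to adjust the disk size. If you resized the disk after creating the virtual machine, the installer shows unallocated space; set the value here to the maximum.

Set a static IP

Find and edit the following file. After saving, run sudo netplan apply to apply the change.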

eric@server1:~$ cat /etc/netplan/00-installer-config.yaml
# This is the network config written by 'subiquity'
network:
  ethernets:
    ens33:
      dhcp4: false
      addresses: [192.168.90.31/24]
      gateway4: 192.168.90.1
      nameservers:
        addresses: [8.8.8.8]
  version: 2
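Each node needs the same file with a different address. The sketch below is one way to generate it, assuming the interface name ens33, the gateway 192.168.90.1 and the DNS server 8.8.8.8 from the example above. render_netplan is a hypothetical helper name, not part of netplan. It only prints YAML, so nothing changes on the system until you write the output to the netplan file and run sudo netplan apply.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a netplan config for one node.
# Usage: render_netplan <address/prefix> [interface] [gateway] [dns]
render_netplan() {
  local address="$1"
  local iface="${2:-ens33}"           # assumed interface name, as in the example
  local gateway="${3:-192.168.90.1}"  # assumed gateway, as in the example
  local dns="${4:-8.8.8.8}"           # assumed DNS server, as in the example
  cat <<EOF
network:
  ethernets:
    ${iface}:
      dhcp4: false
      addresses: [${address}]
      gateway4: ${gateway}
      nameservers:
        addresses: [${dns}]
  version: 2
EOF
}

# Example: print the config for server2
render_netplan 192.168.90.32/24
```

Review the printed YAML before copying it into /etc/netplan/, since the indentation is significant.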

System tuning

Disable swap: sudo swapoff -a

Prevent swap from being enabled at boot: comment out the swap entry in /etc/fstab

Disable the firewall: sudo ufw disable
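Commenting out the swap entry can be done by hand in an editor. As a rough sketch, it can also be scripted with sed. comment_out_swap is a hypothetical helper name, and the pattern assumes that swap entries contain the word swap as a whitespace-delimited field, which is the usual fstab layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# A backup of the original is kept next to it with a .bak suffix.
comment_out_swap() {
  local fstab="$1"
  # Prefix '#' to lines that do not already start with '#' and that
  # contain "swap" as a whitespace-delimited field.
  sed -E -i.bak 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Try it on a scratch copy first, for example:
#   cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test
```

Running it against /etc/fstab itself requires root. Keeping the .bak copy makes it easy to restore the file if the pattern matched something unexpected.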

Install Docker

# Update the package index
sudo apt-get update

# Install the required dependencies
sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# Add the GPG key
curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Add the package source
sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Update the package index again
sudo apt-get -y update

# Install Docker CE
sudo apt-get -y install docker-ce

Run docker version to verify that Docker was installed successfully:

eric@server1:/etc$ docker version

Client: Docker Engine - Community

Version: 20.10.8

API version: 1.41

Go version: go1.16.6

Git commit: 3967b7d

Configure a Docker registry mirror

eric@server1:/etc$ cat /etc/docker/daemon.json
{
  "registry-mirrors": [
    "https://registry.docker-cn.com"
  ]
}

Restart Docker, then run docker info to verify that the mirror is in effect:

eric@server1:/etc$ sudo systemctl restart docker

eric@server1:/etc$ sudo docker info

......

Insecure Registries:

127.0.0.0/8

Registry Mirrors:

https://registry.docker-cn.com/

Live Restore Enabled: false

......

Change the hostname

hostnamectl set-hostname server1

Install kubeadm

kubeadm is the cluster installation tool for Kubernetes; it can set up a Kubernetes cluster quickly.

Configure the package source

# Install system tools
apt-get update && apt-get install -y apt-transport-https

# Add the GPG key
curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

# Write the package source. Note: our release codename is bionic, but the Aliyun mirror does not currently provide it, so we reuse xenial from 16.04
cat << EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

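Install kubeadm, kubelet, and kubectl

kubeadm: initializes the Kubernetes cluster

kubectl: the Kubernetes command-line tool, used mainly to deploy and manage applications, inspect resources, and create, delete, and update components

kubelet: mainly responsible for starting Pods and containers

Pin the version at install time; a release that is too new may not have matching images available on the mirror.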

apt-get update

apt-get install -y kubelet=1.14.10-00 kubeadm=1.14.10-00 kubectl=1.14.10-00

The install log shows the installed versions:

Setting up kubelet (1.14.10-00) ...

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /lib/systemd/system/kubelet.service.

Setting up kubectl (1.14.10-00) ...

Setting up kubeadm (1.14.10-00) ...

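Configure kubeadm

Installing Kubernetes mostly means installing its images, and kubeadm already bundles the list of base images that Kubernetes needs. Because of network conditions in mainland China, however, these images cannot be pulled from the default registry while setting up the environment. Switching to the image service provided by Aliyun solves the problem.

Generate the configuration file and edit it as follows: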

root@server1:/etc# kubeadm config print init-defaults --kubeconfig ClusterConfiguration > kubeadm.yml

root@server1:/etc# vi kubeadm.yml

root@server1:~# cat kubeadm.yml

apiVersion: kubeadm.k8s.io/v1beta1
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.90.31  # master IP
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: server1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: ""
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers  # image repository
kind: ClusterConfiguration
kubernetesVersion: v1.14.10  # must match the installed kubeadm version
networking:
  dnsDomain: cluster.local
  podSubnet: "192.168.0.0/16"  # Pod IP range
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
# Enable IPVS mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

root@server1:~# kubeadm config images list --config kubeadm.yml    # list the required images

registry.aliyuncs.com/google_containers/kube-apiserver:v1.14.10

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.14.10

registry.aliyuncs.com/google_containers/kube-scheduler:v1.14.10

registry.aliyuncs.com/google_containers/kube-proxy:v1.14.10

registry.aliyuncs.com/google_containers/pause:3.1

registry.aliyuncs.com/google_containers/etcd:3.3.10

registry.aliyuncs.com/google_containers/coredns:1.3.1

root@server1:~# kubeadm config images pull --config kubeadm.yml    # pull the images

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.14.10

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.14.10

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.14.10

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.14.10

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.3.10

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.3.1

......

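Once this preparation is complete, clone the virtual machine twice to create the two slave nodes, then change each clone's IP address and hostname according to the plan above.

Set up the K8S cluster

Install the master node

Run the following command to initialize the master node. It specifies the configuration file to use for initialization. The --experimental-upload-certs flag uploads the control-plane certificates so that they can be distributed automatically when additional control-plane nodes join later. The trailing tee kubeadm-init.log saves the output to a log file.

(Run the command with the configuration file created above.)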

root@server1:~# kubeadm init --config=kubeadm.yml --experimental-upload-certs | tee kubeadm-init.log

......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.90.31:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:ea5361c0f2876b215d177cd7dab7679ac47bcc85d709edd6522ff72363be4a61

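Note: if the installed Kubernetes version does not match the version of the downloaded images, initialization fails with a "timed out waiting for the condition" error. If initialization fails partway through, or you want to change the configuration, run kubeadm reset to reset the node and then initialize again.

Configure kubectl as the output instructs (per the log, run the following as a non-root user):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# Needed when running as a non-root user

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Verify (output like the following shows that the configuration succeeded):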

eric@server1:~$ kubectl get node

NAME STATUS ROLES AGE VERSION

server1 NotReady master 2d3h v1.14.10

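What kubeadm init does

init: starts initialization with the specified version

preflight: runs pre-initialization checks and pulls the required Docker images

kubelet-start: generates the kubelet configuration file /var/lib/kubelet/config.yaml. The kubelet cannot start without this file, so before initialization the kubelet does not actually start successfully

certificates: generates the certificates Kubernetes uses and stores them in /etc/kubernetes/pki

kubeconfig: generates the kubeconfig files in /etc/kubernetes; the components use them to communicate with each other

control-plane: generates static Pod manifests under /etc/kubernetes/manifests and uses them to install the master components

etcd: installs the etcd service from /etc/kubernetes/manifests/etcd.yaml

wait-control-plane: waits for the master components deployed by control-plane to start

apiclient: checks the health of the master components

uploadconfig: uploads the configuration to a ConfigMap in the cluster

kubelet: configures the kubelet through a ConfigMap

patchnode: records the CRI socket information on the Node object as an annotation

mark-control-plane: labels the current node with the master role and marks it unschedulable, so by default Pods are not scheduled onto the master node

bootstrap-token: generates the token; note it down, because kubeadm join needs it later when nodes are added to the cluster

addons: installs the CoreDNS and kube-proxy add-ons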

Configure the slave nodes

Adding a slave node to the cluster is simple: install the three tools kubeadm, kubectl, and kubelet on the slave server, then join with kubeadm join. Our two slave nodes already have the required images and the three tools installed, so we can run kubeadm join directly. The command needs two parameters: token and discovery-token-ca-cert-hash.

token

- Look it up in the log written when the master was installed

- Print it with kubeadm token list

- If the token has expired, create a new one with kubeadm token create

discovery-token-ca-cert-hash

- Look up the sha256 value in the log written when the master was installed

- Compute it with openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

On the master node, generate a new token:

eric@server1:~$ kubeadm token create

9srgjm.6xkcpoiczll8id0i

On the master node, compute the discovery-token-ca-cert-hash:

eric@server1:~$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

ea5361c0f2876b215d177cd7dab7679ac47bcc85d709edd6522ff72363be4a61
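The two values are combined into a single kubeadm join command. As a small sketch, the helper below checks their shape before printing the command, which catches copy-and-paste slips early. build_join_command is a hypothetical name used only for illustration. The checks assume the bootstrap token format of six characters, a dot, and sixteen characters (lowercase letters and digits), and a 64-character hexadecimal SHA-256 hash.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate a token and CA hash, then print the join command.
# Usage: build_join_command <host:port> <token> <sha256-hex>
build_join_command() {
  local endpoint="$1" token="$2" hash="$3"
  # Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}
  if ! [[ "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "invalid token: $token" >&2
    return 1
  fi
  # The CA hash is a SHA-256 digest: 64 hexadecimal characters
  if ! [[ "$hash" =~ ^[0-9a-f]{64}$ ]]; then
    echo "invalid CA cert hash: $hash" >&2
    return 1
  fi
  echo "kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${hash}"
}

# Values taken from the output above
build_join_command 192.168.90.31:6443 9srgjm.6xkcpoiczll8id0i \
  ea5361c0f2876b215d177cd7dab7679ac47bcc85d709edd6522ff72363be4a61
```

The function only prints the command; run the printed line with sudo on each slave node.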

Using these values, run the following command on each of the two slave nodes to register it:

eric@server3:~$ sudo kubeadm join 192.168.90.31:6443 --token 9srgjm.6xkcpoiczll8id0i --discovery-token-ca-cert-hash sha256:ea5361c0f2876b215d177cd7dab7679ac47bcc85d709edd6522ff72363be4a61

[sudo] password for eric:

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.8. Latest validated version: 18.09

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[WARNING RequiredIPVSKernelModulesAvailable]:

The IPVS proxier may not be used because the following required kernel modules are not loaded: [ip_vs_wrr ip_vs_sh ip_vs ip_vs_rr]

or no builtin kernel IPVS support was found: map[ip_vs:{} ip_vs_rr:{} ip_vs_sh:{} ip_vs_wrr:{} nf_conntrack_ipv4:{}].

However, these modules may be loaded automatically by kube-proxy if they are available on your system.

To verify IPVS support:

Run "lsmod | grep 'ip_vs|nf_conntrack'" and verify each of the above modules are listed.

If they are not listed, you can use the following methods to load them:

1. For each missing module run 'modprobe $modulename' (e.g., 'modprobe ip_vs', 'modprobe ip_vs_rr', ...)

2. If 'modprobe $modulename' returns an error, you will need to install the missing module support for your kernel.

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
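The join log above warns that the IPVS kernel modules are not loaded, although the kube-proxy configuration enables IPVS mode. The sketch below lists which of the four modules named in the warning are absent from lsmod output. missing_ipvs_modules is a hypothetical helper name. It reads the lsmod text on standard input, and it does not detect IPVS support that is built into the kernel rather than loaded as a module.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod` output on stdin and print the IPVS
# modules named in the warning that are not loaded, one per line.
missing_ipvs_modules() {
  local loaded module
  # The first column of lsmod output is the module name; skip the header row
  loaded="$(awk 'NR > 1 { print $1 }')"
  for module in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
    if ! grep -qx "$module" <<<"$loaded"; then
      echo "$module"
    fi
  done
}

# On a node (assumes lsmod is available):
#   lsmod | missing_ipvs_modules
# Each name printed can then be loaded with: sudo modprobe <name>
```

An empty result means all four modules are loaded.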

Verify on the master node; both slave nodes have registered successfully:

eric@server1:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

server1 NotReady master 2d3h v1.14.10

server2 NotReady <none> 2m21s v1.14.10

server3 NotReady <none> 83s v1.14.10

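If a slave node was misconfigured when it joined the master, run kubeadm reset on that slave node and then join again with kubeadm join. To remove a node from the master, use kubectl delete node <node-name>.

Check the pod status. CoreDNS is not running yet; a network plugin has to be installed first.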

eric@server1:~$ kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7b7df549dd-htqc5 0/1 Pending 0 2d3h <none> <none> <none> <none>

coredns-7b7df549dd-smmfh 0/1 Pending 0 2d3h <none> <none> <none> <none>

etcd-server1 1/1 Running 0 2d3h 192.168.90.31 server1 <none> <none>

kube-apiserver-server1 1/1 Running 1 2d3h 192.168.90.31 server1 <none> <none>

kube-controller-manager-server1 1/1 Running 1 2d3h 192.168.90.31 server1 <none> <none>

kube-proxy-hsnwk 1/1 Running 0 3m14s 192.168.90.33 server3 <none> <none>

kube-proxy-r8cq8 1/1 Running 0 2d3h 192.168.90.31 server1 <none> <none>

kube-proxy-xvp28 1/1 Running 0 4m12s 192.168.90.32 server2 <none> <none>

kube-scheduler-server1 1/1 Running 1 2d3h 192.168.90.31 server1 <none> <none>

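Configure networking

Container networking is the mechanism by which a container connects to other containers, to the host, and to external networks. Container runtimes offer several network modes, and each gives a different experience. Docker, for example, can configure the following networks for a container by default:

- none: adds the container to a container-specific network stack with no external connectivity.

- host: adds the container to the host's network stack, with no isolation.

- bridge: the default network mode. Containers can reach one another by IP address.

- custom bridge: a user-defined bridge with more flexibility, isolation, and other conveniences.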

CNI

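CNI (Container Network Interface) is a standard, general-purpose interface. On one side are container platforms such as Docker, Kubernetes, and Mesos; on the other are container networking solutions such as flannel, calico, and weave. As long as the platform exposes a standard interface protocol, solution providers can implement against it and deliver networking to any platform that supports the protocol. CNI is that standard interface protocol.

CNI plugins in K8S

CNI defines the basic rules for dynamically configuring the appropriate network and resources when a container is created or destroyed. Plugins implement the configuration and management of IP addresses, and typically provide IP management, per-container IP assignment, and multi-host connectivity. The container runtime calls the network plugin to assign an IP address and configure networking when a container starts, and calls it again to clean up those resources when the container is deleted. The orchestrator decides which network a container should join and which plugin to call. The plugin then adds an interface to the container's network namespace and makes changes on the host, including attaching the other end of the veth pair to a bridge. After that, it assigns an IP address and sets up routes by calling a separate IPAM (IP address management) plugin.

In Kubernetes, the kubelet calls the plugins it finds at the appropriate times to configure networking automatically for the pods it starts. CNI plugins available for Kubernetes include Flannel, Calico, Canal, and Weave.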

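Why Calico

Calico provides a secure networking solution for containers and virtual machines, and has been proven in production at scale (in public clouds and across clusters of thousands of nodes). It integrates with Kubernetes, OpenShift, Docker, Mesos, DC/OS, and OpenStack.

Calico also provides dynamic enforcement of network security rules. Using Calico's simple policy language, you can achieve fine-grained control over communication between containers, virtual machine workloads, and bare-metal host endpoints.

Install Calico

Run the following command on the master node: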

kubectl apply -f https://docs.projectcalico.org/v3.7/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.extensions/calico-node created

serviceaccount/calico-node created

deployment.extensions/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

To confirm the installation, run the following command and wait until every pod's status is Running; this may take 3 to 5 minutes.

watch kubectl get pods --all-namespaces

Every 2.0s: kubectl get pods --all-namespaces server1: Mon Aug 9 13:56:02 2021

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-f6ff9cbbb-btnxp 0/1 Running 2 3m12s

kube-system calico-node-7jp5d 1/1 Running 0 3m12s

kube-system calico-node-hktzm 1/1 Running 0 3m12s

kube-system calico-node-z8bpj 1/1 Running 0 3m12s

kube-system coredns-7b7df549dd-htqc5 0/1 Running 1 2d4h

kube-system coredns-7b7df549dd-smmfh 0/1 Running 1 2d4h

kube-system etcd-server1 1/1 Running 0 2d4h

kube-system kube-apiserver-server1 1/1 Running 1 2d4h

kube-system kube-controller-manager-server1 1/1 Running 1 2d4h

kube-system kube-proxy-hsnwk 1/1 Running 0 27m

kube-system kube-proxy-r8cq8 1/1 Running 0 2d4h

kube-system kube-proxy-xvp28 1/1 Running 0 28m

kube-system kube-scheduler-server1 1/1 Running 1 2d4h
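In the listing above, several pods already show STATUS Running while READY is still 0/1, so the STATUS column alone does not tell you the cluster is ready. As a rough sketch, the helper below filters the same kubectl output down to the pods that still need attention. pods_not_ready is a hypothetical name, and the column positions assume the default --all-namespaces layout shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on
# stdin and print "namespace/name" for every pod that is not fully ready.
pods_not_ready() {
  awk 'NR > 1 {
    split($3, ready, "/")            # READY column, e.g. "0/1"
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2
  }'
}

# On the master (assumes kubectl is configured):
#   kubectl get pods --all-namespaces | pods_not_ready
```

When the command prints nothing, every pod is Running with all of its containers ready.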

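The basic environment is now fully deployed.

If, while watching pod status with watch kubectl get pods --all-namespaces, a pod is stuck in ImagePullBackOff and never reaches Running, try the following steps:

On the master, delete the node: kubectl delete node <node-name>

On the slave, reset the configuration: kubeadm reset

On the slave, reboot the machine: reboot

On the slave, rejoin the cluster: kubeadm join

Run the first container

Check the cluster status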

eric@server1:~$ kubectl get cs    # check component status

NAME STATUS MESSAGE ERROR

scheduler Healthy ok    # scheduler: assigns pods to nodes

controller-manager Healthy ok    # controller manager: automated repair, for example recovery after a node goes down

etcd-0 Healthy {"health":"true"}    # etcd: service registration and discovery

eric@server1:~$ kubectl cluster-info

Kubernetes master is running at https://192.168.90.31:6443    # master node status

KubeDNS is running at https://192.168.90.31:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy    # DNS service

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

eric@server1:~$ kubectl get nodes    # node status

NAME STATUS ROLES AGE VERSION

server1 Ready master 2d4h v1.14.10

server2 Ready <none> 34m v1.14.10

server3 Ready <none> 33m v1.14.10

Run an Nginx instance

# Use kubectl to create two Nginx pods listening on port 80

eric@server1:~$ kubectl run nginx --image=nginx --replicas=2 --port=80

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/nginx created

eric@server1:~$ kubectl get pods    # check pod status; going from initialization to Running takes some time

NAME READY STATUS RESTARTS AGE

nginx-755464dd6c-kdlp2 1/1 Running 0 94s

nginx-755464dd6c-r6gf4 1/1 Running 0 94s

eric@server1:~$ kubectl get deployment    # list the deployments

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 2/2 2 2 111s

eric@server1:~$ kubectl expose deployment nginx --port=80 --type=LoadBalancer    # expose the service so that users can reach it

service/nginx exposed

eric@server1:~$ kubectl get services    # list the published services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d4h

nginx LoadBalancer 10.96.91.244 <pending> 80:31480/TCP 11s    # port 80 is mapped to node port 31480

Access the service

Access Nginx through port 31480 on the master's address:

http://192.168.90.31:31480/
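The node port is assigned when the service is created, so it will usually differ from 31480 on another cluster. As a minimal sketch, the helper below pulls the node port out of a PORT(S) value copied from the kubectl get services output. node_port_of is a hypothetical name, and the master address 192.168.90.31 is taken from the environment table at the top.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the node port from a PORT(S) value
# such as "80:31480/TCP" as printed by `kubectl get services`.
node_port_of() {
  local ports="$1"
  local after_colon="${ports#*:}"   # strip up to the first colon: "31480/TCP"
  echo "${after_colon%%/*}"         # strip the protocol suffix: "31480"
}

# Build the URL for the example service on the master node
echo "http://192.168.90.31:$(node_port_of '80:31480/TCP')/"
```

EXTERNAL-IP stays at pending here because no cloud load balancer is available in this setup, which is why the service is reached through the node port instead.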

Delete the service

eric@server1:~$ kubectl delete deployment nginx

deployment.extensions "nginx" deleted

eric@server1:~$ kubectl delete service nginx

service "nginx" deleted

eric@server1:~$