
1. Kubernetes Binary Deployment: etcd Cluster

Environment preparation:

k8s cluster master01: 192.168.17.33 kube-apiserver kube-controller-manager kube-scheduler etcd

k8s cluster master02: 192.168.17.133

k8s cluster node01: 192.168.17.66 kubelet kube-proxy docker flannel

k8s cluster node02: 192.168.17.99

etcd cluster node 1: 192.168.17.33 etcd

etcd cluster node 2: 192.168.17.66

etcd cluster node 3: 192.168.17.99

Load balancer nginx+keepalived 01 (master): 192.168.17.166

Load balancer nginx+keepalived 02 (backup): 192.168.17.199

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

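① The etcd component: concepts

etcd is an open-source project started by the CoreOS team in June 2013. Its goal is to provide a highly available distributed key-value store. etcd uses the Raft protocol as its consensus algorithm and is written in Go.

As a service-discovery system, etcd has the following characteristics:

- Simple: easy to install and configure, and it exposes an HTTP API, so it is also easy to use

- Secure: supports SSL certificate verification

- Fast: a single instance supports 2k+ read operations per second

- Reliable: uses the Raft algorithm to keep data in a distributed system available and consistent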

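By default, etcd serves its HTTP API on port 2379 and communicates with peers on port 2380 (both ports are officially reserved for etcd by IANA, the Internet Assigned Numbers Authority). In other words, port 2379 carries client traffic and port 2380 carries internal server-to-server traffic. In production, etcd is generally deployed as a cluster. Because leader election requires a majority of members, a cluster should have an odd number of members, at least three.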
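The odd-number recommendation follows from Raft's majority rule. The sketch below is only an illustration of that arithmetic; the function names `quorum` and `tolerated` are made up for this example and are not part of etcd.

```shell
#!/usr/bin/env bash
# Raft needs a majority (quorum) of members to elect a leader and commit writes.
# quorum(n)    = floor(n / 2) + 1
# tolerated(n) = n - quorum(n)  (members that may fail while a majority remains)

quorum() {
  echo $(( $1 / 2 + 1 ))
}

tolerated() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated "$n")"
done
```

A 4-member cluster tolerates one failure, the same as a 3-member cluster, so the extra even member adds cost without adding fault tolerance.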

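Preparing the certificate-signing environment

CFSSL is an open-source PKI/TLS toolkit from CloudFlare. It includes a command-line tool and an HTTP API service for signing, verifying, and bundling TLS certificates, and it is written in Go.

CFSSL generates certificates from configuration files, so before self-signing you need to produce JSON configuration files in the format it recognizes. CFSSL provides convenient commands for generating these files.

CFSSL is used here to provide TLS certificates for etcd. It can sign three types of certificates: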

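- client certificate: presented by the client when it connects to a server, so that the server can verify the client's identity, for example when kube-apiserver accesses etcd;

- server certificate: presented by the server when a client connects to it, so that the client can verify the server's identity, for example when etcd serves client requests;

- peer certificate: used when members connect to one another, for example for verification and communication between etcd nodes.

Here, the same set of certificates is used for all three purposes.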

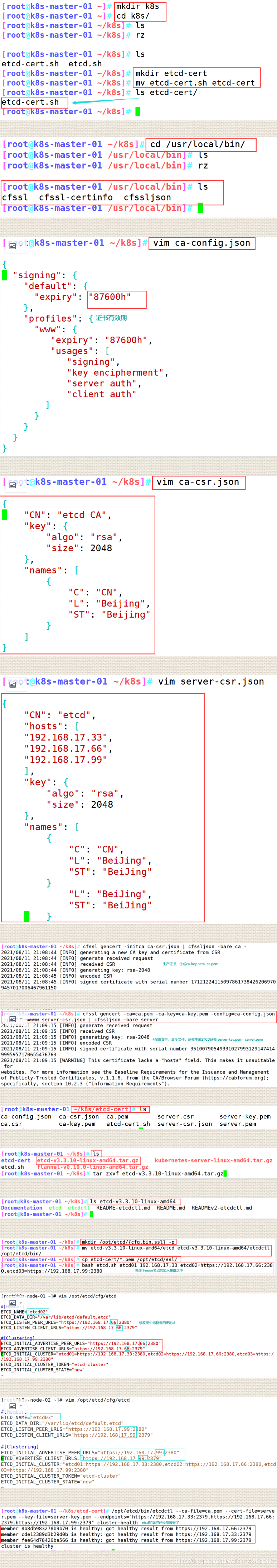

② Deploying etcd on the master node

# On the master

[root@localhost ~]# mkdir k8s

[root@localhost ~]# cd k8s/

[root@localhost k8s]# ls # scripts copied over from the host machine

etcd-cert.sh etcd.sh

[root@localhost k8s]# mkdir etcd-cert

[root@localhost k8s]# mv etcd-cert.sh etcd-cert

# Create a script that downloads the certificate tools

[root@localhost k8s]# vim cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

# Run the script to download the official cfssl binaries

[root@localhost k8s]# bash cfssl.sh

[root@localhost k8s]# ls /usr/local/bin/

cfssl cfssl-certinfo cfssljson

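# Start creating the certificates

# cfssl generates certificates; cfssljson takes cfssl's JSON output and writes the certificate files;

# cfssl-certinfo displays certificate information

# Define the CA signing configuration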

[root@localhost etcd-cert]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

# Define the CA certificate signing request

[root@localhost etcd-cert]# cat > ca-csr.json <<EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

# Generate the CA certificate; this produces ca-key.pem and ca.pem

[root@localhost etcd-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2021/08/11 21:08:44 [INFO] generating a new CA key and certificate from CSR

2021/08/11 21:08:44 [INFO] generate received request

2021/08/11 21:08:44 [INFO] received CSR

2021/08/11 21:08:44 [INFO] generating key: rsa-2048

2021/08/11 21:08:45 [INFO] encoded CSR

2021/08/11 21:08:45 [INFO] signed certificate with serial number 171212241150978617384262069709457017006467961150

# Certificate request for etcd; the hosts field lists the three etcd nodes that verify each other

[root@localhost etcd-cert]# cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"192.168.17.33",

"192.168.17.66",

"192.168.17.99"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

# Generate the etcd certificate; this produces server-key.pem and server.pem

[root@localhost etcd-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

2021/08/11 21:09:15 [INFO] generate received request

2021/08/11 21:09:15 [INFO] received CSR

2021/08/11 21:09:15 [INFO] generating key: rsa-2048

2021/08/11 21:09:15 [INFO] encoded CSR

2021/08/11 21:09:15 [INFO] signed certificate with serial number 351007905493310279931291474149995957170655476763

2021/08/11 21:09:15 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@localhost etcd-cert]# ls

ca-config.json etcd-cert.sh server-csr.json

ca.csr etcd-v3.3.10-linux-amd64.tar.gz server-key.pem

ca-csr.json flannel-v0.10.0-linux-amd64.tar.gz server.pem

ca-key.pem kubernetes-server-linux-amd64.tar.gz

ca.pem server.csr

[root@localhost etcd-cert]# mv *.tar.gz ../

[root@localhost k8s]# ls

cfssl.sh etcd.sh flannel-v0.10.0-linux-amd64.tar.gz

etcd-cert etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

[root@localhost k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

[root@localhost k8s]# ls etcd-v3.3.10-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

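# Create directories for configuration files, binaries, and certificates

[root@localhost k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

# Move the etcd binaries into place

[root@localhost k8s]# mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/

# Copy the certificates

[root@localhost k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

# The script appears to hang here while it waits for the other members to join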

[root@localhost k8s]# bash etcd.sh etcd01 192.168.17.33 etcd02=https://192.168.17.66:2380,etcd03=https://192.168.17.99:2380

# Open another session; you will see that the etcd process has started

[root@localhost ~]# ps -ef | grep etcd

# Copy the etcd directory (including the certificates) to the other nodes

[root@localhost k8s]# scp -r /opt/etcd/ root@192.168.17.66:/opt

[root@localhost k8s]# scp -r /opt/etcd/ root@192.168.17.99:/opt

# Copy the systemd unit file to the other nodes

[root@localhost k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.17.66:/usr/lib/systemd/system/

[root@localhost k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.17.99:/usr/lib/systemd/system/

③ Deploying etcd on the node machines

# Edit the configuration on node01

[root@localhost ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.17.66:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.17.66:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.17.66:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.17.66:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.17.33:2380,etcd02=https://192.168.17.66:2380,etcd03=https://192.168.17.99:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

# Start etcd

[root@localhost ssl]# systemctl start etcd

[root@localhost ssl]# systemctl status etcd

# Edit the configuration on node02

[root@localhost ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.17.99:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.17.99:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.17.99:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.17.99:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.17.33:2380,etcd02=https://192.168.17.66:2380,etcd03=https://192.168.17.99:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

# Start etcd

[root@localhost ssl]# systemctl start etcd

[root@localhost ssl]# systemctl status etcd
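The two node configurations above differ only in the member name and the node's own IP address. As a minimal sketch of that pattern, the hypothetical helper below (`render_etcd_cfg` is a name invented for this example; it is not the contents of etcd.sh) prints the same file from three parameters.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of etcd.sh): print an etcd environment file
# for one member. Arguments: member name, member IP, initial-cluster string.
render_etcd_cfg() {
  local name="$1" ip="$2" cluster="$3"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

CLUSTER="etcd01=https://192.168.17.33:2380,etcd02=https://192.168.17.66:2380,etcd03=https://192.168.17.99:2380"

# Print the file for node01; redirect it to /opt/etcd/cfg/etcd on that node to apply it.
render_etcd_cfg etcd02 192.168.17.66 "$CLUSTER"
```

Rendering from parameters keeps ETCD_INITIAL_CLUSTER identical on every member, which is the value most easily mistyped when editing by hand.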

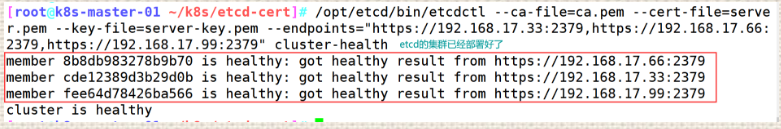

④ Checking the etcd cluster

[root@localhost etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.17.33:2379,https://192.168.17.66:2379,https://192.168.17.99:2379" cluster-health

member 8b8db983278b9b70 is healthy: got healthy result from https://192.168.17.66:2379

member cde12389d3b29d0b is healthy: got healthy result from https://192.168.17.33:2379

member fee64d78426ba566 is healthy: got healthy result from https://192.168.17.99:2379

cluster is healthy
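For scripted checks, the member lines in this output can be counted. The sketch below works on a copy of the text shown above rather than on a live cluster; `count_healthy` is a hypothetical helper name, and the pattern assumes the output format stays as printed here.

```shell
#!/usr/bin/env bash
# Count "member ... is healthy" lines in cluster-health output read from stdin.
count_healthy() {
  grep -c '^member .* is healthy'
}

# Sample text copied from the output above; in practice, pipe etcdctl's output in.
sample='member 8b8db983278b9b70 is healthy: got healthy result from https://192.168.17.66:2379
member cde12389d3b29d0b is healthy: got healthy result from https://192.168.17.33:2379
member fee64d78426ba566 is healthy: got healthy result from https://192.168.17.99:2379
cluster is healthy'

healthy=$(printf '%s\n' "$sample" | count_healthy)
echo "healthy members: $healthy"
```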

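⑤ Overall diagram of the procedure

2. Kubernetes Binary Deployment: Docker Cluster

For deployment details, see the article: Docker cluster installation

3. Kubernetes Binary Deployment: flannel Cluster

① The flannel component: concepts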

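Pod network communication in K8s:

- Communication between containers within a Pod:

- Containers in the same Pod (which never span hosts) share one network namespace. It is as if they were on the same machine, so they can reach each other's ports through localhost.

- Communication between Pods on the same Node:

- Each Pod has a real, cluster-wide IP address, and Pods on the same Node can talk to each other directly using those Pod IPs. Pod1 and Pod2 are both attached through veth pairs to the same docker0 bridge and sit in the same subnet, so they can communicate directly.

- Communication between Pods on different Nodes:

- Pod addresses are in the same subnet as docker0, while the docker0 subnet and the host NIC are in two different subnets, and traffic between Nodes can only go through the hosts' physical NICs. To let Pods on different Nodes communicate, traffic must therefore be addressed and routed via the hosts' physical IP addresses. Two conditions must hold: Pod IPs must not conflict, and each Pod IP must be associated with the IP of the Node it runs on, so that Pods on different Nodes can reach each other directly over their internal IP addresses.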

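Overlay Network:

An overlay network is a virtual networking technique layered on top of a layer-2 or layer-3 underlay network. Hosts in the overlay are connected through virtual link tunnels (similar to a VPN).

VXLAN:

The original packet is encapsulated in UDP, with the underlay network's IP/MAC addresses used as the outer headers, and then transmitted over Ethernet. At the destination, the tunnel endpoint decapsulates it and delivers the data to the target address.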
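The encapsulation has a fixed per-packet cost, which is why the overlay interfaces in this walkthrough report an MTU of 1450. The arithmetic can be sketched as follows, assuming an IPv4 underlay with a 1500-byte MTU and no VLAN tag (both are assumptions, not values stated in the text).

```shell
#!/usr/bin/env bash
# VXLAN carries the whole inner Ethernet frame inside an outer IP/UDP packet.
# Header sizes in bytes (IPv4 underlay, no VLAN tag, no IP options):
OUTER_IP=20
OUTER_UDP=8
VXLAN_HDR=8
INNER_ETH=14

# Assumed MTU of the physical (underlay) network.
UNDERLAY_MTU=1500

overhead=$(( OUTER_IP + OUTER_UDP + VXLAN_HDR + INNER_ETH ))
overlay_mtu=$(( UNDERLAY_MTU - overhead ))
echo "overhead=${overhead} overlay_mtu=${overlay_mtu}"
```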

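Flannel:

Flannel's job is to give Docker containers created on different node hosts in the cluster virtual IP addresses that are unique across the whole cluster.

Flannel is a kind of overlay network: it also encapsulates the original packets inside another network packet for routing, forwarding, and communication. It currently supports forwarding backends such as UDP, VXLAN, and AWS VPC.

How Flannel works:

After data leaves the source container in a Pod on node01, the host's docker0 virtual interface forwards it to the flannel0 virtual interface, where the flanneld service listens on the other end. (flannel0 is the device name used by the UDP backend; with the VXLAN backend configured below, the device appears as flannel.1.) Flannel maintains a routing table between nodes through etcd. The flanneld service on the source host node01 encapsulates the original data in UDP and, according to its routing table, delivers it through the physical NIC to the flanneld service on the destination node node02. On arrival, the data is unpacked and goes straight into the destination node's flannel0 virtual interface, is then forwarded to the destination host's docker0 virtual interface, and finally docker0 forwards it to the target container just as with local container-to-container traffic.

What etcd provides for Flannel: it stores and manages the IP address ranges that Flannel can allocate; Flannel watches the actual address of each Pod in etcd and builds and maintains a Pod-to-node routing table in memory.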
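The routing table described above is essentially a mapping from each per-node Pod subnet to the physical IP of the node that owns it. The sketch below illustrates only that lookup; the subnet-to-node pairs are hypothetical (the text does not say which node holds which subnet), and `next_hop` is a name invented for this example.

```shell
#!/usr/bin/env bash
# Illustrative lookup: which node's physical IP should receive a packet
# destined for a given Pod IP? The pairs below are assumed for illustration.
next_hop() {
  # Strip the last octet to get the /24 prefix of the Pod IP.
  case "${1%.*}" in
    172.17.42) echo "192.168.17.66" ;;   # hypothetical: subnet held by node01
    172.17.84) echo "192.168.17.99" ;;   # hypothetical: subnet held by node02
    *) echo "unknown"; return 1 ;;
  esac
}

next_hop 172.17.84.2
```

In the real system flanneld keeps this mapping up to date by watching etcd, so no static table like this is written by hand.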

② Deploying flannel on the master node

# Write the allocated network range into etcd for flannel to use

[root@localhost etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.17.33:2379,https://192.168.17.66:2379,https://192.168.17.99:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

# View the value that was written

[root@localhost etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.17.33:2379,https://192.168.17.66:2379,https://192.168.17.99:2379" get /coreos.com/network/config

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
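The size of this network bounds how many nodes can receive a subnet. A rough sketch of the arithmetic, assuming each node is given a /24 (an assumption that matches the --bip value shown later in this walkthrough):

```shell
#!/usr/bin/env bash
# Number of per-node subnets of prefix length $2 that fit in a network of prefix length $1.
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

# Usable host addresses in one subnet (network and broadcast addresses excluded).
hosts_per_subnet() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

NETWORK_PREFIX=16   # from "Network": "172.17.0.0/16"
SUBNET_PREFIX=24    # assumption: one /24 per node

echo "node subnets: $(subnet_count "$NETWORK_PREFIX" "$SUBNET_PREFIX")"
echo "addresses per node: $(hosts_per_subnet "$SUBNET_PREFIX")"
```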

# Copy the archive to all node machines (flannel only needs to be deployed on the nodes)

[root@localhost k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.17.66:/root

[root@localhost k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.17.99:/root

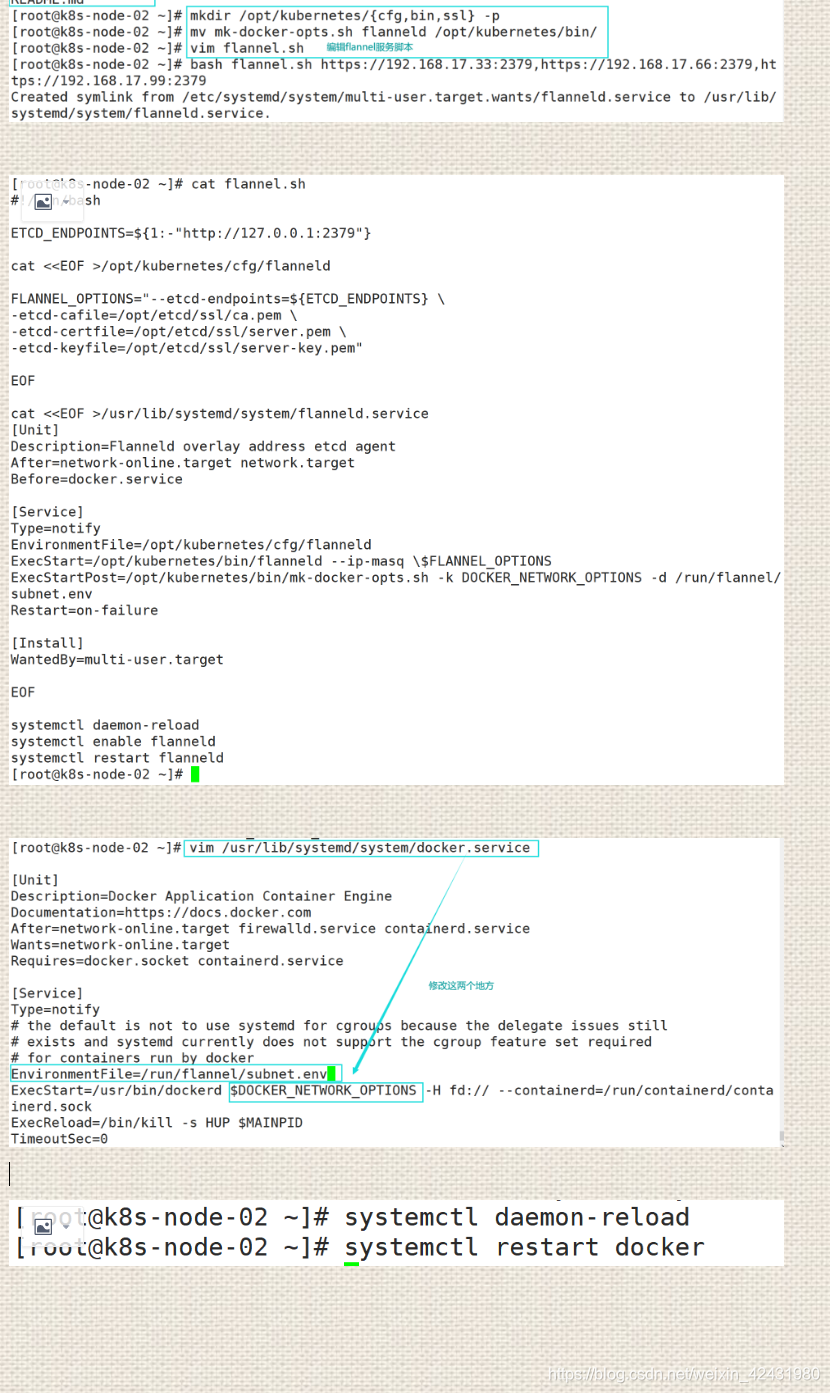

③ Deploying flannel on the node machines

# On all nodes: extract the archive

[root@localhost ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

flanneld

mk-docker-opts.sh

README.md

# Create the k8s working directories

[root@localhost ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@localhost ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

[root@localhost ~]# vim flannel.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

# Enable the flannel network

[root@localhost ~]# bash flannel.sh https://192.168.17.33:2379,https://192.168.17.66:2379,https://192.168.17.99:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

# Configure docker to use flannel

[root@localhost ~]# vim /usr/lib/systemd/system/docker.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

[root@localhost ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.42.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

# Note: --bip sets the docker0 bridge IP and subnet that docker uses at startup

DOCKER_NETWORK_OPTIONS=" --bip=172.17.42.1/24 --ip-masq=false --mtu=1450"
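The combined variable is what the modified docker.service passes to dockerd. The sketch below only illustrates how the three individual values relate to that single string; it is not the mk-docker-opts.sh source.

```shell
#!/usr/bin/env bash
# Illustration only (not the mk-docker-opts.sh source): join the three
# per-option values into the single string that docker.service consumes.
DOCKER_OPT_BIP="--bip=172.17.42.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" ${DOCKER_OPT_BIP} ${DOCKER_OPT_IPMASQ} ${DOCKER_OPT_MTU}"
echo "DOCKER_NETWORK_OPTIONS=\"${DOCKER_NETWORK_OPTIONS}\""
```

Because ExecStart references only $DOCKER_NETWORK_OPTIONS, the three separate variables are informational as far as dockerd is concerned.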

# Restart the docker service

[root@localhost ~]# systemctl daemon-reload

[root@localhost ~]# systemctl restart docker

④ Checking the flannel network

[root@localhost ~]# ifconfig

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.84.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::fc7c:e1ff:fe1d:224 prefixlen 64 scopeid 0x20<link>

ether fe:7c:e1:1d:02:24 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 26 overruns 0 carrier 0 collisions 0

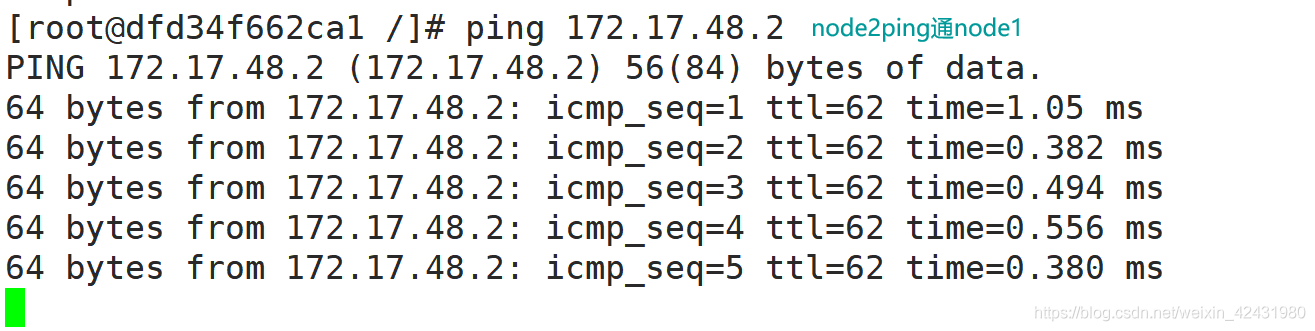

# Test: ping the other node's docker0 interface to confirm that flannel is routing traffic

[root@localhost ~]# docker run -it centos:7 /bin/bash

[root@5f9a65565b53 /]# yum install net-tools -y

[root@5f9a65565b53 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.84.2 netmask 255.255.255.0 broadcast 172.17.84.255

ether 02:42:ac:11:54:02 txqueuelen 0 (Ethernet)

RX packets 18192 bytes 13930229 (13.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6179 bytes 337037 (329.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# Test again: ping between the centos:7 containers on the two nodes

⑤ Overall diagram of the procedure