This post records how I installed Docker 19.03 and Kubernetes 1.18.20 offline on CentOS 7, for anyone who needs a reference.

- Prepare the Linux environment:

- Stop the firewall:

systemctl stop firewalld

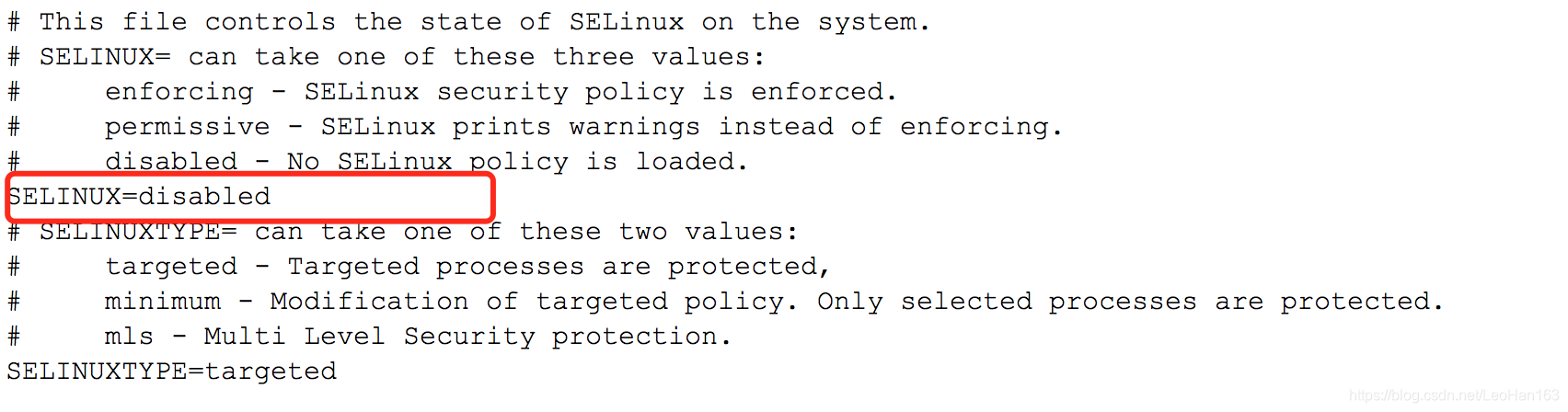

- Disable SELinux (set SELINUX=disabled in the file below):

vim /etc/selinux/config
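The same edit can be scripted instead of done by hand in vim. This is a minimal sketch that runs against a scratch copy of the file, so the sample contents and the CONFIG variable are assumptions for illustration; on a real host CONFIG would point at /etc/selinux/config and the command would run as root.

```shell
# Sketch: operate on a scratch copy; as root, point CONFIG at
# /etc/selinux/config to apply the change for real.
CONFIG="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$CONFIG"

# Rewrite only the SELINUX= line; SELINUXTYPE= does not match the pattern.
sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$CONFIG"
cat "$CONFIG"

# On a real host the file change takes effect after a reboot;
# `setenforce 0` switches the running system to permissive mode until then.
```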

Installing Docker

2.1 Download the Docker offline package:

wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

PS: if you need a different version, pick the one you need from https://download.docker.com/linux/static/stable/x86_64/

2.2 After downloading, extract the archive and copy the binaries to /usr/bin:

tar -zxvf docker-19.03.9.tgz

cp docker/* /usr/bin/

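For reference, see the official Docker guide for installing from binaries: https://docs.docker.com/engine/install/binaries/

2.3 Create the systemd unit files for Docker:

- The docker.service file:

vim docker.service

### Write the following content: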

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

- The docker.socket file:

vim docker.socket

### Write the following content:

[Unit]

Description=Docker Socket for the API

PartOf=docker.service

[Socket]

ListenStream=/var/run/docker.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

Then copy both files into place and start Docker:

cp docker.socket /etc/systemd/system

cp docker.service /etc/systemd/system

systemctl daemon-reload

systemctl start docker

systemctl enable docker

2.4 Add custom settings in daemon.json:

Create or edit /etc/docker/daemon.json.

Sample daemon.json configuration:

vim /etc/docker/daemon.json

### Write the following content:

{

"registry-mirrors": [

"https://registry.docker-cn.com"

],

"insecure-registries":[""],

"live-restore": true,

"exec-opts": ["native.cgroupdriver=systemd"]

}

Then reload the configuration:

systemctl daemon-reload

systemctl restart docker

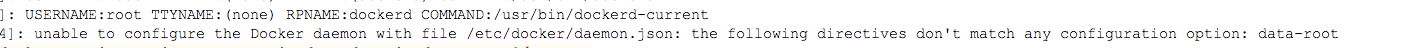

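With this configuration in place, restarting Docker may fail with an error like the following:

systemctl start docker

Job for docker.service failed because start of the service was attempted too often. See "systemctl status docker.service" and "journalctl -xe" for details.

To force a start use "systemctl reset-failed docker.service" followed by "systemctl start docker.service" again.

This usually means the custom settings here conflict with options that are already set elsewhere. Check the logs:

journalctl -amu docker

The log shows the cause:

In my case, data-root was configured here and also in docker.service, and the two conflicted. I adjusted both files as follows. Note that the unit file uses --graph, the older name of --data-root, which is deprecated in newer Docker releases.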

vim /etc/systemd/system/docker.service

### Overwrite the file with the following content:

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd --graph /data1/docker

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

vim /etc/docker/daemon.json

### Overwrite the file with the following content:

{

"registry-mirrors": [

"https://kfwkfulq.mirror.aliyuncs.com",

"https://2lqq34jg.mirror.aliyuncs.com",

"https://pee6w651.mirror.aliyuncs.com",

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com"

]

}

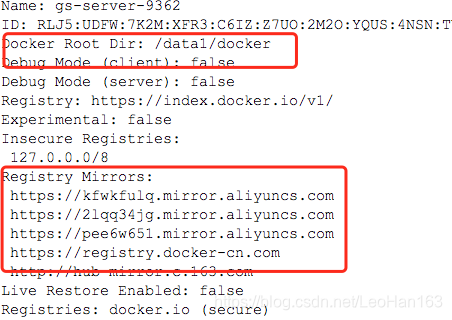

Then run:

systemctl daemon-reload

systemctl restart docker

Check with docker info whether the configuration has taken effect:

The output shows that the new configuration is in effect.
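The kind of conflict described earlier, where the same option is given both as a dockerd flag and as a daemon.json key, can be looked for before restarting. The following is a rough sketch under stated assumptions: it uses scratch files with made-up contents in place of the real unit file and daemon.json, and the list of flag/key pairs is a small illustrative subset, not a complete mapping.

```shell
# Scratch stand-ins for /etc/systemd/system/docker.service and
# /etc/docker/daemon.json; swap in the real paths on the host.
UNIT="$(mktemp)"
JSON="$(mktemp)"
printf 'ExecStart=/usr/bin/dockerd --data-root /data1/docker\n' > "$UNIT"
printf '{\n  "data-root": "/data1/docker",\n  "live-restore": true\n}\n' > "$JSON"

conflicts=""
# Each pair is "<command-line flag>:<daemon.json key>" for the same setting.
for pair in data-root:data-root registry-mirror:registry-mirrors \
            insecure-registry:insecure-registries exec-opt:exec-opts; do
  flag="${pair%%:*}"
  key="${pair##*:}"
  # A setting counts as conflicting when it appears in both files.
  if grep -q -- "--$flag" "$UNIT" && grep -q "\"$key\"" "$JSON"; then
    conflicts="$conflicts $key"
  fi
done
echo "set in both places:${conflicts:- none}"
```

If the check reports a key, keeping it in only one of the two files is the simplest way out; which file to keep it in is a matter of preference.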

To change the default image registry address, you can edit the same two files. For example:

- Edit /etc/systemd/system/docker.service:

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd --graph /data1/docker --registry-mirror=https://repository.gridsum.com:8443

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

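- Then edit /etc/docker/daemon.json accordingly.

Docker is now installed. Next comes Kubernetes.

Installing etcd

Install etcd first.

GitHub address: https://github.com/kubernetes/kubernetes/releases. Look in the changelog for the version you need; I used 3.4.16. The etcd release archives themselves are published at https://github.com/etcd-io/etcd/releases.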

- After downloading, run:

tar -zxvf etcd-v3.4.16-linux-amd64.tar.gz

cp etcd-v3.4.16-linux-amd64/etcd /usr/bin/

cp etcd-v3.4.16-linux-amd64/etcdctl /usr/bin

Then create the etcd working directory:

mkdir -p /data1/etcd

- Create the etcd.conf configuration file:

vim /etc/etcd/etcd.conf

### Write the following content; create the directory and file if they do not exist

ETCD_NAME=ETCD Server

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_ADVERTISE_CLIENT_URLS="http://xxxxx:2379"
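The xxxxx placeholder in the advertise URL stands for the host's own address. As a minimal sketch, the file can be generated from a variable so the address is filled in once. NODE_IP (a documentation-range address) and the scratch output directory are assumptions for illustration, and the node name is written without a space here only to keep the value safe if the file is ever sourced by a shell.

```shell
# NODE_IP is a placeholder; use the host's real IP address.
NODE_IP="192.0.2.10"
# Written to a scratch directory here; the real target is /etc/etcd/etcd.conf.
CONF_DIR="$(mktemp -d)"

cat > "$CONF_DIR/etcd.conf" <<EOF
ETCD_NAME="etcd-server"
ETCD_DATA_DIR="/var/lib/etcd/"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_ADVERTISE_CLIENT_URLS="http://${NODE_IP}:2379"
EOF

cat "$CONF_DIR/etcd.conf"
```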

Write the etcd.service file:

vim etcd.service

### Write the following content:

[Unit]

Description=Etcd Server

[Service]

Type=notify

TimeoutStartSec=0

Restart=always

WorkingDirectory=/data1/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

[Install]

WantedBy=multi-user.target

Copy it to /usr/lib/systemd/system/:

cp etcd.service /usr/lib/systemd/system

- Enable start on boot:

systemctl daemon-reload

systemctl enable etcd.service

systemctl start etcd.service

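Installing the Kubernetes master

First generate an RSA key file (create /etc/kubernetes beforehand if it does not exist); it is used below as the service account key:

openssl genrsa -out /etc/kubernetes/ssl.key 2048

The master needs the following services: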

- kube-apiserver

- kube-controller-manager

- kube-scheduler

- kubectl

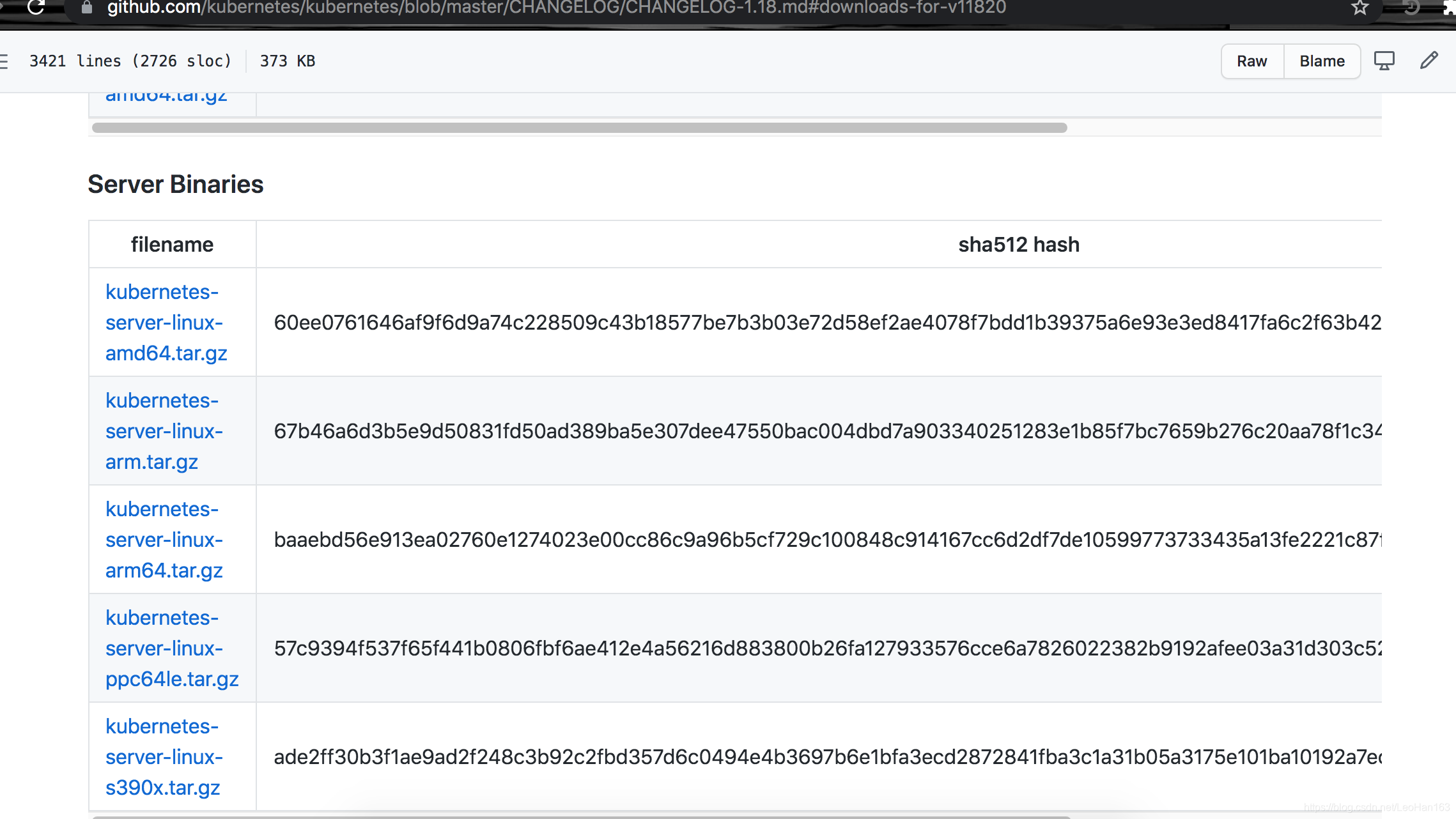

github地址为: https://github.com/kubernetes/kubernetes

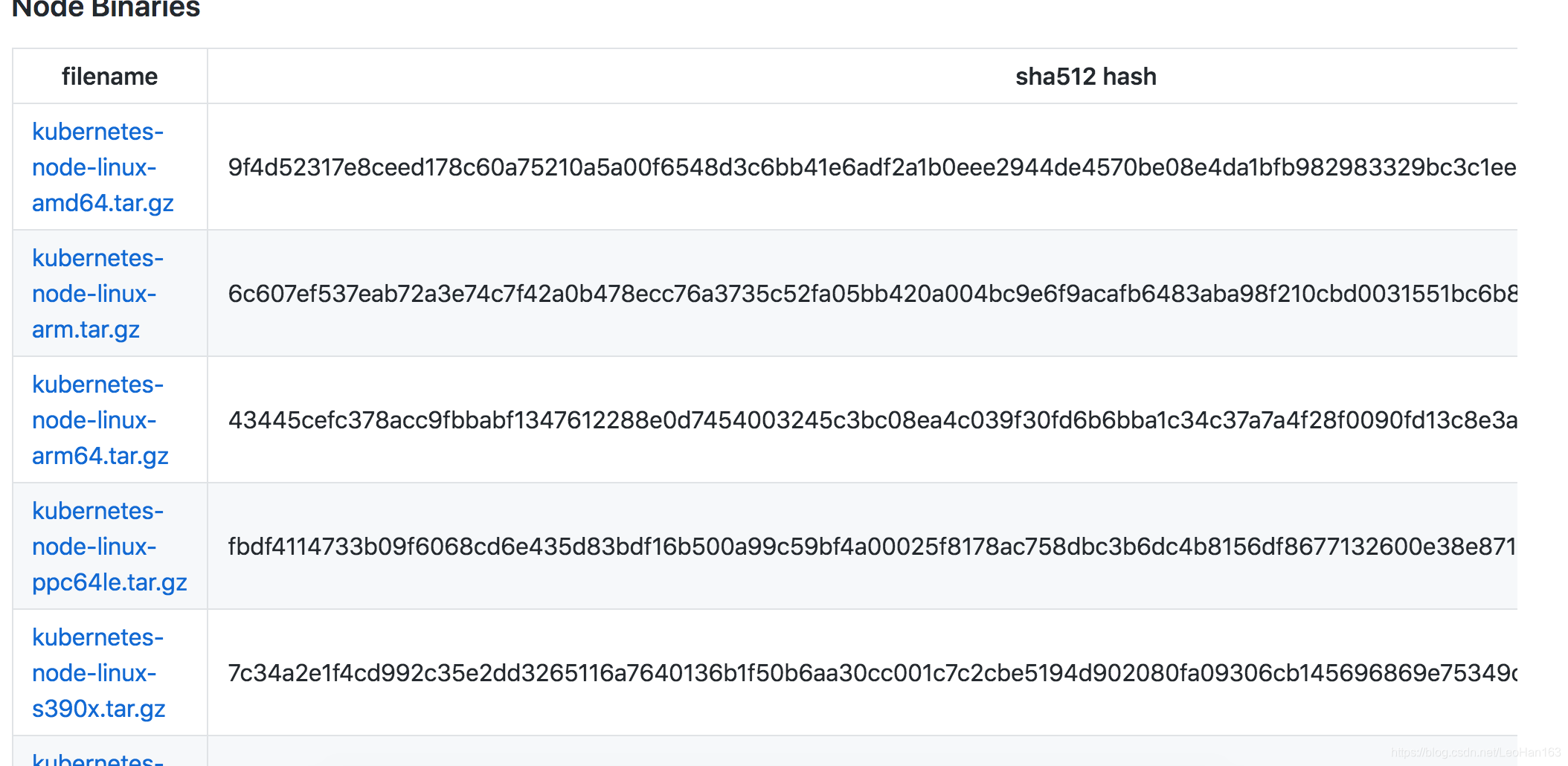

选中一个release的版本,到changlog里面,能看到相关的下载:

进行如下操作:

tar -zxvf kubernetes-server-linux-amd64.tar.gz

cp kubernetes/server/bin/kube-apiserver /usr/bin/

cp kubernetes/server/bin/kube-controller-manager /usr/bin/

cp kubernetes/server/bin/kube-scheduler /usr/bin/

cp kubernetes/server/bin/kubectl /usr/bin/

Write the unit files:

- kube-apiserver

vim kube-apiserver.service

### Write the following content:

[Unit]

Description=Kubernetes API Server

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=/etc/kubernetes/apiserver

ExecStart=/usr/bin/kube-apiserver \

$KUBE_ETCD_SERVERS \

$KUBE_API_ADDRESS \

$KUBE_API_PORT \

$KUBE_SERVICE_ADDRESSES \

$KUBE_ADMISSION_CONTROL \

$KUBE_API_LOG \

$KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Configure kube-apiserver:

vim /etc/kubernetes/apiserver

### Write the following content:

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--insecure-port=8080"

KUBE_ETCD_SERVERS="--etcd-servers=http://10.201.50.144:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.201.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

KUBE_API_LOG="--logtostderr=false --log-dir=/var/log/kubernetes/apiserver --v=2"

KUBE_API_ARGS="--service-account-key-file=/etc/kubernetes/ssl.key"

- kube-controller-manager

vim kube-controller-manager.service

### Write the following content:

[Unit]

Description=Kubernetes Controller Manager

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

EnvironmentFile=-/etc/kubernetes/controller-manager

ExecStart=/usr/bin/kube-controller-manager \

$KUBE_MASTER \

$KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Configure kube-controller-manager:

vim /etc/kubernetes/controller-manager

### Write the following content:

KUBE_MASTER="--master=http://10.201.50.144:8080"

KUBE_CONTROLLER_MANAGER_ARGS="--service-account-private-key-file=/etc/kubernetes/ssl.key"

- kube-scheduler

vim kube-scheduler.service

### Write the following content:

[Unit]

Description=Kubernetes Scheduler

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

User=root

EnvironmentFile=-/etc/kubernetes/scheduler

ExecStart=/usr/bin/kube-scheduler \

$KUBE_MASTER \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Configure kube-scheduler:

vim /etc/kubernetes/scheduler

### Write the following content:

KUBE_MASTER="--master=http://10.201.50.144:8080"

KUBE_SCHEDULER_ARGS="--logtostderr=true --log-dir=/var/log/kubernetes/scheduler --v=2"

Then copy the service files above to /usr/lib/systemd/system/:

cp kube-apiserver.service /usr/lib/systemd/system/

cp kube-controller-manager.service /usr/lib/systemd/system/

cp kube-scheduler.service /usr/lib/systemd/system/

Enable start on boot and start the services:

systemctl daemon-reload

systemctl enable kube-apiserver.service

systemctl start kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl start kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl start kube-scheduler.service

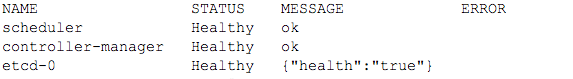

Then verify that everything works:

kubectl get componentstatuses

If the components are reported as healthy, as shown above, the master is set up.
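When one of the components is not healthy, a quick first look is whether each systemd unit is running at all. The helper below is a small sketch, not part of the original setup: check_units and the SYSTEMCTL override variable are hypothetical names introduced here, and the unit names are the ones created in the steps above.

```shell
# SYSTEMCTL can be overridden (for a dry run or a test); defaults to the real tool.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

check_units() {
  for unit in "$@"; do
    # `systemctl is-active` prints the unit state (active, inactive, failed, ...).
    state="$($SYSTEMCTL is-active "$unit" 2>/dev/null || true)"
    # Fall back to "unknown" when no state could be read.
    echo "$unit: ${state:-unknown}"
  done
}

check_units etcd kube-apiserver kube-controller-manager kube-scheduler
```

For any unit that is not active, journalctl -amu with the unit name shows its log, as used for docker earlier.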

Installing a Kubernetes node

Adjust the following settings:

- Set /proc/sys/net/bridge/bridge-nf-call-iptables:

echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

If the file is reported as missing, load the kernel module first and then repeat the command:

modprobe br_netfilter

- Turn off swap:

swapoff -a

Edit /etc/fstab so that swap stays off after a reboot:

sed -ri 's/.*swap.*/#&/' /etc/fstab
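Writing to /proc and running modprobe only last until the next reboot, and the sed command above also prefixes lines that are already commented out. The sketch below shows one way to make the settings persistent and the fstab edit repeatable. It works in a scratch directory with a made-up sample fstab; the file names k8s.conf and br_netfilter.conf are arbitrary choices, and the ROOT variable is an assumption for illustration.

```shell
# ROOT is a scratch directory so the sketch can be tried safely;
# on a real host (as root) set ROOT="" so the files land under /etc.
ROOT="$(mktemp -d)"
mkdir -p "$ROOT/etc/modules-load.d" "$ROOT/etc/sysctl.d"

# Load br_netfilter at boot so the bridge sysctl keys exist.
echo "br_netfilter" > "$ROOT/etc/modules-load.d/br_netfilter.conf"

# Persist the value that the echo into /proc only sets until reboot.
# On a real host, `sysctl --system` applies it immediately.
echo "net.bridge.bridge-nf-call-iptables = 1" > "$ROOT/etc/sysctl.d/k8s.conf"

# Sample fstab for the demonstration (only created if none exists).
FSTAB="$ROOT/etc/fstab"
[ -f "$FSTAB" ] || printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$FSTAB"

# Comment out only active swap entries, so a second run does not stack "#".
sed -ri '/^[^#].*\sswap\s/ s/^/#/' "$FSTAB"
cat "$FSTAB"
```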

A node mainly needs:

- kube-proxy

- kubelet

First, as in the first step of the master installation, download the package:

wget https://dl.k8s.io/v1.18.20/kubernetes-node-linux-amd64.tar.gz

tar -zxvf kubernetes-node-linux-amd64.tar.gz

Then copy the executables to /usr/bin:

cp kubernetes/node/bin/kubectl /usr/bin/

cp kubernetes/node/bin/kubelet /usr/bin/

cp kubernetes/node/bin/kube-proxy /usr/bin/

vim kube-proxy.service

### Write the following content:

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

vim /etc/kubernetes/proxy

### Write the following content:

KUBE_PROXY_ARGS=""

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow_privileged=false"

KUBE_MASTER="--master=http://10.201.50.144:8080"

vim kubelet.service

### Write the following content:

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/data1/kubelet

EnvironmentFile=/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet $KUBELET_ARGS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

Create the kubelet working directory:

mkdir -p /data1/kubelet

vim /etc/kubernetes/kubelet

### Write the following content:

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_HOSTNAME="--hostname-override=nodeIP" #your node ip address

KUBELET_API_SERVER="--api-servers=http://10.201.50.144:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=reg.docker.tb/harbor/pod-infrastructure:latest"

KUBELET_ARGS="--enable-server=true --enable-debugging-handlers=true --fail-swap-on=false --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --cgroup-driver=systemd"

vim /etc/kubernetes/kubelet.kubeconfig

### Write the following content:

    apiVersion: v1
    kind: Config
    users:
    - name: kubelet
    clusters:
    - name: kubernetes
      cluster:
        server: http://10.201.50.144:8080
    contexts:
    - context:
        cluster: kubernetes
        user: kubelet
      name: service-account-context
    current-context: service-account-context

cp kubelet.service /usr/lib/systemd/system/

cp kube-proxy.service /usr/lib/systemd/system/

systemctl daemon-reload

systemctl enable kubelet

systemctl enable kube-proxy

systemctl start kubelet

systemctl start kube-proxy

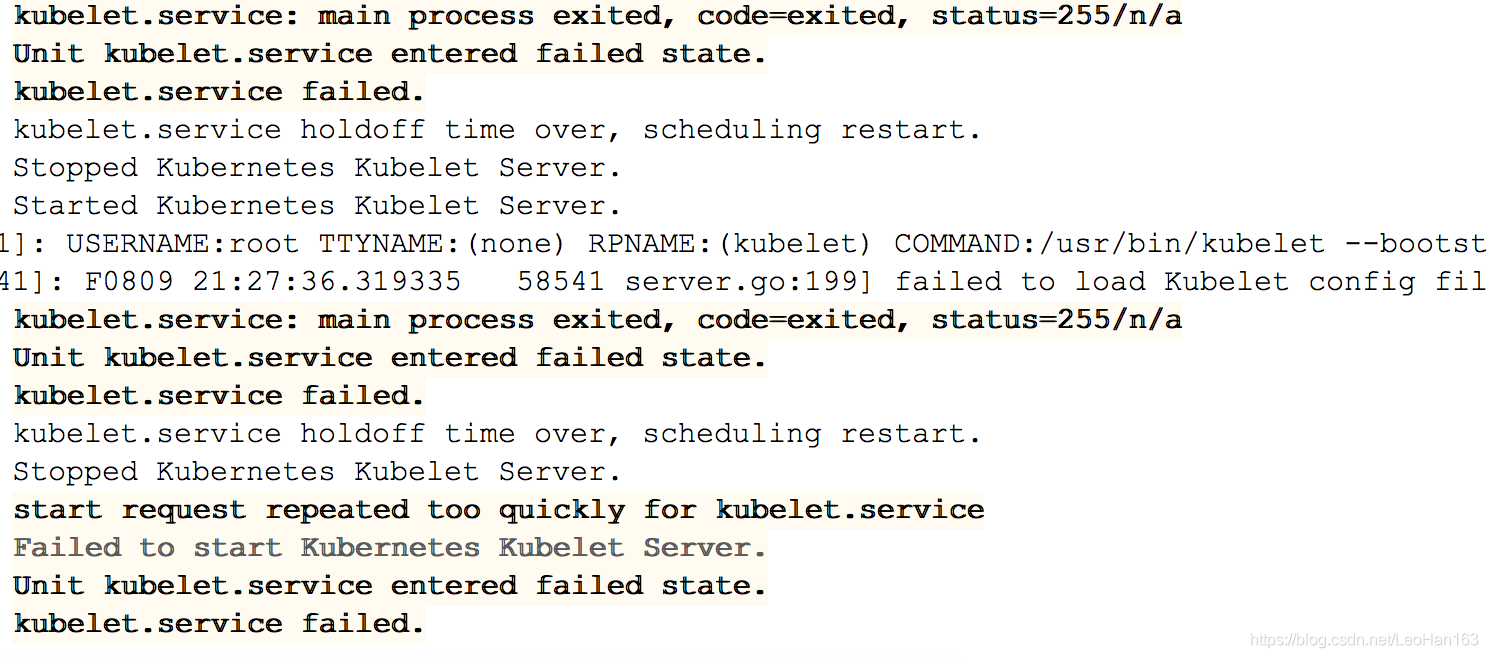

After that, the kubelet service turned out not to be running. Checking with

journalctl -amu kubelet

showed the following errors:

I worked through them one by one:

- Set /proc/sys/net/bridge/bridge-nf-call-iptables:

echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

- Turn off swap:

swapoff -a

Edit /etc/fstab:

sed -ri 's/.*swap.*/#&/' /etc/fstab

- Install conntrack-tools. See https://gist.github.com/codemoran/8309269 for details; the script is copied here for convenience:

#!/bin/bash

# Make sure we have the dev tools

yum groupinstall "Development Tools"

# lets put the source code here

mkdir -p ~/.src

cd ~/.src

# download and install the conntrack dependencies

# libnfnetlink

wget http://netfilter.org/projects/libnfnetlink/files/libnfnetlink-1.0.1.tar.bz2

tar xvfj libnfnetlink-1.0.1.tar.bz2

cd libnfnetlink-1.0.1

./configure && make && make install

cd ..

# libmnl

wget http://netfilter.org/projects/libmnl/files/libmnl-1.0.3.tar.bz2

tar xvjf libmnl-1.0.3.tar.bz2

cd libmnl-1.0.3

./configure && make && make install

cd ..

# libnetfilter

wget http://netfilter.org/projects/libnetfilter_conntrack/files/libnetfilter_conntrack-1.0.4.tar.bz2

tar xvjf libnetfilter_conntrack-1.0.4.tar.bz2

cd libnetfilter_conntrack-1.0.4

./configure PKG_CONFIG_PATH=/usr/local/lib/pkgconfig && make && make install

cd ..

# libnetfilter_cttimeout

wget http://netfilter.org/projects/libnetfilter_cttimeout/files/libnetfilter_cttimeout-1.0.0.tar.bz2

tar xvjf libnetfilter_cttimeout-1.0.0.tar.bz2

cd libnetfilter_cttimeout-1.0.0

./configure PKG_CONFIG_PATH=/usr/local/lib/pkgconfig && make && make install

cd ..

# libnetfilter_cthelper

wget http://netfilter.org/projects/libnetfilter_cthelper/files/libnetfilter_cthelper-1.0.0.tar.bz2

tar xvfj libnetfilter_cthelper-1.0.0.tar.bz2

cd libnetfilter_cthelper-1.0.0

./configure PKG_CONFIG_PATH=/usr/local/lib/pkgconfig && make && make install

cd ..

# libnetfilter_queue

wget http://netfilter.org/projects/libnetfilter_queue/files/libnetfilter_queue-1.0.2.tar.bz2

tar xvjf libnetfilter_queue-1.0.2.tar.bz2

cd libnetfilter_queue-1.0.2

./configure PKG_CONFIG_PATH=/usr/local/lib/pkgconfig && make && make install

cd ..

# Conntrack-tools

wget http://www.netfilter.org/projects/conntrack-tools/files/conntrack-tools-1.4.2.tar.bz2

tar xvjf conntrack-tools-1.4.2.tar.bz2

cd conntrack-tools-1.4.2

./configure PKG_CONFIG_PATH=/usr/local/lib/pkgconfig && make && make install

./configure --prefix=/usr PKG_CONFIG_PATH=/usr/local/lib/pkgconfig/ && make && make install

# all done, test

conntrack -L

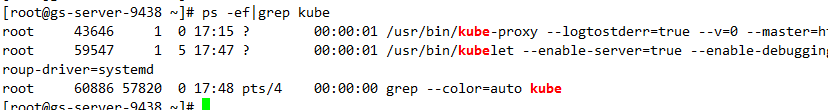

Start kubelet again:

systemctl start kubelet

Check the process; it started successfully:

Then create a deployment on the master node:

vim nginx.yml

### Write the following content:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.12.2
            ports:

Then run:

kubectl create -f nginx.yml

Check the status:

kubectl get deploy

kubectl get pods

The pods stay in this state:

ContainerCreating

kubectl describe pod gives the details:

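The master and node cannot reach the Internet, so the pause image cannot be pulled and the pause container for the pod cannot be created. Find a machine with Internet access and proceed as follows:

- docker pull the matching version of the pause image

- docker save the image to a local file

- docker load the image file on the target machine

- Restart docker and kubelet on that machine

After these steps it works. (This assumes the machines without Internet access have a private registry from which images can be downloaded.)

The pod status then returns to normal.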
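The four steps can be strung together roughly as follows. This is a dry-run sketch that only prints the commands: the image name and tag, the node host name, and the run and transfer_pause_image helpers are all assumptions for illustration. Use the exact image reported by kubectl describe pod, and set DRY_RUN=0 only on a machine that has docker and SSH access to the node.

```shell
# Placeholders: take the image from `kubectl describe pod`, and use your node's host name.
PAUSE_IMAGE="k8s.gcr.io/pause:3.2"
NODE_HOST="node1.example.internal"
DRY_RUN=1   # set to 0 to actually execute the commands

run() {
  # Print each command; execute it only when DRY_RUN is 0.
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

transfer_pause_image() {
  run docker pull "$PAUSE_IMAGE"                        # on the online machine
  run docker save -o pause.tar "$PAUSE_IMAGE"           # export to a tar file
  run scp pause.tar "root@$NODE_HOST:/tmp/pause.tar"    # copy to the offline node
  run ssh "root@$NODE_HOST" docker load -i /tmp/pause.tar
  run ssh "root@$NODE_HOST" systemctl restart docker kubelet
}

plan="$(transfer_pause_image)"
echo "$plan"
```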