Prepare the environment

Prepare one virtual machine running CentOS 7, installed with VMware Workstation.

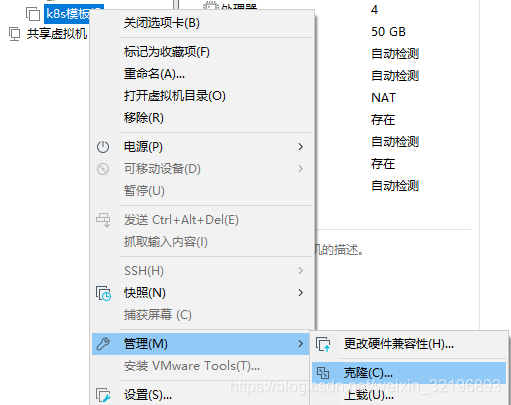

Clone five virtual machines from the installed one.

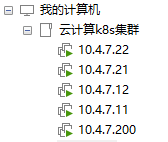

The result after the clones are created:

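Change the VMnet1 and VMnet8 settings: in VMware Workstation, click Edit -> Virtual Network Editor and modify them in the dialog that opens. The VMnet8 (NAT) network must match the addresses used below: subnet 10.4.7.0/24 with gateway 10.4.7.254.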

The IP plan and hostnames for the five VMs:

| Hostname | IP |
|---|---|
| hdss7-11.host.com | 10.4.7.11 |
| hdss7-12.host.com | 10.4.7.12 |
| hdss7-21.host.com | 10.4.7.21 |
| hdss7-22.host.com | 10.4.7.22 |
| hdss7-200.host.com | 10.4.7.200 |
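Each hostname is derived from the last octet of its address, so one can be computed from the other. Below is a minimal POSIX shell sketch of that mapping. `fqdn_for_ip` is a hypothetical helper, not part of the original setup; it only encodes the naming convention in the table above.

```shell
#!/bin/sh
# Derive this guide's hostname from a 10.4.7.x address:
# the last octet becomes the suffix of hdss7-<octet>.host.com.
fqdn_for_ip() {
    octet=${1##*.}            # strip everything up to the last dot
    printf 'hdss7-%s.host.com\n' "$octet"
}

# Print the whole plan as "IP FQDN" pairs.
for ip in 10.4.7.11 10.4.7.12 10.4.7.21 10.4.7.22 10.4.7.200; do
    printf '%s %s\n' "$ip" "$(fqdn_for_ip "$ip")"
done
```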

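Change the VM's IP address

Edit /etc/sysconfig/network-scripts/ifcfg-ens33 and change its contents to the following. The example is for hdss7-11; on each VM, use its own IPADDR from the plan above.

    TYPE=Ethernet
    BOOTPROTO=none
    NAME=ens33
    ONBOOT=yes
    IPADDR=10.4.7.11
    NETMASK=255.255.255.0
    GATEWAY=10.4.7.254
    DNS1=10.4.7.254

Then restart the network service so the new address takes effect:

    systemctl restart network

Change the hostname, again using each VM's own name:

    hostnamectl set-hostname hdss7-11.host.com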
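Instead of hand-editing the file on every VM, you can render it from the IP address. This is a sketch that assumes the netmask and gateway shown above, with DNS defaulting to the gateway until bind is set up. `render_ifcfg` is a hypothetical helper. The example writes to a scratch directory rather than /etc, so you can review the file before copying it into place.

```shell
#!/bin/sh
# Render ifcfg-ens33 for one host of the plan.
# Usage: render_ifcfg IP [DNS]   (DNS defaults to the gateway 10.4.7.254)
render_ifcfg() {
    ip=$1
    dns=${2:-10.4.7.254}
    cat <<EOF
TYPE=Ethernet
BOOTPROTO=none
NAME=ens33
ONBOOT=yes
IPADDR=$ip
NETMASK=255.255.255.0
GATEWAY=10.4.7.254
DNS1=$dns
EOF
}

# Example: the file for hdss7-21, written to a scratch directory for review.
out_dir=$(mktemp -d)
render_ifcfg 10.4.7.21 > "$out_dir/ifcfg-ens33"
cat "$out_dir/ifcfg-ens33"
```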

Turn off SELinux and the firewalld service

    setenforce 0
    systemctl disable firewalld
    systemctl stop firewalld
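`setenforce 0` only switches SELinux to permissive mode until the next reboot. The persistent setting on CentOS 7 is the `SELINUX=` line in /etc/selinux/config, and a change there takes effect at the next boot. Below is a sketch that rewrites that line. `disable_selinux_in` is a hypothetical helper that takes the file path as an argument, so you can try it on a scratch copy first.

```shell
#!/bin/sh
# Set SELINUX=disabled in an SELinux config file (default /etc/selinux/config).
# Only the SELINUX= line is rewritten; SELINUXTYPE= is left untouched.
disable_selinux_in() {
    conf=${1:-/etc/selinux/config}
    tmp=$(mktemp)
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# Try it on a scratch copy before touching the real file.
sample=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$sample"
disable_selinux_in "$sample"
cat "$sample"
```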

Install the EPEL repository

    yum install -y epel-release

Install the required tools

    yum install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils -y

Install bind on hdss7-11

Install bind

    yum install bind -y

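Edit the main bind configuration file, /etc/named.conf. In the options block, change the following settings (the forwarders line is new):

    listen-on port 53 { 10.4.7.11; };
    allow-query     { any; };
    forwarders      { 10.4.7.254; };
    dnssec-enable no;
    dnssec-validation no;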

Check the configuration file for errors:

    named-checkconf

Append the following to the zone configuration file /etc/named.rfc1912.zones:

    zone "host.com" IN {
            type  master;
            file  "host.com.zone";
            allow-update { 10.4.7.11; };
    };

    zone "od.com" IN {
            type  master;
            file  "od.com.zone";
            allow-update { 10.4.7.11; };
    };

Create the host.com zone file, /var/named/host.com.zone:

    $ORIGIN host.com.
    $TTL 600    ; 10 minutes
    @       IN SOA  dns.host.com. dnsadmin.host.com. (
                    2021082201 ; serial
                    10800      ; refresh (3 hours)
                    900        ; retry (15 minutes)
                    604800     ; expire (1 week)
                    86400      ; minimum (1 day)
                    )
                    NS   dns.host.com.
    $TTL 60     ; 1 minute
    dns             A    10.4.7.11
    HDSS7-11        A    10.4.7.11
    HDSS7-12        A    10.4.7.12
    HDSS7-21        A    10.4.7.21
    HDSS7-22        A    10.4.7.22
    HDSS7-200       A    10.4.7.200
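The A records above follow the same last-octet naming as the IP plan, so you can generate them instead of typing them. This is a sketch only. `zone_a_records` is a hypothetical helper, not part of bind, and DNS names are case-insensitive, so the uppercase spelling simply mirrors the file above.

```shell
#!/bin/sh
# Print host.com A records (HDSS7-<last octet>  A  <ip>) for the given addresses.
zone_a_records() {
    for ip in "$@"; do
        printf 'HDSS7-%-8s A    %s\n' "${ip##*.}" "$ip"
    done
}

zone_a_records 10.4.7.11 10.4.7.12 10.4.7.21 10.4.7.22 10.4.7.200
```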

Create the od.com zone file, /var/named/od.com.zone:

    $ORIGIN od.com.
    $TTL 600    ; 10 minutes
    @       IN SOA  dns.od.com. dnsadmin.od.com. (
                    2021082201 ; serial
                    10800      ; refresh (3 hours)
                    900        ; retry (15 minutes)
                    604800     ; expire (1 week)
                    86400      ; minimum (1 day)
                    )
                    NS   dns.od.com.
    $TTL 60     ; 1 minute
    dns             A    10.4.7.11

When the configuration is complete, check it for errors again:

    named-checkconf
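Secondary servers and caches use the SOA serial to detect that a zone has changed, so the convention is to increase it on every edit. The serials here follow the YYYYMMDDNN pattern (2021082201 is change 01 on 2021-08-22). Below is a sketch that increments a zone file's serial in place. It assumes the serial is the first field on the line ending in `; serial`, as in the files above. `bump_serial` is hypothetical and does a plain +1; it does not reset the value to today's date.

```shell
#!/bin/sh
# Increment the SOA serial of a zone file in place and print "old -> new".
bump_serial() {
    zone=$1
    old=$(awk '/; *serial/ { print $1; exit }' "$zone")
    [ -n "$old" ] || { echo "no serial found in $zone" >&2; return 1; }
    new=$((old + 1))
    tmp=$(mktemp)
    # Replace only the number on the "; serial" line, keeping its indentation.
    sed "s/^\([[:space:]]*\)$old\([[:space:]]*; *serial\)/\1$new\2/" "$zone" > "$tmp" \
        && cat "$tmp" > "$zone"
    rm -f "$tmp"
    echo "$old -> $new"
}

# Try it on a scratch file shaped like the SOA record above.
sample=$(mktemp)
printf '@ IN SOA dns.od.com. dnsadmin.od.com. (\n        2021082201 ; serial\n        10800      ; refresh (3 hours)\n        )\n' > "$sample"
bump_serial "$sample"
```

After editing the real file, restart named (or reload the zone) as the guide does.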

Start named and verify it

    systemctl start named
    # check that port 53 is listening
    netstat -luntp | grep 53
    # check name resolution
    dig -t A hdss7-21.host.com @10.4.7.11 +short

On all five VMs, edit /etc/sysconfig/network-scripts/ifcfg-ens33 and change the DNS server to 10.4.7.11:

    DNS1=10.4.7.11

Then apply the change with `systemctl restart network`.

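On all five VMs, edit /etc/resolv.conf and add the following line, so that short names such as hdss7-200 resolve in the host.com domain:

    search host.com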
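Both edits above can be scripted so that they are safe to repeat on every VM. Below is a sketch with two hypothetical helpers, `set_dns1` and `ensure_search`. They take file paths, so you can try them on copies before pointing them at /etc/sysconfig/network-scripts/ifcfg-ens33 and /etc/resolv.conf.

```shell
#!/bin/sh
# set_dns1 IFCFG_FILE DNS_IP: replace any DNS1= line with the given server.
set_dns1() {
    tmp=$(mktemp)
    grep -v '^DNS1=' "$1" > "$tmp"
    echo "DNS1=$2" >> "$tmp"
    cat "$tmp" > "$1"
    rm -f "$tmp"
}

# ensure_search RESOLV_CONF DOMAIN: append "search DOMAIN" only if it is missing.
ensure_search() {
    grep -qx "search $2" "$1" || echo "search $2" >> "$1"
}

# Try both on scratch copies.
ifcfg=$(mktemp); resolv=$(mktemp)
printf 'IPADDR=10.4.7.21\nDNS1=10.4.7.254\n' > "$ifcfg"
printf 'nameserver 10.4.7.11\n' > "$resolv"
set_dns1 "$ifcfg" 10.4.7.11
ensure_search "$resolv" host.com
ensure_search "$resolv" host.com   # a second run adds nothing
cat "$ifcfg" "$resolv"
```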

On the Windows host, set the DNS server of the VMnet8 network adapter to 10.4.7.11.

Verify from the Windows host that name resolution works:

    ping hdss7-200.host.com

Prepare the certificate signing environment (on the ops host hdss7-200.host.com)

Install CFSSL and make the downloaded binaries executable:

    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo
    chmod +x /usr/bin/cfssl*

Create the /opt/certs directory (`mkdir -p /opt/certs`), and in it the file /opt/certs/ca-csr.json:

    {
        "CN": "FengYun",
        "hosts": [
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "od",
                "OU": "ops"
            }
        ],
        "ca": {
            "expiry": "175200h"
        }
    }

Self-sign the CA certificate (run in /opt/certs):

    cd /opt/certs
    cfssl gencert -initca ca-csr.json | cfssl-json -bare ca
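`cfssl-json -bare ca` writes three files into the current directory: `ca.pem` (the certificate), `ca-key.pem` (the private key) and `ca.csr` (the signing request). The small sketch below checks that a `-bare` prefix produced all three, non-empty, so a failed cfssl run is caught before any files are copied to other hosts. `check_bare` is a hypothetical helper.

```shell
#!/bin/sh
# check_bare DIR PREFIX: verify PREFIX.pem, PREFIX-key.pem and PREFIX.csr exist
# and are non-empty in DIR; report missing files and return non-zero if any are.
check_bare() {
    missing=0
    for f in "$2.pem" "$2-key.pem" "$2.csr"; do
        if [ ! -s "$1/$f" ]; then
            echo "missing or empty: $1/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# On hdss7-200 this would be run as:
#   check_bare /opt/certs ca && cfssl-certinfo -cert /opt/certs/ca.pem
```

`cfssl-certinfo -cert ca.pem`, installed above, prints the certificate's subject and validity for a closer look.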

Deploy the Docker environment (on hdss7-200.host.com, hdss7-21.host.com and hdss7-22.host.com)

- Install Docker on all three VMs:

      curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

- Create the directories on all three VMs:

      mkdir -p /data/docker /etc/docker

- On hdss7-21.host.com, create /etc/docker/daemon.json with the following content:

      {
        "data-root": "/data/docker",
        "storage-driver": "overlay2",
        "insecure-registries": ["registry.access.redhat.com", "quay.io", "harbor.od.com"],
        "registry-mirrors": ["https://k7sj660n.mirror.aliyuncs.com"],
        "bip": "172.7.21.1/24",
        "exec-opts": ["native.cgroupdriver=systemd"],
        "live-restore": true
      }

  On hdss7-200.host.com and hdss7-22.host.com, use the same file but change "bip" to "172.7.200.1/24" and "172.7.22.1/24" respectively. ("data-root" replaces the deprecated "graph" option found in older guides.)

- Start Docker and enable it at boot on all three VMs:

      systemctl start docker
      systemctl enable docker
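The bip value follows the host's last octet. Each Docker host therefore gets its own container subnet, and a container's address shows which host it runs on. The sketch below derives the value. `bip_for_ip` is a hypothetical helper that only encodes this guide's 172.7.X.1/24 convention.

```shell
#!/bin/sh
# Map a host IP to this guide's docker0 bridge address: 10.4.7.X -> 172.7.X.1/24.
bip_for_ip() {
    printf '172.7.%s.1/24\n' "${1##*.}"
}

for ip in 10.4.7.21 10.4.7.22 10.4.7.200; do
    printf '%s  "bip": "%s"\n' "$ip" "$(bip_for_ip "$ip")"
done
```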

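Deploy the private Docker image registry Harbor (hdss7-200.host.com)

- Download the offline installer package, harbor-offline-installer-v1.10.7.tgz.

- Extract the package:

      tar -xvf harbor-offline-installer-v1.10.7.tgz -C /opt/

- Rename the directory and create a symlink, which makes upgrades easier:

      cd /opt/
      mv harbor/ harbor-v1.10.7
      ln -s /opt/harbor-v1.10.7/ /opt/harbor

- In /opt/harbor, edit the harbor.yml configuration file: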

      hostname: harbor.od.com
      http:
        port: 180
      # https related config
      #https:
      #  # https port for harbor, default is 443
      #  port: 443
      #  # The path of cert and key files for nginx
      #  certificate: /your/certificate/path
      #  private_key: /your/private/key/path
      harbor_admin_password: Harbor12345
      data_volume: /data/harbor
      log:
        local:
          location: /data/harbor/logs

- Install docker-compose:

      yum install docker-compose -y

- Run the installer script:

      /opt/harbor/install.sh

- From the harbor directory, verify the installation:

      cd /opt/harbor
      docker-compose ps

Install nginx (hdss7-200.host.com)

- Install it:

      yum install nginx -y

- Configure Harbor in nginx: edit /etc/nginx/conf.d/harbor.od.com.conf and add the following:

      server {
          listen       80;
          server_name  harbor.od.com;

          client_max_body_size 1000m;

          location / {
              proxy_pass http://127.0.0.1:180;
          }
      }

  After saving and exiting, check the configuration and start nginx:

      nginx -t
      systemctl start nginx
      systemctl enable nginx

- Run curl:

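      curl harbor.od.com

  It may fail with the following error:

      curl: (6) Could not resolve host: harbor.od.com; Unknown error

- The name harbor.od.com is not in DNS yet. Go back to hdss7-11.host.com and edit /var/named/od.com.zone: change the serial from 2021082201 to 2021082202 and add the record `harbor A 10.4.7.200`. The complete file then reads:

      $ORIGIN od.com.
      $TTL 600    ; 10 minutes
      @       IN SOA  dns.od.com. dnsadmin.od.com. (
                      2021082202 ; serial
                      10800      ; refresh (3 hours)
                      900        ; retry (15 minutes)
                      604800     ; expire (1 week)
                      86400      ; minimum (1 day)
                      )
                      NS   dns.od.com.
      $TTL 60     ; 1 minute
      dns             A    10.4.7.11
      harbor          A    10.4.7.200

- Restart DNS and test the resolution (on hdss7-11.host.com):

      systemctl restart named
      dig -t A harbor.od.com +short

- Go back to hdss7-200.host.com and run curl again to make sure it now succeeds:

      curl harbor.od.com

- Test the private Harbor registry: log in at http://harbor.od.com as user admin, with the harbor_admin_password set in harbor.yml.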

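- Create a project named `public` (the image path used below is harbor.od.com/public/...).

- Pull the nginx image from Docker Hub, tag it, and push it to your Harbor registry:

      docker pull nginx:1.7.9
      docker tag 84581e99d807 harbor.od.com/public/nginx:v1.7.9   # 84581e99d807 is the image ID
      docker login harbor.od.com                                 # log in to your own Harbor
      docker push harbor.od.com/public/nginx:v1.7.9              # push the image to your Harbor

- The image has now been pushed to the private registry.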
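`docker tag` also accepts the source image by name instead of by ID, which saves looking the ID up. The sketch below builds the Harbor reference from a Docker Hub `name:tag`. `harbor_ref` is hypothetical and assumes a plain `name:tag` with no registry prefix. The `v` prefix on the tag only follows this guide's example. The block prints the commands instead of running them.

```shell
#!/bin/sh
# harbor_ref IMAGE:TAG PROJECT -> harbor.od.com/PROJECT/IMAGE:vTAG
harbor_ref() {
    name=${1%%:*}        # part before the first colon
    tag=${1#*:}          # part after it
    printf 'harbor.od.com/%s/%s:v%s\n' "$2" "$name" "$tag"
}

src=nginx:1.7.9
dst=$(harbor_ref "$src" public)
echo "docker tag $src $dst && docker push $dst"
```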

Install the etcd cluster (hdss7-12.host.com, hdss7-21.host.com, hdss7-22.host.com)

etcd cluster plan

| Hostname | Role | IP |
|---|---|---|
| hdss7-12.host.com | etcd leader | 10.4.7.12 |
| hdss7-21.host.com | etcd follower | 10.4.7.21 |
| hdss7-22.host.com | etcd follower | 10.4.7.22 |

Create the signing configuration for the root CA (hdss7-200.host.com), /opt/certs/ca-config.json. The `peer` profile combines server auth and client auth, because etcd members act as both server and client toward each other.

    {
        "signing": {
            "default": {
                "expiry": "175200h"
            },
            "profiles": {
                "server": {
                    "expiry": "175200h",
                    "usages": [
                        "signing",
                        "key encipherment",
                        "server auth"
                    ]
                },
                "client": {
                    "expiry": "175200h",
                    "usages": [
                        "signing",
                        "key encipherment",
                        "client auth"
                    ]
                },
                "peer": {
                    "expiry": "175200h",
                    "usages": [
                        "signing",
                        "key encipherment",
                        "server auth",
                        "client auth"
                    ]
                }
            }
        }
    }
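Every expiry in ca-config.json, and the CA's own expiry in ca-csr.json, is 175200h. cfssl counts in hours, so a quick conversion shows the actual lifetime: 175200 / 24 = 7300 days, which is 20 years of 365 days (leap days ignored). `hours_to_years` is a hypothetical helper.

```shell
#!/bin/sh
# Convert a cfssl expiry in hours to whole 365-day years.
hours_to_years() {
    echo $(( $1 / 24 / 365 ))
}

echo "175200h = $(( 175200 / 24 )) days = $(hours_to_years 175200) years"
```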

Create the etcd certificate (hdss7-200.host.com)

- Create the certificate signing request file /opt/certs/etcd-peer-csr.json:

      {
          "CN": "k8s-etcd",
          "hosts": [
              "10.4.7.11",
              "10.4.7.12",
              "10.4.7.21",
              "10.4.7.22"
          ],
          "key": {
              "algo": "rsa",
              "size": 2048
          },
          "names": [
              {
                  "C": "CN",
                  "ST": "beijing",
                  "L": "beijing",
                  "O": "od",
                  "OU": "ops"
              }
          ]
      }

- Generate the certificate (in /opt/certs):

      cd /opt/certs
      cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

Install etcd (starting with hdss7-12.host.com)

Create the user

    useradd -s /sbin/nologin -M etcd
    id etcd

Download and extract etcd

    wget https://github.com/etcd-io/etcd/releases/download/v3.1.20/etcd-v3.1.20-linux-amd64.tar.gz
    tar -xf etcd-v3.1.20-linux-amd64.tar.gz -C /opt/
    cd /opt/
    mv etcd-v3.1.20-linux-amd64 etcd-v3.1.20
    ln -s /opt/etcd-v3.1.20/ /opt/etcd

Create the directories and copy the certificates and private key

    mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

Copy ca.pem, etcd-peer-key.pem and etcd-peer.pem from the ops host hdss7-200.host.com into /opt/etcd/certs. The private key file must have mode 600:

    cd /opt/etcd/certs
    scp hdss7-200:/opt/certs/ca.pem .
    scp hdss7-200:/opt/certs/etcd-peer.pem .
    scp hdss7-200:/opt/certs/etcd-peer-key.pem .
    chmod 600 etcd-peer-key.pem

Create the etcd startup script /opt/etcd/etcd-server-startup.sh. When you create it on hdss7-21 or hdss7-22, change the member name and the IP addresses accordingly.

    #!/bin/sh
    ./etcd --name etcd-server-7-12 \
           --data-dir /data/etcd/etcd-server \
           --listen-peer-urls https://10.4.7.12:2380 \
           --listen-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
           --quota-backend-bytes 8000000000 \
           --initial-advertise-peer-urls https://10.4.7.12:2380 \
           --advertise-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
           --initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \
           --ca-file ./certs/ca.pem \
           --cert-file ./certs/etcd-peer.pem \
           --key-file ./certs/etcd-peer-key.pem \
           --client-cert-auth \
           --trusted-ca-file ./certs/ca.pem \
           --peer-ca-file ./certs/ca.pem \
           --peer-cert-file ./certs/etcd-peer.pem \
           --peer-key-file ./certs/etcd-peer-key.pem \
           --peer-client-cert-auth \
           --peer-trusted-ca-file ./certs/ca.pem \
           --log-output stdout
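Only the member name and the host's own IP differ between the three copies of this script. Rendering the script from those two values keeps the copies from drifting apart. This is a sketch: `render_etcd_startup` is hypothetical, and the flags are copied from the script above (etcd v3.1.20 flag names).

```shell
#!/bin/sh
# render_etcd_startup SUFFIX IP: print etcd-server-startup.sh for one member,
# e.g. render_etcd_startup 7-21 10.4.7.21
render_etcd_startup() {
    name=etcd-server-$1
    ip=$2
    cluster=etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380
    # "\\" in this unquoted here-document emits a single backslash.
    cat <<EOF
#!/bin/sh
./etcd --name $name \\
       --data-dir /data/etcd/etcd-server \\
       --listen-peer-urls https://$ip:2380 \\
       --listen-client-urls https://$ip:2379,http://127.0.0.1:2379 \\
       --quota-backend-bytes 8000000000 \\
       --initial-advertise-peer-urls https://$ip:2380 \\
       --advertise-client-urls https://$ip:2379,http://127.0.0.1:2379 \\
       --initial-cluster $cluster \\
       --ca-file ./certs/ca.pem \\
       --cert-file ./certs/etcd-peer.pem \\
       --key-file ./certs/etcd-peer-key.pem \\
       --client-cert-auth \\
       --trusted-ca-file ./certs/ca.pem \\
       --peer-ca-file ./certs/ca.pem \\
       --peer-cert-file ./certs/etcd-peer.pem \\
       --peer-key-file ./certs/etcd-peer-key.pem \\
       --peer-client-cert-auth \\
       --peer-trusted-ca-file ./certs/ca.pem \\
       --log-output stdout
EOF
}

# Example: the script for hdss7-21, written to a scratch file for review.
out=$(mktemp)
render_etcd_startup 7-21 10.4.7.21 > "$out"
cat "$out"
```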

Make the script executable and change the owner

    chmod +x /opt/etcd/etcd-server-startup.sh
    chown -R etcd:etcd /opt/etcd-v3.1.20/
    chown -R etcd:etcd /data/etcd/
    chown -R etcd:etcd /data/logs/etcd-server/

Install supervisor (a tool that manages background processes)

    yum install supervisor -y
    systemctl start supervisord
    systemctl enable supervisord

Create the supervisor configuration for etcd-server, /etc/supervisord.d/etcd-server.ini. On the other hosts, rename the program to match, e.g. etcd-server-7-21.

    [program:etcd-server-7-12]
    command=/opt/etcd/etcd-server-startup.sh
    numprocs=1
    directory=/opt/etcd
    autostart=true
    autorestart=true
    startsecs=30
    startretries=3
    exitcodes=0,2
    stopsignal=QUIT
    stopwaitsecs=10
    user=etcd
    redirect_stderr=true
    stdout_logfile=/data/logs/etcd-server/etcd.stdout.log
    stdout_logfile_maxbytes=64MB
    stdout_logfile_backups=4
    stdout_capture_maxbytes=1MB
    stdout_events_enabled=false

Start etcd

    supervisorctl update
    supervisorctl status
    tail -fn 200 /data/logs/etcd-server/etcd.stdout.log   # view the startup log
    netstat -luntp | grep etcd

Likewise, install etcd on hdss7-21.host.com and hdss7-22.host.com by repeating the steps above, from creating the user to starting etcd.

Check the cluster health with etcdctl (in /opt/etcd):

    ./etcdctl cluster-health

Output like the following means the cluster is healthy:

    member 988139385f78284 is healthy: got healthy result from http://127.0.0.1:2379
    member 5a0ef2a004fc4349 is healthy: got healthy result from http://127.0.0.1:2379
    member f4a0cb0a765574a8 is healthy: got healthy result from http://127.0.0.1:2379
    cluster is healthy

List the members with `./etcdctl member list`. The isLeader field shows which member is the leader:

    988139385f78284: name=etcd-server-7-22 peerURLs=https://10.4.7.22:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.22:2379 isLeader=false
    5a0ef2a004fc4349: name=etcd-server-7-21 peerURLs=https://10.4.7.21:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.21:2379 isLeader=false
    f4a0cb0a765574a8: name=etcd-server-7-12 peerURLs=https://10.4.7.12:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=true
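For monitoring scripts it helps to reduce the two outputs above to a single answer each. Below is a sketch with two hypothetical helpers that read etcdctl output on stdin. It assumes the etcd v3.1 etcdctl output format shown above.

```shell
#!/bin/sh
# etcd_leader: print the name= of the member whose line says isLeader=true.
etcd_leader() {
    awk '/isLeader=true/ {
        for (i = 1; i <= NF; i++)
            if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
    }'
}

# cluster_healthy: succeed only if the last line is "cluster is healthy".
cluster_healthy() {
    tail -n 1 | grep -qx 'cluster is healthy'
}

# Typical use on any member:
#   cd /opt/etcd
#   ./etcdctl member list | etcd_leader
#   ./etcdctl cluster-health | cluster_healthy && echo ok
```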

Deploy the kube-apiserver cluster

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| hdss7-21.host.com | kube-apiserver | 10.4.7.21 |
| hdss7-22.host.com | kube-apiserver | 10.4.7.22 |
| hdss7-11.host.com | L4 load balancer | 10.4.7.11 |
| hdss7-12.host.com | L4 load balancer | 10.4.7.12 |

Here, 10.4.7.11 and 10.4.7.12 run nginx as a layer-4 load balancer, and keepalived holds the virtual IP (VIP) 10.4.7.10. Together they proxy the two kube-apiservers, making the apiserver highly available.

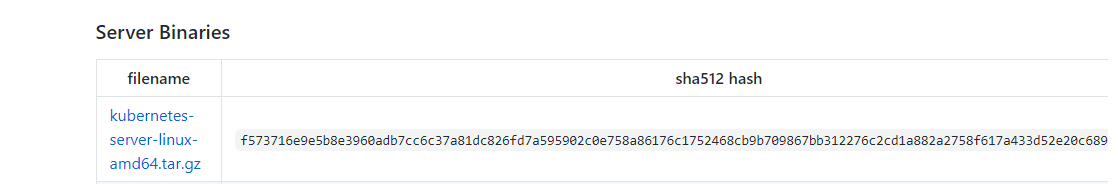

Download the Kubernetes package, extract it, and create a symlink

- Download the binary package: https://dl.k8s.io/v1.15.2/kubernetes-server-linux-amd64.tar.gz

- Extract it and create a symlink:

      tar -xf kubernetes-server-linux-amd64.tar.gz -C /opt/
      cd /opt/
      mv kubernetes kubernetes-v1.15.2
      ln -s /opt/kubernetes-v1.15.2/ /opt/kubernetes

Issue the client certificate (used by the apiserver to talk to the etcd cluster)

In this connection the apiserver is the client and etcd is the server.

- Create the JSON file for the certificate signing request (CSR), /opt/certs/client-csr.json (on hdss7-200.host.com):

      {
          "CN": "k8s-node",
          "hosts": [
          ],
          "key": {
              "algo": "rsa",
              "size": 2048
          },
          "names": [
              {
                  "C": "CN",
                  "ST": "beijing",
                  "L": "beijing",
                  "O": "od",
                  "OU": "ops"
              }
          ]
      }

- Generate the certificate:

      cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json | cfssl-json -bare client

Issue the kube-apiserver certificate (used when other components access the apiserver)

- Create the CSR JSON file /opt/certs/apiserver-csr.json (on hdss7-200.host.com):

      {
          "CN": "k8s-apiserver",
          "hosts": [
              "127.0.0.1",
              "192.168.0.1",
              "kubernetes.default",
              "kubernetes.default.svc",
              "kubernetes.default.svc.cluster",
              "kubernetes.default.svc.cluster.local",
              "10.4.7.10",
              "10.4.7.21",
              "10.4.7.22",
              "10.4.7.23"
          ],
          "key": {
              "algo": "rsa",
              "size": 2048
          },
          "names": [
              {
                  "C": "CN",
                  "ST": "beijing",
                  "L": "beijing",
                  "O": "od",
                  "OU": "ops"
              }
          ]
      }

- Generate the certificate:

      cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json | cfssl-json -bare apiserver
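192.168.0.1 is in the hosts list because the in-cluster `kubernetes` Service takes the first address of `--service-cluster-ip-range` (192.168.0.0/16 in the apiserver script that follows). Pods reach the apiserver through that address, so the certificate must be valid for it. The sketch below computes the address from a CIDR with plain shell arithmetic. `first_service_ip` is a hypothetical helper.

```shell
#!/bin/sh
# first_service_ip CIDR: print the first usable IPv4 address of the range.
first_service_ip() {
    addr=${1%/*}
    bits=${1#*/}
    # Split the dotted quad into the function's positional parameters.
    old_ifs=$IFS; IFS=.
    set -- $addr
    IFS=$old_ifs
    n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    n=$(( (n & mask) + 1 ))          # network address + 1
    printf '%d.%d.%d.%d\n' $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) \
        $(( (n >> 8) & 255 )) $(( n & 255 ))
}

first_service_ip 192.168.0.0/16   # prints 192.168.0.1
```

If you ever change `--service-cluster-ip-range`, re-issue the certificate with the new first address.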

Copy the certificates to the apiservers (hdss7-21.host.com, hdss7-22.host.com)

- Go to /opt/kubernetes/server/bin and create the cert directory:

      cd /opt/kubernetes/server/bin
      mkdir cert

- Copy the certificates into /opt/kubernetes/server/bin/cert:

      cd cert
      scp hdss7-200:/opt/certs/apiserver-key.pem .
      scp hdss7-200:/opt/certs/apiserver.pem .
      scp hdss7-200:/opt/certs/ca-key.pem .
      scp hdss7-200:/opt/certs/ca.pem .
      scp hdss7-200:/opt/certs/client.pem .
      scp hdss7-200:/opt/certs/client-key.pem .

Create the kube-apiserver configuration files (hdss7-21.host.com, hdss7-22.host.com)

- Create the conf directory:

      mkdir /opt/kubernetes/server/bin/conf

- Create the audit policy file /opt/kubernetes/server/bin/conf/audit.yaml:

      apiVersion: audit.k8s.io/v1beta1 # This is required.
      kind: Policy
      # Don't generate audit events for all requests in RequestReceived stage.
      omitStages:
        - "RequestReceived"
      rules:
        # Log pod changes at RequestResponse level
        - level: RequestResponse
          resources:
          - group: ""
            # Resource "pods" doesn't match requests to any subresource of pods,
            # which is consistent with the RBAC policy.
            resources: ["pods"]
        # Log "pods/log", "pods/status" at Metadata level
        - level: Metadata
          resources:
          - group: ""
            resources: ["pods/log", "pods/status"]
        # Don't log requests to a configmap called "controller-leader"
        - level: None
          resources:
          - group: ""
            resources: ["configmaps"]
            resourceNames: ["controller-leader"]
        # Don't log watch requests by the "system:kube-proxy" on endpoints or services
        - level: None
          users: ["system:kube-proxy"]
          verbs: ["watch"]
          resources:
          - group: "" # core API group
            resources: ["endpoints", "services"]
        # Don't log authenticated requests to certain non-resource URL paths.
        - level: None
          userGroups: ["system:authenticated"]
          nonResourceURLs:
          - "/api*" # Wildcard matching.
          - "/version"
        # Log the request body of configmap changes in kube-system.
        - level: Request
          resources:
          - group: "" # core API group
            resources: ["configmaps"]
          # This rule only applies to resources in the "kube-system" namespace.
          # The empty string "" can be used to select non-namespaced resources.
          namespaces: ["kube-system"]
        # Log configmap and secret changes in all other namespaces at the Metadata level.
        - level: Metadata
          resources:
          - group: "" # core API group
            resources: ["secrets", "configmaps"]
        # Log all other resources in core and extensions at the Request level.
        - level: Request
          resources:
          - group: "" # core API group
          - group: "extensions" # Version of group should NOT be included.
        # A catch-all rule to log all other requests at the Metadata level.
        - level: Metadata
          # Long-running requests like watches that fall under this rule will not
          # generate an audit event in RequestReceived.
          omitStages:
            - "RequestReceived"

- Create the apiserver startup script /opt/kubernetes/server/bin/kube-apiserver.sh:

      #!/bin/bash
      ./kube-apiserver \
        --apiserver-count 2 \
        --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \
        --audit-policy-file ./conf/audit.yaml \
        --authorization-mode RBAC \
        --client-ca-file ./cert/ca.pem \
        --requestheader-client-ca-file ./cert/ca.pem \
        --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \
        --etcd-cafile ./cert/ca.pem \
        --etcd-certfile ./cert/client.pem \
        --etcd-keyfile ./cert/client-key.pem \
        --etcd-servers https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
        --service-account-key-file ./cert/ca-key.pem \
        --service-cluster-ip-range 192.168.0.0/16 \
        --service-node-port-range 3000-29999 \
        --target-ram-mb=1024 \
        --kubelet-client-certificate ./cert/client.pem \
        --kubelet-client-key ./cert/client-key.pem \
        --log-dir /data/logs/kubernetes/kube-apiserver \
        --tls-cert-file ./cert/apiserver.pem \
        --tls-private-key-file ./cert/apiserver-key.pem \
        --v 2

- Make the script executable and create the log directory:

      chmod +x /opt/kubernetes/server/bin/kube-apiserver.sh
      mkdir -p /data/logs/kubernetes/kube-apiserver

- Create the supervisor configuration /etc/supervisord.d/kube-apiserver.ini (on hdss7-22, name the program kube-apiserver-7-22):

      [program:kube-apiserver-7-21]
      command=/opt/kubernetes/server/bin/kube-apiserver.sh
      numprocs=1
      directory=/opt/kubernetes/server/bin
      autostart=true
      autorestart=true
      startsecs=30
      startretries=3
      exitcodes=0,2
      stopsignal=QUIT
      stopwaitsecs=10
      user=root
      redirect_stderr=true
      stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log
      stdout_logfile_maxbytes=64MB
      stdout_logfile_backups=4
      stdout_capture_maxbytes=1MB
      stdout_events_enabled=false

- Start the apiserver:

      supervisorctl update
      netstat -luntp | grep kube-api

  Once it has started, the apiserver listens on ports 8080 and 6443.
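The port check at the end of the previous step can be made scriptable, for example to retry until the apiserver is up. Below is a sketch. `ports_listening` is a hypothetical helper that reads `netstat -luntp` (or `ss -lnt`) output on stdin and succeeds only if every given port appears as a listening local address.

```shell
#!/bin/sh
# ports_listening PORT...: fail (and name the port) if any port is missing.
ports_listening() {
    out=$(cat)
    for port in "$@"; do
        if ! printf '%s\n' "$out" | grep -q ":$port "; then
            echo "port $port is not listening" >&2
            return 1
        fi
    done
}

# On hdss7-21 / hdss7-22:
#   netstat -luntp | ports_listening 6443 8080 && echo "kube-apiserver is up"
```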

Install the layer-4 reverse proxy for the control-plane nodes

- Install nginx on hdss7-11 and hdss7-12:

      yum install nginx -y

- Configure the reverse proxy by adding the following to /etc/nginx/nginx.conf, at the top level (outside the http block):

      stream {
          upstream kube-apiserver {
              server 10.4.7.21:6443     max_fails=3 fail_timeout=30s;
              server 10.4.7.22:6443     max_fails=3 fail_timeout=30s;
          }
          server {
              listen 7443;
              proxy_connect_timeout 2s;
              proxy_timeout 900s;
              proxy_pass kube-apiserver;
          }
      }

- Check the configuration and start nginx:

      nginx -t
      systemctl start nginx
      systemctl enable nginx
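The upstream list is the only part of the stream block that grows with the cluster. The apiserver certificate already lists 10.4.7.23, so a third apiserver there would only need one more `server` line. Below is a sketch that renders the block for any number of apiservers. `render_apiserver_stream` is hypothetical and reproduces the settings above. Its output is meant to be pasted into nginx.conf and checked with `nginx -t`.

```shell
#!/bin/sh
# render_apiserver_stream IP...: print the nginx stream block for these apiservers.
render_apiserver_stream() {
    echo 'stream {'
    echo '    upstream kube-apiserver {'
    for ip in "$@"; do
        echo "        server $ip:6443     max_fails=3 fail_timeout=30s;"
    done
    echo '    }'
    echo '    server {'
    echo '        listen 7443;'
    echo '        proxy_connect_timeout 2s;'
    echo '        proxy_timeout 900s;'
    echo '        proxy_pass kube-apiserver;'
    echo '    }'
    echo '}'
}

render_apiserver_stream 10.4.7.21 10.4.7.22
```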

Install keepalived (hdss7-11, hdss7-12)

Install keepalived

    yum install keepalived -y

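Create the port-check script /etc/keepalived/check_port.sh, which checks whether port 7443 is listening:

    #!/bin/bash
    # Exit non-zero when the given port has no listener (or no port is given),
    # so keepalived treats the check as failed.
    CHK_PORT=$1
    if [ -n "$CHK_PORT" ];then
        PORT_PROCESS=`ss -lnt|grep ":$CHK_PORT "|wc -l`
        if [ $PORT_PROCESS -eq 0 ];then
            echo "Port $CHK_PORT Is Not Used,End."
            exit 1
        fi
    else
        echo "Check Port Cant Be Empty!"
        exit 1
    fi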

Make it executable

    chmod +x /etc/keepalived/check_port.sh

Master keepalived configuration, /etc/keepalived/keepalived.conf (hdss7-11)

    ! Configuration File for keepalived

    global_defs {
       router_id 10.4.7.11
    }

    vrrp_script chk_nginx {
        script "/etc/keepalived/check_port.sh 7443"
        interval 2
        weight -20
    }

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 251
        priority 100
        advert_int 1
        mcast_src_ip 10.4.7.11
        nopreempt

        authentication {
            auth_type PASS
            auth_pass 11111111
        }
        track_script {
            chk_nginx
        }
        virtual_ipaddress {
            10.4.7.10
        }
    }

Backup keepalived configuration, /etc/keepalived/keepalived.conf (hdss7-12)

    ! Configuration File for keepalived

    global_defs {
       router_id 10.4.7.12
       script_user root
       enable_script_security
    }

    vrrp_script chk_nginx {
        script "/etc/keepalived/check_port.sh 7443"
        interval 2
        weight -20
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        virtual_router_id 251
        mcast_src_ip 10.4.7.12
        priority 90
        advert_int 1

        authentication {
            auth_type PASS
            auth_pass 11111111
        }
        track_script {
            chk_nginx
        }
        virtual_ipaddress {
            10.4.7.10
        }
    }
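Failover in these two configurations rests on simple arithmetic. While check_port.sh fails, keepalived adds the negative `weight` to the node's priority. On hdss7-11 that gives 100 - 20 = 80, which is below hdss7-12's 90, so hdss7-12 takes over 10.4.7.10. The sketch below models only that weight rule, not the rest of VRRP. `effective_priority` is a hypothetical helper.

```shell
#!/bin/sh
# effective_priority BASE WEIGHT CHECK_OK(yes|no):
# the vrrp_script weight applies only while the check is failing.
effective_priority() {
    if [ "$3" = yes ]; then
        echo "$1"
    else
        echo $(( $1 + $2 ))
    fi
}

master=$(effective_priority 100 -20 no)   # nginx stopped on hdss7-11
backup=$(effective_priority 90 -20 yes)   # hdss7-12 healthy
if [ "$backup" -gt "$master" ]; then
    echo "VIP moves to hdss7-12 ($backup > $master)"
fi
```

This also shows why the weight must be larger than the priority gap (20 > 100 - 90 = 10). A smaller weight would leave the VIP on hdss7-11 even with nginx down.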

Start keepalived

    systemctl start keepalived
    systemctl enable keepalived

Check that the VIP fails over

    nginx -s stop   # on hdss7-11
    ip addr         # on hdss7-11
    ip addr         # on hdss7-12

At this point the VIP has moved to hdss7-12.
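Rather than reading the full `ip addr` listing on each node, you can reduce the check to a yes/no answer. Below is a sketch. `has_vip` is a hypothetical helper that searches `ip addr` output on stdin for the VIP as a fixed string. The trailing slash keeps 10.4.7.100 from matching 10.4.7.10.

```shell
#!/bin/sh
# has_vip [VIP]: succeed if "ip addr" output on stdin carries the VIP (default 10.4.7.10).
has_vip() {
    grep -qF "inet ${1:-10.4.7.10}/"
}

# Run on each node after "nginx -s stop" on hdss7-11:
#   ip addr | has_vip && echo "$(hostname) holds the VIP"
```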