Analyzing Docker Bridge Networking Internals with Virtual Devices

Lab environment: CentOS 7.9, NAT network adapter 192.168.0.10, gateway 192.168.0.2

Creating the namespaces and veth pairs

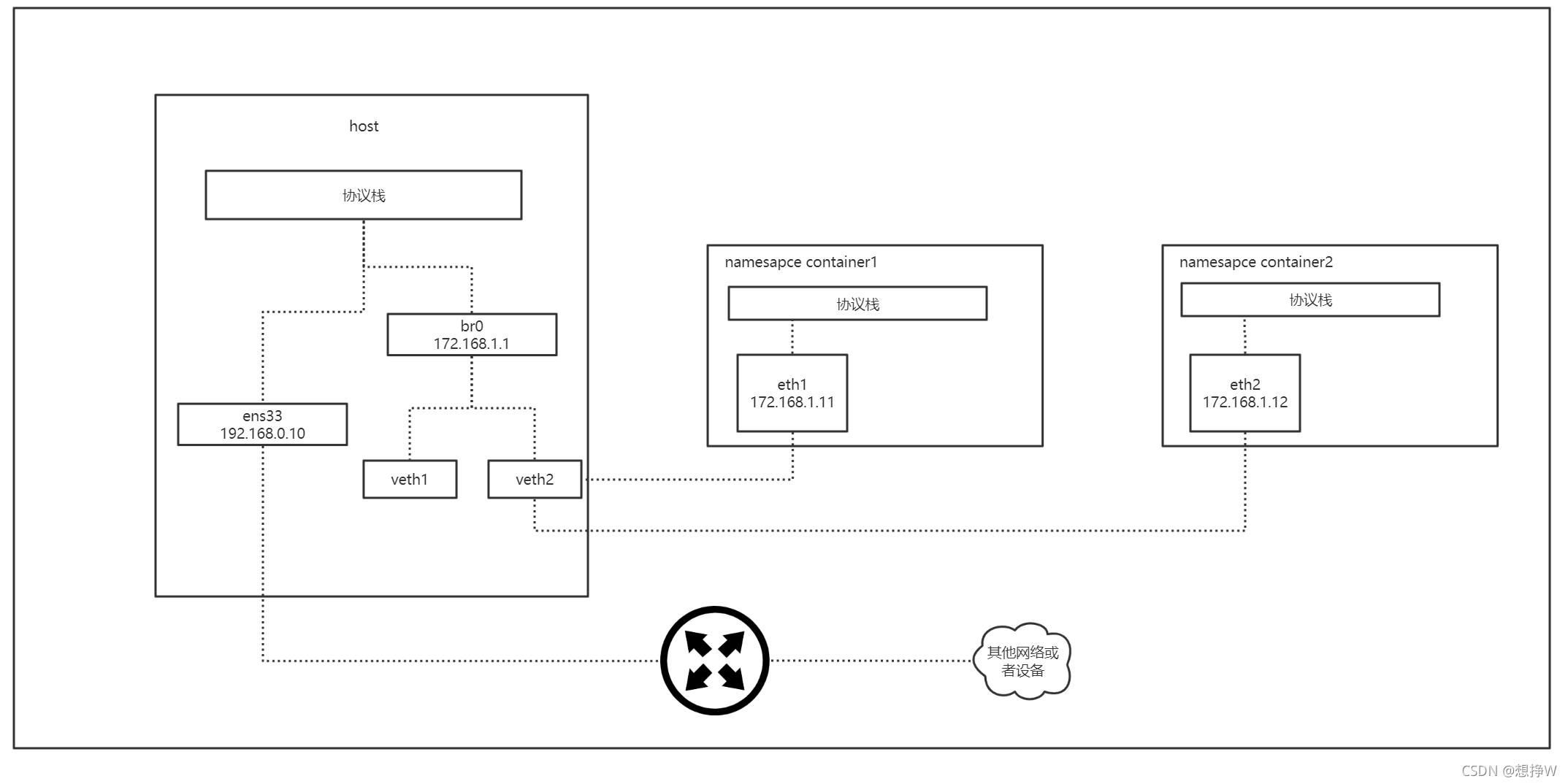

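When a Docker container's network is set to bridge mode, the container gets its own network namespace. A newly created network namespace contains only a lo interface, and that interface is in the DOWN state.

Docker then creates a pair of virtual Ethernet devices. Because they always exist in pairs, such a device is usually called a veth pair. Data sent into one end is received on the other end, much like a bidirectional pipe in Linux. Docker moves one end of the pair into the container's namespace and attaches the other end to docker0, the bridge that Docker itself creates.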

# Create two namespaces

ip netns add container1

ip netns add container2

# Add the veth devices

ip link add veth1 type veth peer name eth1

ip link add veth2 type veth peer name eth2

# Move ethX into the container namespaces

ip link set eth1 netns container1

ip link set eth2 netns container2

# Configure the ethX IP addresses

ip netns exec container1 ip addr add 172.168.1.11/24 dev eth1

ip netns exec container2 ip addr add 172.168.1.12/24 dev eth2

ip netns exec container1 ip link set eth1 up

ip netns exec container2 ip link set eth2 up

# Bring up the vethX devices

ip link set veth1 up

ip link set veth2 up

# Bring up lo, which is down by default in a new namespace; without lo up, container1 cannot ping its own address

ip netns exec container1 ip link set lo up

ip netns exec container2 ip link set lo up
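The manual steps above repeat the same pattern for each container, so they can be sketched as one function. This is only an illustration: the name `add_container` and its argument order are hypothetical, and it assumes the commands are run as root.

```shell
# Hypothetical helper: create one namespace plus its veth pair,
# mirroring the manual steps above.
# Usage: add_container NAMESPACE HOST_IF NS_IF ADDRESS/PREFIX
add_container() {
    ns=$1 host_if=$2 ns_if=$3 cidr=$4
    ip netns add "$ns" &&
    ip link add "$host_if" type veth peer name "$ns_if" &&
    ip link set "$ns_if" netns "$ns" &&
    ip netns exec "$ns" ip addr add "$cidr" dev "$ns_if" &&
    ip netns exec "$ns" ip link set "$ns_if" up &&
    ip netns exec "$ns" ip link set lo up &&
    ip link set "$host_if" up
}

# Example (run as root):
#   add_container container1 veth1 eth1 172.168.1.11/24
#   add_container container2 veth2 eth2 172.168.1.12/24
```

Chaining the commands with `&&` stops at the first failure, so a half-configured namespace is easier to spot.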

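# Network information on the physical host (captured after br0 below was configured, which is why br0 already appears)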

[root@boy ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c4:ad:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.10/24 brd 192.168.0.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::624c:c1db:e3b4:9165/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: veth1@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP group default qlen 1000

link/ether 8e:15:02:5b:1b:70 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::8c15:2ff:fe5b:1b70/64 scope link

valid_lft forever preferred_lft forever

6: veth2@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP group default qlen 1000

link/ether 6e:7b:d0:04:b4:1f brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::6c7b:d0ff:fe04:b41f/64 scope link

valid_lft forever preferred_lft forever

7: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 6e:7b:d0:04:b4:1f brd ff:ff:ff:ff:ff:ff

inet 172.168.1.1/24 scope global br0

valid_lft forever preferred_lft forever

inet6 fe80::6c7b:d0ff:fe04:b41f/64 scope link

valid_lft forever preferred_lft forever

# container1 network information

[root@boy ~]# ip netns exec container1 ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3: eth1@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c2:23:9b:67:b4:b5 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.168.1.11/24 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::c023:9bff:fe67:b4b5/64 scope link

valid_lft forever preferred_lft forever

# container2 network information

[root@boy ~]# ip netns exec container2 ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

5: eth2@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ba:ee:58:ec:45:52 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.168.1.12/24 scope global eth2

valid_lft forever preferred_lft forever

inet6 fe80::b8ee:58ff:feec:4552/64 scope link

valid_lft forever preferred_lft forever
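In the listings above, the `@ifN` suffix ties the two ends of each veth pair together: the host shows `4: veth1@if3` while container1 shows `3: eth1@if4`, so each end names the interface index of its peer. A small sketch for pulling that pairing out of `ip addr` or `ip -o link` output follows; the function name `veth_peers` is hypothetical.

```shell
# Print "NAME PEER_IFINDEX" for every "name@ifN" entry read from stdin.
# Lines that do not describe such an interface are ignored.
veth_peers() {
    sed -n 's/^[0-9][0-9]*: \([^@:]*\)@if\([0-9][0-9]*\):.*/\1 \2/p'
}

# Example:
#   ip -o link | veth_peers
#   ip netns exec container1 ip -o link | veth_peers
```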

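Adding the bridge

A bridge is a virtual device used to connect network devices together. It is the equivalent of a physical switch, and network interfaces can be attached to it. Next, attach the two virtual interfaces left in the default namespace (veth1 and veth2) to a bridge we create ourselves.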

# Create the bridge

ip link add br0 type bridge

ip link set br0 up

# Attach veth1 and veth2 to br0

ip link set veth1 master br0

ip link set veth2 master br0

# Show the interfaces attached to the bridge

[root@boy ~]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.6e7bd004b41f no veth1

veth2
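`brctl` comes from the bridge-utils package and may not be installed everywhere. As an alternative sketch, the same port list can be read with iproute2's `ip link show master`; the wrapper name `bridge_ports` is hypothetical.

```shell
# List the interfaces attached to a bridge using iproute2 instead of brctl.
# Strips the leading index and any "@ifN" suffix, leaving one name per line.
bridge_ports() {
    ip -o link show master "$1" | sed 's/^[0-9][0-9]*: \([^@:]*\).*/\1/'
}

# Example:
#   bridge_ports br0
```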

# Assign an IP address to br0

ip addr add 172.168.1.1/24 dev br0

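Adding routes

# Add a route that sends all traffic for 172.168.1.0/24 to br0 (assigning the address to br0 above normally creates this connected route already, so this command may be redundant)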

route add -net 172.168.1.0/24 dev br0

# Add a default route inside each container

ip netns exec container1 ip route add default via 172.168.1.1 dev eth1

ip netns exec container2 ip route add default via 172.168.1.1 dev eth2

===========

# Show the host routing table

[root@boy ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.0.2 0.0.0.0 UG 100 0 0 ens33

172.168.1.0 0.0.0.0 255.255.255.0 U 0 0 0 br0

192.168.0.0 0.0.0.0 255.255.255.0 U 100 0 0 ens33

# Show the container1 routing table

[root@boy ~]# ip netns exec container1 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.168.1.1 0.0.0.0 UG 0 0 0 eth1

172.168.1.0 0.0.0.0 255.255.255.0 U 0 0 0 eth1

# Show the container2 routing table

[root@boy ~]# ip netns exec container2 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.168.1.1 0.0.0.0 UG 0 0 0 eth2

172.168.1.0 0.0.0.0 255.255.255.0 U 0 0 0 eth2

# Host pings container1

[root@boy ~]# ping 172.168.1.11

PING 172.168.1.11 (172.168.1.11) 56(84) bytes of data.

64 bytes from 172.168.1.11: icmp_seq=1 ttl=64 time=0.021 ms

64 bytes from 172.168.1.11: icmp_seq=2 ttl=64 time=0.046 ms

^C

--- 172.168.1.11 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1009ms

rtt min/avg/max/mdev = 0.021/0.033/0.046/0.013 ms

# container1 pings container2

[root@boy ~]# ip netns exec container1 ping 172.168.1.12

PING 172.168.1.12 (172.168.1.12) 56(84) bytes of data.

64 bytes from 172.168.1.12: icmp_seq=1 ttl=64 time=0.043 ms

64 bytes from 172.168.1.12: icmp_seq=2 ttl=64 time=0.083 ms

^C

--- 172.168.1.12 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1004ms

rtt min/avg/max/mdev = 0.043/0.063/0.083/0.020 ms

# container1 pings itself (this fails if lo is not up)

[root@boy ~]# ip netns exec container1 ping 172.168.1.11

PING 172.168.1.11 (172.168.1.11) 56(84) bytes of data.

64 bytes from 172.168.1.11: icmp_seq=1 ttl=64 time=0.013 ms

^C

--- 172.168.1.11 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.013/0.013/0.013/0.000 ms

# container1 pings the gateway

[root@boy ~]# ip netns exec container1 ping 172.168.1.1

PING 172.168.1.1 (172.168.1.1) 56(84) bytes of data.

64 bytes from 172.168.1.1: icmp_seq=1 ttl=64 time=0.029 ms

^C

--- 172.168.1.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0

# container1 pings ens33

[root@boy ~]# ip netns exec container1 ping 192.168.0.10

PING 192.168.0.10 (192.168.0.10) 56(84) bytes of data.

64 bytes from 192.168.0.10: icmp_seq=1 ttl=64 time=0.026 ms

64 bytes from 192.168.0.10: icmp_seq=2 ttl=64 time=0.091 ms

^C

--- 192.168.0.10 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1005ms

rtt min/avg/max/mdev = 0.026/0.058/0.091/0.033 ms

Analyzing traffic leaving the containers

# With ip_forward disabled

[root@boy ~]# ip netns exec container1 ping 192.168.0.2

PING 192.168.0.2 (192.168.0.2) 56(84) bytes of data.

^C

--- 192.168.0.2 ping statistics ---

2 packets transmitted, 0 received, 100% packet loss, time 1012ms

# Capture on ens33; no ICMP packets show up

[root@boy ~]# tcpdump -i ens33 -n -p icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
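The walkthrough does not show the command used to switch forwarding on. One common way, sketched here as an assumption about what was run, is the `net.ipv4.ip_forward` sysctl. The function names are hypothetical, and the optional path argument exists only so the check can be pointed at a different file.

```shell
# Print "enabled" or "disabled" for IPv4 forwarding.
# Reads /proc/sys/net/ipv4/ip_forward unless another path is given.
ip_forward_state() {
    if [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}")" = "1" ]; then
        echo enabled
    else
        echo disabled
    fi
}

# Turn forwarding on for the running kernel (root required, lost on reboot).
enable_ip_forward() {
    sysctl -w net.ipv4.ip_forward=1
}

# Example (run as root):
#   ip_forward_state
#   enable_ip_forward
```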

# With ip_forward enabled, the network stack forwards the ICMP request out through ens33

[root@boy ~]# ip netns exec container1 ping 192.168.0.2

PING 192.168.0.2 (192.168.0.2) 56(84) bytes of data.

^C

--- 192.168.0.2 ping statistics ---

44 packets transmitted, 0 received, 100% packet loss, time 43254ms

# Capture on ens33

[root@boy ~]# tcpdump -i ens33 -n -p icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens33, link-type EN10MB (Ethernet), capture size 262144 bytes

05:53:20.167099 IP 172.168.1.11 > 192.168.0.2: ICMP echo request, id 2505, seq 39, length 64

05:53:21.168456 IP 172.168.1.11 > 192.168.0.2: ICMP echo request, id 2505, seq 40, length 64

05:53:22.171285 IP 172.168.1.11 > 192.168.0.2: ICMP echo request, id 2505, seq 41, length 64

05:53:23.171907 IP 172.168.1.11 > 192.168.0.2: ICMP echo request, id 2505, seq 42, length 64

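The capture shows that once ip_forward is enabled, the ICMP request is forwarded out through ens33. So why is there no ICMP reply? The request reaches the gateway with source address 172.168.1.11 and destination address 192.168.0.2, so the reply the gateway builds is addressed to 172.168.1.11. The gateway has no route that leads back to that subnet through this host, so the reply never shows up on ens33.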
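The missing reply comes down to a routing decision on the gateway. As a worked illustration, the sketch below checks subnet membership with plain shell arithmetic. The helper names `ip_to_int` and `in_subnet` are hypothetical, and it is an assumption that the gateway only has a connected route for 192.168.0.0/24.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into four fields
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_subnet IP NETWORK PREFIXLEN
# Succeeds when IP lies inside NETWORK/PREFIXLEN.
in_subnet() {
    mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "$2") & $mask )) ]
}

# The container address is outside the gateway's connected subnet
# (assumed to be 192.168.0.0/24) ...
in_subnet 172.168.1.11 192.168.0.0 24 || echo "172.168.1.11 is not on 192.168.0.0/24"
# ... while the host's ens33 address is inside it.
in_subnet 192.168.0.10 192.168.0.0 24 && echo "192.168.0.10 is on 192.168.0.0/24"
```

Under that assumption the gateway can answer 192.168.0.10 directly, but it has no matching route back to 172.168.1.11 through this host. Rewriting the source address to the ens33 address avoids the problem.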

Adding MASQUERADE

# Current iptables rules on this machine

[root@boy ~]# iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain FORWARD (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

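iptables has five built-in chains. The FORWARD chain handles packets that are being forwarded to other machines or network namespaces. Its default policy here is ACCEPT, so packets the host forwards for the containers, such as those from container1 to container2, are let through. If the policy on your machine is DROP, switch it to ACCEPT: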

iptables -t filter --policy FORWARD ACCEPT

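# Add MASQUERADE. For packets from 172.168.1.0/24 that leave through ens33 (that is, packets headed for other machines), MASQUERADE rewrites the source address to the address of ens33 and leaves the destination address unchanged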

iptables -t nat -A POSTROUTING -s 172.168.1.0/24 -o ens33 -j MASQUERADE

# Show the nat table

[root@boy ~]# iptables -t nat -L -n

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.168.1.0/24 0.0.0.0/0

# container1 pings 114.114.114.114

[root@boy ~]# ip netns exec container1 ping 114.114.114.114

PING 114.114.114.114 (114.114.114.114) 56(84) bytes of data.

64 bytes from 114.114.114.114: icmp_seq=1 ttl=127 time=31.2 ms

64 bytes from 114.114.114.114: icmp_seq=2 ttl=127 time=40.6 ms

64 bytes from 114.114.114.114: icmp_seq=3 ttl=127 time=41.2 ms

# Traffic from container1 can now reach the outside
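Running the `-A POSTROUTING` command more than once appends duplicate rules. A minimal sketch of an idempotent variant follows, using `iptables -C` to check for the rule first; the function name `ensure_masquerade` is hypothetical.

```shell
# Add the MASQUERADE rule only if an identical rule is not already present.
# `iptables -C` returns non-zero when the rule is missing.
# Usage: ensure_masquerade SUBNET OUT_INTERFACE
ensure_masquerade() {
    subnet=$1 out_if=$2
    iptables -t nat -C POSTROUTING -s "$subnet" -o "$out_if" -j MASQUERADE 2>/dev/null ||
    iptables -t nat -A POSTROUTING -s "$subnet" -o "$out_if" -j MASQUERADE
}

# Example (run as root):
#   ensure_masquerade 172.168.1.0/24 ens33
```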

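Adding DNAT

# Start a listener on port 80 in container1 and container2 (each server stays in the foreground, so run them in separate terminals)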

ip netns exec container1 python3 -m http.server 80

ip netns exec container2 python3 -m http.server 80

# Check that both respond

[root@boy ~]# curl -I http://172.168.1.11

HTTP/1.0 200 OK

Server: SimpleHTTP/0.6 Python/3.6.8

Date: Fri, 27 Aug 2021 14:02:03 GMT

Content-type: text/html; charset=utf-8

Content-Length: 715

[root@boy ~]# curl -I http://172.168.1.12

HTTP/1.0 200 OK

Server: SimpleHTTP/0.6 Python/3.6.8

Date: Fri, 27 Aug 2021 14:02:11 GMT

Content-type: text/html; charset=utf-8

Content-Length: 715

# Add the DNAT rules

iptables -t nat -A PREROUTING -p tcp --dport 8888 -j DNAT --to-destination 172.168.1.11:80

iptables -t nat -A PREROUTING -p tcp --dport 9999 -j DNAT --to-destination 172.168.1.12:80

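# Test the rules from another machine. Running curl http://192.168.0.10:8888 inside the VM itself does not work: locally generated traffic goes through lo and the nat OUTPUT chain rather than PREROUTING, so the DNAT rules never match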

C:\Users\xxxx>tcping 192.168.0.10 9999

Probing 192.168.0.10:9999/tcp - Port is open - time=1.914ms

Probing 192.168.0.10:9999/tcp - Port is open - time=1.118ms

Control-C

C:\Users\JiaYu>tcping 192.168.0.10 8888

Probing 192.168.0.10:8888/tcp - Port is open - time=1.186ms

Control-C

# Traffic seen on lo

[root@boy ~]# curl -I http://192.168.0.10:8888

curl: (7) Failed connect to 192.168.0.10:8888; Connection refused

[root@boy ~]# tcpdump -i lo -n

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on lo, link-type EN10MB (Ethernet), capture size 262144 bytes

10:30:58.163745 IP 192.168.0.10.46652 > 192.168.0.10.ddi-tcp-1: Flags [S], seq 319318494, win 43690, options [mss 65495,sackOK,TS val 3112213 ecr 0,nop,wscale 7], length 0

10:30:58.163755 IP 192.168.0.10.ddi-tcp-1 > 192.168.0.10.46652: Flags [R.], seq 0, ack 319318495, win 0, length 0
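The reset above happens because connections that originate on the host never pass through PREROUTING. A sketch of one way to cover that case is a matching DNAT rule in the nat OUTPUT chain. This is not part of the original experiment; the function name `add_local_dnat` is hypothetical and the rule has not been verified on this setup.

```shell
# Hypothetical helper: DNAT for connections that originate on the host itself.
# Locally generated packets traverse the nat OUTPUT chain, not PREROUTING.
# Usage: add_local_dnat HOST_IP HOST_PORT TARGET_IP:TARGET_PORT
add_local_dnat() {
    host_ip=$1 host_port=$2 target=$3
    iptables -t nat -A OUTPUT -p tcp -d "$host_ip" --dport "$host_port" \
        -j DNAT --to-destination "$target"
}

# Example (run as root):
#   add_local_dnat 192.168.0.10 8888 172.168.1.11:80
```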

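With that, both inbound and outbound traffic on a Docker-style bridge have been reproduced by hand. Hopefully this experiment helps readers better understand how Docker's underlying network communication works.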
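To return the host to its starting state, the pieces can be removed in reverse order. The sketch below assumes the names and rules used in this walkthrough; the function name `teardown_lab` is hypothetical. Stop the two HTTP servers first, since a namespace stays alive while processes are still running in it.

```shell
# Remove the NAT rules, namespaces and bridge created in this walkthrough.
# Deleting a namespace destroys the veth end inside it, which also removes
# the peer end on the host.
teardown_lab() {
    iptables -t nat -D PREROUTING -p tcp --dport 8888 -j DNAT --to-destination 172.168.1.11:80
    iptables -t nat -D PREROUTING -p tcp --dport 9999 -j DNAT --to-destination 172.168.1.12:80
    iptables -t nat -D POSTROUTING -s 172.168.1.0/24 -o ens33 -j MASQUERADE
    ip netns del container1
    ip netns del container2
    ip link del br0
}

# Example (run as root):
#   teardown_lab
```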