
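I. Nova Compute Service

1.1 Nova Compute Service Overview

- The compute service is one of OpenStack's core services. It maintains and manages the compute resources of the cloud environment, and its project code name is nova.

- Nova itself provides no virtualization capability. It provides the compute service and uses different virtualization drivers to talk to the supported underlying hypervisors (virtual machine managers). Nova schedules and manages the whole lifecycle of every compute instance (virtual server): start, suspend, stop, delete, and so on.

- Nova relies on other services such as Keystone, Glance, Neutron, Cinder and Swift, and integrates with them to provide features such as encrypted disks and bare-metal compute instances.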

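1.2 Nova System Architecture

Nova consists of several server processes, each performing a different function:

- DB: the SQL database used for data storage

- API: the nova component that receives HTTP requests, converts commands, and communicates with other components through the message queue or over HTTP

- Scheduler: the nova scheduler that decides which compute node hosts an instance

- Network: the legacy nova network component that manages IP forwarding, bridges and VLANs

- Compute: the nova compute service that manages communication between the hypervisor and its virtual machines

- Conductor: handles requests that need coordination (such as building or resizing a virtual machine) and performs object conversion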
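On a running deployment, the RPC-driven services from this list (nova-scheduler, nova-conductor, nova-compute) register themselves and can be listed with `openstack compute service list`. The helper below is a hypothetical sketch (`summarize_nova_services` is not part of OpenStack) that counts, per binary, how many instances report `up` or `down`, assuming the CLI output is requested as plain values for the Binary, Host and State columns.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarize nova service states per binary.
# Expected input: lines of "<binary> <host> <state>", e.g. produced by
#   openstack compute service list -f value -c Binary -c Host -c State
summarize_nova_services() {
  awk '
    NF >= 3 {
      if ($3 == "up") up[$1]++; else down[$1]++
      seen[$1] = 1
    }
    END {
      for (b in seen) printf "%s up=%d down=%d\n", b, up[b] + 0, down[b] + 0
    }
  ' | LC_ALL=C sort
}

# Usage on the controller (admin credentials sourced):
# openstack compute service list -f value -c Binary -c Host -c State | summarize_nova_services
```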

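II. Nova Component: API

- The API is the HTTP interface through which clients access nova. It is implemented by the nova-api service, which receives and responds to compute API requests from end users. As OpenStack's primary external service interface, nova-api provides a single central endpoint from which all APIs can be queried.

- Every request to nova is handled first by nova-api. The API offers standard REST calls, which makes integration with third-party systems easy.

- End users do not normally send RESTful requests directly; they use these APIs through the openstack command line, the dashboard, and other components that need to interact with nova.

- nova-api can respond to any operation related to the virtual machine lifecycle.

- nova-api processes each HTTP API request it receives as follows:

① Checks that the parameters passed by the client are valid

② Calls other nova services to handle the client's HTTP request

③ Formats the results returned by the other nova sub-services and returns them to the client

- nova-api is the only way for external parties to access and use the services nova provides, and it is the intermediate layer between clients and nova.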
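To see this API layer without the CLI in front of it, a request can be sent straight to nova-api with a Keystone token. This is a minimal sketch assuming admin credentials are already sourced in the shell and that the `http://ct:8774/v2.1` endpoint from section 6.3.3 is registered; `nova_api_get` and `NOVA_ENDPOINT` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: GET a Nova API path using a token from Keystone.
# Assumes OS_* credentials are already exported in the current shell.
NOVA_ENDPOINT="${NOVA_ENDPOINT:-http://ct:8774/v2.1}"

nova_api_get() {
  local path="$1" token
  # Ask Keystone for a token; print only its ID.
  token=$(openstack token issue -f value -c id) || return 1
  # nova-api validates the token, dispatches the request and returns JSON.
  curl -s -H "X-Auth-Token: ${token}" -H "Accept: application/json" \
    "${NOVA_ENDPOINT%/}/${path#/}"
}

# Usage: list the instances visible to the current project
# nova_api_get /servers
```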

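III. Nova Component: Scheduler

3.1 The Scheduler

- The scheduler is implemented by the nova-scheduler service. Its main job is to decide on which node an instance should be started. It can apply a variety of rules, taking into account factors such as memory usage, CPU load and CPU architecture, and uses an algorithm to determine which compute server a virtual machine instance should run on. nova-scheduler receives a virtual machine instance request from the queue, reads the database, and selects the most suitable compute node from the pool of available resources on which to create the new instance.

- When creating an instance, the user states resource requirements such as how much CPU, memory and disk are needed. OpenStack defines these requirements in instance types (flavors), so the user only needs to specify which instance type to use.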

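3.2 Nova Scheduler Types

- Chance scheduler: randomly selects one of the nodes on which the nova-compute service is running normally

- Filter scheduler: selects the best compute node according to the specified filter conditions and weights

- Caching scheduler: a special kind of filter scheduler. On top of filter scheduling, it caches host resource information in local memory and uses a periodic background task to refresh that information from the database.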

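3.3 Filters of the Filter Scheduler

- RetryFilter

Its main job is to filter out nodes that have already been tried. For example, suppose A, B and C all pass the filters and A, having the highest weight, is selected, but the operation fails on A for some reason. The scheduler then runs the filtering again, and this time RetryFilter excludes A directly so that the request does not fail there again.

- AvailabilityZoneFilter

To improve disaster tolerance and provide isolation, compute nodes can be divided into different availability zones. OpenStack has a default availability zone named nova, and all compute nodes are initially placed in it; users can create their own availability zones as needed. When creating an instance, the user specifies which availability zone to deploy it in, and when nova-scheduler runs the filters, AvailabilityZoneFilter removes the compute nodes that do not belong to that zone.

- ServerGroupAntiAffinityFilter (server group anti-affinity filter)

Requires the instances of a server group to be placed on different compute nodes.

- ServerGroupAffinityFilter (server group affinity filter)

The opposite of the anti-affinity filter: it places the instances of a server group on the same compute node.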
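The filter-then-weigh flow described above can be imitated in a few lines. This is a toy sketch, not Nova's code: the host list, zones, free-RAM figures and the `pick_host` name are all made up for illustration. It applies a RetryFilter-like step (skip hosts already tried), an AvailabilityZoneFilter-like step (keep only hosts in the requested zone) and a RAM check, then weighs the survivors by free RAM and returns the best one.

```bash
#!/usr/bin/env bash
# Toy filter scheduler (hypothetical data and names).
# Each host record: "<name> <availability_zone> <free_ram_mb>"
HOSTS=(
  "c1 nova 2048"
  "c2 nova 8192"
  "c3 az-backup 16384"
)

# pick_host <requested_zone> <flavor_ram_mb> [already_tried_host...]
pick_host() {
  local zone="$1" need_ram="$2"; shift 2
  local tried=" $* " best="" best_ram=-1 name az ram rec
  for rec in "${HOSTS[@]}"; do
    read -r name az ram <<< "$rec"
    # RetryFilter-like: drop hosts where this request already failed
    [[ "$tried" == *" $name "* ]] && continue
    # AvailabilityZoneFilter-like: drop hosts outside the requested zone
    [[ "$az" != "$zone" ]] && continue
    # Resource check: drop hosts without enough free RAM for the flavor
    (( ram < need_ram )) && continue
    # Weighing: prefer the host with the most free RAM
    if (( ram > best_ram )); then best="$name"; best_ram=$ram; fi
  done
  [[ -n "$best" ]] || { echo "No valid host was found" >&2; return 1; }
  echo "$best"
}
```

With this data, `pick_host nova 1024` chooses c2; after a failure on c2, `pick_host nova 1024 c2` falls back to c1, mirroring the RetryFilter example in the text.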

IV. Nova Component: Compute

V. Deploying the OpenStack Placement Component

5.1 Create the database and database user

[root@ct ~]# mysql -uroot -p123456

MariaDB [(none)]> create database placement;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit
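The interactive session above can also be run non-interactively, which helps when the same pattern is repeated for the Nova databases later. A minimal sketch: `db_grant_sql` is a hypothetical helper that prints the same statements for a given database, user and password (with `IF NOT EXISTS` added so a re-run does not fail), and the output is piped into the `mysql` client with the root password used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print CREATE DATABASE + GRANT statements
# for one database/user pair, mirroring the interactive session above.
# db_grant_sql <database> <user> <password>
db_grant_sql() {
  local db="$1" user="$2" pass="$3"
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
FLUSH PRIVILEGES;
EOF
}

# Usage on ct:
# db_grant_sql placement placement PLACEMENT_DBPASS | mysql -uroot -p123456
```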

5.2 Create the Placement service user and API endpoints

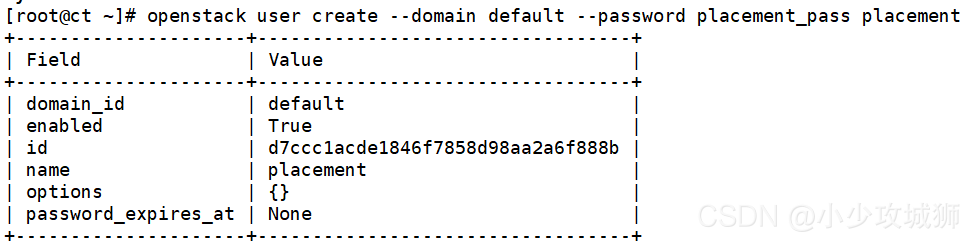

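5.2.1 Create the placement user

[root@ct ~]# openstack user create --domain default --password PLACEMENT_PASS placement

- The password must match the `password = PLACEMENT_PASS` set for the placement user in placement.conf and in the [placement] section of nova.conf below.

5.2.2 Give the placement user the admin role on the service project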

[root@ct ~]# openstack role add --project service --user placement admin

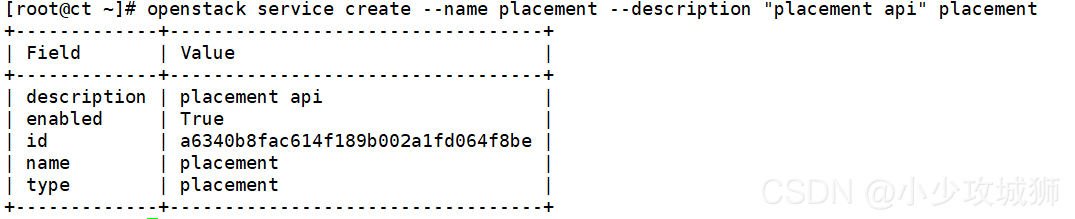

5.2.3 Create a placement service with the service type placement

[root@ct ~]# openstack service create --name placement --description "placement api" placement

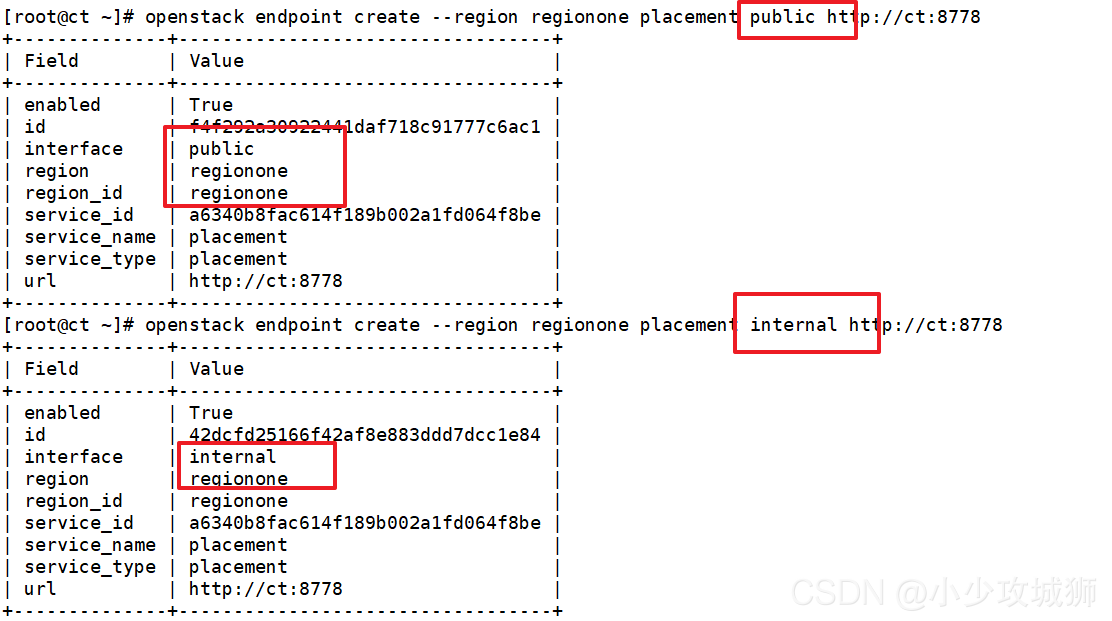

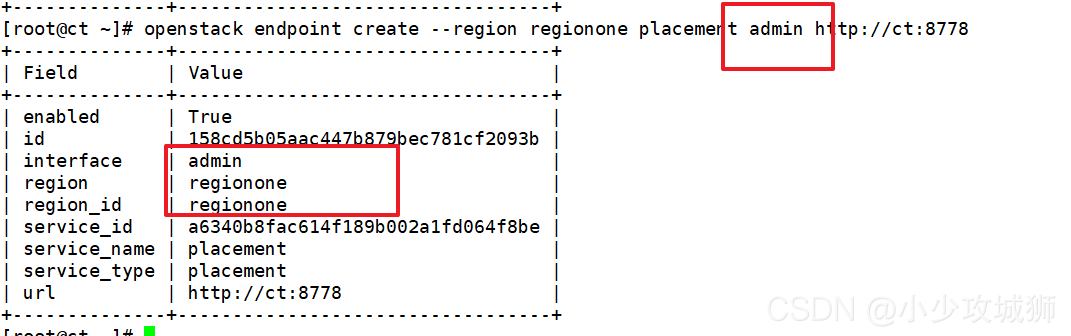

5.2.4 Register the API endpoints for the placement service

- The registered information is written to MySQL.

[root@ct ~]# openstack endpoint create --region regionone placement public http://ct:8778

[root@ct ~]# openstack endpoint create --region regionone placement internal http://ct:8778

[root@ct ~]# openstack endpoint create --region regionone placement admin http://ct:8778
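The three endpoint registrations differ only in the interface name, so they can be written as a loop. A sketch assuming the same region and URL as above; `register_endpoints` is a hypothetical wrapper around the `openstack endpoint create` command used here, and the same call works for Nova by passing `compute` and `http://ct:8774/v2.1`.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: register public/internal/admin endpoints for one service.
# register_endpoints <region> <service_type> <url>
register_endpoints() {
  local region="$1" service="$2" url="$3" iface
  for iface in public internal admin; do
    # Stop at the first failure instead of leaving a partial set silently
    openstack endpoint create --region "$region" "$service" "$iface" "$url" || return 1
  done
}

# Usage on ct (admin credentials sourced):
# register_endpoints regionone placement http://ct:8778
```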

5.2.5 Install the placement service

[root@ct ~]# yum -y install openstack-placement-api

5.2.6 Edit the placement configuration file

- Back up the configuration file and strip comments and blank lines

[root@ct ~]# cp /etc/placement/placement.conf{,.bak}

[root@ct ~]# grep -Ev '^$|#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

- Set the parameters

openstack-config --set /etc/placement/placement.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

openstack-config --set /etc/placement/placement.conf api auth_strategy keystone

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_url http://ct:5000/v3

openstack-config --set /etc/placement/placement.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_type password

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_name service

openstack-config --set /etc/placement/placement.conf keystone_authtoken username placement

openstack-config --set /etc/placement/placement.conf keystone_authtoken password PLACEMENT_PASS
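The repeated `openstack-config --set` calls can also be driven from a list of key/value pairs. A sketch only: `set_options` is a hypothetical helper, and it assumes the `openstack-config` tool used above is installed; values are split at the first `=`, so values that themselves contain `=` are kept intact.

```bash
#!/usr/bin/env bash
# Hypothetical helper: apply "key=value" pairs to one section of an INI file.
# set_options <file> <section> key=value [key=value ...]
set_options() {
  local file="$1" section="$2" pair; shift 2
  for pair in "$@"; do
    # Key = text before the first "=", value = everything after it
    openstack-config --set "$file" "$section" "${pair%%=*}" "${pair#*=}" || return 1
  done
}

# Usage: the [keystone_authtoken] settings from above in one call
# set_options /etc/placement/placement.conf keystone_authtoken \
#   auth_url=http://ct:5000/v3 memcached_servers=ct:11211 auth_type=password \
#   project_domain_name=Default user_domain_name=Default project_name=service \
#   username=placement password=PLACEMENT_PASS
```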

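- View the configuration file (the text after `#` on some lines is the author's annotation, not part of the generated file)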

[root@ct ~]# cd /etc/placement/

[root@ct placement]# cat placement.conf

[DEFAULT]

[api]

auth_strategy = keystone

[cors]

[keystone_authtoken]

auth_url = http://ct:5000/v3 #Keystone address

memcached_servers = ct:11211 #session information is cached in memcached

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = PLACEMENT_PASS

[oslo_policy]

[placement]

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

[profiler]

5.2.7 Populate the database

[root@ct placement]# su -s /bin/sh -c "placement-manage db sync" placement

5.2.8 Edit the Apache configuration file

- This virtual-host file is created automatically when the placement service is installed

[root@ct ~]# cd /etc/httpd/conf.d/

[root@ct conf.d]# vim 00-placement-api.conf

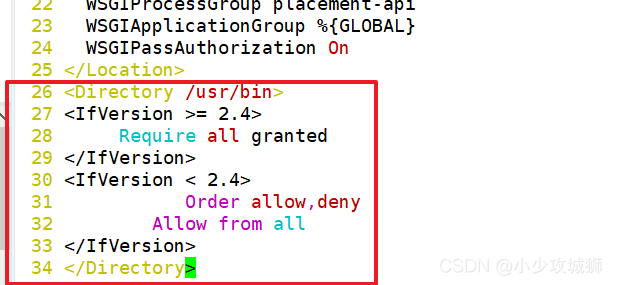

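- The packaged file has a known bug: unless Apache is allowed to access the /usr/bin directory, /usr/bin/placement-api cannot be reached and requests to the placement API return 403. Append the following block to the end of the file. It selects the access rule by Apache version. Apache does not accept a comment on the same line as a directive, so keep any notes on lines of their own.

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>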

5.2.9 Restart Apache

[root@ct conf.d]# systemctl restart httpd

5.2.10 Test

- Access the API with curl

[root@ct placement]# curl ct:8778

{"versions": [{"status": "CURRENT", "min_version": "1.0", "max_version": "1.36", "id": "v1.0", "links": [{"href": "", "rel": "self"}]}]}

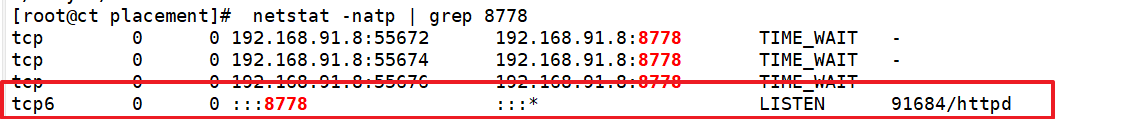
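In scripts it is often enough to pull the supported microversion range out of this version document. A small sketch assuming the compact JSON shape shown above; `placement_max_version` is a hypothetical name, and a JSON tool such as `jq` would be more robust where it is installed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the max_version field of the Placement
# version document read from stdin, using sed only.
placement_max_version() {
  sed -n 's/.*"max_version": *"\([^"]*\)".*/\1/p'
}

# Usage on ct:
# curl -s http://ct:8778 | placement_max_version
```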

- Check that port 8778 is listening

[root@ct placement]# netstat -natp | grep 8778

- Check the placement status

[root@ct placement]# placement-status upgrade check

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+
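Because the check prints a table, a deployment script has to look at the `Result:` rows. A sketch assuming the table layout shown above; `all_checks_passed` is a hypothetical helper. Where available, the command's exit status (0 when every check succeeds, following the oslo.upgradecheck convention) is the simpler thing to test.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every "Result:" row in the
# upgrade-check table read from stdin says Success.
all_checks_passed() {
  awk '
    /Result:/ {
      total++
      if ($0 ~ /Result: *Success/) ok++
    }
    # Exit 0 only when at least one check was seen and all of them passed
    END { exit !(total > 0 && ok == total) }
  '
}

# Usage on ct:
# placement-status upgrade check | all_checks_passed && echo "placement OK"
```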

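VI. Deploying the OpenStack Nova Component

6.1 Where the nova components are deployed

6.1.1 Controller node ct

nova-api ##main nova service

nova-scheduler ##nova scheduling service

nova-conductor ##database access proxy; other nova services reach the database through it

nova-novncproxy ##nova VNC proxy, provides the instance console

6.1.2 Compute nodes c1 and c2

nova-compute ###nova compute service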
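This split maps directly onto systemd units. A sketch: `nova_units_for_role` is a hypothetical helper; the controller unit names are the ones enabled at the end of this section, while `openstack-nova-compute.service` for c1/c2 is an assumption, since the compute nodes are configured outside this section.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the nova systemd units for a node role,
# following the ct (controller) / c1, c2 (compute) layout above.
nova_units_for_role() {
  case "$1" in
    controller)
      printf '%s\n' openstack-nova-api.service openstack-nova-scheduler.service \
        openstack-nova-conductor.service openstack-nova-novncproxy.service ;;
    compute)
      printf '%s\n' openstack-nova-compute.service ;;
    *)
      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Usage on ct:
# systemctl enable $(nova_units_for_role controller)
```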

6.2 Nova service configuration on the controller node

6.2.1 Create the nova databases and grant privileges

[root@ct ~]# mysql -uroot -p

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

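6.3 Create the nova user and service

6.3.1 Create the nova user (the password must match `password` under [keystone_authtoken] in nova.conf)

[root@ct ~]# openstack user create --domain default --password NOVA_PASS nova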

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 41510ba2d615441188b48641e46e92b9 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

### Add the nova user to the service project with the admin role

[root@ct ~]# openstack role add --project service --user nova admin

6.3.2 Create the nova service

[root@ct ~]# openstack service create --name nova --description "openstack compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | openstack compute |

| enabled | True |

| id | f08b6ab959bb4f1a9b6d1f13cec8d7e6 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

6.3.3 Associate endpoints with the nova service

[root@ct ~]# openstack endpoint create --region regionone compute public http://ct:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 527c4d4c46944ca6a53cd0f7a4d798aa |

| interface | public |

| region | regionone |

| region_id | regionone |

| service_id | f08b6ab959bb4f1a9b6d1f13cec8d7e6 |

| service_name | nova |

| service_type | compute |

| url | http://ct:8774/v2.1 |

+--------------+----------------------------------+

[root@ct ~]# openstack endpoint create --region regionone compute internal http://ct:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 16eacd1ae4a24c40976474263b492086 |

| interface | internal |

| region | regionone |

| region_id | regionone |

| service_id | f08b6ab959bb4f1a9b6d1f13cec8d7e6 |

| service_name | nova |

| service_type | compute |

| url | http://ct:8774/v2.1 |

+--------------+----------------------------------+

[root@ct ~]# openstack endpoint create --region regionone compute admin http://ct:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | f25f5d81e7d741bbb17c913d9bec141d |

| interface | admin |

| region | regionone |

| region_id | regionone |

| service_id | f08b6ab959bb4f1a9b6d1f13cec8d7e6 |

| service_name | nova |

| service_type | compute |

| url | http://ct:8774/v2.1 |

+--------------+----------------------------------+
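After the three `endpoint create` calls, it is worth confirming that exactly one endpoint per interface exists, since an accidental duplicate (for example from re-running a command) makes troubleshooting harder. A sketch: `check_interfaces` is a hypothetical helper that reads one interface name per line, e.g. from `openstack endpoint list --service compute -f value -c Interface`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if stdin lists each of
# public, internal and admin exactly once (one interface name per line).
check_interfaces() {
  local listing want n
  listing=$(cat)
  for want in public internal admin; do
    # grep -c prints 0 (and exits 1) when nothing matches; keep the count
    n=$(grep -cx "$want" <<< "$listing" || true)
    if [ "$n" -ne 1 ]; then
      echo "interface $want registered $n time(s)" >&2
      return 1
    fi
  done
}

# Usage on ct:
# openstack endpoint list --service compute -f value -c Interface | check_interfaces
```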

6.3.4 Install the nova components (nova-api, nova-conductor, nova-novncproxy, nova-scheduler)

[root@ct ~]# yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler

6.4 Edit the nova configuration file (nova.conf)

[root@ct ~]# cp -a /etc/nova/nova.conf{,.bak}

[root@ct ~]# grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

- Edit nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.91.8 #### change to ct's internal (management) IP

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@ct

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@ct/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@ct/nova

openstack-config --set /etc/nova/nova.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://ct:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers ct:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen '$my_ip' #### single quotes keep the shell from expanding $my_ip; nova.conf resolves it

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf glance api_servers http://ct:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://ct:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

- View nova.conf (the text after `#` on some lines is the author's annotation, not part of the generated file)

[root@ct ~]# cat /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata #API types to enable

my_ip = 192.168.91.8 #local IP address

use_neutron = true #use Neutron for networking and IP addresses

firewall_driver = nova.virt.firewall.NoopFirewallDriver

transport_url = rabbit://openstack:RABBIT_PASS@ct #RabbitMQ instance to connect to

[api]

auth_strategy = keystone #use Keystone for authentication

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@ct/nova_api

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@ct/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://ct:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken] #Keystone authentication settings

auth_url = http://ct:5000/v3 #authenticate against this URL

memcached_servers = ct:11211 #memcached address:port

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency] #lock path

lock_path = /var/lib/nova/tmp #locks serialize steps such as those of creating a VM: each step must finish before the next one starts, so they never run in parallel

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://ct:5000/v3

username = placement

password = PLACEMENT_PASS

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

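[vnc] #if this section is wrong, the instance console cannot be reached

enabled = true

server_listen = $my_ip #VNC listen address

server_proxyclient_address = $my_ip #address the console proxy uses to reach this host; this is the management network address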

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@ct/placement
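Before initializing the databases, a quick read-back of key options can catch typos such as a password that does not match the one given to `openstack user create`. A sketch: `ini_get` is a hypothetical awk-based reader for the simple `key = value` layout shown above (no continuation lines, comments only on their own lines); `crudini --get <file> <section> <key>` does the same job if crudini is installed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of <key> in [<section>] of an INI file.
# ini_get <file> <section> <key>
ini_get() {
  awk -v sec="[$2]" -v key="$3" '
    # Section header: remember whether it is the one we want
    /^[ \t]*\[/ { in_sec = ($1 == sec); next }
    in_sec {
      eq = index($0, "=")
      if (eq == 0) next
      # Split "key = value" on the first "=" only, then trim blanks
      k = substr($0, 1, eq - 1); v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }
  ' "$1"
}

# Usage on ct:
# for s in keystone_authtoken placement; do echo "$s: $(ini_get /etc/nova/nova.conf "$s" password)"; done
```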

6.5 Initialize the databases

6.5.1 Populate the nova_api database

[root@ct ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

6.5.2 Register the cell0 database

- Nova divides its resources, including compute nodes, into cells; inside OpenStack, cells are a logical grouping of compute nodes

[root@ct ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

6.5.3 Create the cell1 cell

[root@ct ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

e7245824-86ae-49eb-9f8f-48c252cdb70d

6.5.4 Populate the nova database

- Check /var/log/nova/nova-manage.log to see whether the initialization succeeded

[root@ct ~]# su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release')

result = self._query(query)

6.5.5 Verify that cell0 and cell1 are registered


[root@ct ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+----------------------------+-----------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+----------------------------+-----------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@ct/nova_cell0 | False |

| cell1 | e7245824-86ae-49eb-9f8f-48c252cdb70d | rabbit://openstack:****@ct | mysql+pymysql://nova:****@ct/nova | False |

+-------+--------------------------------------+----------------------------+-----------------------------------------+----------+
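The same check can be scripted, for example at the end of an automated install. A sketch assuming the table layout printed above; `cells_registered` is a hypothetical helper that succeeds only when both cell0 and cell1 appear as rows.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the list_cells table on stdin
# contains rows for both cell0 and cell1.
cells_registered() {
  awk -F'|' '
    NF > 2 {
      # Second "|"-separated field holds the cell name
      name = $2
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (name == "cell0") c0 = 1
      if (name == "cell1") c1 = 1
    }
    END { exit !(c0 && c1) }
  '
}

# Usage on ct:
# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova | cells_registered && echo "cells OK"
```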

6.5.6 Start the nova services

[root@ct ~]# systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service