1. Infrastructure (four servers at 192.168.0.111, 192.168.0.112, 192.168.0.113 and 192.168.0.114)

① Set the IP address and hostname

//Set the IP address (on 111, 112, 113 and 114)

vi /etc/sysconfig/network-scripts/ifcfg-ens33
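The file's contents are not shown above. As a minimal sketch, a static configuration for node01 could look like the following. The gateway and DNS address `192.168.0.1` and the `/24` netmask are assumptions about a typical lab network, so substitute your own values and change `IPADDR` on each machine.

```shell
# /etc/sysconfig/network-scripts/ifcfg-ens33 on node01 (sketch, adjust per host)
TYPE=Ethernet
NAME=ens33
DEVICE=ens33
# Use a fixed address instead of DHCP
BOOTPROTO=static
# Bring the interface up at boot
ONBOOT=yes
# 192.168.0.112 / .113 / .114 on node02, node03, node04
IPADDR=192.168.0.111
# Assumption: a /24 network
NETMASK=255.255.255.0
# Assumption: the router's address
GATEWAY=192.168.0.1
# Assumption: the router also serves DNS
DNS1=192.168.0.1
```

On CentOS 7 the new address can be applied with `systemctl restart network`.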

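//Set the hostname of 111

//Note: this file is the CentOS 6 method. CentOS 7 (suggested by the ens33 interface name) ignores HOSTNAME here and reads /etc/hostname instead; there, run hostnamectl set-hostname node01 (node02 to node04 on the other machines)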

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=node01

//Set the hostname of 112

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=node02

//Set the hostname of 113

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=node03

//Set the hostname of 114

vi /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=node04
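On systemd-based CentOS 7, the per-node hostname can be set with `hostnamectl` instead of editing `/etc/sysconfig/network`. The sketch below looks up the name from the IP table used in this guide. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Map a cluster IP (from the /etc/hosts table in this guide) to its node name.
node_name_for_ip() {
  case "$1" in
    192.168.0.111) echo node01 ;;
    192.168.0.112) echo node02 ;;
    192.168.0.113) echo node03 ;;
    192.168.0.114) echo node04 ;;
    *) return 1 ;;  # not one of the four cluster addresses
  esac
}

# Set the static hostname for the given IP. On systemd systems hostnamectl
# writes /etc/hostname, so the name survives reboots.
apply_node_hostname() {
  local name
  name=$(node_name_for_ip "$1") || { echo "unknown cluster IP: $1" >&2; return 1; }
  hostnamectl set-hostname "$name"
}
```

Run `apply_node_hostname 192.168.0.111` as root on node01, and likewise with the matching address on the other three machines.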

Map each IP address to its hostname

//Do this on each of 111, 112, 113 and 114; otherwise ssh to another host by name will fail

vi /etc/hosts

192.168.0.111 node01

192.168.0.112 node02

192.168.0.113 node03

192.168.0.114 node04
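Making the same edit by hand on four machines is error-prone. The following sketch appends the four mappings only if they are missing, so running it again is harmless. The function name is hypothetical, and the file defaults to `/etc/hosts`.

```shell
#!/usr/bin/env bash
# Append the cluster's IP-to-hostname mappings to a hosts file, skipping any
# line that is already present verbatim.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" i line
  for i in 1 2 3 4; do
    line="192.168.0.11${i} node0${i}"
    grep -qxF "$line" "$hosts_file" 2>/dev/null || echo "$line" >> "$hosts_file"
  done
}
```

Run `add_cluster_hosts` as root on each node.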

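//Reboot the servers so the new hostnames take effect (the /etc/hosts entries themselves normally apply immediately)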

② Disable the firewall and SELinux

//Stop the firewall, CentOS 6 with iptables (node01, node02, node03, node04)

service iptables stop

//Stop the firewall, method 2: CentOS 7 with firewalld (node01, node02, node03, node04)

systemctl stop firewalld.service

//Keep the firewall from starting at boot, CentOS 6 (node01, node02, node03, node04)

chkconfig iptables off

//Keep the firewall from starting at boot, method 2: CentOS 7 (node01, node02, node03, node04)

systemctl disable firewalld.service

//Disable SELinux (node01, node02, node03, node04); the change takes effect after a reboot

vi /etc/selinux/config

SELINUX=disabled
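The firewall and SELinux steps above can be combined into one function. It picks the firewalld or iptables commands depending on whether `systemctl` exists. It also runs `setenforce 0`, which switches SELinux to permissive mode right away instead of waiting for the next reboot. This is a sketch, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Stop and disable the firewall, then disable SELinux (run as root on every node).
disable_firewall_and_selinux() {
  local selinux_cfg="${1:-/etc/selinux/config}"
  if command -v systemctl >/dev/null 2>&1; then
    # CentOS 7+: firewalld
    systemctl stop firewalld.service
    systemctl disable firewalld.service
  else
    # CentOS 6: iptables service
    service iptables stop
    chkconfig iptables off
  fi
  # Persist the setting; SELINUXTYPE= lines are not matched by this pattern.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$selinux_cfg"
  # Permissive mode for the current boot; ignore the error setenforce
  # reports when SELinux is already disabled.
  setenforce 0 2>/dev/null || true
}
```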

③ Set up time synchronization

//Install ntp (node01, node02, node03, node04)

yum install ntp -y

//Use Alibaba Cloud's NTP server (node01, node02, node03, node04)

vi /etc/ntp.conf

server ntp1.aliyun.com

//Start time synchronization (node01, node02, node03, node04)

service ntpd start

//Start ntpd at boot (node01, node02, node03, node04)

chkconfig ntpd on
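The stock CentOS `/etc/ntp.conf` typically already contains `server N.centos.pool.ntp.org iburst` lines. The sketch below comments those out and adds the Aliyun server once, so it is safe to run again. Commenting out the pool servers assumes you want to sync only against Alibaba Cloud. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Point ntpd at ntp1.aliyun.com; safe to run more than once.
use_aliyun_ntp() {
  local conf="${1:-/etc/ntp.conf}"
  # Comment out the stock CentOS pool servers (already-commented lines are skipped).
  sed -i '/^server .*centos\.pool\.ntp\.org/s/^/# /' "$conf"
  # Add the Aliyun server unless it is already configured.
  grep -qx 'server ntp1.aliyun.com' "$conf" || echo 'server ntp1.aliyun.com' >> "$conf"
}
```

Afterwards, restart ntpd (`service ntpd restart`) and check the peer list with `ntpq -p`.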

④ Install the JDK

Download: https://www.oracle.com/java/technologies/javase/javase-jdk8-downloads.html

//(node01, node02, node03, node04)

//Installing the JDK from the rpm automatically creates /usr/java/default

//Some software only looks for Java in /usr/java/default

rpm -i jdk-8u181-linux-x64.rpm

//Configure environment variables

vi /etc/profile

export JAVA_HOME=/usr/java/default

export CLASSPATH=.:${JAVA_HOME}/jre/lib/rt.jar:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

export PATH=$PATH:${JAVA_HOME}/bin

//Reload the profile

source /etc/profile
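After `source /etc/profile`, it is worth checking that `JAVA_HOME` really points at a JDK. The following small sketch (the function name is hypothetical) fails with a message if `$JAVA_HOME/bin/java` is missing. Otherwise it prints the first line of `java -version`, which Java writes to stderr.

```shell
#!/usr/bin/env bash
# Check that a JDK is installed where JAVA_HOME (or the given path) points.
check_java_home() {
  local home="${1:-$JAVA_HOME}"
  if [ -z "$home" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$home/bin/java" ]; then
    echo "no executable java under $home/bin" >&2
    return 1
  fi
  # java -version prints to stderr, so merge it into stdout before head.
  "$home/bin/java" -version 2>&1 | head -n 1
}
```

For the 8u181 rpm, `check_java_home /usr/java/default` should print a line such as `java version "1.8.0_181"`.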

⑤ Set up passwordless SSH

//On each of node01, node02, node03 and node04, ssh to the machine itself and enter the password; this creates the ~/.ssh directory

ssh localhost

//Generate a key pair on node01

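// -t key type, -P passphrase (empty here), -f private key file (the public key is written to the same path with .pub appended)

//Note: OpenSSH 7.0 and later disable DSA keys by default; if key login is refused, use -t rsa -f ~/.ssh/id_rsa and id_rsa.pub in the commands below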

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

//To log in to a machine without a password, append your public key to that machine's ~/.ssh/authorized_keys (first, node01 itself)

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

//Passwordless login from node01 to node02: copy the public key to node02

scp /root/.ssh/id_dsa.pub node02:/root/.ssh/node01.pub

//On node02, append the key to ~/.ssh/authorized_keys

cd ~/.ssh

cat node01.pub >> authorized_keys

//Passwordless login from node01 to node03: copy the public key to node03

scp /root/.ssh/id_dsa.pub node03:/root/.ssh/node01.pub

//On node03, append the key to ~/.ssh/authorized_keys

cd ~/.ssh

cat node01.pub >> authorized_keys

//Passwordless login from node01 to node04: copy the public key to node04

scp /root/.ssh/id_dsa.pub node04:/root/.ssh/node01.pub

//On node04, append the key to ~/.ssh/authorized_keys

cd ~/.ssh

cat node01.pub >> authorized_keys
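The scp-then-cat steps above can be replaced with `ssh-copy-id`, which appends the key to the remote `authorized_keys` for you. You type each host's password once. The sketch below also checks the result with `ssh -o BatchMode=yes`, which fails instead of prompting when key login does not work. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Copy a public key to every listed host (run on node01).
distribute_key() {
  local pubkey="$1" host
  shift
  for host in "$@"; do
    ssh-copy-id -i "$pubkey" "root@$host" || return 1
  done
}

# Confirm that each host now accepts key-based login without a password prompt.
verify_passwordless() {
  local host
  for host in "$@"; do
    if ! ssh -o BatchMode=yes "root@$host" true; then
      echo "key login failed for $host" >&2
      return 1
    fi
  done
}
```

Usage on node01: `distribute_key ~/.ssh/id_dsa.pub node01 node02 node03 node04`, then `verify_passwordless node01 node02 node03 node04`.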

2. Deployment and configuration

① Install Hadoop

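Download: https://downloads.apache.org/hadoop/common/hadoop-3.2.2/ (if the link no longer works, older releases are kept at https://archive.apache.org/dist/hadoop/common/hadoop-3.2.2/)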

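//On node01, create the install directory and work inside it, so the upload and extraction below land in /opt/bigdata

mkdir -p /opt/bigdata

cd /opt/bigdata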

//Upload the Hadoop tarball to node01 (rz is provided by the lrzsz package)

rz -be

//Extract Hadoop on node01

tar -zxvf hadoop-3.2.2.tar.gz

//Configure environment variables on node01

vi /etc/profile

export JAVA_HOME=/usr/java/default

# Define HADOOP_HOME before the PATH line

export HADOOP_HOME=/opt/bigdata/hadoop-3.2.2

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

//Reload the profile on node01

source /etc/profile
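Because `tar` extracts into the current directory, `HADOOP_HOME=/opt/bigdata/hadoop-3.2.2` is only correct if the archive was unpacked inside `/opt/bigdata`. The sketch below makes the target explicit with `tar -C` and checks the result. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Unpack a Hadoop tarball under a prefix and confirm the hadoop launcher exists.
install_hadoop() {
  local tarball="$1" prefix="${2:-/opt/bigdata}" version_dir
  mkdir -p "$prefix"
  tar -zxf "$tarball" -C "$prefix" || return 1
  # e.g. hadoop-3.2.2.tar.gz -> $prefix/hadoop-3.2.2
  version_dir="$prefix/$(basename "$tarball" .tar.gz)"
  if [ ! -x "$version_dir/bin/hadoop" ]; then
    echo "expected $version_dir/bin/hadoop after extraction" >&2
    return 1
  fi
  echo "$version_dir"
}
```

`install_hadoop hadoop-3.2.2.tar.gz /opt/bigdata` prints the directory that `HADOOP_HOME` should hold. After reloading the profile, `hadoop version` confirms the PATH setting.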

② Configure the Hadoop roles

cd $HADOOP_HOME/etc/hadoop

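//On node01, set JAVA_HOME for Hadoop. This is required: daemons started over ssh run in a non-login shell that does not read /etc/profile, so otherwise they cannot find Java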

vi hadoop-env.sh

export JAVA_HOME=/usr/java/default

//On node01, set where the NameNode runs (each <property> goes inside the file's <configuration> element)

vi core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://node01:9000</value>

</property>

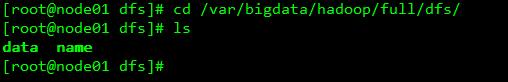
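As a sketch, the whole of `core-site.xml` can be written in one step with a heredoc, with the property inside the `<configuration>` root element. The function name `write_core_site` is hypothetical, and the two header lines follow the template Hadoop ships. Note that this overwrites any existing `core-site.xml`.

```shell
#!/usr/bin/env bash
# Write a minimal core-site.xml containing only fs.defaultFS from this guide.
write_core_site() {
  local conf_dir="${1:-$HADOOP_HOME/etc/hadoop}"
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node01:9000</value>
  </property>
</configuration>
EOF
}
```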

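//On node01, set the HDFS replication factor to 2

//Set where the NameNode stores its metadata (by default it lives under a temporary directory and can get deleted)

//Set where DataNodes store their data (same reason)

//Run the SecondaryNameNode on node02 and set its checkpoint directory (same reason)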

vi hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/var/bigdata/hadoop/full/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/var/bigdata/hadoop/full/dfs/data</value>

</property>

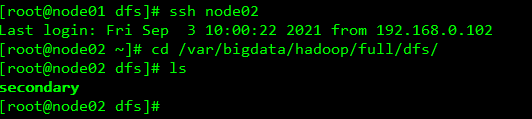

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node02:50090</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>/var/bigdata/hadoop/full/dfs/secondary</value>

</property>
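The same heredoc approach works for `hdfs-site.xml`. The sketch below writes the five properties listed above into one well-formed file. The function name is hypothetical, and it overwrites any existing `hdfs-site.xml`.

```shell
#!/usr/bin/env bash
# Write hdfs-site.xml with the replication, storage and SecondaryNameNode settings above.
write_hdfs_site() {
  local conf_dir="${1:-$HADOOP_HOME/etc/hadoop}"
  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/var/bigdata/hadoop/full/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/var/bigdata/hadoop/full/dfs/data</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>node02:50090</value>
  </property>
  <property>
    <name>dfs.namenode.checkpoint.dir</name>
    <value>/var/bigdata/hadoop/full/dfs/secondary</value>
  </property>
</configuration>
EOF
}
```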

//On node01, list the hosts that run DataNodes (Hadoop 3.x reads the workers file; slaves was the Hadoop 2.x name)

vi workers

node02

node03

node04

③ Distribute Hadoop

cd /opt

//Copy Hadoop to node02, node03 and node04

scp -r ./bigdata/ node02:`pwd`

scp -r ./bigdata/ node03:`pwd`

scp -r ./bigdata/ node04:`pwd`
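The three scp commands differ only in the host name. The loop version below copies a local directory to the same parent path on each host. This is a sketch, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Copy a local directory to the same location on each listed host.
distribute_dir() {
  local src="$1" host parent
  shift
  parent=$(dirname "$src")
  for host in "$@"; do
    scp -r "$src" "$host:$parent/" || return 1
  done
}
```

Usage on node01: `distribute_dir /opt/bigdata node02 node03 node04`.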

3. Initialize and run

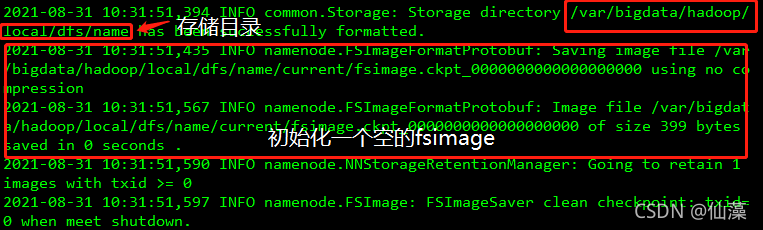

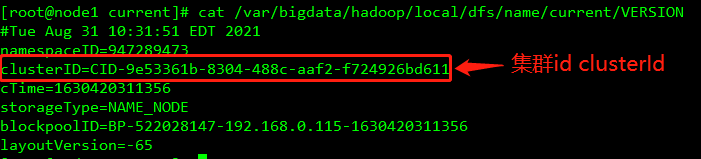

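① Format the NameNode on node01. This creates the storage directory, initializes an empty fsimage, sets up the NameNode (DataNode directories are not created here; DataNodes create them when they start) and generates the cluster ID (clusterID). Format only once: formatting again generates a new clusterID, and DataNodes that still hold the old one will fail to join.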

hdfs namenode -format

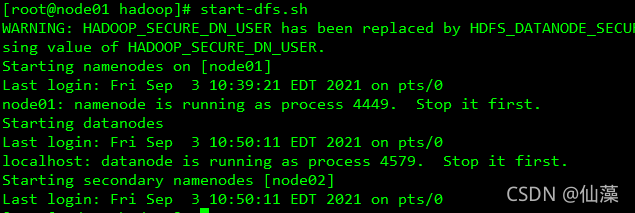
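Because a second format generates a new clusterID, a guard is useful. The NameNode records the ID in `current/VERSION` under `dfs.namenode.name.dir`, which is `/var/bigdata/hadoop/full/dfs/name` in this guide. The sketch below formats only when that file does not exist yet. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Format the NameNode only if its metadata directory has not been formatted yet.
format_namenode_once() {
  local name_dir="${1:-/var/bigdata/hadoop/full/dfs/name}"
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted, $(grep '^clusterID=' "$name_dir/current/VERSION")"
    return 0
  fi
  hdfs namenode -format
}
```

If you really do need to reformat, you will typically also have to clear the DataNode directory (`/var/bigdata/hadoop/full/dfs/data`) on node02 to node04. Otherwise the DataNodes keep the old clusterID.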

② Start HDFS on node01. On the first start, the DataNode and SecondaryNameNode roles initialize and create their data directories

start-dfs.sh
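To confirm each role started where the configuration puts it, run `jps` on every node. A non-interactive ssh command normally does not read `/etc/profile`, so this sketch calls `jps` by its full path. That path assumes the rpm install's `/usr/java/default`. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# List the Java daemons running on each host.
show_daemons() {
  local host
  for host in "$@"; do
    echo "== $host"
    ssh "root@$host" /usr/java/default/bin/jps || return 1
  done
}
```

With this configuration, `show_daemons node01 node02 node03 node04` should show:

- NameNode on node01
- SecondaryNameNode and a DataNode on node02
- a DataNode on each of node03 and node04

`hdfs dfsadmin -report` on node01 should likewise report three live DataNodes.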

③ Add the host mappings on Windows

//Edit the hosts file below (administrator rights are required) and add the IP-to-hostname mappings

C:\Windows\System32\drivers\etc\hosts

192.168.0.111 node01

192.168.0.112 node02

192.168.0.113 node03

192.168.0.114 node04

④ Open the web UI

//According to the official documentation, the NameNode web UI in Hadoop 3.x is on port 9870, not 50070 as in Hadoop 2.x

http://node01:9870/

Problem: starting HDFS (start-dfs.sh) fails with the following errors

Starting namenodes on [node01]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [node01]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

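Cause: the user for each daemon is not defined. Hadoop 3.x refuses to start daemons as root unless variables such as HDFS_NAMENODE_USER name that user.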

Solution:

① Add the following at the top of start-dfs.sh and stop-dfs.sh in $HADOOP_HOME/sbin (the first line is the scripts' existing #!/usr/bin/env bash line)

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

② Add the following at the top of start-yarn.sh and stop-yarn.sh (same directory)

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root
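An alternative that leaves the sbin scripts untouched is to export the same user variables from `etc/hadoop/hadoop-env.sh`. In Hadoop 3.x, that file's comments describe this `(command)_(subcommand)_USER` convention. The sketch below (the function name is hypothetical) appends each setting once. Copy the edited file to node02 to node04 afterwards.

```shell
#!/usr/bin/env bash
# Append "export <VAR>=root" for each daemon-user variable not already exported.
add_root_daemon_users() {
  local env_file="${1:-$HADOOP_HOME/etc/hadoop/hadoop-env.sh}" var
  for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
             YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
    grep -q "^export ${var}=" "$env_file" || echo "export ${var}=root" >> "$env_file"
  done
}
```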