4 Prometheus in Practice: Virtual Machine Monitoring

node-exporter exposes metrics about Linux hosts so that Prometheus can monitor them.

4.1 Installing node-exporter

Download page: https://prometheus.io/download/#node_exporter

```shell
# Create the node-exporter directory
mkdir /usr/local/node-exporter

# Change into it
cd /usr/local/node-exporter

# Download
wget https://github.com/prometheus/node_exporter/releases/download/v1.2.2/node_exporter-1.2.2.linux-amd64.tar.gz

# Extract
tar -zxvf node_exporter-1.2.2.linux-amd64.tar.gz

# Change into the extracted directory
cd node_exporter-1.2.2.linux-amd64

# Start
./node_exporter
```

node_exporter listens on port 9100 by default. You can change this with the following flag:

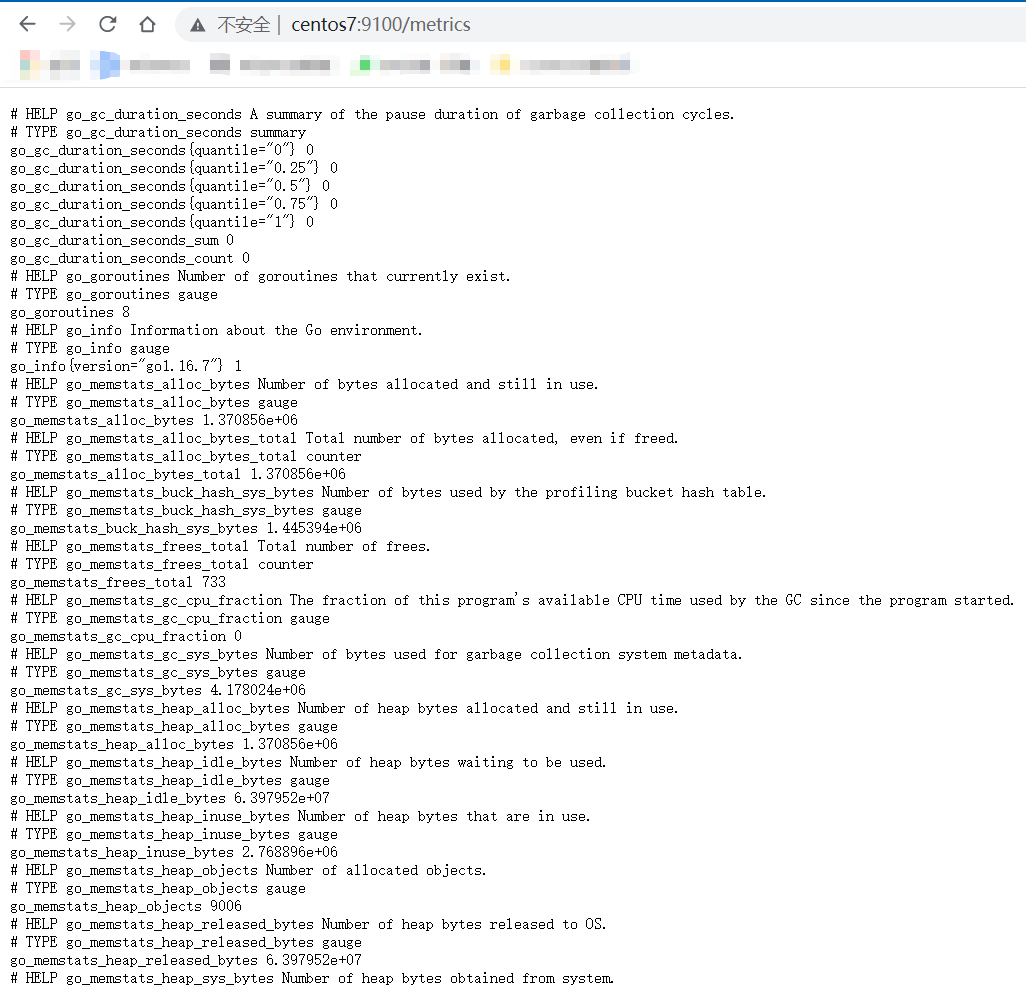

```shell
./node_exporter --web.listen-address 127.0.0.1:8082
```

This VM's hostname is centos7. With the default port, the metrics can therefore be viewed by hostname at http://centos7:9100/metrics
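The /metrics page is plain text in the Prometheus text exposition format. Each sample is one line of the form `name{label="value",...} value`, and `# HELP` / `# TYPE` comment lines are interleaved. The minimal Python sketch below parses a few such lines to show what a scrape returns. The sample text is invented for illustration. The parser deliberately ignores label escaping, timestamps and other edge cases that a real client library handles.

```python
import re

# Matches `name{labels} value` or `name value`; escaped quotes inside label
# values and optional trailing timestamps are not handled in this sketch.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_metrics(text):
    """Return a list of (name, labels_dict, value) tuples from exposition text."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank, HELP and TYPE lines
            continue
        match = SAMPLE_RE.match(line)
        if match is None:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


# Invented excerpt in the same shape as node_exporter output.
EXAMPLE = """\
# HELP node_cpu_seconds_total Seconds the CPUs spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 12345.67
node_cpu_seconds_total{cpu="0",mode="user"} 234.5
node_load1 0.42
"""

if __name__ == "__main__":
    for name, labels, value in parse_metrics(EXAMPLE):
        print(name, labels, value)
```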

4.2 Modifying the Prometheus Configuration

The VMs are addressed by hostname. Two VMs are configured here:

```yaml
scrape_configs:
  - job_name: 'node'
    scrape_interval: 5s
    static_configs:
      - targets: ['centos7:9100', 'centos8:9100']
```

Notes:

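1. Do not put http:// or https:// in targets. If you need a different protocol, set it with `scheme` (the default is http).

2. The job name must be node, because the Dashboard used later uses node as its default job name. The recording and alerting rules below also select job="node".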

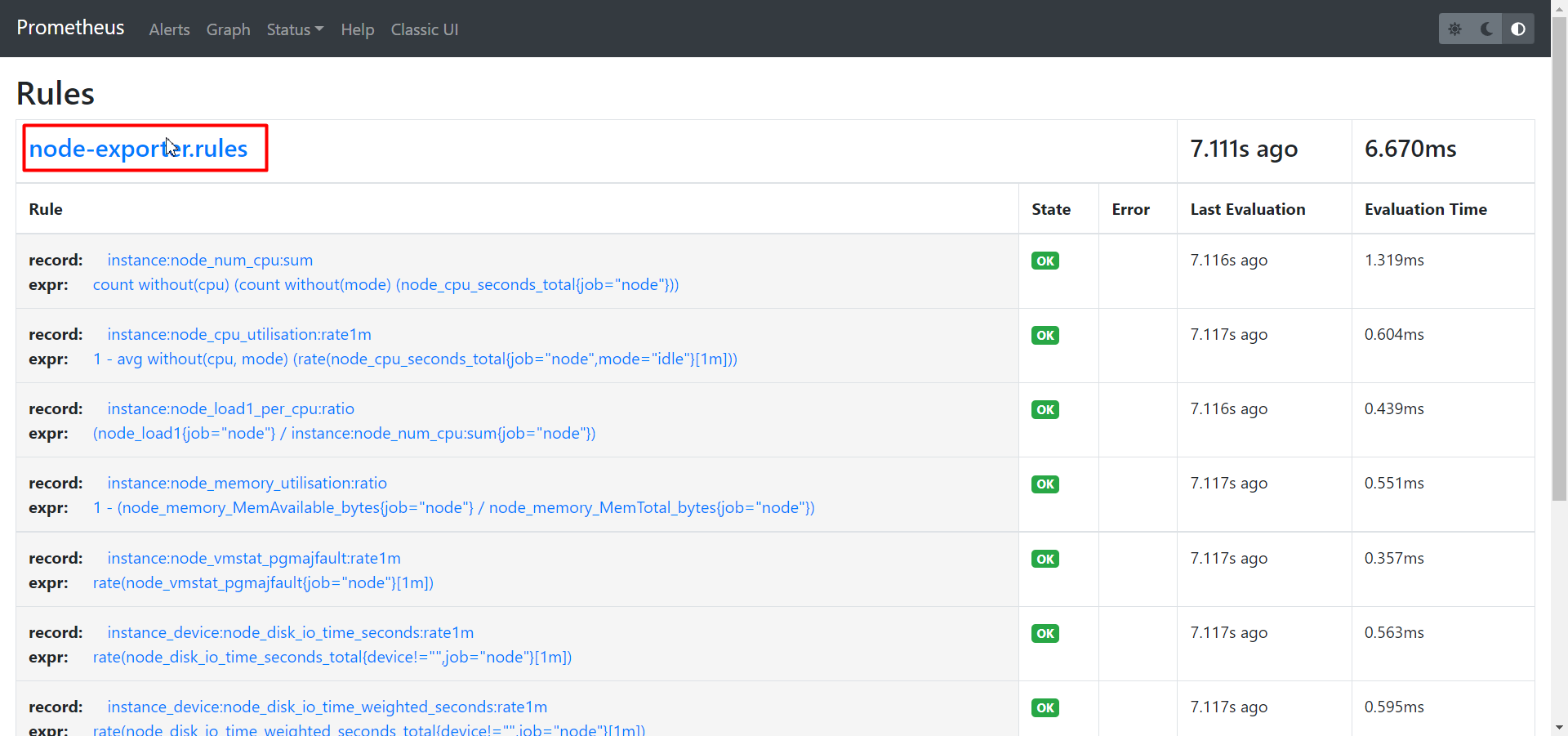
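Prometheus builds each scrape URL from three pieces: `scheme` (default `http`), the target's host:port, and `metrics_path` (default `/metrics`). That is why the protocol belongs in `scheme` rather than in the target. The minimal Python sketch below shows the composition. `scrape_url` is a hypothetical helper written for illustration; it is not part of Prometheus.

```python
def scrape_url(target, scheme="http", metrics_path="/metrics"):
    """Compose the URL scraped for one static target.

    Hypothetical helper: it only mirrors the scrape_configs defaults
    (scheme: http, metrics_path: /metrics) for illustration.
    """
    if "://" in target:
        # Targets are bare host:port; the protocol goes into `scheme`.
        raise ValueError(f"target {target!r} must not include a scheme")
    return f"{scheme}://{target}{metrics_path}"


if __name__ == "__main__":
    for t in ["centos7:9100", "centos8:9100"]:
        print(scrape_url(t))
    # http://centos7:9100/metrics
    # http://centos8:9100/metrics
```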

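4.3 Configuring Recording Rules

Why configure recording rules?

Recording rules let you precompute frequently queried or expensive expressions and save the results as new time series. For example, a Dashboard may rely on rate() queries. A recording rule evaluates such an expression at a regular interval and stores the result as a new time series. The Dashboard then does not have to fetch the raw data and recompute it on every refresh.

Configure the recording rules before importing the Dashboard!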
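To make this concrete, the simplified Python sketch below computes roughly what the rule `instance:node_cpu_utilisation:rate1m` (defined below) stores: the per-second increase of each CPU's idle counter over a 1-minute window, turned into a utilisation ratio. The approximation is deliberate. It handles counter resets, but unlike PromQL's rate() it does not extrapolate to the window edges. The samples and the 15-second spacing are invented.

```python
def simple_rate(samples):
    """Per-second increase of a counter from (timestamp_s, value) samples.

    Simplified: handles counter resets, but unlike PromQL rate() it does not
    extrapolate to the edges of the range window.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        # After a reset the counter restarts from 0, so the new value is the increase.
        increase += cur - prev if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed


def cpu_utilisation(idle_by_cpu):
    """1 - average idle rate across CPUs, as in the recording rule's expression."""
    rates = [simple_rate(s) for s in idle_by_cpu.values()]
    return 1 - sum(rates) / len(rates)


# One minute of invented idle-counter samples for a 2-CPU host, 15 s apart.
IDLE = {
    "0": [(0, 100.0), (15, 112.0), (30, 124.0), (45, 136.0), (60, 148.0)],  # 0.8 s/s idle
    "1": [(0, 200.0), (15, 206.0), (30, 212.0), (45, 218.0), (60, 224.0)],  # 0.4 s/s idle
}

if __name__ == "__main__":
    # A recording rule would store this value as a new series at each evaluation.
    print(round(cpu_utilisation(IDLE), 2))  # 0.4
```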

node_exporter_recording_rules.yml

The recording rule file is shown below. The rule group is named node-exporter.rules, and the default selector is job="node":

```yaml
"groups":
- "name": "node-exporter.rules"
  "rules":
  - "expr": |
      count without (cpu) (
        count without (mode) (
          node_cpu_seconds_total{job="node"}
        )
      )
    "record": "instance:node_num_cpu:sum"
  - "expr": |
      1 - avg without (cpu, mode) (
        rate(node_cpu_seconds_total{job="node", mode="idle"}[1m])
      )
    "record": "instance:node_cpu_utilisation:rate1m"
  - "expr": |
      (
        node_load1{job="node"}
      /
        instance:node_num_cpu:sum{job="node"}
      )
    "record": "instance:node_load1_per_cpu:ratio"
  - "expr": |
      1 - (
        node_memory_MemAvailable_bytes{job="node"}
      /
        node_memory_MemTotal_bytes{job="node"}
      )
    "record": "instance:node_memory_utilisation:ratio"
  - "expr": |
      rate(node_vmstat_pgmajfault{job="node"}[1m])
    "record": "instance:node_vmstat_pgmajfault:rate1m"
  - "expr": |
      rate(node_disk_io_time_seconds_total{job="node", device!=""}[1m])
    "record": "instance_device:node_disk_io_time_seconds:rate1m"
  - "expr": |
      rate(node_disk_io_time_weighted_seconds_total{job="node", device!=""}[1m])
    "record": "instance_device:node_disk_io_time_weighted_seconds:rate1m"
  - "expr": |
      sum without (device) (
        rate(node_network_receive_bytes_total{job="node", device!="lo"}[1m])
      )
    "record": "instance:node_network_receive_bytes_excluding_lo:rate1m"
  - "expr": |
      sum without (device) (
        rate(node_network_transmit_bytes_total{job="node", device!="lo"}[1m])
      )
    "record": "instance:node_network_transmit_bytes_excluding_lo:rate1m"
  - "expr": |
      sum without (device) (
        rate(node_network_receive_drop_total{job="node", device!="lo"}[1m])
      )
    "record": "instance:node_network_receive_drop_excluding_lo:rate1m"
  - "expr": |
      sum without (device) (
        rate(node_network_transmit_drop_total{job="node", device!="lo"}[1m])
      )
    "record": "instance:node_network_transmit_drop_excluding_lo:rate1m"
```

Applying the rules above

Modify prometheus.yml:

```yaml
rule_files:
  - "node_exporter_recording_rules.yml"
```

After restarting Prometheus, the node-exporter.rules group shows up as active:

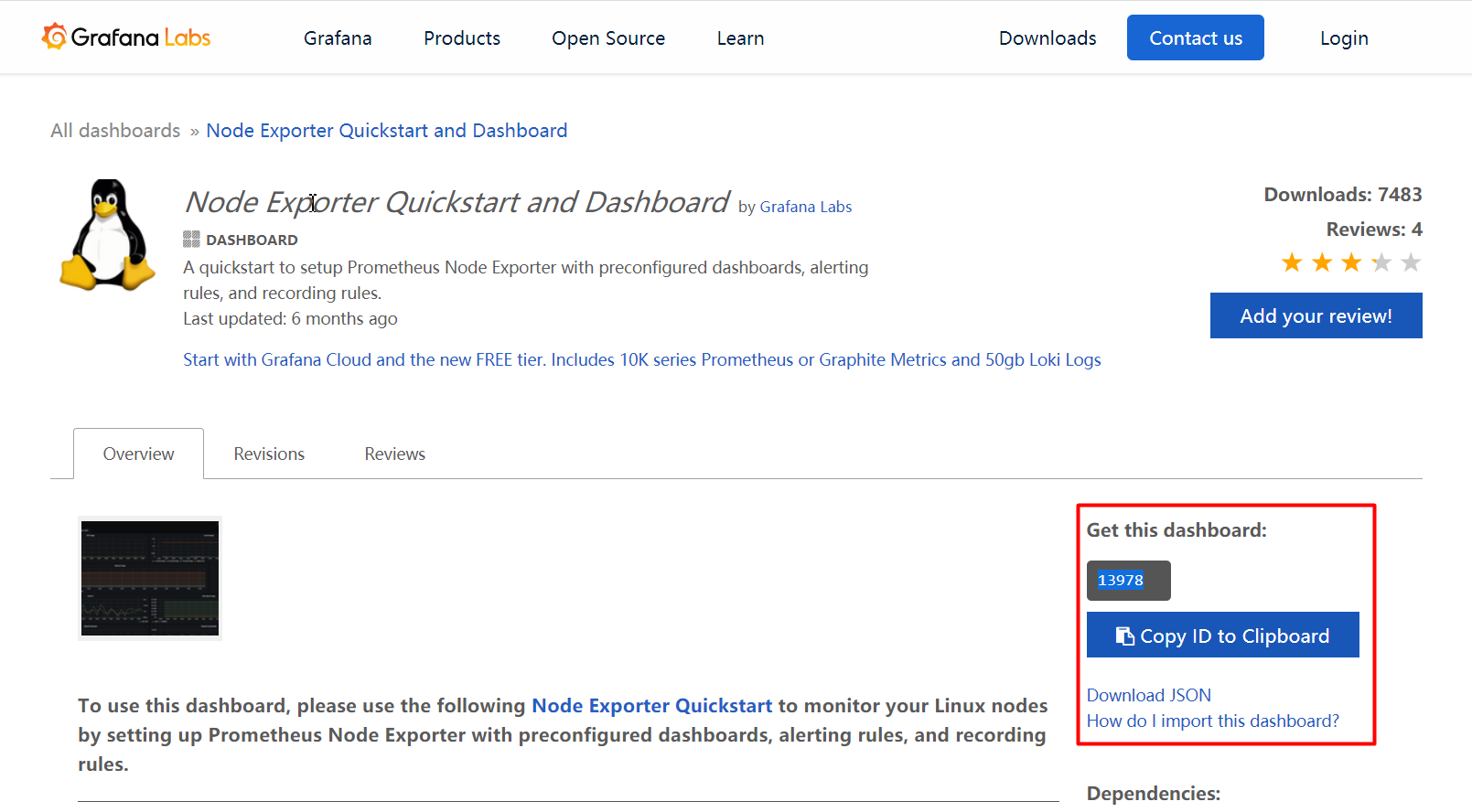
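Two of these rules build on each other. `instance:node_num_cpu:sum` counts the distinct `cpu` label values of node_cpu_seconds_total per instance. `instance:node_load1_per_cpu:ratio` then divides node_load1 by that count. The minimal Python sketch below walks through the same two steps on invented label sets. The instance names reuse centos7 and centos8 from the scrape config; the CPU counts and load values are made up.

```python
from collections import defaultdict


def num_cpus(series_labels):
    """Distinct cpu values per instance (count without (mode), then count without (cpu))."""
    cpus = defaultdict(set)
    for labels in series_labels:
        cpus[labels["instance"]].add(labels["cpu"])
    return {instance: len(ids) for instance, ids in cpus.items()}


def load1_per_cpu(load1, cpu_counts):
    """node_load1 / instance:node_num_cpu:sum, matched on the instance label."""
    return {inst: load1[inst] / cpu_counts[inst] for inst in load1 if inst in cpu_counts}


# Invented label sets of node_cpu_seconds_total series (one per cpu and mode).
SERIES = [
    {"instance": "centos7:9100", "cpu": cpu, "mode": mode}
    for cpu in ("0", "1")
    for mode in ("idle", "user", "system")
] + [
    {"instance": "centos8:9100", "cpu": cpu, "mode": mode}
    for cpu in ("0", "1", "2", "3")
    for mode in ("idle", "user", "system")
]

if __name__ == "__main__":
    counts = num_cpus(SERIES)
    print(counts)  # {'centos7:9100': 2, 'centos8:9100': 4}
    print(load1_per_cpu({"centos7:9100": 1.0, "centos8:9100": 2.0}, counts))
    # {'centos7:9100': 0.5, 'centos8:9100': 0.5}
```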

4.4 Importing the Dashboard

Finding the Dashboard

Go to https://grafana.com/grafana/dashboards and search for the node-exporter Dashboard. The one below is also featured on the dashboards home page:

https://grafana.com/grafana/dashboards/13978?pg=dashboards&plcmt=featured-sub1

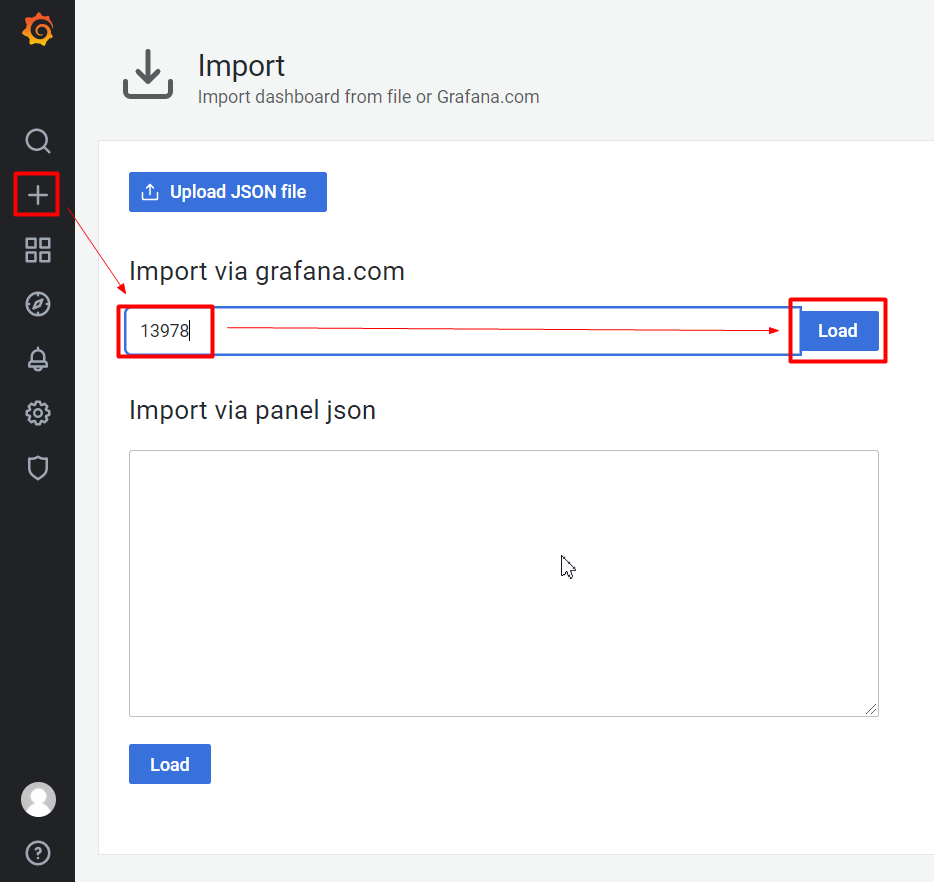

Importing the Dashboard

In Grafana, import the Dashboard by its ID (13978):

What the Dashboard shows

The Dashboard contains the following panels:

- CPU Usage

- Load Average

- Memory Usage

- Disk I/O

- Disk Usage

- Network Received

- Network Transmitted

The final result:

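4.5 Configuring Alerting Rules

What an alert is

An alert is triggered when a PromQL expression violates some constraint or meets a specific condition. Once triggered, the alert enters the Pending state. If the condition keeps holding for the duration set by the for parameter, the alert moves to the Firing state. At that point, a configured alerting tool (such as Alertmanager) can send notifications.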

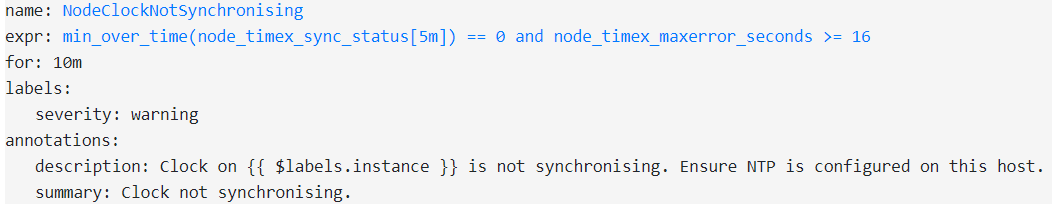

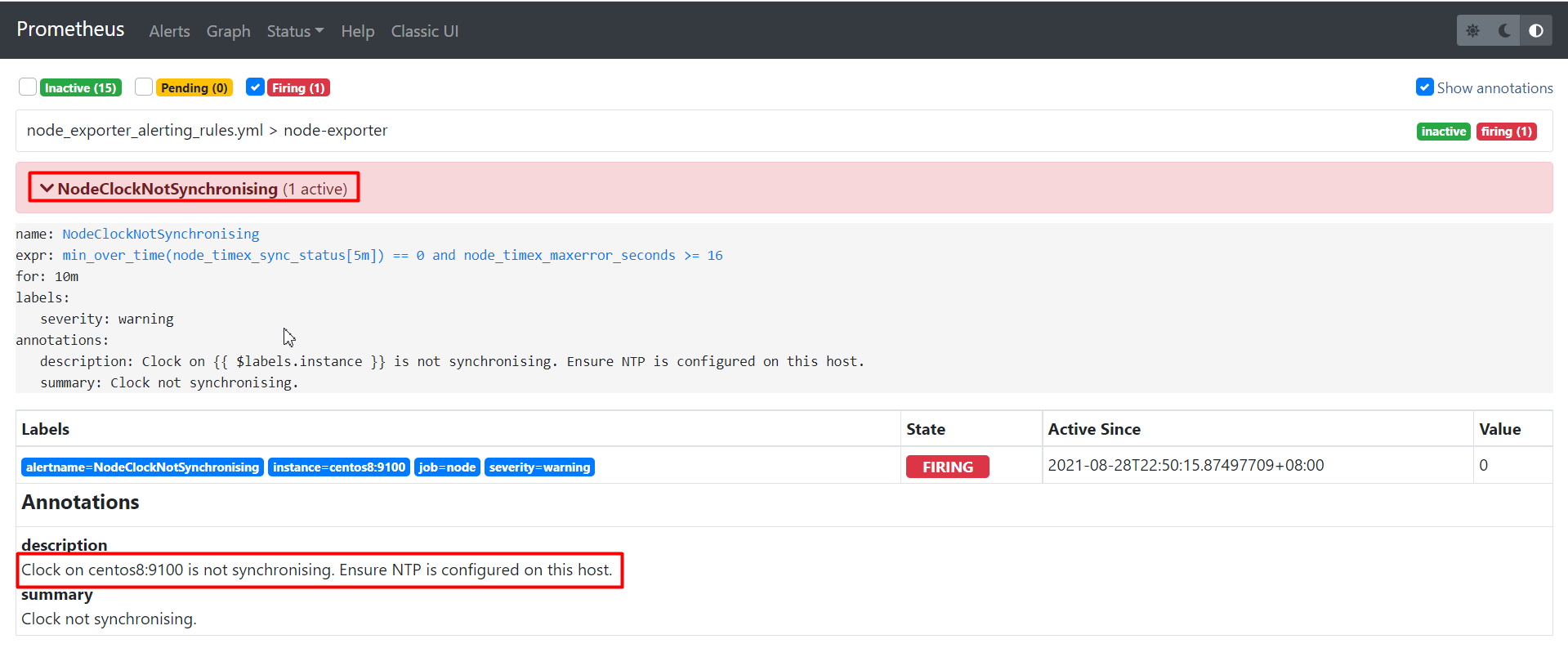

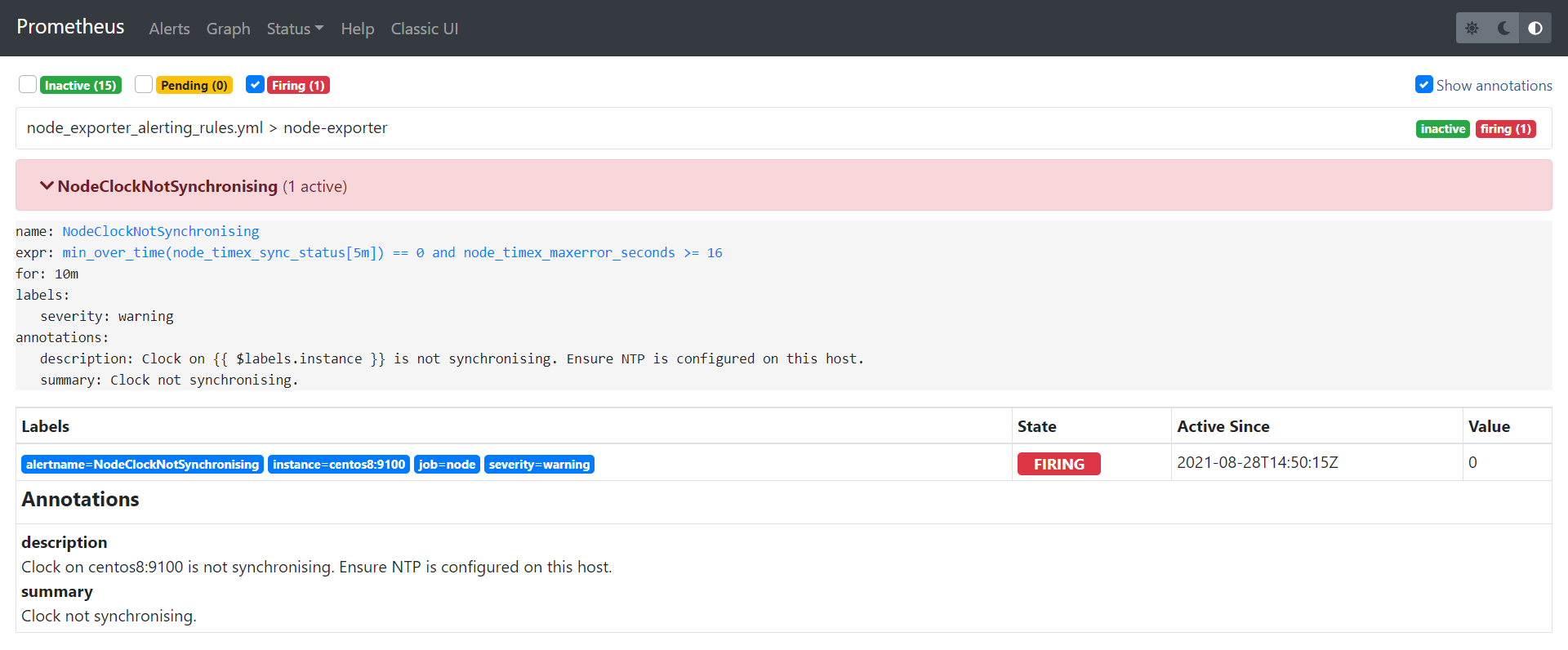

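Take the clock-not-synchronising alert below as an example. It enters Pending when its condition is first met. If the condition still holds 10 minutes later, it moves to Firing.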
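The Pending/Firing transitions can be modelled as a small state machine. The Python sketch below is a simplified model, not Prometheus's actual implementation. It assumes the rule is evaluated at given timestamps and tracks one alert instance. The evaluation times are invented; the 10-minute `for` matches NodeClockNotSynchronising.

```python
INACTIVE, PENDING, FIRING = "inactive", "pending", "firing"


class AlertState:
    """Simplified model of one alert instance with a `for` duration in seconds."""

    def __init__(self, for_seconds):
        self.for_seconds = for_seconds
        self.active_since = None  # time the condition was first seen true
        self.state = INACTIVE

    def evaluate(self, now, condition_true):
        if not condition_true:
            # Condition no longer holds: the alert resets (a firing alert resolves).
            self.active_since = None
            self.state = INACTIVE
        else:
            if self.active_since is None:
                self.active_since = now
            held_for = now - self.active_since
            self.state = FIRING if held_for >= self.for_seconds else PENDING
        return self.state


if __name__ == "__main__":
    alert = AlertState(for_seconds=600)  # "for": "10m"
    # (evaluation time in seconds, is the PromQL condition true?)
    for t, cond in [(0, True), (300, True), (600, True), (900, False)]:
        print(t, alert.evaluate(t, cond))
    # 0 pending / 300 pending / 600 firing / 900 inactive
```

With `for_seconds=0` the alert goes straight to Firing on the first true evaluation, which matches rules that set no `for`.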

node_exporter_alerting_rules.yml

The alert configuration includes the following:

- NodeFilesystemSpaceFillingUp, NodeFilesystemAlmostOutOfSpace, NodeFilesystemFilesFillingUp, NodeFilesystemAlmostOutOfFiles: a filesystem is running out of space (or out of inodes, for the two Files alerts)
- NodeNetworkReceiveErrs, NodeNetworkTransmitErrs: more than 1% of the packets received (or transmitted) on an interface over the last two minutes were errors
- NodeHighNumberConntrackEntriesUsed: more than 75% of conntrack entries are in use
- NodeTextFileCollectorScrapeError: the Node Exporter text file collector failed to scrape
- NodeClockSkewDetected: the clock offset exceeds 0.05 s and is not shrinking
- NodeClockNotSynchronising: the clock is not synchronising; make sure NTP is configured on the server
- NodeRAIDDegraded: a RAID array is in a degraded state because of disk failures
- NodeRAIDDiskFailure: a disk in a RAID array has failed

```yaml
"groups":
- "name": "node-exporter"
  "rules":
  - "alert": "NodeFilesystemSpaceFillingUp"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left and is filling up."
      "summary": "Filesystem is predicted to run out of space within the next 24 hours."
    "expr": |
      (
        node_filesystem_avail_bytes{job="node",fstype!=""} / node_filesystem_size_bytes{job="node",fstype!=""} * 100 < 40
      and
        predict_linear(node_filesystem_avail_bytes{job="node",fstype!=""}[6h], 24*60*60) < 0
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "warning"
  - "alert": "NodeFilesystemSpaceFillingUp"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left and is filling up fast."
      "summary": "Filesystem is predicted to run out of space within the next 4 hours."
    "expr": |
      (
        node_filesystem_avail_bytes{job="node",fstype!=""} / node_filesystem_size_bytes{job="node",fstype!=""} * 100 < 20
      and
        predict_linear(node_filesystem_avail_bytes{job="node",fstype!=""}[6h], 4*60*60) < 0
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "critical"
  - "alert": "NodeFilesystemAlmostOutOfSpace"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left."
      "summary": "Filesystem has less than 5% space left."
    "expr": |
      (
        node_filesystem_avail_bytes{job="node",fstype!=""} / node_filesystem_size_bytes{job="node",fstype!=""} * 100 < 5
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "warning"
  - "alert": "NodeFilesystemAlmostOutOfSpace"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available space left."
      "summary": "Filesystem has less than 3% space left."
    "expr": |
      (
        node_filesystem_avail_bytes{job="node",fstype!=""} / node_filesystem_size_bytes{job="node",fstype!=""} * 100 < 3
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "critical"
  - "alert": "NodeFilesystemFilesFillingUp"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left and is filling up."
      "summary": "Filesystem is predicted to run out of inodes within the next 24 hours."
    "expr": |
      (
        node_filesystem_files_free{job="node",fstype!=""} / node_filesystem_files{job="node",fstype!=""} * 100 < 40
      and
        predict_linear(node_filesystem_files_free{job="node",fstype!=""}[6h], 24*60*60) < 0
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "warning"
  - "alert": "NodeFilesystemFilesFillingUp"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left and is filling up fast."
      "summary": "Filesystem is predicted to run out of inodes within the next 4 hours."
    "expr": |
      (
        node_filesystem_files_free{job="node",fstype!=""} / node_filesystem_files{job="node",fstype!=""} * 100 < 20
      and
        predict_linear(node_filesystem_files_free{job="node",fstype!=""}[6h], 4*60*60) < 0
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "critical"
  - "alert": "NodeFilesystemAlmostOutOfFiles"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left."
      "summary": "Filesystem has less than 5% inodes left."
    "expr": |
      (
        node_filesystem_files_free{job="node",fstype!=""} / node_filesystem_files{job="node",fstype!=""} * 100 < 5
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "warning"
  - "alert": "NodeFilesystemAlmostOutOfFiles"
    "annotations":
      "description": "Filesystem on {{ $labels.device }} at {{ $labels.instance }} has only {{ printf \"%.2f\" $value }}% available inodes left."
      "summary": "Filesystem has less than 3% inodes left."
    "expr": |
      (
        node_filesystem_files_free{job="node",fstype!=""} / node_filesystem_files{job="node",fstype!=""} * 100 < 3
      and
        node_filesystem_readonly{job="node",fstype!=""} == 0
      )
    "for": "1h"
    "labels":
      "severity": "critical"
  - "alert": "NodeNetworkReceiveErrs"
    "annotations":
      "description": "{{ $labels.instance }} interface {{ $labels.device }} has encountered {{ printf \"%.0f\" $value }} receive errors in the last two minutes."
      "summary": "Network interface is reporting many receive errors."
    "expr": |
      rate(node_network_receive_errs_total[2m]) / rate(node_network_receive_packets_total[2m]) > 0.01
    "for": "1h"
    "labels":
      "severity": "warning"
  - "alert": "NodeNetworkTransmitErrs"
    "annotations":
      "description": "{{ $labels.instance }} interface {{ $labels.device }} has encountered {{ printf \"%.0f\" $value }} transmit errors in the last two minutes."
      "summary": "Network interface is reporting many transmit errors."
    "expr": |
      rate(node_network_transmit_errs_total[2m]) / rate(node_network_transmit_packets_total[2m]) > 0.01
    "for": "1h"
    "labels":
      "severity": "warning"
  - "alert": "NodeHighNumberConntrackEntriesUsed"
    "annotations":
      "description": "{{ $value | humanizePercentage }} of conntrack entries are used."
      "summary": "Number of conntrack are getting close to the limit."
    "expr": |
      (node_nf_conntrack_entries / node_nf_conntrack_entries_limit) > 0.75
    "labels":
      "severity": "warning"
  - "alert": "NodeTextFileCollectorScrapeError"
    "annotations":
      "description": "Node Exporter text file collector failed to scrape."
      "summary": "Node Exporter text file collector failed to scrape."
    "expr": |
      node_textfile_scrape_error{job="node"} == 1
    "labels":
      "severity": "warning"
  - "alert": "NodeClockSkewDetected"
    "annotations":
      "description": "Clock on {{ $labels.instance }} is out of sync by more than 0.05s. Ensure NTP is configured correctly on this host."
      "summary": "Clock skew detected."
    "expr": |
      (
        node_timex_offset_seconds > 0.05
      and
        deriv(node_timex_offset_seconds[5m]) >= 0
      )
      or
      (
        node_timex_offset_seconds < -0.05
      and
        deriv(node_timex_offset_seconds[5m]) <= 0
      )
    "for": "10m"
    "labels":
      "severity": "warning"
  - "alert": "NodeClockNotSynchronising"
    "annotations":
      "description": "Clock on {{ $labels.instance }} is not synchronising. Ensure NTP is configured on this host."
      "summary": "Clock not synchronising."
    "expr": |
      min_over_time(node_timex_sync_status[5m]) == 0
      and
      node_timex_maxerror_seconds >= 16
    "for": "10m"
    "labels":
      "severity": "warning"
  - "alert": "NodeRAIDDegraded"
    "annotations":
      "description": "RAID array '{{ $labels.device }}' on {{ $labels.instance }} is in degraded state due to one or more disks failures. Number of spare drives is insufficient to fix issue automatically."
      "summary": "RAID Array is degraded"
    "expr": |
      node_md_disks_required - ignoring (state) (node_md_disks{state="active"}) > 0
    "for": "15m"
    "labels":
      "severity": "critical"
  - "alert": "NodeRAIDDiskFailure"
    "annotations":
      "description": "At least one device in RAID array on {{ $labels.instance }} failed. Array '{{ $labels.device }}' needs attention and possibly a disk swap."
      "summary": "Failed device in RAID array"
    "expr": |
      node_md_disks{state="failed"} > 0
    "labels":
      "severity": "warning"
```

Applying the rules above

修改prometheus.yml:

rule_files:

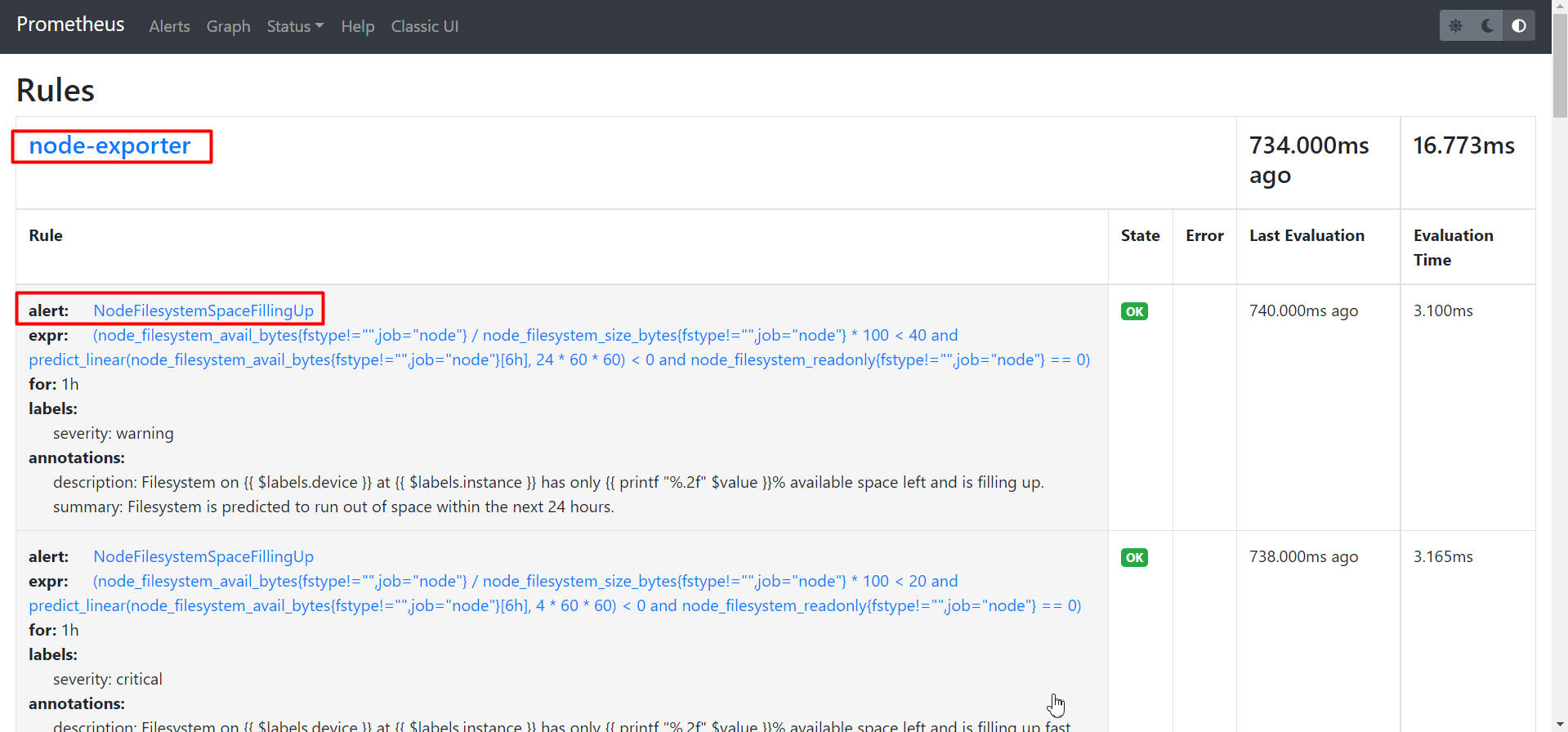

- "node_exporter_alerting_rules.yml"重启后,即可看到配置的规则:
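The FillingUp alerts configured above pair a free-space threshold with `predict_linear(v[6h], t)`. This function fits a straight line to the samples in the range by simple linear regression and extrapolates it `t` seconds ahead. The minimal Python sketch below performs the same calculation on invented free-space samples. It treats the last sample's timestamp as "now", whereas Prometheus anchors the fit at the evaluation time, so real results can differ slightly.

```python
def predict_linear(samples, seconds_ahead, now=None):
    """Least-squares line through (timestamp_s, value) samples, extrapolated.

    `now` defaults to the last sample's timestamp (an assumption of this
    sketch; Prometheus uses the rule evaluation time).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    if now is None:
        now = samples[-1][0]
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    slope = cov / var
    value_now = mean_v + slope * (now - mean_t)
    return value_now + slope * seconds_ahead


HOUR = 3600
GIB = 1024 ** 3
# Invented samples over 6 hours: free space drops by 1 GiB per hour from 10 GiB.
FREE = [(h * HOUR, (10 - h) * GIB) for h in range(7)]

if __name__ == "__main__":
    in_4h = predict_linear(FREE, 4 * HOUR)    # roughly 0 GiB
    in_24h = predict_linear(FREE, 24 * HOUR)  # roughly -20 GiB
    print(in_4h / GIB, in_24h / GIB)
    print("predicted to fill within 24h:", in_24h < 0)
```

With these numbers the 24-hour prediction is negative, so the predict_linear part of the warning-level NodeFilesystemSpaceFillingUp rule would hold. The alert still also needs less than 40% free space on a writable filesystem, sustained for 1h.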

All alerts are listed on the Alerts page:

4.6 Configuring Alert Notifications - Alertmanager

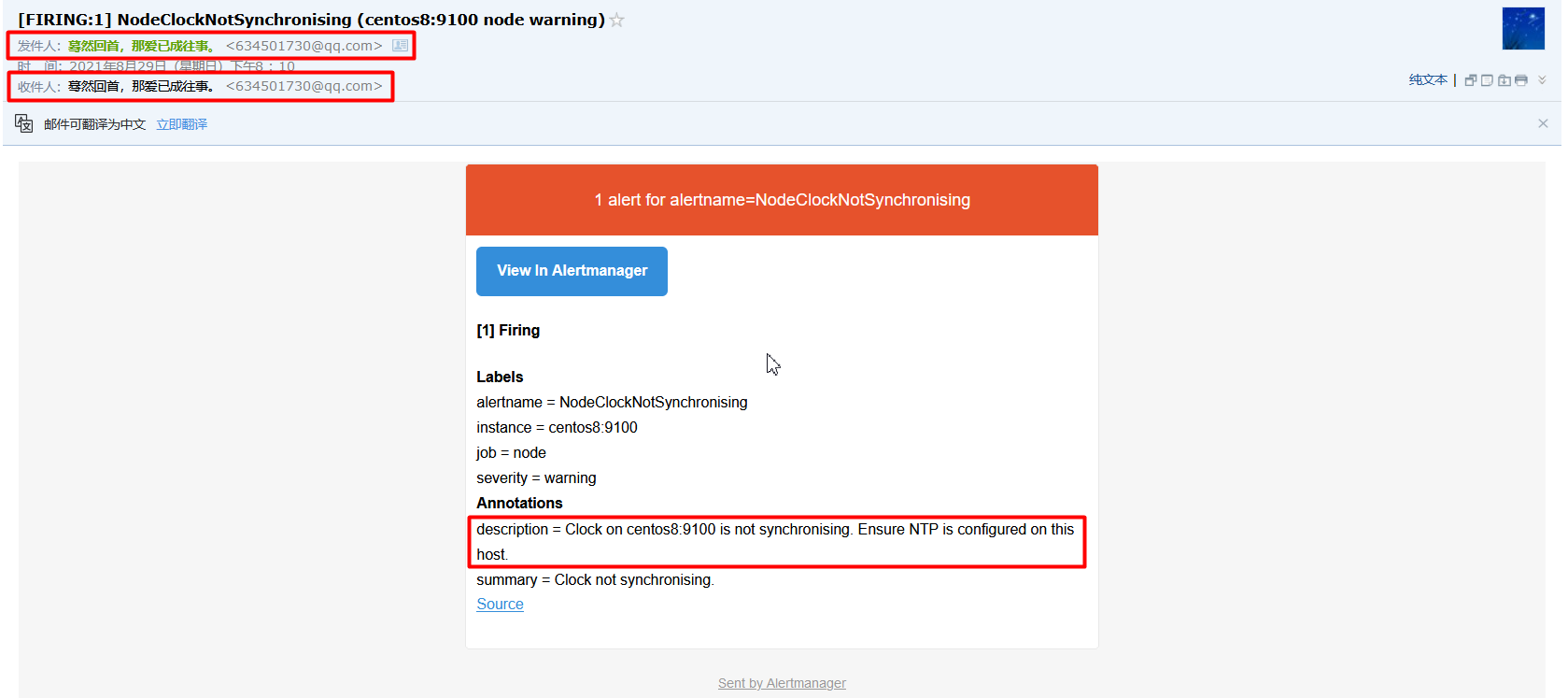

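Suppose a VM now raises an alert, for example because NTP clock synchronisation has not been set up on the centos8 VM. The notifications are sent through Alertmanager.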

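4.6.1 Alertmanager Overview

Components of Prometheus alerting

Alerting in Prometheus has two parts. Alerting rules are defined in the Prometheus server, which sends the resulting alerts to Alertmanager. Alertmanager then manages these alerts (deduplication, grouping, routing) and sends notifications through email, chat platforms and similar channels.

How alerting and notification are set up

Setting up alerting and notifications takes these steps:

- Define alerting rules in Prometheus
- Configure Prometheus (which acts as Alertmanager's client) to communicate with Alertmanager
- Start and configure Alertmanager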

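Core concepts in Alertmanager

- Grouping: combines alerts of a similar nature into a single notification. This helps during large outages, when many systems fail at once and hundreds or thousands of alerts fire simultaneously. For example, during a network failure many applications may lose their database connection. All of those alerts can be grouped into one notification.
- Inhibition: suppresses notifications for certain alerts when certain other alerts are already firing. For example, if an alert fires saying an entire cluster is unreachable, Alertmanager can mute all other alerts concerning that cluster. This prevents a flood of unrelated notifications.
- Silences: a straightforward way to mute alerts for a given time. A silence is defined with matchers. Every incoming alert is checked against them, and no notification is sent for an alert that matches an active silence.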
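Grouping and silences both come down to comparing alert labels. The minimal Python sketch below groups a few invented alerts by `alertname`, matching the `group_by: ['alertname']` used in alertmanager.yml in 4.6.3. It also checks them against a silence made only of equality matchers. It illustrates the idea only. Alertmanager's real implementation also handles timing, deduplication, routing trees and regex or negative matchers.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Bucket alerts by their group_by label values (one notification per bucket)."""
    groups = defaultdict(list)
    for alert in alerts:
        key = tuple((name, alert.get(name, "")) for name in group_by)
        groups[key].append(alert)
    return dict(groups)


def is_silenced(alert, matchers):
    """An equality-only silence mutes an alert whose labels match all matchers."""
    return all(alert.get(name, "") == value for name, value in matchers.items())


# Invented alerts, each represented by its label set.
ALERTS = [
    {"alertname": "NodeClockNotSynchronising", "instance": "centos8:9100"},
    {"alertname": "NodeFilesystemAlmostOutOfSpace", "instance": "centos7:9100"},
    {"alertname": "NodeFilesystemAlmostOutOfSpace", "instance": "centos8:9100"},
]

if __name__ == "__main__":
    for key, members in group_alerts(ALERTS, ["alertname"]).items():
        print(key, len(members))  # two groups: 1 clock alert, 2 filesystem alerts
    silence = {"instance": "centos8:9100"}  # hypothetical silence
    print([a["alertname"] for a in ALERTS if not is_silenced(a, silence)])
```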

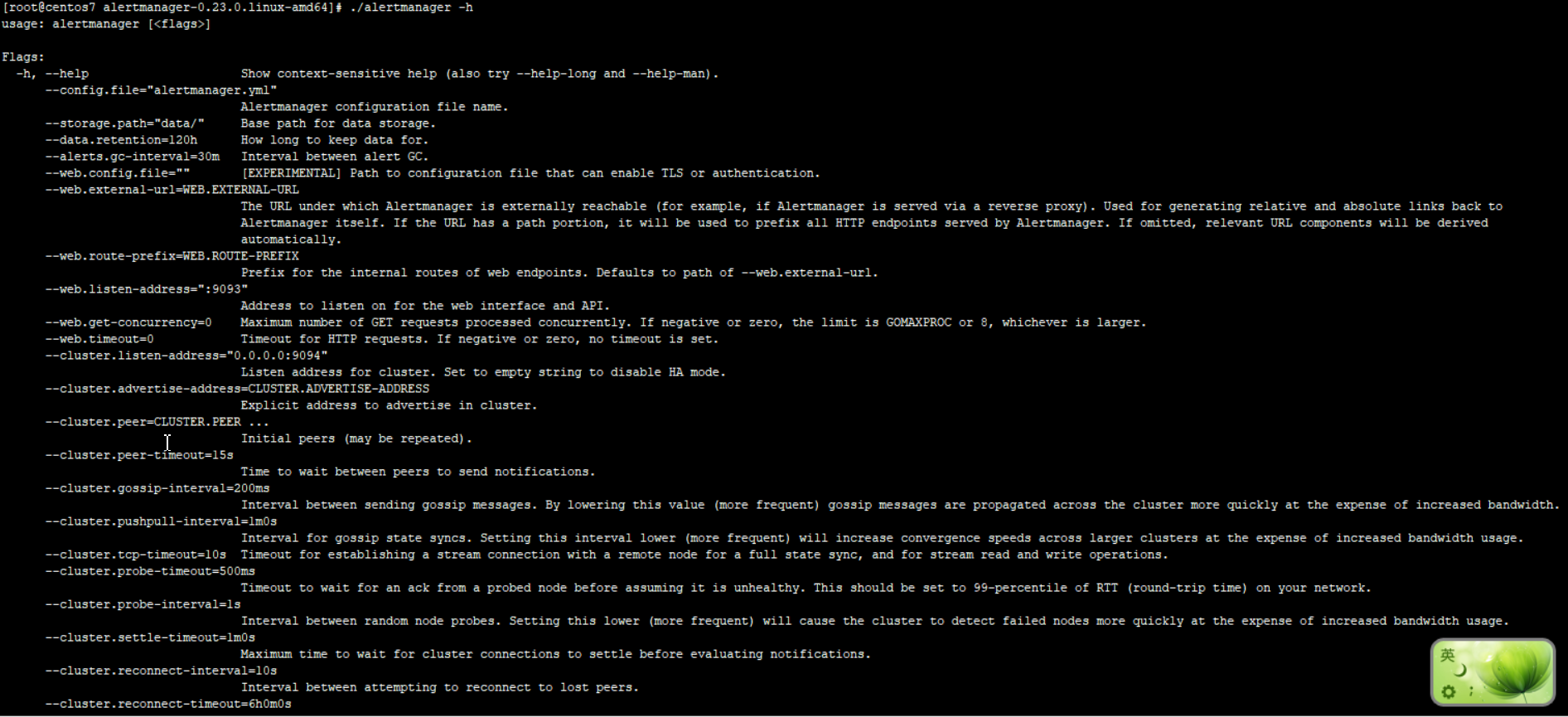

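Configuring Alertmanager

Alertmanager is configured through command-line flags (the `--` options on the start command) and a configuration file. The flags set immutable system parameters. The configuration file defines inhibition rules, notification routing and notification receivers.

Run `alertmanager -h` (i.e. `--help`) to see all available command-line flags: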

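Reloading the configuration at runtime

Alertmanager can reload its configuration at runtime. If the new configuration is not well-formed, the changes are not applied and an error is logged.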

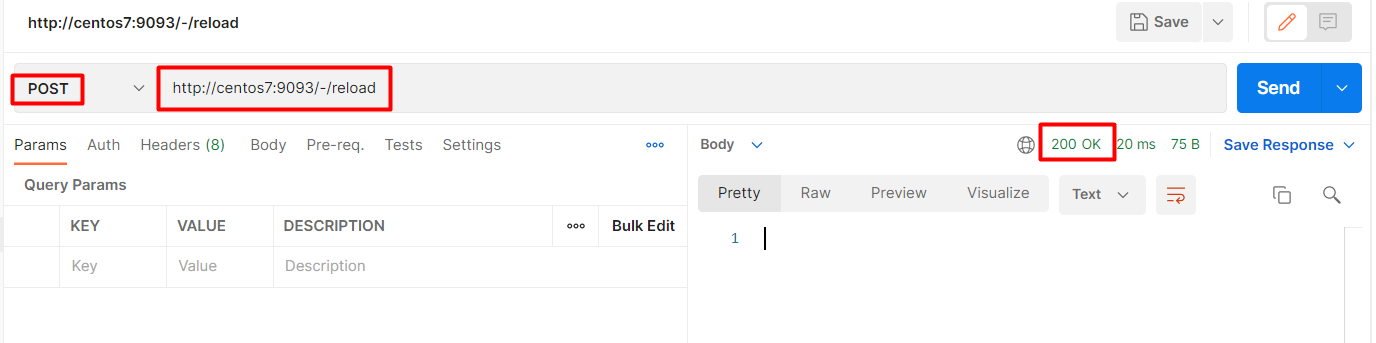

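There are two ways to trigger a reload. You can send SIGHUP to the process (for example `kill -HUP <pid>`), or send an HTTP POST request to the `/-/reload` endpoint. The figure shows the second method; afterwards the log contains "Completed loading of configuration file".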
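Both reload triggers can also be issued from a script. The minimal Python sketch below uses only the standard library. It assumes Alertmanager listens on localhost:9093 and that you know its process ID. `build_reload_request`, `reload_via_http` and `reload_via_signal` are hypothetical helper names. The HTTP variant POSTs an empty body to `/-/reload`; the signal variant is equivalent to `kill -HUP <pid>`.

```python
import os
import signal
import urllib.request


def build_reload_request(base_url="http://localhost:9093"):
    """Build the POST request for Alertmanager's /-/reload endpoint."""
    return urllib.request.Request(base_url.rstrip("/") + "/-/reload", data=b"", method="POST")


def reload_via_http(base_url="http://localhost:9093", timeout=5):
    """Send the reload request and return the HTTP status code.

    urllib raises HTTPError for error responses (e.g. an invalid config).
    """
    with urllib.request.urlopen(build_reload_request(base_url), timeout=timeout) as resp:
        return resp.status


def reload_via_signal(pid):
    """Send SIGHUP to the Alertmanager process (same as `kill -HUP <pid>`, POSIX only)."""
    os.kill(pid, signal.SIGHUP)


if __name__ == "__main__":
    req = build_reload_request()
    print(req.get_method(), req.full_url)  # POST http://localhost:9093/-/reload
```

To run an actual reload, call `reload_via_http()` while Alertmanager is running, or `reload_via_signal(pid)` with its process ID.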

4.6.2 Installing Alertmanager

```shell
# Create the alertmanager directory
mkdir /usr/local/alertmanager

# Change into it
cd /usr/local/alertmanager

# Download (hub.fastgit.org is a GitHub mirror; the same release path exists on github.com)
wget https://hub.fastgit.org/prometheus/alertmanager/releases/download/v0.23.0/alertmanager-0.23.0.linux-amd64.tar.gz

# Extract
tar -zxvf alertmanager-0.23.0.linux-amd64.tar.gz

# Change into the extracted directory
cd alertmanager-0.23.0.linux-amd64

# Start (not yet: the configuration file needs to be edited first)
# ./alertmanager --config.file=alertmanager.yml
```

4.6.3 Modifying alertmanager.yml

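This is a simple setup that sends email through QQ Mail. The sender is my own mailbox, 634501730@qq.com. To make testing easy, the recipient is the same address, so sender and recipient are the same mailbox: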

```yaml
global:
  # The default SMTP From header field.
  smtp_from: '634501730@qq.com'
  # The default SMTP smarthost used for sending emails, including port number.
  # Port number usually is 25, or 587 for SMTP over TLS (sometimes referred to as STARTTLS).
  # Example: smtp.example.org:587
  smtp_smarthost: 'smtp.qq.com:465'
  # The default hostname to identify to the SMTP server.
  #smtp_hello: <string> | default = "localhost"
  # SMTP Auth using CRAM-MD5, LOGIN and PLAIN. If empty, Alertmanager doesn't authenticate to the SMTP server.
  smtp_auth_username: '634501730@qq.com'
  # SMTP Auth using LOGIN and PLAIN.
  smtp_auth_password: 'the QQ Mail authorization code, NOT the mailbox password!'
  # SMTP Auth using PLAIN.
  #smtp_auth_identity: <string>
  # SMTP Auth using CRAM-MD5.
  #smtp_auth_secret: <secret>
  # The default SMTP TLS requirement.
  # Note that Go does not support unencrypted connections to remote SMTP endpoints.
  smtp_require_tls: false

templates:
#

route:
  group_by: ['alertname']
  group_wait: 30s
  group_interval: 5m
  # Repeats are only sent on group_interval ticks, so in practice the gap
  # between repeated notifications can be up to repeat_interval + group_interval.
  repeat_interval: 1h
  receiver: 'email'

receivers:
  - name: 'email'
    email_configs:
      - to: '634501730@qq.com'

inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']
```

Once the configuration is in place, start Alertmanager:

```shell
./alertmanager --config.file=alertmanager.yml
```

The startup log looks like this:

```text
caller=main.go:225 msg="Starting Alertmanager" version="(version=0.23.0, branch=HEAD, revision=61046b17771a57cfd4c4a51be370ab930a4d7d54)"
caller=main.go:226 build_context="(go=go1.16.7, user=root@e21a959be8d2, date=20210825-10:48:55)"
caller=cluster.go:184 component=cluster msg="setting advertise address explicitly" addr=192.168.31.245 port=9094
caller=cluster.go:671 component=cluster msg="Waiting for gossip to settle..." interval=2s
caller=coordinator.go:113 component=configuration msg="Loading configuration file" file=alertmanager.yml
caller=coordinator.go:126 component=configuration msg="Completed loading of configuration file" file=alertmanager.yml
caller=main.go:518 msg=Listening address=:9093
```
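The `inhibit_rules` entry in alertmanager.yml mutes a warning alert while a critical alert with the same `alertname`, `dev` and `instance` values is firing. For example, if both the 5% (warning) and the 3% (critical) NodeFilesystemAlmostOutOfSpace alerts fire for the same instance, only the critical one is notified. The Python sketch below shows that matching logic under simplifying assumptions: equality matchers only, and alerts given as plain label dictionaries with invented values. It illustrates the rule and does not reproduce Alertmanager's code.

```python
def matches(labels, matcher):
    """True if every label in the equality matcher has the same value."""
    return all(labels.get(k, "") == v for k, v in matcher.items())


def is_inhibited(target, firing_alerts, rule):
    """Apply one inhibit rule: is `target` muted by some firing source alert?"""
    if not matches(target, rule["target_match"]):
        return False
    for source in firing_alerts:
        if source is target or not matches(source, rule["source_match"]):
            continue
        # Labels listed in `equal` must have identical values (missing counts as "").
        if all(source.get(n, "") == target.get(n, "") for n in rule["equal"]):
            return True
    return False


# Mirrors the inhibit_rules block of alertmanager.yml.
RULE = {
    "source_match": {"severity": "critical"},
    "target_match": {"severity": "warning"},
    "equal": ["alertname", "dev", "instance"],
}

CRITICAL = {"alertname": "NodeFilesystemAlmostOutOfSpace", "instance": "centos7:9100", "severity": "critical"}
WARNING = {"alertname": "NodeFilesystemAlmostOutOfSpace", "instance": "centos7:9100", "severity": "warning"}
OTHER_HOST = {"alertname": "NodeFilesystemAlmostOutOfSpace", "instance": "centos8:9100", "severity": "warning"}

if __name__ == "__main__":
    firing = [CRITICAL, WARNING, OTHER_HOST]
    print(is_inhibited(WARNING, firing, RULE))     # True
    print(is_inhibited(OTHER_HOST, firing, RULE))  # False
```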

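4.6.4 Configuring Alertmanager in Prometheus

Alertmanager can be deployed as several instances, which is why the configuration below is a list. Only one instance is deployed here. It runs on the same host as Prometheus, so the address is localhost:9093. Modify prometheus.yml as follows:

```yaml
# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - localhost:9093
```

After making the change, restart Prometheus.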

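4.6.5 Checking the Alert Email

The alert email should now be in the mailbox:

The notification has arrived, but the underlying problem is still there, so Prometheus keeps showing the alert:

4.6.6 Resolving the Alert

The alert was raised because NTP clock synchronisation is not configured on the centos8 VM. Setting it up on centos8 clears the alert: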

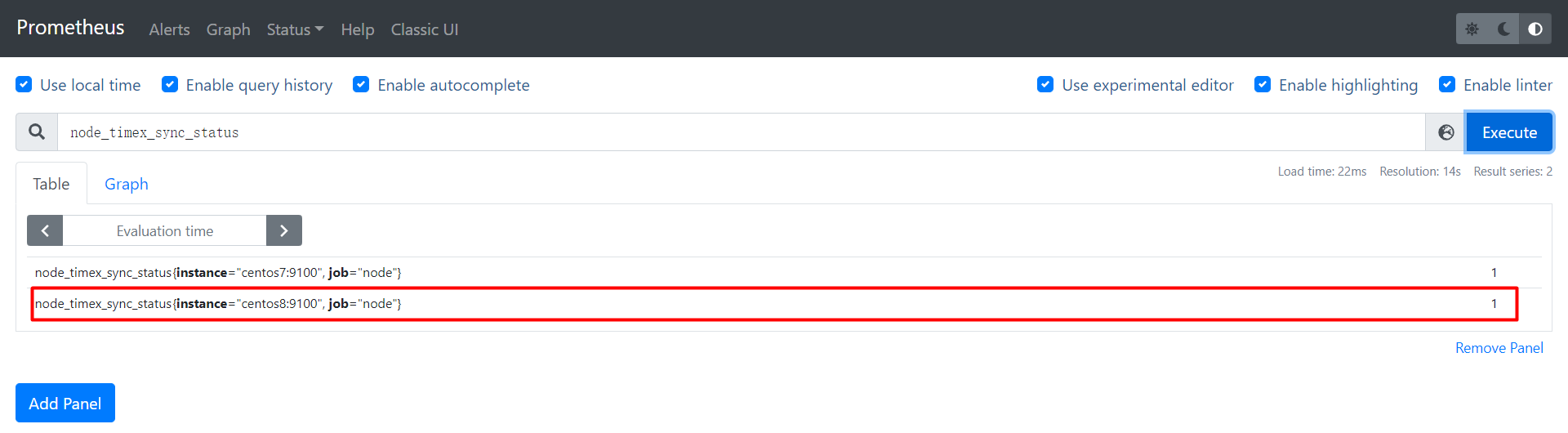

```shell
dnf install chrony
systemctl enable chronyd
```

Then reboot the server. After CentOS 8 comes back up, the sync status (node_timex_sync_status) changes to 1 and the alert disappears.
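The NodeClockNotSynchronising expression needs two things at once. First, `min_over_time(node_timex_sync_status[5m]) == 0`, meaning the status was 0 at least once in the last 5 minutes. Second, `node_timex_maxerror_seconds >= 16`. The condition must then hold for the 10-minute `for` before the alert fires. The minimal Python sketch below evaluates the same condition on invented sample values. It shows why the alert clears once chrony has synchronised the clock and the reported maximum error drops.

```python
def clock_not_synchronising(sync_status_5m, maxerror_seconds):
    """min_over_time(node_timex_sync_status[5m]) == 0 and node_timex_maxerror_seconds >= 16."""
    return min(sync_status_5m) == 0 and maxerror_seconds >= 16


if __name__ == "__main__":
    # Invented values before chrony: never synchronised, large max error.
    print(clock_not_synchronising([0, 0, 0, 0, 0], 16.0))  # True
    # Invented values after chrony: synchronised, small max error.
    print(clock_not_synchronising([1, 1, 1, 1, 1], 0.5))   # False
```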

This completes an end-to-end Prometheus monitoring workflow.