Kubernetes

Environment

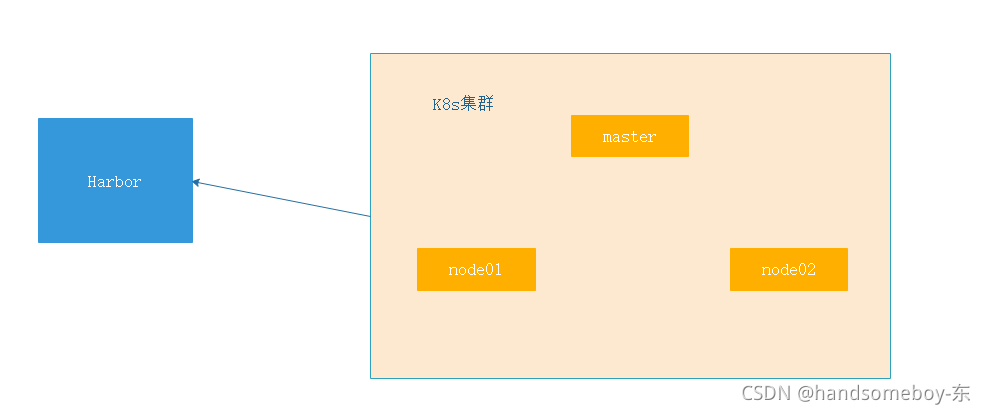

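Requirements: three hosts form a K8S cluster, a private Harbor image registry runs on a separate host outside the cluster, and the K8S cluster is installed with kubeadm.

master (2C/4G; kubeadm requires at least 2 CPU cores) 192.168.118.11 docker, kubeadm, kubelet, kubectl, flannel

node1 (2C/2G) 192.168.118.22 docker, kubeadm, kubelet, kubectl, flannel

node2 (2C/2G) 192.168.118.88 docker, kubeadm, kubelet, kubectl, flannel

Harbor node (harbor) 192.168.118.33 docker, docker-compose, harbor-offline-installer-v1.2.2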

- Set the hostname (run the matching command on each host)

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

hostnamectl set-hostname harbor

Deploying a single-master cluster with kubeadm

Run the following on all three cluster nodes (only the master is shown here)

##Disable the firewall and SELinux

[root@localhost ~]# hostnamectl set-hostname master

[root@localhost ~]# su

[root@master ~]# systemctl stop firewalld

[root@master ~]# systemctl disable firewalld

[root@master ~]# setenforce 0

[root@master ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@master ~]# iptables -F

[root@master ~]# swapoff -a

[root@master ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab
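The sed expression above prefixes a "#" to every line that contains "swap" anywhere, including lines that are already commented out. A slightly more targeted sketch, assuming the usual fstab layout where the third field is the filesystem type, comments out only active entries whose type is swap and keeps a backup first (`disable_swap_entries` is a hypothetical helper, not part of any package):

```bash
#!/usr/bin/env bash
# disable_swap_entries: hypothetical helper, a sketch rather than a drop-in tool.
# Comments out active fstab entries whose 3rd field (fs type) is "swap".
# Assumes a standard whitespace-separated fstab; writes FILE.bak first.
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "${fstab}.bak" || return 1
  # Lines that start with optional whitespace and "#" are already comments: keep them.
  awk '($0 !~ /^[[:space:]]*#/ && $3 == "swap") { print "#" $0; next } { print }' \
    "${fstab}.bak" > "$fstab"
}
```

Typical use is `swapoff -a && disable_swap_entries /etc/fstab`: `swapoff -a` turns swap off now, and the fstab edit keeps it off after a reboot.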

##Load the IPVS kernel modules available for the running kernel

[root@master ~]# for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done

ip_vs_dh

ip_vs_ftp

ip_vs

ip_vs_lblc

ip_vs_lblcr

ip_vs_lc

ip_vs_nq

ip_vs_pe_sip

ip_vs_rr

ip_vs_sed

ip_vs_sh

ip_vs_wlc

ip_vs_wrr
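The loop above prints every module name it finds, whether or not `modprobe` succeeded, and modules loaded this way are gone after a reboot. A small check sketch compares a list of module names against the first column of /proc/modules (`missing_modules` is a hypothetical helper). To load the modules at boot, one option is to list them one per line in a file under /etc/modules-load.d/ (the file name, for example ipvs.conf, is an assumption):

```bash
#!/usr/bin/env bash
# missing_modules: hypothetical helper.
# Usage: missing_modules PROC_MODULES_FILE MODULE...
# Prints each MODULE that does not appear in the first column of the
# /proc/modules-style file, one per line.
missing_modules() {
  local proc_modules="$1"; shift
  local mod
  for mod in "$@"; do
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$proc_modules" \
      || echo "$mod"
  done
}
```

For example, `missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh` prints nothing when all four are loaded.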

[root@master ~]# vim /etc/hosts #add hostname-to-IP mappings

192.168.118.11 master

192.168.118.22 node1

192.168.118.88 node2

192.168.118.33 harbor
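Editing /etc/hosts by hand on every node is easy to get wrong, and appending blindly with `cat >>` duplicates lines when the step is repeated. A minimal idempotent sketch appends an "IP hostname" line only if that exact line is not already present (`add_host_entries` is a hypothetical helper; it does not detect entries that differ only in whitespace):

```bash
#!/usr/bin/env bash
# add_host_entries: hypothetical helper.
# Usage: add_host_entries HOSTS_FILE "IP NAME"...
# Appends each entry only when the identical line is not already in the file,
# so running it twice leaves the file unchanged.
add_host_entries() {
  local hosts_file="$1"; shift
  local entry
  for entry in "$@"; do
    # -x: whole-line match, -F: fixed string (dots in IPs are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" 2>/dev/null \
      || printf '%s\n' "$entry" >> "$hosts_file"
  done
}
```

Usage with this guide's addresses: `add_host_entries /etc/hosts "192.168.118.11 master" "192.168.118.22 node1" "192.168.118.88 node2" "192.168.118.33 harbor"`.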

##Adjust kernel parameters

[root@master ~]# cat > /etc/sysctl.d/kubernetes.conf << EOF

> net.bridge.bridge-nf-call-ip6tables=1

> net.bridge.bridge-nf-call-iptables=1

> net.ipv6.conf.all.disable_ipv6=1

> net.ipv4.ip_forward=1

> EOF

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl --system
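The net.bridge.* keys only exist under /proc/sys/net/bridge once the br_netfilter module is loaded; otherwise `sysctl --system` prints a "cannot stat" error for them and the setting is not applied. A sketch for confirming that the values actually took effect reads them straight from the /proc/sys tree (`check_sysctls` is a hypothetical helper; the root directory is a parameter so it can also be tried against a scratch directory):

```bash
#!/usr/bin/env bash
# check_sysctls: hypothetical helper.
# Usage: check_sysctls ROOT KEY=VALUE...
# ROOT is normally /proc/sys; a key such as net.ipv4.ip_forward maps to
# ROOT/net/ipv4/ip_forward. Prints MISSING/MISMATCH lines, returns 1 on any.
check_sysctls() {
  local root="$1"; shift
  local pair key want path have rc=0
  for pair in "$@"; do
    key="${pair%%=*}"
    want="${pair#*=}"
    path="$root/${key//.//}"        # replace every "." with "/"
    if [ ! -r "$path" ]; then
      echo "MISSING $key"
      rc=1
      continue
    fi
    have="$(cat "$path")"
    if [ "$have" != "$want" ]; then
      echo "MISMATCH $key=$have (want $want)"
      rc=1
    fi
  done
  return "$rc"
}
```

On a node: `check_sysctls /proc/sys net.bridge.bridge-nf-call-ip6tables=1 net.bridge.bridge-nf-call-iptables=1 net.ipv4.ip_forward=1`.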

Install Docker

[root@master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@master ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Loaded plugins: fastestmirror, langpacks

adding repo from: https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

grabbing file https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo

repo saved to /etc/yum.repos.d/docker-ce.repo

[root@master ~]# yum install -y docker-ce

[root@master ~]# systemctl start docker

[root@master ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master ~]#

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker

[root@master ~]# docker --version

Docker version 20.10.8, build 3967b7d

Install kubeadm, kubelet and kubectl

[root@master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@master ~]# yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0

[root@master ~]# rpm -qa | grep kube

kubectl-1.15.0-0.x86_64

kubernetes-cni-0.8.7-0.x86_64

kubelet-1.15.0-0.x86_64

kubeadm-1.15.0-0.x86_64
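kubeadm init is run with --kubernetes-version v1.15.0, so keeping kubeadm, kubelet and kubectl on that same version avoids version-skew surprises. A small sketch checks `rpm -qa | grep kube` output for that (`kube_pkg_versions_match` is a hypothetical helper; it assumes the NAME-VERSION-RELEASE.ARCH naming shown above and ignores kubernetes-cni, which is versioned separately):

```bash
#!/usr/bin/env bash
# kube_pkg_versions_match: hypothetical helper.
# Usage: rpm -qa | grep kube | kube_pkg_versions_match 1.15.0
# Reads package names such as kubeadm-1.15.0-0.x86_64 on stdin and reports
# any kubeadm/kubelet/kubectl whose version differs from the wanted one.
kube_pkg_versions_match() {
  local want="$1" line name ver rc=0 seen=0
  while IFS= read -r line; do
    case "$line" in
      kubeadm-*|kubelet-*|kubectl-*)
        seen=$((seen + 1))
        name="${line%%-*}"          # text before the first "-"
        ver="${line#*-}"            # drop "name-"
        ver="${ver%%-*}"            # drop "-release.arch"
        if [ "$ver" != "$want" ]; then
          echo "$name is $ver, expected $want"
          rc=1
        fi
        ;;
    esac
  done
  if [ "$seen" -eq 0 ]; then
    echo "no kubeadm/kubelet/kubectl packages in input"
    rc=1
  fi
  return "$rc"
}
```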

[root@master ~]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Configuration on the master

[root@master ~]# mkdir k8s && cd k8s

##Initialize the Kubernetes control plane

[root@master k8s]# kubeadm init \

> --apiserver-advertise-address=192.168.118.11 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.15.0 \

> --service-cidr=10.1.0.0/16 \

> --pod-network-cidr=10.244.0.0/16

##The end of the kubeadm init output prints the kubeadm join command (token and CA cert hash) that the worker nodes use later; save it. Then set up kubectl access for the current user:

[root@master k8s]# mkdir -p $HOME/.kube

[root@master k8s]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master k8s]# chown $(id -u):$(id -g) $HOME/.kube/config
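The same init settings can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long flag list. A sketch that writes such a file, assuming kubeadm 1.15 and its kubeadm.k8s.io/v1beta2 config API; the file name and the helper `write_kubeadm_config` are hypothetical, and the values are the ones passed as flags above:

```bash
#!/usr/bin/env bash
# write_kubeadm_config: hypothetical helper; a sketch for kubeadm 1.15 (v1beta2 API).
# Each value mirrors one of the kubeadm init flags used in this guide.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.118.11   # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.0           # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.1.0.0/16         # --service-cidr
  podSubnet: 10.244.0.0/16           # --pod-network-cidr (flannel's default Network)
EOF
}
```

Usage: `write_kubeadm_config && kubeadm config images pull --config kubeadm-config.yaml && kubeadm init --config kubeadm-config.yaml`. Pulling the images first separates registry problems from init problems.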

##Install the pod network add-on (flannel)

[root@master k8s]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

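##Note: the command below applies a second, newer flannel manifest from the master branch. It creates a DaemonSet named kube-flannel-ds alongside the kube-flannel-ds-amd64 one created above, which is why both sets of flannel pods appear in the pod list later. Normally one of the two manifests is enough.

[root@master k8s]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml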

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel configured

clusterrolebinding.rbac.authorization.k8s.io/flannel configured

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg configured

daemonset.apps/kube-flannel-ds created
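Flannel assigns pod addresses from the "Network" value in the net-conf.json section of its manifest, and that value has to match the --pod-network-cidr given to kubeadm init (10.244.0.0/16 here). A sketch that checks a downloaded copy of the manifest before applying it (`flannel_network` and `check_flannel_cidr` are hypothetical helpers; they assume the `"Network": "CIDR"` layout of kube-flannel.yml):

```bash
#!/usr/bin/env bash
# flannel_network / check_flannel_cidr: hypothetical helpers.
# flannel_network MANIFEST prints the first "Network" value found in it.
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" | head -n 1 | sed 's/.*: *"\([^"]*\)"/\1/'
}

# check_flannel_cidr MANIFEST POD_CIDR returns 0 only when both agree.
check_flannel_cidr() {
  local manifest="$1" pod_cidr="$2" net
  net="$(flannel_network "$manifest")"
  if [ "$net" = "$pod_cidr" ]; then
    echo "OK: flannel Network $net matches the pod CIDR"
  else
    echo "MISMATCH: flannel Network '${net}' vs pod CIDR '${pod_cidr}'" >&2
    return 1
  fi
}
```

For example: `curl -fsSL -o kube-flannel.yml <manifest URL> && check_flannel_cidr kube-flannel.yml 10.244.0.0/16 && kubectl apply -f kube-flannel.yml`.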

##Check component status and node status

[root@master k8s]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@master k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 8m24s v1.15.0

Worker node configuration (only node1 is shown)

##Pull the flannel image

[root@node1 ~]# docker pull lizhenliang/flannel:v0.11.0-amd64

v0.11.0-amd64: Pulling from lizhenliang/flannel

cd784148e348: Pulling fs layer

cd784148e348: Pull complete

04ac94e9255c: Pull complete

e10b013543eb: Pull complete

005e31e443b1: Pull complete

74f794f05817: Pull complete

Digest: sha256:bd76b84c74ad70368a2341c2402841b75950df881388e43fc2aca000c546653a

Status: Downloaded newer image for lizhenliang/flannel:v0.11.0-amd64

docker.io/lizhenliang/flannel:v0.11.0-amd64

##Join the node to the cluster

[root@node1 ~]# kubeadm join 192.168.118.11:6443 --token ni3wlu.6zd1t4aa91hq0k58 \

> --discovery-token-ca-cert-hash sha256:d19d7174b4238d5a454ffe3e74aaec561b9bd2bb5a4123fd044c095dcfe9f355
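The bootstrap token in the join command expires after 24 hours by default; on the master, `kubeadm token create --print-join-command` prints a fresh, complete join command. The --discovery-token-ca-cert-hash value can also be recomputed from the cluster CA certificate with the openssl pipeline given in the kubeadm documentation. A sketch wrapping it in a hypothetical helper `ca_cert_hash` (it assumes an RSA CA key, which is what kubeadm generates by default):

```bash
#!/usr/bin/env bash
# ca_cert_hash: hypothetical helper.
# Prints "sha256:<hex>" = SHA-256 of the DER-encoded public key of the CA cert,
# the format expected by kubeadm join --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex="$(openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')" || return 1     # keep only the hex digest
  printf 'sha256:%s\n' "$hex"
}
```

Run on the master: `ca_cert_hash` prints the value to pass after --discovery-token-ca-cert-hash.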

Check the status on the master

[root@master k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 16m v1.15.0

node1 Ready <none> 2m23s v1.15.0

node2 Ready <none> 2m23s v1.15.0

##Label the worker nodes

[root@master k8s]# kubectl label node node1 node-role.kubernetes.io/node=node

node/node1 labeled

[root@master k8s]# kubectl label node node2 node-role.kubernetes.io/node=node

node/node2 labeled

##Check pod status with kubectl get pods -n kube-system; "1/1 Running" means the pod is healthy

[root@master k8s]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-trdxh 1/1 Running 0 19m

coredns-bccdc95cf-tzxlw 1/1 Running 0 19m

etcd-master 1/1 Running 0 18m

kube-apiserver-master 1/1 Running 0 18m

kube-controller-manager-master 1/1 Running 0 18m

kube-flannel-ds-5drcp 1/1 Running 0 6m4s

kube-flannel-ds-6vvkc 0/1 Init:ImagePullBackOff 0 13m

kube-flannel-ds-amd64-89qxn 1/1 Running 0 14m

kube-flannel-ds-amd64-mvphr 1/1 Running 1 6m4s

kube-flannel-ds-amd64-whgr7 1/1 Running 0 6m4s

kube-flannel-ds-qxbn8 1/1 Running 0 6m4s

kube-proxy-7hxqt 1/1 Running 0 6m4s

kube-proxy-dmmmk 1/1 Running 0 6m4s

kube-proxy-fh578 1/1 Running 0 19m

kube-scheduler-master 1/1 Running 0 18m
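In the list above one flannel pod is stuck in Init:ImagePullBackOff, which is easy to miss in a long listing. A sketch that filters `kubectl get pods` output down to pods that are not Running or not fully ready (`not_ready_pods` is a hypothetical helper; it assumes the default column order NAME READY STATUS, and it would also list Completed job pods):

```bash
#!/usr/bin/env bash
# not_ready_pods: hypothetical helper.
# Usage: kubectl get pods -n kube-system | not_ready_pods
# Skips the header line and prints NAME READY STATUS for every pod whose
# STATUS is not Running or whose READY count is below the total (e.g. 0/1).
not_ready_pods() {
  awk 'NR > 1 && NF >= 3 {
         split($2, r, "/")
         if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
       }'
}
```

With the listing above it would print only `kube-flannel-ds-6vvkc 0/1 Init:ImagePullBackOff`; `kubectl describe pod kube-flannel-ds-6vvkc -n kube-system` then shows which image could not be pulled.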


Deploying the Harbor registry

[root@harbor ~]# cat <<EOF >>/etc/hosts ##add hostname-to-IP mappings

> 192.168.118.11 master

> 192.168.118.22 node1

> 192.168.118.88 node2

> 192.168.118.33 harbor

> EOF

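##Disable the firewall and SELinux

[root@harbor ~]# systemctl stop firewalld

[root@harbor ~]# systemctl disable firewalld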

[root@harbor ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@harbor ~]# setenforce 0

##Enable IP forwarding

[root@harbor ~]# echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

[root@harbor ~]# sysctl -p

net.ipv4.ip_forward = 1

Install Docker

[root@harbor ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@harbor ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@harbor ~]# yum install -y docker-ce

[root@harbor ~]# systemctl start docker

[root@harbor ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

##Upload the docker-compose binary and the Harbor offline installer archive to /opt

[root@harbor ~]# cd /opt

[root@harbor opt]# cp docker-compose-Linux-x86_64 /usr/local/bin/docker-compose

[root@harbor opt]# chmod +x /usr/local/bin/docker-compose

[root@harbor opt]# tar zxvf harbor-offline-installer-v1.2.2.tgz -C /usr/local

[root@harbor opt]# cd /usr/local/harbor/

##Set Harbor's hostname to this node's IP (the sed below edits the hostname setting on line 5 of harbor.cfg)

[root@harbor harbor]# sed -i "5s/reg.mydomain.com/192.168.118.33/" /usr/local/harbor/harbor.cfg
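The sed above only works if the hostname setting is exactly on line 5 of harbor.cfg. A sketch that matches the setting by key instead, assuming the `hostname = value` syntax of Harbor 1.x's harbor.cfg (`set_harbor_hostname` is a hypothetical helper, and it relies on GNU sed's `-i`):

```bash
#!/usr/bin/env bash
# set_harbor_hostname: hypothetical helper.
# Usage: set_harbor_hostname CFG HOST
# Rewrites the "hostname = ..." line wherever it is, then confirms the result.
set_harbor_hostname() {
  local cfg="$1" host="$2"
  sed -i "s/^hostname[[:space:]]*=.*/hostname = ${host}/" "$cfg"
  grep -q "^hostname = ${host}\$" "$cfg"
}
```

Usage: `set_harbor_hostname /usr/local/harbor/harbor.cfg 192.168.118.33`. Commented lines such as `#The IP address or hostname ...` are left untouched because they do not start with `hostname`.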

##Run the installer

[root@harbor harbor]# sh /usr/local/harbor/install.sh

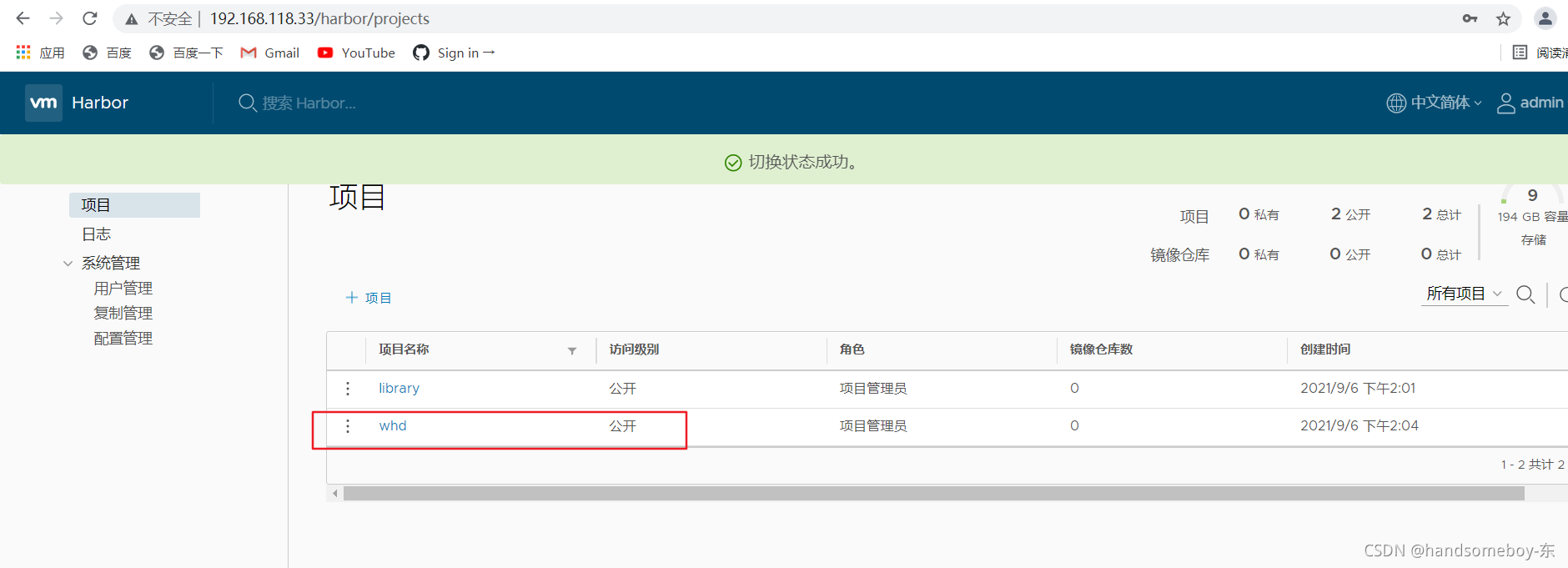

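- Log in to the web UI at 192.168.118.33 (default account admin, password Harbor12345) and create a public project named whd

- On the master, clone the source code, build an image, and push it to Harbor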

[root@master ~]# yum install git -y

[root@master ~]# git clone https://github.com/otale/tale.git

Cloning into 'tale'...

remote: Enumerating objects: 6759, done.

remote: Counting objects: 100% (3/3), done.

remote: Compressing objects: 100% (3/3), done.

remote: Total 6759 (delta 0), reused 0 (delta 0), pack-reused 6756

Receiving objects: 100% (6759/6759), 27.13 MiB | 2.09 MiB/s, done.

Resolving deltas: 100% (3532/3532), done.

[root@master ~]# mkdir dockerfile && cd dockerfile

[root@master dockerfile]# cp -r /root/tale /root/dockerfile

[root@master dockerfile]# touch Dockerfile

[root@master dockerfile]# cat << EOF >> /root/dockerfile/Dockerfile

> FROM docker.io/centos:7

> RUN yum install wget curl curl-devel -y

> RUN wget -c --header "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u131-b11/d54c1d3a095b4ff2b6607d096fa80163/jdk-8u131-linux-x64.rpm

> RUN yum localinstall -y jdk-8u131-linux-x64.rpm

> ADD tale tale

> RUN cd tale/ && sh install.sh

> RUN cd tale/tale && chmod +x tool

> EXPOSE 9000

> EOF

[root@master dockerfile]# docker build -t="centos:tale" . ##build the image from the Dockerfile

(The following lines are the closing output of the Harbor install.sh run in the Harbor section above, not of docker build.)

✔ ----Harbor has been installed and started successfully.----

Now you should be able to visit the admin portal at http://192.168.118.33.

For more details, please visit https://github.com/vmware/harbor .

[root@master dockerfile]# docker run -dit -p 9000:9000 centos:tale /bin/bash

[root@master dockerfile]# docker exec -it <container-id> /bin/bash ##use the container ID printed by docker run (bd455ebc28d1 in this run)

[root@bd455ebc28d1 /]# cd tale/tale/

[root@bd455ebc28d1 tale]# ls

lib resources tale-latest.jar tool

[root@bd455ebc28d1 tale]# sh -x tool start

+ APP_NAME=tale

+ JAVA_OPTS='-Xms256m -Xmx256m -Dfile.encoding=UTF-8'

+ psid=0

+ REMOVE_LOCAL_THEME=1

+ case "$1" in

+ start

+ checkpid

++ pgrep -f tale-latest

+ javaps=

+ '[' -n '' ']'

+ psid=0

+ '[' 0 -ne 0 ']'

+ echo 'Starting tale ...'

Starting tale ...

+ sleep 1

+ nohup java -Xms256m -Xmx256m -Dfile.encoding=UTF-8 -jar tale-latest.jar --app.env=prod

+ checkpid

++ pgrep -f tale-latest

+ javaps=33

+ '[' -n 33 ']'

+ psid=33

+ '[' 33 -ne 0 ']'

+ echo '(pid=33) [OK]'

(pid=33) [OK]

+ exit 0

[root@bd455ebc28d1 tale]# exit

- Browse to 192.168.118.11:9000

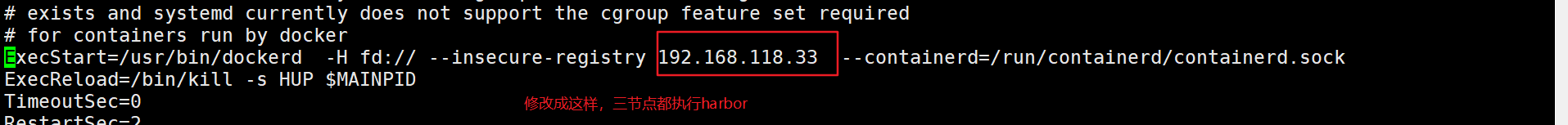

- On all three K8S nodes, point Docker at the Harbor registry by editing /usr/lib/systemd/system/docker.service (only the master is shown here)

[root@master dockerfile]# vim /usr/lib/systemd/system/docker.service

[root@master dockerfile]# systemctl daemon-reload

[root@master dockerfile]# systemctl restart docker
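Because this Harbor is served over plain HTTP, Docker has to treat 192.168.118.33 as an insecure registry. Instead of editing docker.service, the same setting can live in /etc/docker/daemon.json under the "insecure-registries" key. Use only one of the two places: Docker refuses to start when the same option is set both as a daemon flag and in daemon.json. A sketch, with `write_insecure_registry_conf` as a hypothetical helper that refuses to overwrite an existing non-empty daemon.json:

```bash
#!/usr/bin/env bash
# write_insecure_registry_conf: hypothetical helper.
# Usage: write_insecure_registry_conf REGISTRY [DAEMON_JSON]
# Writes a daemon.json that only declares REGISTRY as insecure (plain HTTP).
# Existing non-empty files are left alone so other settings are not lost.
write_insecure_registry_conf() {
  local registry="$1" conf="${2:-/etc/docker/daemon.json}"
  if [ -s "$conf" ]; then
    echo "refusing to overwrite non-empty $conf; merge the setting by hand" >&2
    return 1
  fi
  mkdir -p "$(dirname "$conf")"
  cat > "$conf" <<EOF
{
  "insecure-registries": ["$registry"]
}
EOF
}
```

After `write_insecure_registry_conf 192.168.118.33`, run `systemctl daemon-reload && systemctl restart docker`; `docker info` should then list 192.168.118.33 under "Insecure Registries".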

- Push the image from the master to Harbor

[root@master dockerfile]# docker login -u admin -p Harbor12345 http://192.168.118.33

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

##Tag the tale image for the Harbor project

[root@master dockerfile]# docker tag centos:tale 192.168.118.33/whd/centos:tale

##Push the image, then check it in the Harbor web UI

[root@master dockerfile]# docker push 192.168.118.33/whd/centos:tale

The push refers to repository [192.168.118.33/whd/centos]

7674b1f519d8: Pushed

50be6e724ae5: Pushed

6bb60525b2d0: Pushed

9cd5d03a6213: Pushed

3904c1aa1c91: Pushed

84dff87df527: Pushed

174f56854903: Pushed

tale: digest: sha256:4114720b9c6684a1809eb8bd3f293474b5bda7c09a6c337b7558ec468c34ef17 size: 1799
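The tag-then-push pair follows a fixed pattern: REGISTRY/PROJECT/IMAGE:TAG. A sketch that derives the Harbor name from the local image name (`push_to_harbor` is a hypothetical helper). For logging in without the "--password via the CLI is insecure" warning shown above, `docker login --password-stdin` reads the password from standard input, for example `printf '%s' "$HARBOR_PASSWORD" | docker login --username admin --password-stdin 192.168.118.33`, where HARBOR_PASSWORD is a hypothetical environment variable:

```bash
#!/usr/bin/env bash
# push_to_harbor: hypothetical helper.
# Usage: push_to_harbor LOCAL_IMAGE REGISTRY PROJECT
# e.g. centos:tale 192.168.118.33 whd -> 192.168.118.33/whd/centos:tale
push_to_harbor() {
  if [ "$#" -ne 3 ]; then
    echo "usage: push_to_harbor LOCAL_IMAGE REGISTRY PROJECT" >&2
    return 2
  fi
  local src="$1" registry="$2" project="$3"
  local dest="$registry/$project/$src"
  docker tag "$src" "$dest" && docker push "$dest"
}
```

Usage: `push_to_harbor centos:tale 192.168.118.33 whd` runs the same docker tag and docker push commands as above.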

- Log out of the registry

[root@master dockerfile]# docker logout 192.168.118.33

Removing login credentials for 192.168.118.33

- On the master, deploy the Java application to K8S (a Deployment plus a NodePort Service)

[root@master dockerfile]# cd tale

[root@master tale]# touch tale.yaml

[root@master tale]# cat << EOF >> tale.yaml
> apiVersion: apps/v1
> kind: Deployment
> metadata:
>   name: tale-deployment
>   labels:
>     app: tale
> spec:
>   replicas: 1
>   selector:
>     matchLabels:
>       app: tale
>   template:
>     metadata:
>       labels:
>         app: tale
>     spec:
>       containers:
>       - name: tale
>         image: 192.168.118.33/whd/centos:tale
>         command: ["/bin/bash", "-ce", "tail -f /dev/null"]
>         ports:
>         - containerPort: 9000
> EOF

[root@master tale]# touch tale-service.yaml

[root@master tale]# cat <<EOF >> tale-service.yaml
> apiVersion: v1
> kind: Service
> metadata:
>   name: tale-service
>   labels:
>     app: tale
> spec:
>   type: NodePort
>   ports:
>   - port: 80
>     targetPort: 9000
>   selector:
>     app: tale
> EOF
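The Deployment above keeps the container alive with `tail -f /dev/null`, so tale has to be started by hand with kubectl exec, and it does not come back on its own when the pod is recreated. A hedged alternative sketch runs the JVM as the container's main process, reusing the java options the tool script printed earlier. It assumes the jar sits at /tale/tale/tale-latest.jar inside the image (as the earlier ls showed) and that java is on the PATH; it has not been tested against this image, and `write_tale_deployment` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# write_tale_deployment: hypothetical helper; an untested variant of tale.yaml.
# Runs tale in the foreground so Kubernetes restarts it if it exits.
# Assumption: /tale/tale/tale-latest.jar exists in the image and java is on PATH.
write_tale_deployment() {
  local out="${1:-tale-foreground.yaml}"
  cat > "$out" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tale-deployment
  labels:
    app: tale
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tale
  template:
    metadata:
      labels:
        app: tale
    spec:
      containers:
      - name: tale
        image: 192.168.118.33/whd/centos:tale
        workingDir: /tale/tale
        command: ["java", "-Xms256m", "-Xmx256m", "-Dfile.encoding=UTF-8", "-jar", "tale-latest.jar", "--app.env=prod"]
        ports:
        - containerPort: 9000
EOF
}
```

Because the Deployment name is the same, `write_tale_deployment && kubectl apply -f tale-foreground.yaml` updates the existing tale-deployment in place; the Service is unchanged.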

##Deploy

[root@master tale]# kubectl apply -f tale.yaml

deployment.apps/tale-deployment created

[root@master tale]# kubectl apply -f tale-service.yaml

service/tale-service created

##Check

[root@master tale]# kubectl get po,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/tale-deployment-77c46764cb-bnqxt 1/1 Running 0 46s 10.244.1.2 node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 17h <none>

service/tale-service NodePort 10.1.130.91 <none> 80:31496/TCP 45s app=tale

[root@master tale]# kubectl exec -it pod/tale-deployment-77c46764cb-bnqxt -- /bin/bash

[root@tale-deployment-77c46764cb-bnqxt /]# cd tale/tale

[root@tale-deployment-77c46764cb-bnqxt tale]# sh tool start

Starting tale ...

(pid=25) [OK]

Browse to http://192.168.118.22:31496 using node1's IP; a NodePort service is reachable on the same port through any node's IP.
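The NodePort (31496 here) is allocated by Kubernetes, so it usually differs between clusters. A sketch that reads it with kubectl's jsonpath output and builds the URL (`nodeport_url` is a hypothetical helper; it assumes the service's first port is the one of interest):

```bash
#!/usr/bin/env bash
# nodeport_url: hypothetical helper.
# Usage: nodeport_url SERVICE NODE_IP
# Looks up the service's first nodePort and prints http://NODE_IP:PORT.
nodeport_url() {
  local svc="$1" node_ip="$2" port
  port="$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  [ -n "$port" ] || return 1          # not a NodePort service, or lookup failed
  printf 'http://%s:%s\n' "$node_ip" "$port"
}
```

Usage: `curl -I "$(nodeport_url tale-service 192.168.118.22)"` checks that tale answers before opening the page in a browser.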