Understanding docker0

Clean up the whole environment first

Test

[root@aZang ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

# local loopback address

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:15:7b:6f brd ff:ff:ff:ff:ff:ff

# Alibaba Cloud private (intranet) address

inet 172.26.77.「*」/20 brd 172.26.「*」.215 scope global dynamic eth0

valid_lft 305383517sec preferred_lft 305383517sec

inet6 fe80::216:3eff:fe15:7b6f/64 scope link

valid_lft forever preferred_lft forever

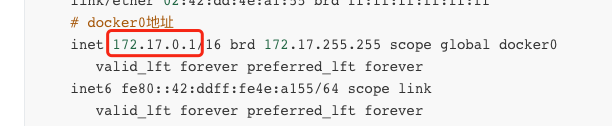

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:dd:4e:a1:55 brd ff:ff:ff:ff:ff:ff

# docker0 address

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:ddff:fe4e:a155/64 scope link

valid_lft forever preferred_lft forever

106: br-e766602b536d: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:59:68:78:72 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-e766602b536d

valid_lft forever preferred_lft forever

inet6 fe80::42:59ff:fe68:7872/64 scope link

valid_lft forever preferred_lft forever

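Three networks matter here: loopback (lo), the cloud private network (eth0), and docker0; br-e766602b536d is the bridge of a user-defined network, some-network

# Question: how does Docker handle network access for containers?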

[root@aZang ~]# docker run -d -P --name tomcat_link tomcat

Unable to find image 'tomcat:latest' locally

latest: Pulling from library/tomcat

1cfaf5c6f756: Pull complete

c4099a935a96: Pull complete

f6e2960d8365: Pull complete

dffd4e638592: Pull complete

a60431b16af7: Pull complete

4869c4e8de8d: Pull complete

9815a275e5d0: Pull complete

c36aa3d16702: Pull complete

cc2e74b6c3db: Pull complete

1827dd5c8bb0: Pull complete

Digest: sha256:1af502b6fd35c1d4ab6f24dc9bd36b58678a068ff1206c25acc129fb90b2a76a

Status: Downloaded newer image for tomcat:latest

d5fe7d1d04acd94a51fc2426a6e963251c586c3a66358fb3c261beb5934ea8e6

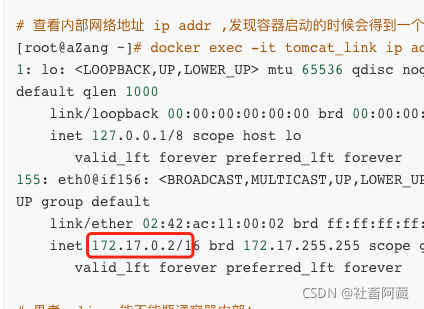

# Check the container's internal addresses with ip addr: on startup the container gets an eth0@if156 interface whose IP address is assigned by Docker

[root@aZang ~]# docker exec -it tomcat_link ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

155: eth0@if156: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Question: can the Linux host ping the inside of the container?

[root@aZang ~]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.063 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.054 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.043 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.042 ms

64 bytes from 172.17.0.2: icmp_seq=5 ttl=64 time=0.052 ms

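# The Linux host can ping the inside of the Docker container

How it works

The host's docker0 interface (172.17.0.1/16) and the container (172.17.0.2/16) sit on the same subnet, 172.17.0.0/16, so the ping succeeds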
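To make the "same subnet" argument concrete, here is a minimal sketch that masks two IPv4 addresses with a prefix length and compares the network parts. The helper names `ip_to_int` and `same_subnet` are hypothetical (they are not Docker commands), and the sketch assumes a shell with 64-bit arithmetic.

```shell
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() (
  IFS=.
  set -- $1                      # split the address on dots into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed when two addresses share the same network part.
# Usage: same_subnet ADDR_A ADDR_B PREFIX_LEN
same_subnet() (
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  [ $(( a & mask )) -eq $(( b & mask )) ]
)

if same_subnet 172.17.0.1 172.17.0.2 16; then
  echo "docker0 and the container share 172.17.0.0/16"
fi
```

The same check returns false for 172.17.0.2 against 172.18.0.1, the address of the other bridge on this host.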

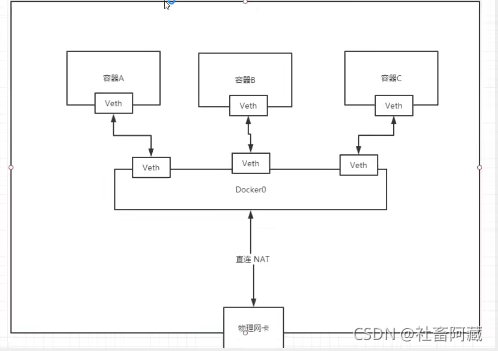

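Every time we start a Docker container, Docker assigns it an IP address. As soon as Docker is installed, the host also gets a docker0 interface that works in bridge mode; the underlying technology is the veth pair.

Run ip addr again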

[root@aZang ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:15:7b:6f brd ff:ff:ff:ff:ff:ff

inet 172.... brd 172... scope global dynamic eth0

valid_lft 305382441sec preferred_lft 305382441sec

inet6 fe80::216:3eff:fe15:7b6f/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:dd:4e:a1:55 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:ddff:fe4e:a155/64 scope link

valid_lft forever preferred_lft forever

106: br-e766602b536d: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:59:68:78:72 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-e766602b536d

valid_lft forever preferred_lft forever

inet6 fe80::42:59ff:fe68:7872/64 scope link

valid_lft forever preferred_lft forever

# interface 156 here is the peer of the eth0@if156 seen earlier inside the tomcat container

156: veth2c966ca@if155: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 7a:b9:86:a3:52:c4 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::78b9:86ff:fea3:52c4/64 scope link

valid_lft forever preferred_lft forever

Start another container and test again

[root@aZang ~]# docker run -d -P --name tomcat_link2 tomcat

e3de580f6464bd22ab233a42a8fa67a205fceae3eb32400fdc05fe9384933588

[root@aZang ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:15:7b:6f brd ff:ff:ff:ff:ff:ff

inet 172.../20 brd 172... scope global dynamic eth0

valid_lft 305382242sec preferred_lft 305382242sec

inet6 fe80::216:3eff:fe15:7b6f/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:dd:4e:a1:55 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:ddff:fe4e:a155/64 scope link

valid_lft forever preferred_lft forever

106: br-e766602b536d: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:59:68:78:72 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-e766602b536d

valid_lft forever preferred_lft forever

inet6 fe80::42:59ff:fe68:7872/64 scope link

valid_lft forever preferred_lft forever

156: veth2c966ca@if155: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 7a:b9:86:a3:52:c4 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::78b9:86ff:fea3:52c4/64 scope link

valid_lft forever preferred_lft forever

# another interface has appeared here

158: vethe611eca@if157: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether e2:45:e3:5a:91:44 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::e045:e3ff:fe5a:9144/64 scope link

valid_lft forever preferred_lft forever

[root@aZang ~]# docker exec -it tomcat_link2 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

# this interface is the other end of that pair

157: eth0@if158: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# The interfaces that come with each container always appear in pairs

# A veth pair is a pair of virtual device interfaces that always come together: one end attaches to the protocol stack, and the two ends are connected to each other

# Because of this property, a veth pair acts as a bridge that links virtual network devices

# OpenStack, links between Docker containers, and OVS connections all use veth-pair technology
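The pairing can be checked mechanically from the interface headers that ip addr prints: each end names its own index before the first colon and its peer's index after `@if`. A minimal sketch, with hypothetical helper names `veth_peer` and `is_veth_pair`; it assumes headers of the form shown in the transcripts and does not query the kernel.

```shell
# Print "OWN_INDEX PEER_INDEX" for a header such as "155: eth0@if156: <...>".
veth_peer() (
  header=$1
  own=${header%%:*}        # text before the first colon: own interface index
  rest=${header#*@if}      # text after "@if"
  peer=${rest%%:*}         # up to the next colon: peer interface index
  printf '%s %s\n' "$own" "$peer"
)

# Succeed when each header names the other's index as its peer.
is_veth_pair() {
  a=$(veth_peer "$1")
  b=$(veth_peer "$2")
  [ "${a% *}" = "${b#* }" ] && [ "${b% *}" = "${a#* }" ]
}

# Container side of tomcat_link and host side attached to docker0.
if is_veth_pair '155: eth0@if156:' '156: veth2c966ca@if155:'; then
  echo "155 and 156 are two ends of one veth pair"
fi
```

Running the same check on `157: eth0@if158:` and `158: vethe611eca@if157:` confirms the pair created for tomcat_link2.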

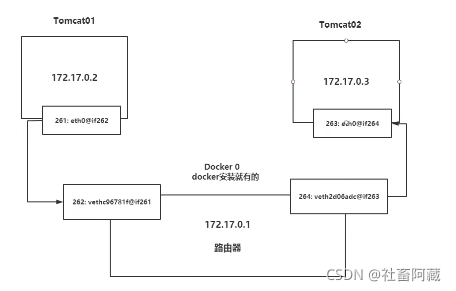

Let's test whether the two containers, tomcat_link and tomcat_link2, can ping each other

[root@aZang ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e3de580f6464 tomcat "catalina.sh run" 2 days ago Up 2 days 0.0.0.0:49156->8080/tcp, :::49156->8080/tcp tomcat_link2

d5fe7d1d04ac tomcat "catalina.sh run" 2 days ago Up 2 days 0.0.0.0:49155->8080/tcp, :::49155->8080/tcp tomcat_link

[root@aZang ~]# docker exec tomcat_link2 ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.036 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.034 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.035 ms

64 bytes from 172.17.0.3: icmp_seq=4 ttl=64 time=0.049 ms

64 bytes from 172.17.0.3: icmp_seq=5 ttl=64 time=0.039 ms

# Note: 172.17.0.3 is tomcat_link2's own address; to exercise the container-to-container path, ping tomcat_link at 172.17.0.2 instead

# Conclusion: containers can ping one another!

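Draw a network model diagram

Conclusion: the two tomcat containers share a single bridge, docker0, which acts as their gateway

Unless a network is specified, every container is attached to docker0, and Docker assigns the container a default available IP

Summary

Docker uses Linux bridging; on the host, docker0 is the bridge for Docker containers

All networks in Docker are virtual, and virtual interfaces forward traffic efficiently (for example, when transferring files over the internal network)

Once a container is removed, its veth pair is removed with it

--link

Consider a scenario: we have written a microservice configured with database url = ip. If the database IP changes while the project keeps running without a restart, the connection breaks. We would like to handle this by reaching the database through its container name instead of its IP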

[root@aZang ~]# docker exec tomcat_link2 ping tomcat_link

ping: tomcat_link: Name or service not known

# The ping fails here. How can we fix it?

[root@aZang ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e3de580f6464 tomcat "catalina.sh run" 3 days ago Up 3 days 0.0.0.0:49156->8080/tcp, :::49156->8080/tcp tomcat_link2

d5fe7d1d04ac tomcat "catalina.sh run" 3 days ago Up 3 days 0.0.0.0:49155->8080/tcp, :::49155->8080/tcp tomcat_link

[root@aZang ~]# docker run -d -P --name tomcat_link3 --link tomcat_link tomcat

7b86cee53b9f8057fb23cfde565f7cc5ae32bb9c2fef4ced76f1685b724a7150

[root@aZang ~]# docker exec tomcat_link3 ping tomcat_link

PING tomcat_link (172.17.0.2) 56(84) bytes of data.

64 bytes from tomcat_link (172.17.0.2): icmp_seq=1 ttl=64 time=0.104 ms

64 bytes from tomcat_link (172.17.0.2): icmp_seq=2 ttl=64 time=0.070 ms

64 bytes from tomcat_link (172.17.0.2): icmp_seq=3 ttl=64 time=0.055 ms

# --link solves it. tomcat_link3 can now ping tomcat_link, but does it work in the reverse direction?

[root@aZang ~]# docker exec tomcat_link ping tomcat_link3

ping: tomcat_link3: Name or service not known

# It does not, because no link was configured in that direction

Digging in with inspect

[root@aZang ~]# docker inspect 7b86cee53b9f

[

{

"Id": "7b86cee53b9f8057fb23cfde565f7cc5ae32bb9c2fef4ced76f1685b724a7150",

"Created": "2021-09-02T14:14:48.576233291Z",

"Path": "catalina.sh",

"Args": [

"run"

],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 18625,

"ExitCode": 0,

"Error": "",

"StartedAt": "2021-09-02T14:14:48.998326843Z",

"FinishedAt": "0001-01-01T00:00:00Z"

},

"Image": "sha256:266d1269bb298d6a3259fc2c2a9deaedf8be945482a2d596b64f73343289a56c",

"ResolvConfPath": "/var/lib/docker/containers/7b86cee53b9f8057fb23cfde565f7cc5ae32bb9c2fef4ced76f1685b724a7150/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/7b86cee53b9f8057fb23cfde565f7cc5ae32bb9c2fef4ced76f1685b724a7150/hostname",

"HostsPath": "/var/lib/docker/containers/7b86cee53b9f8057fb23cfde565f7cc5ae32bb9c2fef4ced76f1685b724a7150/hosts",

"LogPath": "/var/lib/docker/containers/7b86cee53b9f8057fb23cfde565f7cc5ae32bb9c2fef4ced76f1685b724a7150/7b86cee53b9f8057fb23cfde565f7cc5ae32bb9c2fef4ced76f1685b724a7150-json.log",

"Name": "/tomcat_link3",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": [ "3e19dbe259d1dbc249e2bac55c3f078e41ce8761ae1e371e2d4b3471b2d16502"

],

"HostConfig": {

"Binds": null,

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {}

},

"NetworkMode": "default",

"PortBindings": {},

"RestartPolicy": {

"Name": "no",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"CgroupnsMode": "host",

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "private",

"Cgroup": "",

# The discovery is right here: look at Links

"Links": [

"/tomcat_link:/tomcat_link3/tomcat_link"

],

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": true,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DeviceCgroupRules": null,

"DeviceRequests": null,

"KernelMemory": 0,

"KernelMemoryTCP": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": null,

"OomKillDisable": false,

"PidsLimit": null,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0,

"MaskedPaths": [

"/proc/asound",

"/proc/acpi",

"/proc/kcore",

"/proc/keys",

"/proc/latency_stats",

"/proc/timer_list",

"/proc/timer_stats",

"/proc/sched_debug",

"/proc/scsi",

"/sys/firmware"

],

"ReadonlyPaths": [

"/proc/bus",

"/proc/fs",

"/proc/irq",

"/proc/sys",

"/proc/sysrq-trigger"

]

},

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/c9d2005f0a8b80c6aa7bd698eec870c0f15a3967e4eb5fcea76b43608d9d3338-init/diff:/var/lib/docker/overlay2/32ef393e0a5da0ee1493e230d1ea3e5f35cc2117233474c4005b38348bb52218/diff:/var/lib/docker/overlay2/e6e4d6ee8430f9456941eb312170d1fa484746a8bf152e6672af3b65842c5600/diff:/var/lib/docker/overlay2/2e1219de84f1d0185c24ac377be0159e0f64314a70ca7e74d08cb7467ce34d37/diff:/var/lib/docker/overlay2/2d113afd546ad3606181940a2b9015a63c65c24029dee034dbfaeb39d93fce92/diff:/var/lib/docker/overlay2/0c3528aa42590e0f02309fc29c74ec6aa5672caf5faf76ffdb6b53b8cfcc6b80/diff:/var/lib/docker/overlay2/1580bae5aabce90483f0ed6f8ffcbea216ed75bc1f04a5e6eed888105f89dddf/diff:/var/lib/docker/overlay2/4a85873e94ad5ffc2e9f2cca5fec089ca8065c2d479ab8fb541a953859ae2d75/diff:/var/lib/docker/overlay2/17909dbca1519a429ab9a5a17b89a106f70560b2c358ec1c83c2a1396f034436/diff:/var/lib/docker/overlay2/0310324ca56012654ef8394e046bec320155ce506f801cbfa6afa3340b49ee68/diff:/var/lib/docker/overlay2/20dfeaca54216179c20e80283006d54fe8aec0afe44c79bfe588065f13561ad9/diff",

"MergedDir": "/var/lib/docker/overlay2/c9d2005f0a8b80c6aa7bd698eec870c0f15a3967e4eb5fcea76b43608d9d3338/merged",

"UpperDir": "/var/lib/docker/overlay2/c9d2005f0a8b80c6aa7bd698eec870c0f15a3967e4eb5fcea76b43608d9d3338/diff",

"WorkDir": "/var/lib/docker/overlay2/c9d2005f0a8b80c6aa7bd698eec870c0f15a3967e4eb5fcea76b43608d9d3338/work"

},

"Name": "overlay2"

},

"Mounts": [],

"Config": {

"Hostname": "7b86cee53b9f",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"8080/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/tomcat/bin:/usr/local/openjdk-11/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"JAVA_HOME=/usr/local/openjdk-11",

"LANG=C.UTF-8",

"JAVA_VERSION=11.0.12",

"CATALINA_HOME=/usr/local/tomcat",

"TOMCAT_NATIVE_LIBDIR=/usr/local/tomcat/native-jni-lib",

"LD_LIBRARY_PATH=/usr/local/tomcat/native-jni-lib",

"GPG_KEYS=48F8E69F6390C9F25CFEDCD268248959359E722B A9C5DF4D22E99998D9875A5110C01C5A2F6059E7 DCFD35E0BF8CA7344752DE8B6FB21E8933C60243",

"TOMCAT_MAJOR=9",

"TOMCAT_VERSION=9.0.52",

"TOMCAT_SHA512=35e007e8e30e12889da27f9c71a6f4997b9cb5023b703d99add5de9271828e7d8d4956bf34dd2f48c7c71b4f8480f318c9067a4cd2a6d76eaae466286db4897b"

],

"Cmd": [

"catalina.sh",

"run"

],

"Image": "tomcat",

"Volumes": null,

"WorkingDir": "/usr/local/tomcat",

"Entrypoint": null,

"OnBuild": null,

"Labels": {}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "dd13b1f2eb239b0d518e87ad8dce5264020dfc8aca5523380593d7a6316811d9",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {

"8080/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "49157"

},

{

"HostIp": "::",

"HostPort": "49157"

}

]

},

"SandboxKey": "/var/run/docker/netns/dd13b1f2eb23",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "b85af21af160b1ea6e0abf44eb65b641b09d1596728b76e8898576704f80e5e4",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.4",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:04",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "23d28517dccb517cbd79b9e337d195a7e5ad17605adcdfd8b93ce6595c1648d4",

"EndpointID": "b85af21af160b1ea6e0abf44eb65b641b09d1596728b76e8898576704f80e5e4",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.4",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:04",

"DriverOpts": null

}

}

}

}

]

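The output above may not be very intuitive, so let's look directly at the hosts file of tomcat_link3

# Check the hosts configuration; this is where the trick lies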

[root@aZang ~]# docker exec -it tomcat_link3 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

# Spotted it? The next line maps tomcat_link to its IP

172.17.0.2 tomcat_link d5fe7d1d04ac

172.17.0.4 7b86cee53b9f

# tomcat_link has no such entry for tomcat_link3, so it cannot ping tomcat_link3 by name

[root@aZang ~]# docker exec -it tomcat_link cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 d5fe7d1d04ac

In effect, tomcat_link3 simply carries a local hosts entry for tomcat_link

At its core, --link just adds one line to the container's hosts file: 172.17.0.2 tomcat_link d5fe7d1d04ac
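Name resolution through a hosts file is easy to reproduce outside Docker. The sketch below is only an illustration: `hosts_lookup` is a hypothetical helper, and the sample entries are copied from the tomcat_link3 transcript above. It returns the IP of the first line that lists the requested name.

```shell
# Print the IP that a hosts-format file maps a name to (first match wins).
# Usage: hosts_lookup FILE NAME
hosts_lookup() {
  awk -v name="$2" '
    /^[ \t]*#/ { next }            # ignore comment lines
    {
      for (i = 2; i <= NF; i++)    # fields after the IP are host names
        if ($i == name) { print $1; exit }
    }
  ' "$1"
}

# Sample data copied from the hosts file of tomcat_link3.
sample=$(mktemp)
cat > "$sample" <<'EOF'
127.0.0.1 localhost
172.17.0.2 tomcat_link d5fe7d1d04ac
172.17.0.4 7b86cee53b9f
EOF

hosts_lookup "$sample" tomcat_link     # prints 172.17.0.2
hosts_lookup "$sample" tomcat_link3    # prints nothing: no such entry
rm -f "$sample"
```

This also shows why the link is one-directional: the file of tomcat_link contains no line naming tomcat_link3, so a lookup there finds nothing.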

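Using --link is no longer recommended with Docker today!

Use a custom network instead of docker0!

The problem with docker0: it does not support access by container name!

Custom networks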

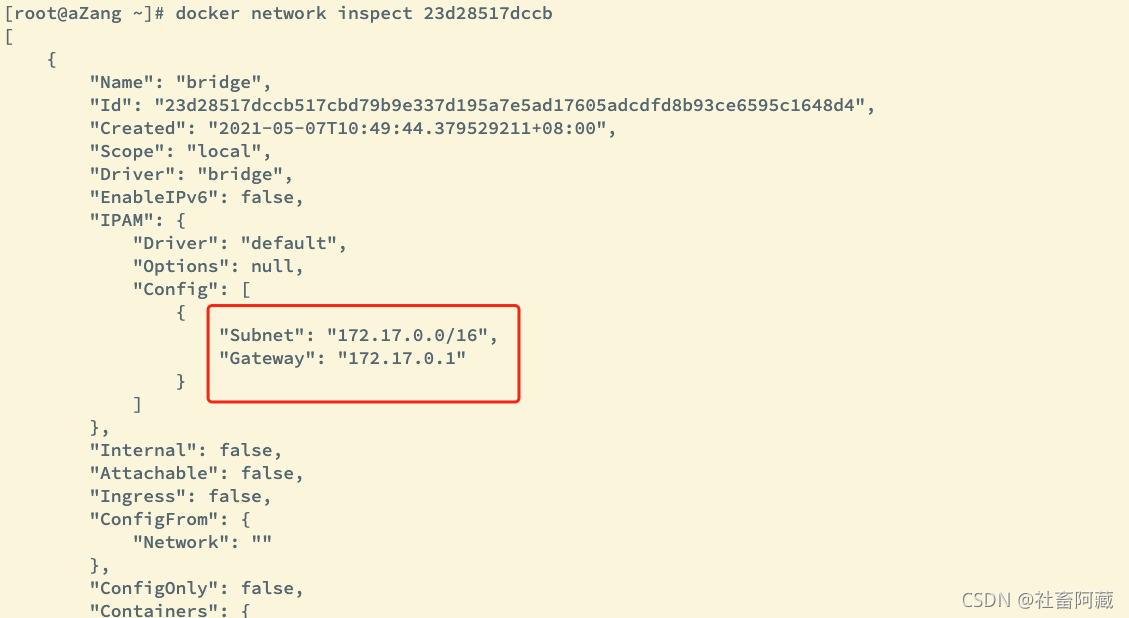

List all Docker networks

[root@aZang ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

23d28517dccb bridge bridge local

2fcb49cb4be0 host host local

88375725a3c1 none null local

e766602b536d some-network bridge local

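- Network modes

- bridge: bridged through Docker (the default; networks we create ourselves also use bridge mode)

- none: no networking configured

- host: share the host's network stack

- container: join another container's network (rarely used, very limited)

Test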

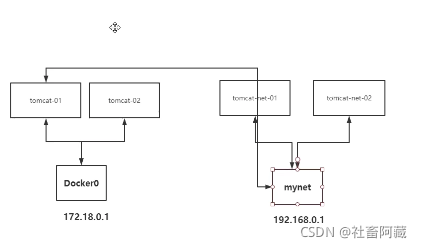

# The run commands used so far implicitly carry --net bridge, and that bridge network is docker0, so the two commands below are equivalent

docker run -d -P --name tomcat01 tomcat

docker run -d -P --name tomcat01 --net bridge tomcat

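# docker0 characteristics: it is the default, container names do not resolve, and --link can open up a connection

# We can define our own network!

# --driver bridge

# --subnet 192.168.0.0/16 (host addresses 192.168.0.1 through 192.168.255.254)

# --gateway 192.168.0.1, followed by the network name mynet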

[root@aZang ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

c1b8416a32a532115ba6aa900dc14bf682cef4203c15001d67101e18546770c0

[root@aZang ~]# docker inspect c1b8416a32a532115

[

{

"Name": "mynet",

"Id": "c1b8416a32a532115ba6aa900dc14bf682cef4203c15001d67101e18546770c0",

"Created": "2021-09-04T22:23:35.778025687+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

# this is what we just configured

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]
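For reference, the size of the 192.168.0.0/16 subnet configured above follows directly from the prefix length. The helper name `usable_hosts` is hypothetical; it only does the arithmetic and queries nothing from Docker.

```shell
# Number of assignable host addresses in an IPv4 subnet with the given prefix
# length (network and broadcast addresses excluded). Valid for prefixes 0-30.
usable_hosts() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

usable_hosts 16   # 65534; the gateway 192.168.0.1 uses one of them
usable_hosts 24   # 254
```

A /16 is far larger than a handful of containers needs; a narrower prefix such as /24 would work equally well for this walkthrough.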

Now start two tomcat containers in our own network, mynet

[root@aZang ~]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

bb8b44d49e3f33557664a8f4ec643f6bf86e83f785b1a001d53c1a3ded760451

[root@aZang ~]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

53d3cedb9c7bebe93d8a0e2908a9f5a6dbe4d8dd2ac7d10be5b5221f94d3f435

[root@aZang ~]# docker inspect mynet

[

{

"Name": "mynet",

"Id": "c1b8416a32a532115ba6aa900dc14bf682cef4203c15001d67101e18546770c0",

"Created": "2021-09-04T22:23:35.778025687+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

# the two containers we just created show up here

"Containers": {

"53d3cedb9c7bebe93d8a0e2908a9f5a6dbe4d8dd2ac7d10be5b5221f94d3f435": {

"Name": "tomcat-net-02",

"EndpointID": "a5eaf23ca552ee84d719195afd38d27f4fa2ccaaa2a7e2b83eae78370c79e029",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"bb8b44d49e3f33557664a8f4ec643f6bf86e83f785b1a001d53c1a3ded760451": {

"Name": "tomcat-net-01",

"EndpointID": "5c24c4527a86e79845436be388ee78858f83a0fedc0383af96179bf3e90f5b0e",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

# Try pinging by container name

[root@aZang ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

# works without any problem

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.072 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.071 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.055 ms

^C

--- tomcat-net-02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.055/0.066/0.072/0.007 ms

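Docker maintains the name-to-address mapping for our custom networks, so this is the recommended way to use networks day to day!

Benefits:

redis: different clusters use different networks, which keeps each cluster isolated and healthy

mysql: different clusters use different networks, which keeps each cluster isolated and healthy

Connecting networks

Background: our tomcat containers sit on two different networks, and we now want a container on one network to reach the other network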

[root@aZang ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

53d3cedb9c7b tomcat "catalina.sh run" 29 minutes ago Up 29 minutes 0.0.0.0:49159->8080/tcp, :::49159->8080/tcp tomcat-net-02

bb8b44d49e3f tomcat "catalina.sh run" 29 minutes ago Up 29 minutes 0.0.0.0:49158->8080/tcp, :::49158->8080/tcp tomcat-net-01

7b86cee53b9f tomcat "catalina.sh run" 2 days ago Up 2 days 0.0.0.0:49157->8080/tcp, :::49157->8080/tcp tomcat_link3

e3de580f6464 tomcat "catalina.sh run" 5 days ago Up 5 days 0.0.0.0:49156->8080/tcp, :::49156->8080/tcp tomcat_link2

d5fe7d1d04ac tomcat "catalina.sh run" 5 days ago Up 5 days 0.0.0.0:49155->8080/tcp, :::49155->8080/tcp tomcat_link

[root@aZang ~]# docker network -h

Flag shorthand -h has been deprecated, please use --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

[root@aZang ~]# docker network connect -h

Flag shorthand -h has been deprecated, please use --help

# usage: docker network connect [options] <network> <container>

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

Connect a container to a network

Options:

--alias strings Add network-scoped alias for the container

--driver-opt strings driver options for the network

--ip string IPv4 address (e.g., 172.30.100.104)

--ip6 string IPv6 address (e.g., 2001:db8::33)

--link list Add link to another container

--link-local-ip strings Add a link-local address for the container

# Try connecting tomcat_link to mynet

# Connecting simply places tomcat_link inside the mynet network

[root@aZang ~]# docker network connect mynet tomcat_link

[root@aZang ~]# docker inspect mynet

[

{

"Name": "mynet",

"Id": "c1b8416a32a532115ba6aa900dc14bf682cef4203c15001d67101e18546770c0",

"Created": "2021-09-04T22:23:35.778025687+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"53d3cedb9c7bebe93d8a0e2908a9f5a6dbe4d8dd2ac7d10be5b5221f94d3f435": {

"Name": "tomcat-net-02",

"EndpointID": "a5eaf23ca552ee84d719195afd38d27f4fa2ccaaa2a7e2b83eae78370c79e029",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"bb8b44d49e3f33557664a8f4ec643f6bf86e83f785b1a001d53c1a3ded760451": {

"Name": "tomcat-net-01",

"EndpointID": "5c24c4527a86e79845436be388ee78858f83a0fedc0383af96179bf3e90f5b0e",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"d5fe7d1d04acd94a51fc2426a6e963251c586c3a66358fb3c261beb5934ea8e6": {

# here it is, now part of the network

"Name": "tomcat_link",

"EndpointID": "88bc0bd4387a0bd26ea30c78a578b077a1c79a74c74586b0d9d31f59be34980b",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

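# As a result, one container now has two IPs!

# Compare an Alibaba Cloud server, which has both a public IP and a private IP

# Test whether the ping works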

[root@aZang ~]# docker exec -it tomcat_link ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

# the ping succeeds

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.074 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.098 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.074 ms

^C

--- tomcat-net-01 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2ms

rtt min/avg/max/mdev = 0.074/0.082/0.098/0.011 ms

# Only tomcat_link was connected to mynet, so tomcat_link2 still cannot reach it

[root@aZang ~]# docker exec -it tomcat_link2 ping tomcat-net-01

ping: tomcat-net-01: Name or service not known

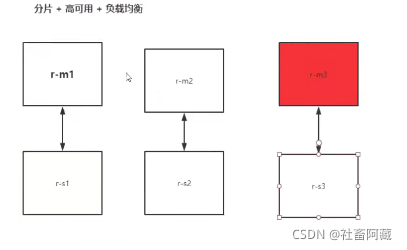

Hands-on: deploying a Redis cluster

Shell scripts!

# Create a network for Redis

[root@aZang ~]# docker network create redis --subnet 172.38.0.0/16

5af55e5caabd38dae8e717e321cefdc11e9bc43d739bfdc2d0692d2335239fc6

[root@aZang ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

23d28517dccb bridge bridge local

2fcb49cb4be0 host host local

c1b8416a32a5 mynet bridge local

88375725a3c1 none null local

5af55e5caabd redis bridge local

e766602b536d some-network bridge local

# Create six Redis configurations with a script

for port in $(seq 1 6);\

do \

mkdir -p /mydata/redis/node-${port}/conf

touch /mydata/redis/node-${port}/conf/redis.conf

cat << EOF >>/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done

[root@aZang ~]# for port in $(seq 1 6);\

> do \

> mkdir -p /mydata/redis/node-${port}/conf

> touch /mydata/redis/node-${port}/conf/redis.conf

> cat << EOF >>/mydata/redis/node-${port}/conf/redis.conf

> port 6379

> bind 0.0.0.0

> cluster-enabled yes

> cluster-config-file nodes.conf

> cluster-announce-ip 172.38.0.1${port}

> cluster-announce-port 6379

> cluster-announce-bus-port 16379

> appendonly yes

> EOF

> done

[root@aZang redis]# cd /mydata/redis

[root@aZang redis]# ls

node-1 node-2 node-3 node-4 node-5 node-6

[root@aZang redis]# cd node-1/conf

[root@aZang conf]# ls

redis.conf

[root@aZang conf]# cat redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-announce-ip 172.38.0.11

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes
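One caveat about the generation loop: it appends with `>>`, so running it a second time duplicates every directive in each redis.conf. A rerunnable variant is sketched below. The function name `gen_redis_confs` and its base-directory parameter are hypothetical additions; the directives are the same ones used above.

```shell
# Write one redis.conf per node under BASE/node-N/conf, overwriting any
# existing file so the function can be run repeatedly.
# Usage: gen_redis_confs BASE
gen_redis_confs() {
  base=$1
  for n in 1 2 3 4 5 6; do
    mkdir -p "$base/node-$n/conf"
    cat > "$base/node-$n/conf/redis.conf" <<EOF
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-announce-ip 172.38.0.1$n
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
  done
}

# Try it in a scratch directory and list the announce IP of every node.
scratch=$(mktemp -d)
gen_redis_confs "$scratch"
grep -h '^cluster-announce-ip' "$scratch"/node-*/conf/redis.conf
rm -rf "$scratch"
```

Passing /mydata/redis as the base directory would reproduce the layout used in this walkthrough.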

# Next, start the Docker containers

for port in $(seq 1 6);\

do \

docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} \

-v /mydata/redis/node-${port}/data:/data \

-v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.1${port} redis:latest redis-server /etc/redis/redis.conf

done

[root@aZang conf]# docker run -p 6379:6379 -p 16379:16379 --name redis-1 -v /mydata/redis/node-1/data:/data -v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.11 redis:latest redis-server /etc/redis/redis.conf

Unable to find image 'redis:latest' locally

latest: Pulling from library/redis

a330b6cecb98: Pull complete

14bfbab96d75: Pull complete

8b3e2d14a955: Pull complete

5da5e1b21a2f: Pull complete

6af3a5ca4596: Pull complete

4f9efe5b47a5: Pull complete

Digest: sha256:e595e79c05c7690f50ef0136acc9d932d65d8b2ce7915d26a68ca3fb41a7db61

Status: Downloaded newer image for redis:latest

4f00cfb0c0c3da8b2c9322364df8a4aa2449fbc799372eff6cbcfa3f027d436b

# Create the 6 Redis containers with the script

[root@aZang conf]# for port in $(seq 1 6);\

> do \

> docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} \

> -v /mydata/redis/node-${port}/data:/data \

> -v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.1${port} redis:latest redis-server /etc/redis/redis.conf

> done

e6bf0e1678da079adc3f63961b53aefd1ab12481a8fd4d5395aa83d321755efc

92e3d3322a616b2803d2194a0efc3d21a25d1ac16e69415627e5a2ffcf89c45f

8d662501ea7dc20cd574e9164766059cd17b0660e3ee04fffade927e0bef7a3d

a92e3d757981f6454b031f14fa47f558501efd4613542cde284dc39745a19255

f8256d51856ba3cc5e858c14380a4ae2c85e8c7acf6ae745cda273b57b345e89

414186d4eb8f4ded91f8a013fac9e1d303e6e3096239f66b61169a0ef6b5ac1a

[root@aZang conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

414186d4eb8f redis:latest "docker-entrypoint.s…" 37 seconds ago Up 36 seconds 0.0.0.0:6376->6379/tcp, :::6376->6379/tcp, 0.0.0.0:16376->16379/tcp, :::16376->16379/tcp redis-6

f8256d51856b redis:latest "docker-entrypoint.s…" 38 seconds ago Up 37 seconds 0.0.0.0:6375->6379/tcp, :::6375->6379/tcp, 0.0.0.0:16375->16379/tcp, :::16375->16379/tcp redis-5

a92e3d757981 redis:latest "docker-entrypoint.s…" 38 seconds ago Up 37 seconds 0.0.0.0:6374->6379/tcp, :::6374->6379/tcp, 0.0.0.0:16374->16379/tcp, :::16374->16379/tcp redis-4

8d662501ea7d redis:latest "docker-entrypoint.s…" 39 seconds ago Up 38 seconds 0.0.0.0:6373->6379/tcp, :::6373->6379/tcp, 0.0.0.0:16373->16379/tcp, :::16373->16379/tcp redis-3

92e3d3322a61 redis:latest "docker-entrypoint.s…" 39 seconds ago Up 38 seconds 0.0.0.0:6372->6379/tcp, :::6372->6379/tcp, 0.0.0.0:16372->16379/tcp, :::16372->16379/tcp redis-2

e6bf0e1678da redis:latest "docker-entrypoint.s…" 39 seconds ago Up 39 seconds 0.0.0.0:6371->6379/tcp, :::6371->6379/tcp, 0.0.0.0:16371->16379/tcp, :::16371->16379/tcp redis-1

# Create the cluster

[root@aZang conf]# docker exec -it e6bf0e1678da /bin/bash

root@e6bf0e1678da:/data# ls

appendonly.aof dump.rdb nodes.conf

root@e6bf0e1678da:/data# redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: a8c8dcb706db927484541b719d676f474677ce6d 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: dba632e3a0c15a8567d9693078476f277f0eebc6 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 052c864b4f5bf3ccdbaa398077e73af02554b814 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 8901697bb12829da8e181c8a25a81c963cc06593 172.38.0.14:6379

replicates 052c864b4f5bf3ccdbaa398077e73af02554b814

S: 47e8e590c317c0b73a82d44803e84d14ce5f9777 172.38.0.15:6379

replicates a8c8dcb706db927484541b719d676f474677ce6d

S: f185f858198fce86a4cb1496af8290618cbecd24 172.38.0.16:6379

replicates dba632e3a0c15a8567d9693078476f277f0eebc6

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: a8c8dcb706db927484541b719d676f474677ce6d 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 47e8e590c317c0b73a82d44803e84d14ce5f9777 172.38.0.15:6379

slots: (0 slots) slave

replicates a8c8dcb706db927484541b719d676f474677ce6d

M: dba632e3a0c15a8567d9693078476f277f0eebc6 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: f185f858198fce86a4cb1496af8290618cbecd24 172.38.0.16:6379

slots: (0 slots) slave

replicates dba632e3a0c15a8567d9693078476f277f0eebc6

M: 052c864b4f5bf3ccdbaa398077e73af02554b814 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 8901697bb12829da8e181c8a25a81c963cc06593 172.38.0.14:6379

slots: (0 slots) slave

replicates 052c864b4f5bf3ccdbaa398077e73af02554b814

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
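The three slot ranges printed above can be reproduced with a little arithmetic. The sketch below is only an illustration in Python, assuming an even split of the 16384 slots with round-to-nearest boundaries; the function name `slot_ranges` is made up for this note, and it is not claimed to be redis-cli's exact code.

```python
import math

TOTAL_SLOTS = 16384  # fixed number of hash slots in a Redis Cluster


def slot_ranges(master_count):
    """Split the slot space into contiguous ranges, one per master."""
    per_node = TOTAL_SLOTS / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(master_count):
        # Round to nearest (assumption: this mirrors how the boundaries
        # in the transcript were chosen).
        last = math.floor(cursor + per_node - 1 + 0.5)
        # The last master always takes everything up to slot 16383.
        if i == master_count - 1 or last > TOTAL_SLOTS - 1:
            last = TOTAL_SLOTS - 1
        last = max(last, first)
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


for index, (first, last) in enumerate(slot_ranges(3)):
    print(f"Master[{index}] -> Slots {first} - {last} ({last - first + 1} slots)")
```

With three masters this prints the same ranges as the transcript: 0 - 5460, 5461 - 10922, and 10923 - 16383.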

# The cluster has been created; now inspect it

root@e6bf0e1678da:/data# redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:83

cluster_stats_messages_pong_sent:85

cluster_stats_messages_sent:168

cluster_stats_messages_ping_received:80

cluster_stats_messages_pong_received:83

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:168

127.0.0.1:6379> cluster nodes

47e8e590c317c0b73a82d44803e84d14ce5f9777 172.38.0.15:6379@16379 slave a8c8dcb706db927484541b719d676f474677ce6d 0 1630852418000 1 connected

dba632e3a0c15a8567d9693078476f277f0eebc6 172.38.0.12:6379@16379 master - 0 1630852422170 2 connected 5461-10922

f185f858198fce86a4cb1496af8290618cbecd24 172.38.0.16:6379@16379 slave dba632e3a0c15a8567d9693078476f277f0eebc6 0 1630852421167 2 connected

052c864b4f5bf3ccdbaa398077e73af02554b814 172.38.0.13:6379@16379 master - 0 1630852421000 3 connected 10923-16383

8901697bb12829da8e181c8a25a81c963cc06593 172.38.0.14:6379@16379 slave 052c864b4f5bf3ccdbaa398077e73af02554b814 0 1630852421000 3 connected

a8c8dcb706db927484541b719d676f474677ce6d 172.38.0.11:6379@16379 myself,master - 0 1630852420000 1 connected 0-5460

# Set a value here, then stop the Docker container that holds it

127.0.0.1:6379> set a b

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK
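The redirect to slot 15495 follows from how Redis Cluster maps keys to slots: CRC16 (the XMODEM variant) of the key, modulo 16384, and when the key contains a non-empty {...} hash tag only the part inside the braces is hashed. A minimal sketch in Python; `crc16_xmodem`, `key_slot`, and `owner_of` are names invented for this note, and the owner table is copied from the slot allocation shown earlier.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Return the hash slot for a key, honouring {hash tags}."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty tag replaces the hashed part of the key.
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


# Slot ranges and masters as printed by the cluster creation above.
SLOT_OWNERS = [
    (0, 5460, "172.38.0.11:6379"),
    (5461, 10922, "172.38.0.12:6379"),
    (10923, 16383, "172.38.0.13:6379"),
]


def owner_of(slot: int) -> str:
    for first, last, address in SLOT_OWNERS:
        if first <= slot <= last:
            return address
    raise ValueError(f"slot {slot} is out of range")


slot = key_slot("a")
print(slot, owner_of(slot))  # prints: 15495 172.38.0.13:6379
```

This is why `set a b` sent to 172.38.0.11 is redirected: slot 15495 falls in the 10923-16383 range owned by 172.38.0.13.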

172.38.0.13:6379> get a

"b"

###############

Here the Redis container with IP 172.38.0.13 (redis-3) is stopped from the host

[root@aZang ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

414186d4eb8f redis:latest "docker-entrypoint.s…" 14 minutes ago Up 14 minutes 0.0.0.0:6376->6379/tcp, :::6376->6379/tcp, 0.0.0.0:16376->16379/tcp, :::16376->16379/tcp redis-6

f8256d51856b redis:latest "docker-entrypoint.s…" 14 minutes ago Up 14 minutes 0.0.0.0:6375->6379/tcp, :::6375->6379/tcp, 0.0.0.0:16375->16379/tcp, :::16375->16379/tcp redis-5

a92e3d757981 redis:latest "docker-entrypoint.s…" 14 minutes ago Up 14 minutes 0.0.0.0:6374->6379/tcp, :::6374->6379/tcp, 0.0.0.0:16374->16379/tcp, :::16374->16379/tcp redis-4

8d662501ea7d redis:latest "docker-entrypoint.s…" 14 minutes ago Up 14 minutes 0.0.0.0:6373->6379/tcp, :::6373->6379/tcp, 0.0.0.0:16373->16379/tcp, :::16373->16379/tcp redis-3

92e3d3322a61 redis:latest "docker-entrypoint.s…" 14 minutes ago Up 14 minutes 0.0.0.0:6372->6379/tcp, :::6372->6379/tcp, 0.0.0.0:16372->16379/tcp, :::16372->16379/tcp redis-2

e6bf0e1678da redis:latest "docker-entrypoint.s…" 14 minutes ago Up 14 minutes 0.0.0.0:6371->6379/tcp, :::6371->6379/tcp, 0.0.0.0:16371->16379/tcp, :::16371->16379/tcp redis-1

[root@aZang ~]# docker stop redis-3

redis-3

###############

172.38.0.13:6379> get a

^C

# Typo: the client binary is redis-cli (no space), so the shell cannot find "redis"

root@e6bf0e1678da:/data# redis -cli -c

bash: redis: command not found

root@e6bf0e1678da:/data# redis-cli -c

127.0.0.1:6379> get a

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

# 172.38.0.14 was the replica of 172.38.0.13, so it now serves the key

172.38.0.14:6379>

# Check the Redis cluster again

127.0.0.1:6379> cluster nodes

47e8e590c317c0b73a82d44803e84d14ce5f9777 172.38.0.15:6379@16379 slave a8c8dcb706db927484541b719d676f474677ce6d 0 1630852845000 1 connected

dba632e3a0c15a8567d9693078476f277f0eebc6 172.38.0.12:6379@16379 master - 0 1630852845860 2 connected 5461-10922

f185f858198fce86a4cb1496af8290618cbecd24 172.38.0.16:6379@16379 slave dba632e3a0c15a8567d9693078476f277f0eebc6 0 1630852844857 2 connected

# 172.38.0.13 is now marked as failed, and 172.38.0.14 has taken over its slots as master

052c864b4f5bf3ccdbaa398077e73af02554b814 172.38.0.13:6379@16379 master,fail - 1630852677199 1630852674000 3 connected

8901697bb12829da8e181c8a25a81c963cc06593 172.38.0.14:6379@16379 master - 0 1630852844000 7 connected 10923-16383

a8c8dcb706db927484541b719d676f474677ce6d 172.38.0.11:6379@16379 myself,master - 0 1630852844000 1 connected 0-5460
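Reading the `cluster nodes` output by eye gets tedious with more nodes. As a rough sketch, the Python below parses the six node lines from the output above (pasted in as a string) and lists the failed nodes and the masters still serving slots. The `Node` class and `parse_cluster_nodes` are hypothetical helpers, and the field order is assumed to be the one visible in the output: id, address, flags, master id, ping sent, pong received, config epoch, link state, slots.

```python
from dataclasses import dataclass


@dataclass
class Node:
    node_id: str
    address: str    # ip:port, without the @cluster-bus-port suffix
    flags: list     # e.g. ["myself", "master"] or ["master", "fail"]
    master_id: str  # "-" for masters
    slots: list     # e.g. ["10923-16383"]; empty for replicas


def parse_cluster_nodes(text):
    """Parse the text printed by `cluster nodes` into Node objects."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 8 or line.startswith("#"):
            continue  # skip blank lines and comments
        nodes.append(Node(
            node_id=parts[0],
            address=parts[1].split("@")[0],
            flags=parts[2].split(","),
            master_id=parts[3],
            slots=parts[8:],
        ))
    return nodes


# The six node lines from the transcript above.
SAMPLE = """\
47e8e590c317c0b73a82d44803e84d14ce5f9777 172.38.0.15:6379@16379 slave a8c8dcb706db927484541b719d676f474677ce6d 0 1630852845000 1 connected
dba632e3a0c15a8567d9693078476f277f0eebc6 172.38.0.12:6379@16379 master - 0 1630852845860 2 connected 5461-10922
f185f858198fce86a4cb1496af8290618cbecd24 172.38.0.16:6379@16379 slave dba632e3a0c15a8567d9693078476f277f0eebc6 0 1630852844857 2 connected
052c864b4f5bf3ccdbaa398077e73af02554b814 172.38.0.13:6379@16379 master,fail - 1630852677199 1630852674000 3 connected
8901697bb12829da8e181c8a25a81c963cc06593 172.38.0.14:6379@16379 master - 0 1630852844000 7 connected 10923-16383
a8c8dcb706db927484541b719d676f474677ce6d 172.38.0.11:6379@16379 myself,master - 0 1630852844000 1 connected 0-5460
"""

nodes = parse_cluster_nodes(SAMPLE)
failed = [n.address for n in nodes if "fail" in n.flags]
serving = {
    n.address: n.slots
    for n in nodes
    if "master" in n.flags and "fail" not in n.flags
}

print("failed:", failed)
for address, slots in serving.items():
    print("master", address, "slots", slots)
```

On this sample the failed list contains only 172.38.0.13:6379, and 172.38.0.14:6379 appears as the master for 10923-16383, which matches the failover seen above.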

# The Redis cluster on Docker is now complete!

Once we use Docker, setting up technologies like this gradually becomes much simpler!