Kubernetes

Kubernetes features

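- Lightweight: interpreted languages such as Python, Shell, and Perl are relatively inefficient and use a lot of memory, so Kubernetes is written in Go, a compiled language. Go manages concurrency at the language level (goroutines) without manual control, so components written in Go have a small resource footprint.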

- Open source.

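- Self-healing: K8s restarts containers that fail and, when a node goes down, replaces and reschedules them so that the desired number of replicas is maintained. It kills containers that fail their health checks and does not send client requests to a container until it is ready, so the service is not interrupted.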

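- Elastic scaling: application instances can be scaled up and down quickly with a command, through the UI, or automatically based on CPU usage. For example, a threshold set in a YAML file can trigger scale-out of pods when CPU utilization exceeds 80%. This keeps the application highly available during peak load and saves resources during off-peak periods.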

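- Automated rollouts and rollbacks: K8s updates applications with a rolling-update strategy, replacing pods incrementally instead of deleting all of them at once. If a problem occurs during the update, the change can be rolled back, so upgrades do not interrupt the service.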

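- Service discovery and load balancing: K8s gives a group of pods a single, unified access point and load-balances traffic across the associated containers.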

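- Secret and configuration management: K8s manages secrets and application configuration without exposing sensitive data in images, which improves the security of that data. Frequently used configuration can also be stored in K8s for applications to consume.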

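- Storage orchestration: external storage can be mounted and treated as part of the cluster's resources. This includes local storage, public cloud storage, and network storage (NFS, GlusterFS), and it greatly increases the flexibility of storage usage.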

- Batch processing: one-off tasks (Job) and scheduled tasks (CronJob) are supported for batch data processing and analysis.
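Two of the behaviours above come down to simple arithmetic performed by controllers.

- The Kubernetes documentation gives the Horizontal Pod Autoscaler rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue).
- For Deployments, the documentation states that a percentage maxSurge is rounded up and a percentage maxUnavailable is rounded down. Both default to 25%.

The bash sketch below only evaluates those formulas. The function names are hypothetical. The autoscaler's tolerance band, its min/max replica bounds, and its stabilization windows are deliberately left out.

```bash
#!/usr/bin/env bash
# Hypothetical helpers reproducing two controller calculations.
# Bash arithmetic is integer-only, so ceil(a / b) is written as (a + b - 1) / b.

# HPA: desiredReplicas = ceil(currentReplicas * currentCPU / targetCPU)
# (tolerance, minReplicas/maxReplicas and stabilization are NOT modeled)
desired_replicas() {
  local current=$1      # pods currently running
  local cpu_now=$2      # current average CPU utilization, percent
  local cpu_target=$3   # target utilization, e.g. 80
  echo $(( (current * cpu_now + cpu_target - 1) / cpu_target ))
}

# Rolling update: a percentage maxSurge rounds up, maxUnavailable rounds down.
rolling_limits() {
  local replicas=$1 surge_pct=$2 unavail_pct=$3
  local surge=$(( (replicas * surge_pct + 99) / 100 ))
  local unavail=$(( replicas * unavail_pct / 100 ))
  echo "maxSurge=${surge} maxUnavailable=${unavail}"
}

# 3 pods at 120% CPU with an 80% target -> ceil(4.5) = 5 pods (scale out)
echo "3 pods at 120% -> $(desired_replicas 3 120 80)"
# 4 pods at 40% CPU with an 80% target -> 2 pods (scale in)
echo "4 pods at 40%  -> $(desired_replicas 4 40 80)"
# 10 replicas with the 25%/25% defaults: at most 13 pods, at least 8 available
rolling_limits 10 25 25
```

A default rolling update therefore replaces a few pods per step rather than strictly one at a time. Rolling back is an explicit action, for example with `kubectl rollout undo`.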

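Deploying K8s

Note: some commands and file listings below end with a remark that starts with `//`. These remarks are explanatory annotations only. Leave them out when typing: a shell would pass the remark to the command as an extra argument, and JSON files do not allow comments.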

Master: 192.168.20.11/24 kube-apiserver kube-controller-manager kube-scheduler etcd

Node01: 192.168.20.22/24 kubelet kube-proxy docker flannel etcd

Node02: 192.168.20.33/24 kubelet kube-proxy docker flannel etcd

Deploy Docker on the nodes

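[root@docker ~]# yum -y install yum-utils device-mapper-persistent-data lvm2 //install prerequisites: yum-utils provides yum-config-manager; device-mapper-persistent-data and lvm2 are needed by the devicemapper storage driver

[root@docker ~]# cd /etc/yum.repos.d/ //switch the yum repository

[root@docker yum.repos.d]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo //add the Aliyun Docker CE mirror

[root@docker yum.repos.d]# setenforce 0 //put SELinux into permissive mode (until the next reboot)

[root@docker yum.repos.d]# yum -y install docker-ce

[root@docker yum.repos.d]# systemctl start docker //start Docker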

[root@docker yum.repos.d]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

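- Configure a registry mirror to speed up image pulls. After you register an Alibaba Cloud account, an accelerator address is assigned automatically in the Container Registry service.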

[root@docker ~]# cd /etc/docker

[root@docker docker]# tee /etc/docker/daemon.json <<-'EOF'

> {

> "registry-mirrors": ["https://k79j39r9.mirror.aliyuncs.com"]

> }

> EOF

{

"registry-mirrors": ["https://k79j39r9.mirror.aliyuncs.com"]

}

[root@docker docker]# systemctl daemon-reload

[root@docker docker]# systemctl restart docker

- Network tuning: enable IP forwarding

[root@docker docker]# vim /etc/sysctl.conf

net.ipv4.ip_forward=1 //append this line at the end of the file

[root@docker docker]# sysctl -p

net.ipv4.ip_forward = 1

[root@docker docker]# systemctl restart network

[root@docker docker]# systemctl restart docker

[root@docker docker]# ls

daemon.json key.json

[root@docker docker]# cat daemon.json //other settings, such as private registries and their addresses, can also be defined in this file

{

"registry-mirrors": ["https://k79j39r9.mirror.aliyuncs.com"]

}
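Editing /etc/sysctl.conf with vim, as above, can leave duplicate lines if the step is repeated. The sketch below is a minimal idempotent alternative: it appends the setting only when it is missing. The function name is hypothetical. The target file is a parameter, so the logic can be tried on a scratch file first. Then point it at /etc/sysctl.conf and run `sysctl -p`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ensure "net.ipv4.ip_forward=1" appears in a sysctl file.
# It appends the line only if an enabled ip_forward entry is not already there;
# because `sysctl -p` applies lines top to bottom, an appended "=1" overrides
# an earlier "=0" in the same file.
ensure_ip_forward() {
  local conf=${1:-/etc/sysctl.conf}
  if grep -Eq '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1[[:space:]]*$' "$conf" 2>/dev/null; then
    echo "already enabled in $conf"
  else
    echo 'net.ipv4.ip_forward=1' >> "$conf"
    echo "appended to $conf"
  fi
}

# Demonstration on a scratch file rather than the real /etc/sysctl.conf
tmp=$(mktemp)
ensure_ip_forward "$tmp"   # appends the line
ensure_ip_forward "$tmp"   # second run leaves the file unchanged
rm -f "$tmp"
```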

etcd cluster deployment and CA certificates

[root@k8s ~]# systemctl stop firewalld

[root@k8s ~]# systemctl disable firewalld

[root@k8s ~]#

[root@k8s ~]# setenforce 0

[root@k8s ~]# mkdir k8s

[root@k8s ~]# cd k8s/

[root@k8s k8s]# ls //upload the certificate-generation script and the etcd startup script first

etcd-cert.sh etcd.sh

[root@k8s k8s]# cat etcd-cert.sh

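cat > ca-config.json <<EOF //CA signing configuration

{

"signing": { //signing settings

"default": {

"expiry": "87600h" //certificate validity: 87600h (10 years) instead of the default 1 year

},

"profiles": { //signing profiles

"www": { //profile name, referenced later with -profile=www

"expiry": "87600h",

"usages": [ //permitted key usages

"signing", //signing

"key encipherment", //key encipherment

"server auth", //TLS server authentication

"client auth" //TLS client authentication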

]

}

}

}

}

EOF

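cat > ca-csr.json <<EOF //certificate signing request (CSR) for the CA

{

"CN": "etcd CA", //Common Name of the etcd CA (shared by all three nodes)

"key": {

"algo": "rsa", //RSA (asymmetric) key

"size": 2048 //key length: 2048 bits

},

"names": [ //subject fields of the certificate

{

"C": "CN", //country

"L": "Beijing", //locality (city)

"ST": "Beijing" //state or province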

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF //CSR for the etcd server certificate

{

"CN": "etcd",

"hosts": [ //IP addresses of the three etcd nodes

"192.168.20.11",

"192.168.20.22",

"192.168.20.33"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
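The `hosts` array in server-csr.json must list every IP address the etcd members serve on. Otherwise TLS verification of connections to a missing address fails. When the member list changes, the file can be regenerated from a list of IPs instead of edited by hand. The sketch below does this. The function name is hypothetical, and it keeps the same CN and subject fields as above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an etcd server-csr.json whose "hosts" array
# contains every IP passed as an argument.
server_csr_json() {
  local hosts="" ip
  for ip in "$@"; do
    # Separate entries with a comma, one IP per line.
    hosts+="${hosts:+,}"$'\n'"    \"${ip}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
}

# Redirect to server-csr.json before running cfssl gencert.
server_csr_json 192.168.20.11 192.168.20.22 192.168.20.33
```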

[root@k8s k8s]# cat etcd.sh

#!/bin/bash

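# example: ./etcd.sh etcd01 192.168.20.11 etcd02=https://192.168.20.22:2380,etcd03=https://192.168.20.33:2380

ETCD_NAME=$1 //positional parameter 1: etcd member name

ETCD_IP=$2 //positional parameter 2: IP address of this node

ETCD_CLUSTER=$3 //positional parameter 3: the other cluster members

WORK_DIR=/opt/etcd //working directory

cat <<EOF >$WORK_DIR/cfg/etcd //create the etcd configuration file in the working directory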

#[Member]

ETCD_NAME="${ETCD_NAME}" //etcd member name

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380" //port 2380 on this IP: communication between cluster members

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379" //port 2379 on this IP: communication with external clients

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379" //client URL advertised to others, accessed over HTTPS

ETCD_INITIAL_CLUSTER="${ETCD_NAME}=https://${ETCD_IP}:2380,${ETCD_CLUSTER}" //all initial cluster members, starting with this one

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster" //cluster token; a unique value keeps different clusters from joining each other by mistake

ETCD_INITIAL_CLUSTER_STATE="new" //"new" means a new cluster is being bootstrapped

EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service //create the etcd systemd unit file

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd //path of the etcd configuration file

ExecStart=${WORK_DIR}/bin/etcd \ //start command; its parameters follow

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \ //the following settings concern the cluster itself

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \ //cluster token used while bootstrapping the cluster

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \ //certificate parameters

--key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536 //maximum number of open file descriptors

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload //reload systemd unit files

systemctl enable etcd

systemctl restart etcd

Create a download script for the cfssl tools

[root@k8s etcd-cert]# cat cfssl.sh

# Download the certificate tool from the official site (-o: output file) into /usr/local/bin so it is on the PATH

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

# Download cfssljson, which turns cfssl's JSON output into certificate and key files

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

# Download cfssl-certinfo, which displays certificate information

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

# Make the tools executable

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

[root@k8s etcd-cert]# bash cfssl.sh

[root@k8s etcd-cert]# cd /usr/local/bin

[root@k8s bin]# ls

cfssl cfssl-certinfo cfssljson

[root@k8s bin]# chmod +x *

[root@k8s etcd-cert]# ./etcd-cert.sh

2021/09/30 11:05:15 [INFO] generating a new CA key and certificate from CSR

2021/09/30 11:05:15 [INFO] generate received request

2021/09/30 11:05:15 [INFO] received CSR

2021/09/30 11:05:15 [INFO] generating key: rsa-2048

2021/09/30 11:05:15 [INFO] encoded CSR

2021/09/30 11:05:15 [INFO] signed certificate with serial number 301194785248313759559097048574537696331972284983

2021/09/30 11:05:15 [INFO] generate received request

2021/09/30 11:05:15 [INFO] received CSR

2021/09/30 11:05:15 [INFO] generating key: rsa-2048

2021/09/30 11:05:15 [INFO] encoded CSR

2021/09/30 11:05:15 [INFO] signed certificate with serial number 606502063507097458749548619643100358626736021444

2021/09/30 11:05:15 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s etcd-cert]# ls

ca-config.json ca-csr.json ca.pem server.csr server-key.pem

ca.csr ca-key.pem etcd-cert.sh server-csr.json server.pem

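The four files ending in .pem are the CA certificate (ca.pem), the CA private key (ca-key.pem), the server certificate (server.pem), and the server private key (server-key.pem).

Deploy the etcd cluster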

[root@k8s k8s]# ls

etcd-cert etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

etcd.sh flannel-v0.10.0-linux-amd64.tar.gz

[root@k8s k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

[root@k8s k8s]# cd etcd-v3.3.10-linux-amd64/

[root@k8s etcd-v3.3.10-linux-amd64]# ls

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

[root@k8s etcd-v3.3.10-linux-amd64]# mkdir -p /opt/etcd/{cfg,bin,ssl} //create the etcd working directories

[root@k8s etcd-v3.3.10-linux-amd64]# mv etcd etcdctl /opt/etcd/bin/

[root@k8s etcd-v3.3.10-linux-amd64]# cd ..

[root@k8s k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

[root@k8s k8s]# ls

etcd-cert etcd-v3.3.10-linux-amd64 flannel-v0.10.0-linux-amd64.tar.gz

etcd.sh etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

[root@k8s k8s]# bash etcd.sh etcd01 192.168.20.11 etcd02=https://192.168.20.22:2380,etcd03=httpds://192.168.20.33:2380

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

Job for etcd.service failed because the control process exited with error code. See "systemctl status etcd.service" and "journalctl -xe" for details.

[root@k8s k8s]# journalctl -xe

Sep 30 13:52:02 k8s etcd[75157]: etcd Version: 3.3.10

Sep 30 13:52:02 k8s etcd[75157]: Git SHA: 27fc7e2

Sep 30 13:52:02 k8s etcd[75157]: Go Version: go1.10.4

Sep 30 13:52:02 k8s etcd[75157]: Go OS/Arch: linux/amd64

Sep 30 13:52:02 k8s etcd[75157]: setting maximum number of CPUs to 4, total number of available CPUs is

Sep 30 13:52:02 k8s etcd[75157]: peerTLS: cert = /opt/etcd/ssl/server.pem, key = /opt/etcd/ssl/server-k

Sep 30 13:52:02 k8s etcd[75157]: listening for peers on https://192.168.20.11:2380

Sep 30 13:52:02 k8s etcd[75157]: The scheme of client url http://127.0.0.1:2379 is HTTP while peer key/

Sep 30 13:52:02 k8s etcd[75157]: listening for client requests on 127.0.0.1:2379

Sep 30 13:52:02 k8s etcd[75157]: listening for client requests on 192.168.20.11:2379

Sep 30 13:52:02 k8s etcd[75157]: error setting up initial cluster: URL scheme must be http, https, unix

Sep 30 13:52:02 k8s systemd[1]: etcd.service: main process exited, code=exited, status=1/FAILURE

Sep 30 13:52:02 k8s systemd[1]: Failed to start Etcd Server.

-- Subject: Unit etcd.service has failed

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit etcd.service has failed.

--

-- The result is failed.

Sep 30 13:52:02 k8s systemd[1]: Unit etcd.service entered failed state.

Sep 30 13:52:02 k8s systemd[1]: etcd.service failed.

Sep 30 13:52:02 k8s systemd[1]: etcd.service holdoff time over, scheduling restart.

Sep 30 13:52:02 k8s systemd[1]: Stopped Etcd Server.

-- Subject: Unit etcd.service has finished shutting down

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit etcd.service has finished shutting down.

Sep 30 13:52:02 k8s systemd[1]: start request repeated too quickly for etcd.service

Sep 30 13:52:02 k8s systemd[1]: Failed to start Etcd Server.

-- Subject: Unit etcd.service has failed

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit etcd.service has failed.

--

-- The result is failed.

Sep 30 13:52:02 k8s systemd[1]: Unit etcd.service entered failed state.

Sep 30 13:52:02 k8s systemd[1]: etcd.service failed.

The start failed because of the typo `httpds` in the etcd03 URL of the command above, which produces the error `URL scheme must be http, https, unix`. Rerun the script with `etcd03=https://192.168.20.33:2380`.

Even with a correct URL, starting etcd on the master may block or time out until etcd is also started on the other two nodes. This happens because the first member waits for the rest of the cluster. Copy the files to the nodes and start etcd there, as described below.

[root@k8s k8s]# scp -r /opt/etcd/ root@192.168.20.22:/opt/

The authenticity of host '192.168.20.22 (192.168.20.22)' can't be established.

ECDSA key fingerprint is SHA256:EJF/ChFA3xatEi7E4/nQiImp/Vtn94mqcgYTm17oJ7Y.

ECDSA key fingerprint is MD5:a9:7f:b8:51:01:2a:c1:c1:fb:a4:24:8c:49:88:2a:bf.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.20.22' (ECDSA) to the list of known hosts.

root@192.168.20.22's password:

etcd 100% 510 689.4KB/s 00:00

etcd 100% 18MB 89.6MB/s 00:00

etcdctl 100% 15MB 141.1MB/s 00:00

ca-key.pem 100% 1675 1.9MB/s 00:00

ca.pem 100% 1265 1.4MB/s 00:00

server-key.pem 100% 1679 2.5MB/s 00:00

server.pem 100% 1338 2.6MB/s 00:00

[root@k8s k8s]# scp -r /opt/etcd/ root@192.168.20.33:/opt/

The authenticity of host '192.168.20.33 (192.168.20.33)' can't be established.

ECDSA key fingerprint is SHA256:E0gR+AZivW18l8JOhHq4BM6NG54S/av2VBzhrZ23UhE.

ECDSA key fingerprint is MD5:49:92:0a:cf:62:e3:88:b2:3b:86:4f:d0:71:4c:64:d3.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.20.33' (ECDSA) to the list of known hosts.

root@192.168.20.33's password:

etcd 100% 510 629.1KB/s 00:00

etcd 100% 18MB 132.1MB/s 00:00

etcdctl 100% 15MB 143.5MB/s 00:00

ca-key.pem 100% 1675 2.0MB/s 00:00

ca.pem 100% 1265 204.0KB/s 00:00

server-key.pem 100% 1679 2.2MB/s 00:00

server.pem 100% 1338 2.2MB/s 00:00


[root@k8s k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.20.22:/usr/lib/systemd/system/

root@192.168.20.22's password:

etcd.service 100% 923 1.0MB/s 00:00

[root@k8s k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.20.33:/usr/lib/systemd/system/

root@192.168.20.33's password:

etcd.service 100% 923 1.1MB/s 00:00

Edit the etcd configuration on the two nodes

- The master is etcd01, node1 is etcd02, and node2 is etcd03. Change the member name and IP addresses accordingly.

[root@node1 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.20.22:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.20.22:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.20.22:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.20.22:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.20.11:2380,etcd02=https://192.168.20.22:2380,etcd03=https://192.168.20.33:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
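Rather than editing the copied file by hand on each node, the same file can be generated per member. The bash sketch below is hypothetical and is not one of the article's scripts. It renders the configuration for a given name and IP. It also rejects a member list whose peer URLs are not `https://`, which catches typos such as `httpds`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render /opt/etcd/cfg/etcd for one member instead of
# editing the copied file by hand. Names and IPs match this article's layout.
CLUSTER="etcd01=https://192.168.20.11:2380,etcd02=https://192.168.20.22:2380,etcd03=https://192.168.20.33:2380"

# Fail if any peer URL in a name=url,name=url list is not https://
check_cluster_schemes() {
  local -a entries
  local entry
  IFS=',' read -ra entries <<< "$1"
  for entry in "${entries[@]}"; do
    if [[ ${entry#*=} != https://* ]]; then
      echo "bad peer URL: $entry" >&2
      return 1
    fi
  done
}

# Print the config file for member $1 listening on IP $2.
render_etcd_cfg() {
  local name=$1 ip=$2
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# On node1 this would be: render_etcd_cfg etcd02 192.168.20.22 > /opt/etcd/cfg/etcd
check_cluster_schemes "$CLUSTER" && render_etcd_cfg etcd02 192.168.20.22
```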

Start etcd on all nodes and check the cluster health

[root@k8s k8s]# systemctl start etcd

[root@k8s k8s]# cd /root/k8s/etcd-cert/

[root@k8s etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.20.11:2379,https://192.168.20.22:2379,https://192.168.20.33:2379" cluster-health

member 98aa99c4dcd6c4 is healthy: got healthy result from https://192.168.20.11:2379

member 73631a4ae16c039a is healthy: got healthy result from https://192.168.20.33:2379

member d7dbef5017b35f0a is healthy: got healthy result from https://192.168.20.22:2379

cluster is healthy
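The three HTTPS endpoints are typed out in full for every etcdctl call. A small sketch that builds the list once keeps the commands shorter and avoids typos. The variable and function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build "https://IP:PORT,https://IP:PORT,..." from a list of IPs.
etcd_endpoints() {
  local port=$1; shift
  local out="" ip
  for ip in "$@"; do
    out+="${out:+,}https://${ip}:${port}"   # comma only between entries
  done
  echo "$out"
}

ENDPOINTS=$(etcd_endpoints 2379 192.168.20.11 192.168.20.22 192.168.20.33)
echo "$ENDPOINTS"
# Usage, from /root/k8s/etcd-cert as in the article:
#   /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem \
#     --key-file=server-key.pem --endpoints="$ENDPOINTS" cluster-health
```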

flannel network configuration

- On the master, write the subnet range to be allocated to containers into etcd

[root@k8s etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.20.11:2379,https://192.168.20.22:2379,https://192.168.20.33:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

[root@k8s k8s]# ls

etcd-cert etcd-v3.3.10-linux-amd64 flannel-v0.10.0-linux-amd64.tar.gz

etcd.sh etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

[root@k8s k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.20.22:/root

root@192.168.20.22's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 127.6MB/s 00:00

[root@k8s k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.20.33:/root

root@192.168.20.33's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 131.3MB/s 00:0
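The `Network` value written above reserves 172.17.0.0/16 for containers. Flannel leases each node a smaller subnet from it, a /24 by default, which is why node1 later gets 172.17.62.0/24 and node2 172.17.67.0/24. The bash sketch below shows the underlying arithmetic; the helper names are hypothetical.

- Dotted addresses are converted to integers and masked to test membership.
- 2^(24-16) = 256 subnets of size /24 fit in the /16.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for IPv4 CIDR arithmetic in pure bash.

# 172.17.62.1 -> 2886811137
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NET/PREFIX -> exit status 0 if IP lies inside the network
in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

# Number of /child subnets that fit in a /parent network: 2^(child - parent)
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

in_cidr 172.17.62.1 172.17.0.0/16 && echo "172.17.62.1 is inside the flannel network"
in_cidr 192.168.20.22 172.17.0.0/16 || echo "192.168.20.22 is outside it"
echo "/24 subnets in a /16: $(subnet_count 16 24)"
```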

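- Run the following on node1 and node2

[root@node1 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz //extract the package

flanneld

mk-docker-opts.sh

README.md

[root@node1 ~]# mkdir -p /opt/kubernetes/{cfg,bin,ssl} //directories used by flannel.sh

[root@node1 ~]# mv flanneld mk-docker-opts.sh /opt/kubernetes/bin/

[root@node1 ~]# vim flannel.sh //startup script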

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

[root@node1 ~]# bash flannel.sh https://192.168.20.11:2379,https://192.168.20.22:2379,https://192.168.20.33:2379 //start the flannel network

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

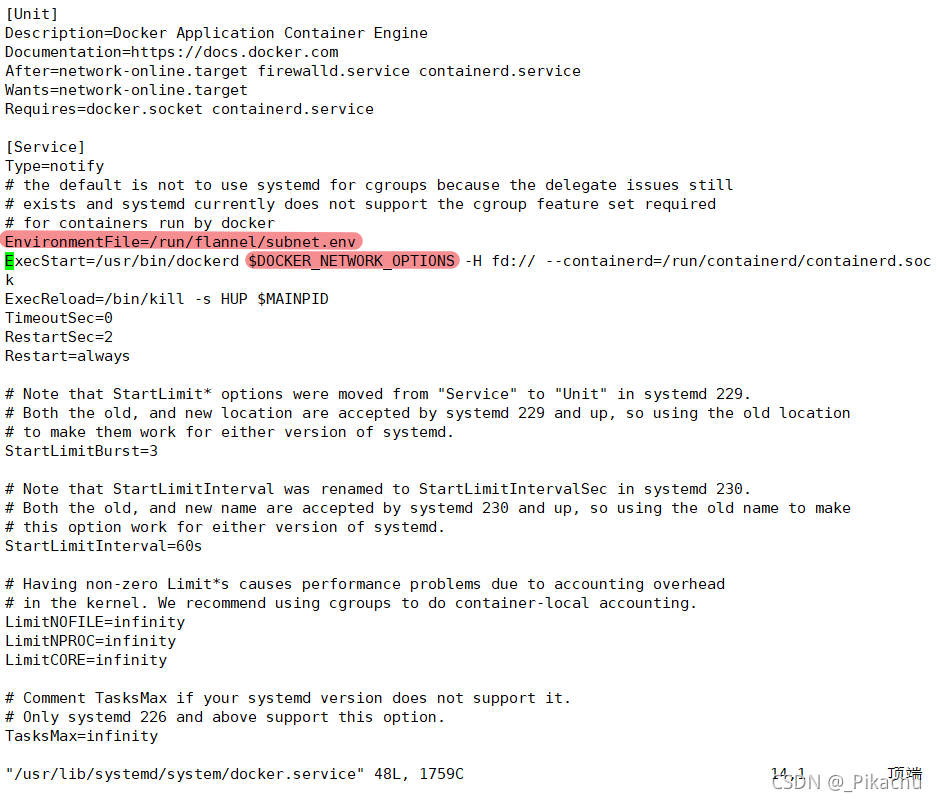

[root@node1 ~]# vim /usr/lib/systemd/system/docker.service //configure Docker to use the flannel subnet

[root@node1 ~]# cat /run/flannel/subnet.env //--bip sets the docker0 bridge address and subnet that Docker uses at startup

DOCKER_OPT_BIP="--bip=172.17.62.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.62.1/24 --ip-masq=false --mtu=1450"

[root@node1 ~]# systemctl daemon-reload //reload unit files, then restart Docker

[root@node1 ~]# systemctl restart docker

[root@node1 ~]# ifconfig //check the flannel interfaces

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.62.1 netmask 255.255.255.0 broadcast 172.17.62.255

ether 02:42:8e:f1:3b:c1 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.20.22 netmask 255.255.255.0 broadcast 192.168.20.255

inet6 fe80::41cb:ed52:e2e0:2c57 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:8e:58:63 txqueuelen 1000 (Ethernet)

RX packets 278596 bytes 192886220 (183.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 171913 bytes 19610376 (18.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.62.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::c81e:28ff:fe03:8fc1 prefixlen 64 scopeid 0x20<link>

ether ca:1e:28:03:8f:c1 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 36 overruns 0 carrier 0 collisions 0
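The change made to docker.service is not shown above. A common approach is to add `EnvironmentFile=/run/flannel/subnet.env` under `[Service]` and append `$DOCKER_NETWORK_OPTIONS` to the dockerd `ExecStart=` line. This is an assumption, not the author's recorded edit. It is how docker0 ends up with 172.17.62.1/24 and an MTU of 1450. The sketch below shows what Docker receives from that variable by sourcing a scratch copy of the subnet.env contents shown above. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: load a flannel subnet.env file and print the options
# Docker would receive through $DOCKER_NETWORK_OPTIONS.
docker_opts_from() {
  # Subshell, so the sourced variables do not leak into the caller.
  ( . "$1" && echo "${DOCKER_NETWORK_OPTIONS# }" )
}

# Scratch copy of the /run/flannel/subnet.env contents shown above.
env_file=$(mktemp)
cat > "$env_file" <<'EOF'
DOCKER_OPT_BIP="--bip=172.17.62.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=172.17.62.1/24 --ip-masq=false --mtu=1450"
EOF

docker_opts_from "$env_file"
rm -f "$env_file"
```

The 1450-byte MTU leaves room for the 50 bytes of encapsulation that the VXLAN backend adds to every packet on a 1500-byte network.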

- Verification: start a container on node1 and check that it can reach the docker0 gateway (172.17.62.1)

[root@node1 ~]# docker run -it centos:7 /bin/bash

Unable to find image 'centos:7' locally

7: Pulling from library/centos

2d473b07cdd5: Pull complete

Digest: sha256:9d4bcbbb213dfd745b58be38b13b996ebb5ac315fe75711bd618426a630e0987

Status: Downloaded newer image for centos:7

[root@f983696b9946 /]#

[root@f983696b9946 /]# yum -y install net-tools //install net-tools, which provides ifconfig

[root@f983696b9946 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.62.2 netmask 255.255.255.0 broadcast 172.17.62.255

ether 02:42:ac:11:3e:02 txqueuelen 0 (Ethernet)

RX packets 25877 bytes 19804749 (18.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 13788 bytes 748564 (731.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@f983696b9946 /]# ping 172.17.62.1

PING 172.17.62.1 (172.17.62.1) 56(84) bytes of data.

64 bytes from 172.17.62.1: icmp_seq=1 ttl=64 time=0.081 ms

64 bytes from 172.17.62.1: icmp_seq=2 ttl=64 time=0.049 ms

^C

--- 172.17.62.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.049/0.065/0.081/0.016 ms

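- Next, test connectivity between the centos:7 containers on the two nodes. This is what shows flannel routing traffic between hosts.

- IP address of the centos:7 container on node2: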

[root@fd49e7aef949 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.67.2 netmask 255.255.255.0 broadcast 172.17.67.255

ether 02:42:ac:11:43:02 txqueuelen 0 (Ethernet)

RX packets 25635 bytes 19789884 (18.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10477 bytes 569495 (556.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

- From node1, ping the container on node2

[root@node1 ~]# ping 172.17.67.2

PING 172.17.67.2 (172.17.67.2) 56(84) bytes of data.

64 bytes from 172.17.67.2: icmp_seq=1 ttl=63 time=0.462 ms

64 bytes from 172.17.67.2: icmp_seq=2 ttl=63 time=0.344 ms

64 bytes from 172.17.67.2: icmp_seq=3 ttl=63 time=0.281 ms

64 bytes from 172.17.67.2: icmp_seq=4 ttl=63 time=0.316 ms

64 bytes from 172.17.67.2: icmp_seq=5 ttl=63 time=0.325 ms

^C

--- 172.17.67.2 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4007ms

rtt min/avg/max/mdev = 0.281/0.345/0.462/0.064 ms

Generate certificates for kube-apiserver

[root@k8s k8s]# unzip master.zip

Archive: master.zip

inflating: apiserver.sh

inflating: controller-manager.sh

inflating: scheduler.sh

[root@k8s k8s]# cat apiserver.sh

#!/bin/bash

MASTER_ADDRESS=$1

ETCD_SERVERS=$2

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \\

--v=4 \\

--etcd-servers=${ETCD_SERVERS} \\

--bind-address=${MASTER_ADDRESS} \\

--secure-port=6443 \\

--advertise-address=${MASTER_ADDRESS} \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--kubelet-https=true \\

--enable-bootstrap-token-auth \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-50000 \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

[root@k8s k8s]# cat controller-manager.sh

#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\

--v=4 \\

--master=${MASTER_ADDRESS}:8080 \\

--leader-elect=true \\

--address=127.0.0.1 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

[root@k8s k8s]# cat scheduler.sh

#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true \\

--v=4 \\

--master=${MASTER_ADDRESS}:8080 \\

--leader-elect"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl restart kube-scheduler

[root@k8s k8s]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@k8s k8s]# mkdir k8s-cert

[root@k8s k8s]# cd k8s-cert/

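[root@k8s k8s-cert]# vim k8s-cert.sh

(The listing below starts partway through the script. The omitted beginning writes ca-config.json, whose signing profile must be named kubernetes because the commands below use -profile=kubernetes.)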

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.20.11",

"192.168.20.22",

"192.168.20.33",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

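EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server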

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
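The `10.0.0.1` entry in the server certificate's `hosts` list is not arbitrary. The master scripts use `--service-cluster-ip-range=10.0.0.0/24`, and the built-in `kubernetes` Service is given the first address of that range. Pods therefore reach the API server through 10.0.0.1, and the certificate must cover that address. A bash sketch of the calculation follows; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: compute the first usable address of a service CIDR,
# which is the ClusterIP of the default "kubernetes" Service.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

first_service_ip() {
  local net=${1%/*} prefix=${1#*/}
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  # Network address (host bits cleared) plus one.
  int_to_ip $(( ($(ip_to_int "$net") & mask) + 1 ))
}

# --service-cluster-ip-range used by apiserver.sh and controller-manager.sh
first_service_ip 10.0.0.0/24
```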

- Generate the K8s certificates

[root@k8s k8s-cert]# bash k8s-cert.sh

2021/10/02 21:28:54 [INFO] generating a new CA key and certificate from CSR

2021/10/02 21:28:54 [INFO] generate received request

2021/10/02 21:28:54 [INFO] received CSR

2021/10/02 21:28:54 [INFO] generating key: rsa-2048

2021/10/02 21:28:54 [INFO] encoded CSR

2021/10/02 21:28:54 [INFO] signed certificate with serial number 433405011884562314292385370033233890577832238804

2021/10/02 21:28:54 [INFO] generate received request

2021/10/02 21:28:54 [INFO] received CSR

2021/10/02 21:28:54 [INFO] generating key: rsa-2048

2021/10/02 21:28:54 [INFO] encoded CSR

2021/10/02 21:28:54 [INFO] signed certificate with serial number 549768035499516233389525663943952839636890971840

2021/10/02 21:28:54 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2021/10/02 21:28:54 [INFO] generate received request

2021/10/02 21:28:54 [INFO] received CSR

2021/10/02 21:28:54 [INFO] generating key: rsa-2048

2021/10/02 21:28:54 [INFO] encoded CSR

2021/10/02 21:28:54 [INFO] signed certificate with serial number 101547408584247106811868981292166022526631595064

2021/10/02 21:28:54 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2021/10/02 21:28:54 [INFO] generate received request

2021/10/02 21:28:54 [INFO] received CSR

2021/10/02 21:28:54 [INFO] generating key: rsa-2048

2021/10/02 21:28:55 [INFO] encoded CSR

2021/10/02 21:28:55 [INFO] signed certificate with serial number 668824476389808026338365974127236706346340870192

2021/10/02 21:28:55 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s k8s-cert]# ls

admin.csr admin.pem ca-csr.json k8s-cert.sh kube-proxy-key.pem server-csr.json

admin-csr.json ca-config.json ca-key.pem kube-proxy.csr kube-proxy.pem server-key.pem

admin-key.pem ca.csr ca.pem kube-proxy-csr.json server.csr server.pem

[root@k8s k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/

[root@k8s k8s-cert]# cd ..

[root@k8s k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

[root@k8s k8s]# cd /root/k8s/kubernetes/server/bin

[root@k8s bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

[root@k8s bin]# cd /root/k8s

[root@k8s k8s]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

f9612b7c8f61100472069fe1237be3d6

[root@k8s k8s]# vim /opt/kubernetes/cfg/token.csv
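What goes into token.csv is only shown at the end of this section, in the form `token,user,uid,"group"`. The sketch below generates the bootstrap token with the same `head | od | tr` pipeline used above and writes that line without an interactive editor. The function names are hypothetical. The output path is a parameter, so the sketch can be tried on a scratch file instead of /opt/kubernetes/cfg/token.csv.

```bash
#!/usr/bin/env bash
# Generate a random 32-hex-character token, as in the command above.
gen_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Write one token.csv line in the format token,user,uid,"group".
write_token_csv() {
  local file=$1 token
  token=$(gen_token)
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$file"
}

# Real target: /opt/kubernetes/cfg/token.csv; a scratch file is used here.
out=$(mktemp)
write_token_csv "$out"
cat "$out"
rm -f "$out"
```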

[root@k8s k8s]# bash apiserver.sh 192.168.20.11 https://192.168.20.22:2379,https://192.168.20.33:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@k8s k8s]# ps aux | grep kube

root 19644 108 7.8 392876 304440 ? Ssl 21:44 0:07 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.20.22:2379,https://192.168.20.33:2379 --bind-address=192.168.20.11 --secure-port=6443 --advertise-address=192.168.20.11 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem

root 19662 0.0 0.0 112724 984 pts/1 R+ 21:44 0:00 grep --color=auto kube

[root@k8s k8s]# cat /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.20.22:2379,https://192.168.20.33:2379 \

--bind-address=192.168.20.11 \

--secure-port=6443 \

--advertise-address=192.168.20.11 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

[root@k8s k8s]# netstat -ntap | grep 6443

tcp 0 0 192.168.20.11:6443 0.0.0.0:* LISTEN 19644/kube-apiserve

tcp 0 0 192.168.20.11:6443 192.168.20.11:39798 ESTABLISHED 19644/kube-apiserve

tcp 0 0 192.168.20.11:39798 192.168.20.11:6443 ESTABLISHED 19644/kube-apiserve

[root@k8s k8s]# netstat -ntap | grep 8080

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 19644/kube-apiserve

[root@k8s k8s]# ./scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@k8s k8s]# ps aux | grep ku

postfix 9409 0.0 0.1 91732 4088 ? S 21:37 0:00 pickup -l -t unix -u

root 19644 24.3 8.1 397360 312816 ? Ssl 21:44 0:08 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.20.22:2379,https://192.168.20.33:2379 --bind-address=192.168.20.11 --secure-port=6443 --advertise-address=192.168.20.11 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem

root 19738 1.8 0.5 46128 19356 ? Ssl 21:44 0:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

root 19757 0.0 0.0 112728 976 pts/1 S+ 21:44 0:00 grep --color=auto ku
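Checking a long `ps` line against the intended configuration by eye is error-prone. The sketch below takes the process command line as one string, followed by the flag names you expect, and prints every expected flag that does not appear. `missing_flags` and the flag list in the usage line are illustrative.

```shell
#!/usr/bin/env bash
# missing_flags CMDLINE FLAG...
# Prints each FLAG (e.g. --client-ca-file) that is absent from CMDLINE.
# Accepts both "--flag=value" and bare "--flag" forms.
missing_flags() {
  local cmdline=" $1 " flag
  shift
  for flag in "$@"; do
    case "$cmdline" in
      *" $flag="* | *" $flag "*) ;;          # present
      *) printf '%s\n' "$flag" ;;            # missing
    esac
  done
}

# Usage on the master (/proc/PID/cmdline is NUL-separated):
#   pid=$(pgrep -x kube-apiserver)
#   missing_flags "$(tr '\0' ' ' < /proc/$pid/cmdline)" \
#     --client-ca-file --tls-cert-file --etcd-cafile --enable-bootstrap-token-auth
```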

[root@k8s k8s]# chmod +x controller-manager.sh

[root@k8s k8s]# ./controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8s k8s]# bash etcd.sh etcd01 192.168.20.11 etcd02=https://192.168.20.22:2380,etcd03=https://192.168.20.33:2380
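The third argument to `etcd.sh` lists the other members as comma-separated `name=https://IP:2380` pairs. A single mistyped address, such as a missing dot, silently breaks cluster formation. The sketch below assembles the string from name/IP pairs and rejects malformed IPv4 addresses. It assumes peer port 2380, as in the command above. `etcd_peers` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# etcd_peers NAME=IP...
# Builds "name=https://IP:2380,..." for the member list passed to etcd.sh.
# Fails if any IP is not a dotted IPv4 address.
etcd_peers() {
  local pair name ip out=""
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  for pair in "$@"; do
    name="${pair%%=*}"
    ip="${pair#*=}"
    if [[ ! $ip =~ $re ]]; then
      echo "etcd_peers: bad IP '$ip' for $name" >&2
      return 1
    fi
    out+="${out:+,}${name}=https://${ip}:2380"   # comma only between entries
  done
  printf '%s\n' "$out"
}

# Usage, with the members from this walkthrough:
#   bash etcd.sh etcd01 192.168.20.11 "$(etcd_peers etcd02=192.168.20.22 etcd03=192.168.20.33)"
```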

[root@k8s k8s]# /opt/kubernetes/bin/kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}
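The `kubectl get cs` check above is the point where all control-plane components should report Healthy. The sketch below turns it into a pass/fail test for scripts. It assumes the `NAME STATUS MESSAGE ERROR` layout shown above, with STATUS in column 2. `cs_check` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# cs_check: reads `kubectl get cs` output on stdin, prints the NAME of every
# component whose STATUS is not "Healthy", and fails if any exist or if no
# component rows were read at all.
cs_check() {
  awk '
    $1 == "NAME" || NF == 0 { next }          # skip header and blank lines
    { rows++; if ($2 != "Healthy") { print $1; bad++ } }
    END { exit ((rows > 0 && bad == 0) ? 0 : 1) }
  '
}

# Usage on the master:
#   kubectl get cs | cs_check && echo "control plane OK"
```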

[root@k8s k8s]# cd kubernetes/

[root@k8s kubernetes]# ls

addons kubernetes-src.tar.gz LICENSES server

[root@k8s kubernetes]# cd server/

[root@k8s server]# ls

bin

[root@k8s server]# cd bin/

[root@k8s bin]# ls

apiextensions-apiserver kube-apiserver.docker_tag kube-proxy

cloud-controller-manager kube-apiserver.tar kube-proxy.docker_tag

cloud-controller-manager.docker_tag kube-controller-manager kube-proxy.tar

cloud-controller-manager.tar kube-controller-manager.docker_tag kube-scheduler

hyperkube kube-controller-manager.tar kube-scheduler.docker_tag

kubeadm kubectl kube-scheduler.tar

kube-apiserver kubelet mounter

[root@k8s bin]# scp kubelet kube-proxy root@192.168.20.22:/opt/kubernetes/bin/

root@192.168.20.22's password:

kubelet 100% 168MB 127.7MB/s 00:01

kube-proxy 100% 48MB 148.9MB/s 00:00
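Only node01 receives the binaries here. According to the deployment plan at the top, node02 (192.168.20.33) needs the same `kubelet` and `kube-proxy`. The sketch below loops over both nodes. It assumes the same `/opt/kubernetes/bin/` target on each node and root SSH access. `push_node_bins` and its `DRY_RUN` switch are hypothetical additions that let you preview the commands first.

```shell
#!/usr/bin/env bash
# push_node_bins NODE_IP...
# Copies kubelet and kube-proxy from the current directory (server/bin) to
# /opt/kubernetes/bin/ on each node. With DRY_RUN=1 the scp commands are only printed.
push_node_bins() {
  local node cmd
  for node in "$@"; do
    cmd=(scp kubelet kube-proxy "root@${node}:/opt/kubernetes/bin/")
    if [[ ${DRY_RUN:-0} == 1 ]]; then
      printf '%s\n' "${cmd[*]}"
    else
      "${cmd[@]}" || return 1
    fi
  done
}

# Usage from kubernetes/server/bin:
#   DRY_RUN=1 push_node_bins 192.168.20.22 192.168.20.33   # preview
#   push_node_bins 192.168.20.22 192.168.20.33
```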

[root@k8s bin]# cd

[root@k8s ~]# cd k8s/

[root@k8s k8s]# mkdir kubeconfig

[root@k8s k8s]# cd kubeconfig/

[root@k8s kubeconfig]# rz -E

rz waiting to receive.

[root@k8s kubeconfig]# mv kubeconfig.sh kubeconfig

[root@k8s kubeconfig]# vim kubeconfig

[root@k8s kubeconfig]# cat /opt/kubernetes/cfg/token.csv

f9612b7c8f61100472069fe1237be3d6,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
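Each line of `token.csv` has the form `token,user,uid,"group"`. The kubelet-bootstrap token presumably has to match the token the `kubeconfig` script embeds in `bootstrap.kubeconfig`, which would explain why the script is edited again after viewing this file. The sketch below generates such a line. It assumes 16 random bytes rendered as 32 lowercase hex characters, which matches the length of the token above. `gen_bootstrap_token_line` is hypothetical.

```shell
#!/usr/bin/env bash
# gen_bootstrap_token_line: prints one token.csv line for the kubelet-bootstrap user.
# 16 random bytes -> 32 lowercase hex characters.
gen_bootstrap_token_line() {
  local token
  token=$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

# Usage (then put the same token into the kubeconfig script):
#   gen_bootstrap_token_line > /opt/kubernetes/cfg/token.csv
```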

[root@k8s kubeconfig]# vim kubeconfig

[root@k8s kubeconfig]# export PATH=$PATH:/opt/kubernetes/bin/

[root@k8s kubeconfig]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

[root@k8s kubeconfig]# bash kubeconfig 192.168.20.11 /root/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".
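The paired "Cluster / User / Context" messages show the script building two kubeconfig files with `kubectl config` subcommands. The sketch below covers the bootstrap half. It assumes the CA file is named `ca.pem` inside the certificate directory passed as the second argument, and that the token argument is the value from `token.csv`. `make_bootstrap_kubeconfig`, `_kc_run` and the `DRY_RUN` switch are hypothetical. The `kubectl config set-cluster/set-credentials/set-context/use-context` subcommands and their flags are standard kubectl.

```shell
#!/usr/bin/env bash
# _kc_run: executes its arguments, or only prints them when DRY_RUN=1.
_kc_run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then printf '%s\n' "$*"; else "$@"; fi
}

# make_bootstrap_kubeconfig APISERVER_IP CERT_DIR TOKEN
# Writes bootstrap.kubeconfig in the current directory (hypothetical helper).
make_bootstrap_kubeconfig() {
  local apiserver="$1" certdir="${2%/}" token="$3"
  local kc=bootstrap.kubeconfig
  _kc_run kubectl config set-cluster kubernetes \
    --certificate-authority="${certdir}/ca.pem" --embed-certs=true \
    --server="https://${apiserver}:6443" --kubeconfig="$kc" || return 1
  _kc_run kubectl config set-credentials kubelet-bootstrap \
    --token="$token" --kubeconfig="$kc" || return 1
  _kc_run kubectl config set-context default --cluster=kubernetes \
    --user=kubelet-bootstrap --kubeconfig="$kc" || return 1
  _kc_run kubectl config use-context default --kubeconfig="$kc"
}

# Usage on the master, reusing the token from token.csv:
#   make_bootstrap_kubeconfig 192.168.20.11 /root/k8s/k8s-cert/ "$(cut -d, -f1 /opt/kubernetes/cfg/token.csv)"
```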

[root@k8s kubeconfig]# ls

bootstrap.kubeconfig kubeconfig kube-proxy.kubeconfig

[root@k8s kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.20.22:/opt/kubernetes/cfg/

root@192.168.20.22's password:

bootstrap.kubeconfig 100% 2167 2.8MB/s 00:00

kube-proxy.kubeconfig 100% 6273 9.3MB/s 00:00

[root@k8s kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

[root@k8s kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-aBXCbQApx0wiTsqUianmzf-dpj5UC3W95zAPYy9GU_4 22s kubelet-bootstrap Pending

[root@k8s kubeconfig]# kubectl certificate approve node-csr-aBXCbQApx0wiTsqUianmzf-dpj5UC3W95zAPYy9GU_4

certificatesigningrequest.certificates.k8s.io/node-csr-aBXCbQApx0wiTsqUianmzf-dpj5UC3W95zAPYy9GU_4 approved

[root@k8s kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-aBXCbQApx0wiTsqUianmzf-dpj5UC3W95zAPYy9GU_4 2m58s kubelet-bootstrap Approved,Issued
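Copying long CSR names by hand is error-prone. The sketch below extracts every pending request. It assumes the `NAME AGE REQUESTOR CONDITION` layout shown above, with CONDITION as the last column. `pending_csrs` is hypothetical. Approving everything it prints is only reasonable when you know every pending request comes from your own nodes.

```shell
#!/usr/bin/env bash
# pending_csrs: reads `kubectl get csr` output on stdin and prints the NAME of
# every request whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk '$1 != "NAME" && NF > 0 && $NF == "Pending" { print $1 }'
}

# Usage on the master (xargs -r skips the call when nothing is pending):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```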

[root@k8s kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.20.22 Ready <none> 12s v1.12.3
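Once its CSR is approved, the node can take a few seconds to show up as Ready. The sketch below checks a single node's STATUS column and can be polled. It assumes the `NAME STATUS ROLES AGE VERSION` layout shown above. `node_ready` is hypothetical, and it treats anything other than exactly `Ready` (for example `NotReady`) as not ready.

```shell
#!/usr/bin/env bash
# node_ready NODE: reads `kubectl get node` output on stdin, prints NODE's
# STATUS if the node is listed, and succeeds only if it is exactly "Ready".
node_ready() {
  awk -v n="$1" '
    $1 == n { st = $2 }
    END { if (st != "") print st; exit ((st == "Ready") ? 0 : 1) }
  '
}

# Usage: poll for up to about 60 seconds until node01 is Ready.
#   for i in $(seq 12); do
#     kubectl get node | node_ready 192.168.20.22 && break
#     sleep 5
#   done
```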

- node (node01, 192.168.20.22)

[root@node1 ~]# unzip node.zip

Archive: node.zip

inflating: proxy.sh

inflating: kubelet.sh

[root@node1 ~]# bash kubelet.sh 192.168.20.22

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node1 ~]# ps aux | grep kube

root 16261 0.0 0.9 325908 18404 ? Ssl 21:39 0:01 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://192.168.20.11:2379,https://192.168.20.22:2379,https://192.168.20.33:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem

root 20494 8.0 2.4 405340 46160 ? Ssl 22:24 0:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=192.168.20.22 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

root 20510 0.0 0.0 112724 980 pts/1 S+ 22:24 0:00 grep --color=auto kube
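The kubelet process line shows the options `kubelet.sh` wrote for this node:

- the node IP as `--hostname-override`;
- `bootstrap.kubeconfig` for the initial certificate request;
- `kubelet.kubeconfig` as the file the kubelet writes once its certificate is issued;
- a pause image from an Aliyun mirror.

The sketch below renders the same options for any node IP, for example 192.168.20.33 to prepare node02. It assumes the options file lives at `/opt/kubernetes/cfg/kubelet`. That location is an assumption; only the flags come from the process line. `render_kubelet_opts` is hypothetical and writes under a configurable root.

```shell
#!/usr/bin/env bash
# render_kubelet_opts NODE_IP [ROOT]
# Writes a KUBELET_OPTS options file mirroring the kubelet process line above.
# The file location (ROOT/opt/kubernetes/cfg/kubelet) is an assumption.
render_kubelet_opts() {
  local ip="$1" root="${2:-}"
  local cfg="/opt/kubernetes/cfg"
  mkdir -p "$root$cfg"
  cat > "$root$cfg/kubelet" <<EOF
KUBELET_OPTS="--logtostderr=true \\
--v=4 \\
--hostname-override=${ip} \\
--kubeconfig=${cfg}/kubelet.kubeconfig \\
--bootstrap-kubeconfig=${cfg}/bootstrap.kubeconfig \\
--config=${cfg}/kubelet.config \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF
}

# Usage on node02 (as root):
#   render_kubelet_opts 192.168.20.33
```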