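The article "Linux mmap system call" mainly covered how mmap is used from user space and what to watch out for. Ultimately, mmap has to enter the kernel. If no addr is specified, the kernel picks a free range of virtual address space. If addr is specified, the kernel adjusts it according to alignment and other requirements. As needed, the kernel then creates a vma (struct vm_area_struct) to record and track the use of user address space. Finally, it performs the mapping based on that vma and the fd.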

Main mmap flow

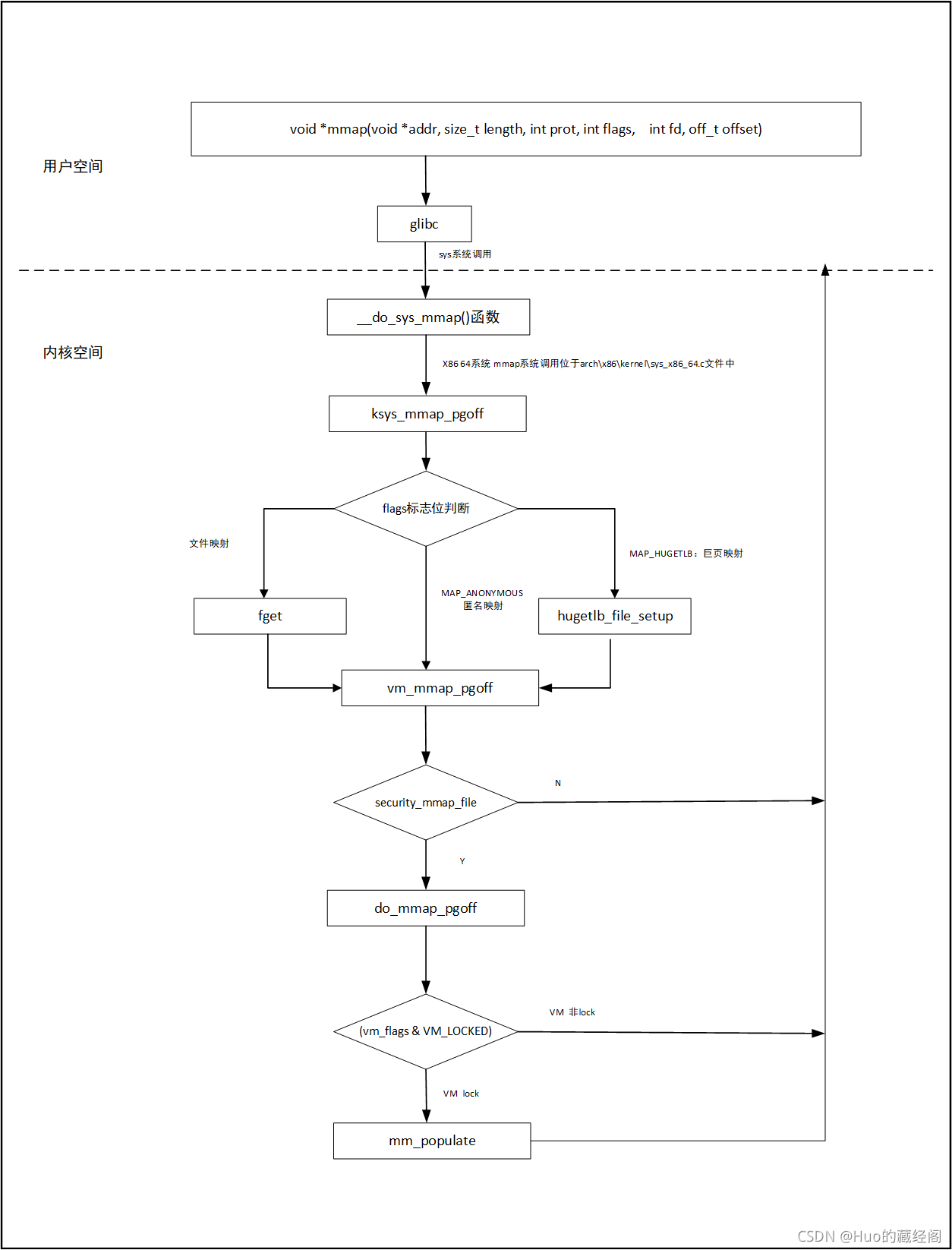

用户空间mmap主要是通过glibc,由glibc通过系统调用最终进入到内核态__do_sys_mmap(),以内核5.8.10版本为例,主要流程图如下:

?主要流程:

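- A user-space call to mmap goes through glibc. glibc traps into the kernel via the system call, and __do_sys_mmap() is invoked.

- __do_sys_mmap is implemented slightly differently on each platform. On x86-64 it is defined, through SYSCALL_DEFINE6(mmap, ...), in arch/x86/kernel/sys_x86_64.c.

- __do_sys_mmap checks whether the offset argument is page-aligned. If it is not, it returns -EINVAL immediately. Otherwise it calls ksys_mmap_pgoff() and passes the offset converted to a page index (off >> PAGE_SHIFT).

- ksys_mmap_pgoff() is the common mmap entry point. It lives in mm/mmap.c and is shared by all architectures. It handles the flags as follows:

  - For a file mapping, it uses fget() to obtain the struct file that corresponds to the passed-in fd. The article "Linux kernel inode VS file" describes the role of struct file in detail.

  - A huge page mapping (MAP_HUGETLB) gets special handling. For an anonymous huge page mapping, the kernel sets up an internal hugetlbfs file. Huge pages are used heavily by databases: they reduce the number of page-table entries and so improve efficiency. The drawback is that they can waste a lot of memory.

  - An anonymous mapping needs no special handling here.

- Control then reaches vm_mmap_pgoff(), which does the actual work.

- vm_mmap_pgoff() first calls security_mmap_file(). This is a Linux Security Module (LSM) hook, and it is how kernel sandboxing features act on mmap. The hook runs the mmap_file checks of the active security modules, which for a file mapping include permission checks on the file. Sandboxing isolates programs and improves system security (see Sandboxing with the Landlock security module [LWN.net]).

- Once the mmap_file checks pass, do_mmap_pgoff() is called. It in turn calls do_mmap() to carry out the mapping.

- do_mmap() finds a suitable range of user virtual address space for the mapping. Where needed, it also creates a vma structure to track the use of user address space.

- Finally, physical memory may be allocated up front by calling mm_populate(). This happens when the mapping is locked in memory (memlock, for example MAP_LOCKED) or when MAP_POPULATE was requested. The main benefit of memory locking is that physical pages are allocated in advance and the virtual-to-physical mapping is pinned. Later accesses then do not trigger page faults, and the pages are never swapped out under memory pressure. Many applications and drivers need this. However, the kernel community advises against locking memory for long periods, because it can lead to memory shortages and fragmentation.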
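The page-alignment check on offset in __do_sys_mmap can be observed from user space. The following is a minimal sketch that assumes 64-bit Linux (x86-64 or arm64). It invokes the raw mmap system call through syscall(SYS_mmap, ...) and so bypasses the glibc wrapper, which may reject the offset itself. The helper name raw_mmap_errno is hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Invoke the raw mmap system call for a one-page private anonymous mapping
 * with the given byte offset, bypassing the glibc wrapper.
 * Returns 0 on success, otherwise the errno value set by the kernel. */
static int raw_mmap_errno(long offset)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);

    long ret = syscall(SYS_mmap, 0L, page, (long)PROT_READ,
                       (long)(MAP_PRIVATE | MAP_ANONYMOUS), -1L, offset);
    if (ret == -1)
        return errno;          /* e.g. EINVAL from the offset check */

    munmap((void *)ret, (size_t)page);
    return 0;
}
```

Passing offset 1 should fail with EINVAL, while a page-sized offset is accepted. For a private anonymous mapping, the offset has no further effect, because do_mmap() later replaces pgoff with addr >> PAGE_SHIFT.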

do_mmap

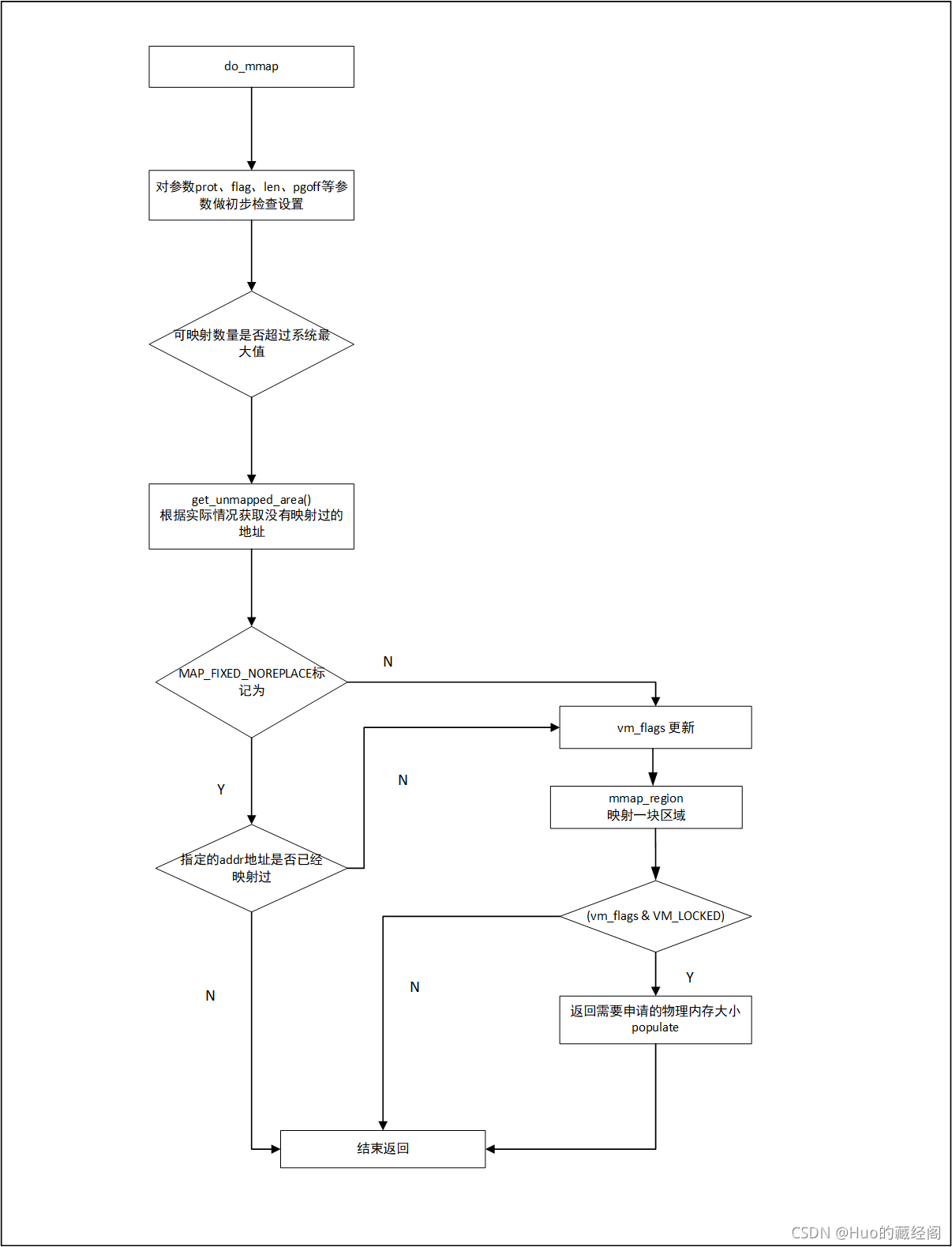

do_mmap是实现mmap系统调用整个关键实现部分,根据入参各种情况,申请一块用户虚拟地址空间,并创建相应VMA用于跟踪记录,以及根据是否需要lock情况,返回要申请的物理内存大小,主要流程图如下:

?该函数实现比较复杂,但是大概可以分为以下几个主体处理部分:

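- Argument checks and various system checks

- A call to get_unmapped_area(), where appropriate, to obtain an unmapped user virtual address range that satisfies the request

- Handling of MAP_FIXED_NOREPLACE, if it is set

- execute_only_pkey handling for PROT_EXEC-only mappings

- Updating the vm_flags bits

- mmap_region: obtaining a range of user virtual address space and creating the VMA that tracks it

- If the vma is locked, returning the amount of physical memory that must be allocated for it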

Parameter checks

This stage not only validates the arguments but also updates some flag bits. The main code is:

```c
unsigned long do_mmap(struct file *file, unsigned long addr,
			unsigned long len, unsigned long prot,
			unsigned long flags, vm_flags_t vm_flags,
			unsigned long pgoff, unsigned long *populate,
			struct list_head *uf)
{
	struct mm_struct *mm = current->mm;
	int pkey = 0;

	*populate = 0;

	if (!len)
		return -EINVAL;

	/*
	 * Does the application expect PROT_READ to imply PROT_EXEC?
	 *
	 * (the exception is when the underlying filesystem is noexec
	 *  mounted, in which case we dont add PROT_EXEC.)
	 */
	if ((prot & PROT_READ) && (current->personality & READ_IMPLIES_EXEC))
		if (!(file && path_noexec(&file->f_path)))
			prot |= PROT_EXEC;

	/* force arch specific MAP_FIXED handling in get_unmapped_area */
	if (flags & MAP_FIXED_NOREPLACE)
		flags |= MAP_FIXED;

	if (!(flags & MAP_FIXED))
		addr = round_hint_to_min(addr);

	/* Careful about overflows.. */
	len = PAGE_ALIGN(len);
	if (!len)
		return -ENOMEM;

	/* offset overflow? */
	if ((pgoff + (len >> PAGE_SHIFT)) < pgoff)
		return -EOVERFLOW;

	/* Too many mappings? */
	if (mm->map_count > sysctl_max_map_count)
		return -ENOMEM;

	/* ... */
}
```

- If len is 0, -EINVAL is returned immediately.

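- If PROT_READ is requested and the process personality has READ_IMPLIES_EXEC set, PROT_EXEC is added as well. READ_IMPLIES_EXEC can be set with the personality() system call, and it means that read permission implies execute permission. The exception is a file on a filesystem mounted noexec.

- If MAP_FIXED_NOREPLACE is set, MAP_FIXED is added as well, so that get_unmapped_area() applies the architecture-specific MAP_FIXED handling.

- If MAP_FIXED is not set, addr is only a hint, and round_hint_to_min() adjusts it. The hint is first rounded down to a page boundary. A non-zero hint below mmap_min_addr is then raised to mmap_min_addr (page-aligned). Otherwise the hint is left unchanged.

- len is rounded up to a page boundary with PAGE_ALIGN(). If the rounding wraps around to 0, -ENOMEM is returned.

- pgoff and len are checked so that the page offset of the end of the mapping cannot overflow. On overflow, -EOVERFLOW is returned.

- The number of mappings in the process (map_count) is checked against sysctl_max_map_count (vm.max_map_count). If the limit is exceeded, -ENOMEM is returned.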
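The unsigned arithmetic in these checks is easy to get wrong. The following user-space sketch mirrors round_hint_to_min(), PAGE_ALIGN() and the len/pgoff overflow checks. It assumes 4 KiB pages and unsigned long arithmetic, as in the kernel. All sketch_* functions and SKETCH_* macros are hypothetical stand-ins, not kernel APIs. The mmap_min_addr value of 65536 used below is only an example.

```c
#include <assert.h>
#include <errno.h>
#include <limits.h>   /* ULONG_MAX, handy when exercising the helpers */
#include <stddef.h>

/* Hypothetical user-space stand-ins for the kernel macros; 4 KiB pages assumed. */
#define SKETCH_PAGE_SHIFT 12
#define SKETCH_PAGE_SIZE  (1UL << SKETCH_PAGE_SHIFT)
#define SKETCH_PAGE_MASK  (~(SKETCH_PAGE_SIZE - 1))
#define SKETCH_PAGE_ALIGN(x) (((x) + SKETCH_PAGE_SIZE - 1) & SKETCH_PAGE_MASK)

/* Mirrors round_hint_to_min(): drop the in-page bits of the hint, then raise
 * a non-zero hint that lies below mmap_min_addr. A zero hint stays zero. */
static unsigned long sketch_round_hint_to_min(unsigned long hint,
                                              unsigned long mmap_min_addr)
{
    hint &= SKETCH_PAGE_MASK;
    if (hint != 0 && hint < mmap_min_addr)
        return SKETCH_PAGE_ALIGN(mmap_min_addr);
    return hint;
}

/* Mirrors the len/pgoff checks at the top of do_mmap(). Returns 0 and stores
 * the page-aligned length in *aligned_len, or returns a negative errno value. */
static long sketch_check_len_pgoff(unsigned long len, unsigned long pgoff,
                                   unsigned long *aligned_len)
{
    assert(aligned_len != NULL);
    if (!len)
        return -EINVAL;
    len = SKETCH_PAGE_ALIGN(len);        /* wraps to 0 for len near ULONG_MAX */
    if (!len)
        return -ENOMEM;
    if ((pgoff + (len >> SKETCH_PAGE_SHIFT)) < pgoff)   /* unsigned wrap-around */
        return -EOVERFLOW;
    *aligned_len = len;
    return 0;
}
```

With mmap_min_addr = 65536, a hint of 0x1234 becomes 0x10000, and a zero hint stays 0, which leaves the choice to the kernel. A len of ULONG_MAX wraps to 0 after page alignment and is rejected with -ENOMEM.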

get_unmapped_area

The main code of get_unmapped_area() is:

```c
unsigned long
get_unmapped_area(struct file *file, unsigned long addr, unsigned long len,
		unsigned long pgoff, unsigned long flags)
{
	unsigned long (*get_area)(struct file *, unsigned long,
				  unsigned long, unsigned long, unsigned long);

	unsigned long error = arch_mmap_check(addr, len, flags);
	if (error)
		return error;

	/* Careful about overflows.. */
	if (len > TASK_SIZE)
		return -ENOMEM;

	get_area = current->mm->get_unmapped_area;
	if (file) {
		if (file->f_op->get_unmapped_area)
			get_area = file->f_op->get_unmapped_area;
	} else if (flags & MAP_SHARED) {
		/*
		 * mmap_region() will call shmem_zero_setup() to create a file,
		 * so use shmem's get_unmapped_area in case it can be huge.
		 * do_mmap_pgoff() will clear pgoff, so match alignment.
		 */
		pgoff = 0;
		get_area = shmem_get_unmapped_area;
	}

	addr = get_area(file, addr, len, pgoff, flags);
	if (IS_ERR_VALUE(addr))
		return addr;

	if (addr > TASK_SIZE - len)
		return -ENOMEM;
	if (offset_in_page(addr))
		return -EINVAL;

	error = security_mmap_addr(addr);
	return error ? error : addr;
}
```

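This function selects an unmapped address range that satisfies the request, as close to the addr hint as actual usage allows. First it picks the allocator:

- the file's f_op->get_unmapped_area, if the file provides one;
- shmem_get_unmapped_area for a shared anonymous mapping, which will be backed by a shmem file;
- otherwise, the process's default mm->get_unmapped_area.

It then checks that the result lies below TASK_SIZE and is page-aligned. Finally, it lets security_mmap_addr() veto the address.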
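Two consequences of these checks are visible from user space. Any address that mmap returns is page-aligned, even when the hint is not. A length larger than TASK_SIZE fails with ENOMEM. The following is a minimal sketch that assumes 64-bit Linux. The helper names probe_hint_alignment and huge_len_errno, and the hint value, are hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <sys/mman.h>
#include <unistd.h>

/* Map one page using a deliberately misaligned hint. Returns 1 if the
 * address handed back by the kernel is page-aligned, 0 if not, -1 on error. */
static int probe_hint_alignment(void)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);

    void *hint = (void *)(uintptr_t)(0x10000000UL + 123);   /* not page-aligned */
    void *p = mmap(hint, (size_t)page, PROT_READ,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    int aligned = ((uintptr_t)p & (uintptr_t)(page - 1)) == 0;
    munmap(p, (size_t)page);
    return aligned;
}

/* Request 1 << 62 bytes, far beyond TASK_SIZE on 64-bit Linux.
 * Returns the errno, or 0 if the mapping unexpectedly succeeded. */
static int huge_len_errno(void)
{
    size_t len = (size_t)1 << 62;
    void *p = mmap(NULL, len, PROT_READ, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return errno;
    munmap(p, len);
    return 0;
}
```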

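MAP_FIXED_NOREPLACE

If MAP_FIXED_NOREPLACE is set, the kernel checks whether the range [addr, addr + len) overlaps an existing mapping. If it does, do_mmap() returns -EEXIST immediately instead of replacing the old mapping.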
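From user space, this check shows up as mmap failing with EEXIST. The following is a minimal sketch that assumes Linux 4.17 or later. Older kernels do not know the flag and treat the address as a plain hint. The fallback value 0x100000 is the x86 value of MAP_FIXED_NOREPLACE, used only if the C library headers lack the macro. noreplace_overlap_errno is a hypothetical helper.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

#ifndef MAP_FIXED_NOREPLACE
#define MAP_FIXED_NOREPLACE 0x100000   /* assumption: x86 value, kernel >= 4.17 */
#endif

/* Map two pages, then try to place a one-page mapping over the second page
 * with MAP_FIXED_NOREPLACE. Returns the errno of the second mmap, or 0 if it
 * unexpectedly succeeded. */
static int noreplace_overlap_errno(void)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);
    size_t sz = (size_t)page;

    void *first = mmap(NULL, 2 * sz, PROT_READ | PROT_WRITE,
                       MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (first == MAP_FAILED)
        return errno;

    void *want = (char *)first + sz;    /* overlaps the existing vma */
    void *second = mmap(want, sz, PROT_READ,
                        MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED_NOREPLACE, -1, 0);
    int err = (second == MAP_FAILED) ? errno : 0;   /* save before munmap */

    if (second != MAP_FAILED)
        munmap(second, sz);
    munmap(first, 2 * sz);
    return err;
}
```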

```c
unsigned long do_mmap(struct file *file, unsigned long addr,
			unsigned long len, unsigned long prot,
			unsigned long flags, vm_flags_t vm_flags,
			unsigned long pgoff, unsigned long *populate,
			struct list_head *uf)
{
	/* ... */

	if (flags & MAP_FIXED_NOREPLACE) {
		struct vm_area_struct *vma = find_vma(mm, addr);

		if (vma && vma->vm_start < addr + len)
			return -EEXIST;
	}

	/* ... */
}
```

Updating vm_flags

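Next, vm_flags is computed from prot and flags, with different rules for file mappings and anonymous mappings. The code also checks the file's open mode against the requested access. For example, a shared writable mapping requires a file opened for writing. It also computes pgoff for anonymous mappings and honors MAP_NORESERVE when overcommit is allowed: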
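The open-mode checks in the switch below can be observed from user space. The following is a minimal sketch that assumes a writable /tmp. It opens a file read-only and tries MAP_SHARED and MAP_PRIVATE mappings with PROT_WRITE. It then tries to upgrade a read-only shared mapping with mprotect(). For a shared mapping of a file without FMODE_WRITE, do_mmap() clears VM_MAYWRITE, so that later upgrade should fail as well. struct ro_probe, try_map and probe_read_only_file are hypothetical names.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* errno observed for each probe; 0 means the call succeeded. */
struct ro_probe {
    int shared_write_err;   /* mmap(MAP_SHARED, PROT_READ | PROT_WRITE) */
    int private_write_err;  /* mmap(MAP_PRIVATE, PROT_READ | PROT_WRITE) */
    int upgrade_err;        /* mprotect(PROT_WRITE) on a MAP_SHARED, PROT_READ map */
};

/* Map and immediately unmap; report errno on failure. */
static int try_map(int fd, size_t len, int prot, int flags)
{
    void *p = mmap(NULL, len, prot, flags, fd, 0);
    if (p == MAP_FAILED)
        return errno;
    munmap(p, len);
    return 0;
}

/* Creates a one-page temporary file, reopens it O_RDONLY and runs the probes.
 * Returns 0 on success, -1 if the temporary file could not be set up. */
static int probe_read_only_file(struct ro_probe *out)
{
    char path[] = "/tmp/mmap_ro_probe_XXXXXX";
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0 && out != NULL);

    int rw = mkstemp(path);
    if (rw < 0)
        return -1;
    int ro = (ftruncate(rw, (off_t)page) == 0) ? open(path, O_RDONLY) : -1;
    unlink(path);   /* open descriptors keep the file alive */
    close(rw);
    if (ro < 0)
        return -1;

    out->shared_write_err  = try_map(ro, (size_t)page, PROT_READ | PROT_WRITE, MAP_SHARED);
    out->private_write_err = try_map(ro, (size_t)page, PROT_READ | PROT_WRITE, MAP_PRIVATE);

    /* A read-only shared map succeeds, but VM_MAYWRITE has been cleared. */
    void *p = mmap(NULL, (size_t)page, PROT_READ, MAP_SHARED, ro, 0);
    if (p == MAP_FAILED) {
        out->upgrade_err = -1;   /* unexpected: setup of this probe failed */
    } else {
        out->upgrade_err = (mprotect(p, (size_t)page, PROT_READ | PROT_WRITE) == 0) ? 0 : errno;
        munmap(p, (size_t)page);
    }
    close(ro);
    return 0;
}
```

On Linux, the shared writable mapping should fail with EACCES. The private one should succeed, because writes go to copy-on-write pages and never reach the file. The mprotect() upgrade should fail with EACCES.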

```c
unsigned long do_mmap(struct file *file, unsigned long addr,
			unsigned long len, unsigned long prot,
			unsigned long flags, vm_flags_t vm_flags,
			unsigned long pgoff, unsigned long *populate,
			struct list_head *uf)
{
	/* ... */

	/* Do simple checking here so the lower-level routines won't have
	 * to. we assume access permissions have been handled by the open
	 * of the memory object, so we don't do any here.
	 */
	vm_flags |= calc_vm_prot_bits(prot, pkey) | calc_vm_flag_bits(flags) |
			mm->def_flags | VM_MAYREAD | VM_MAYWRITE | VM_MAYEXEC;

	if (flags & MAP_LOCKED)
		if (!can_do_mlock())
			return -EPERM;

	if (mlock_future_check(mm, vm_flags, len))
		return -EAGAIN;

	if (file) {
		struct inode *inode = file_inode(file);
		unsigned long flags_mask;

		if (!file_mmap_ok(file, inode, pgoff, len))
			return -EOVERFLOW;

		flags_mask = LEGACY_MAP_MASK | file->f_op->mmap_supported_flags;

		switch (flags & MAP_TYPE) {
		case MAP_SHARED:
			/*
			 * Force use of MAP_SHARED_VALIDATE with non-legacy
			 * flags. E.g. MAP_SYNC is dangerous to use with
			 * MAP_SHARED as you don't know which consistency model
			 * you will get. We silently ignore unsupported flags
			 * with MAP_SHARED to preserve backward compatibility.
			 */
			flags &= LEGACY_MAP_MASK;
			fallthrough;
		case MAP_SHARED_VALIDATE:
			if (flags & ~flags_mask)
				return -EOPNOTSUPP;
			if (prot & PROT_WRITE) {
				if (!(file->f_mode & FMODE_WRITE))
					return -EACCES;
				if (IS_SWAPFILE(file->f_mapping->host))
					return -ETXTBSY;
			}

			/*
			 * Make sure we don't allow writing to an append-only
			 * file..
			 */
			if (IS_APPEND(inode) && (file->f_mode & FMODE_WRITE))
				return -EACCES;

			/*
			 * Make sure there are no mandatory locks on the file.
			 */
			if (locks_verify_locked(file))
				return -EAGAIN;

			vm_flags |= VM_SHARED | VM_MAYSHARE;
			if (!(file->f_mode & FMODE_WRITE))
				vm_flags &= ~(VM_MAYWRITE | VM_SHARED);
			fallthrough;
		case MAP_PRIVATE:
			if (!(file->f_mode & FMODE_READ))
				return -EACCES;
			if (path_noexec(&file->f_path)) {
				if (vm_flags & VM_EXEC)
					return -EPERM;
				vm_flags &= ~VM_MAYEXEC;
			}

			if (!file->f_op->mmap)
				return -ENODEV;
			if (vm_flags & (VM_GROWSDOWN|VM_GROWSUP))
				return -EINVAL;
			break;

		default:
			return -EINVAL;
		}
	} else {
		switch (flags & MAP_TYPE) {
		case MAP_SHARED:
			if (vm_flags & (VM_GROWSDOWN|VM_GROWSUP))
				return -EINVAL;
			/*
			 * Ignore pgoff.
			 */
			pgoff = 0;
			vm_flags |= VM_SHARED | VM_MAYSHARE;
			break;
		case MAP_PRIVATE:
			/*
			 * Set pgoff according to addr for anon_vma.
			 */
			pgoff = addr >> PAGE_SHIFT;
			break;
		default:
			return -EINVAL;
		}
	}

	/*
	 * Set 'VM_NORESERVE' if we should not account for the
	 * memory use of this mapping.
	 */
	if (flags & MAP_NORESERVE) {
		/* We honor MAP_NORESERVE if allowed to overcommit */
		if (sysctl_overcommit_memory != OVERCOMMIT_NEVER)
			vm_flags |= VM_NORESERVE;

		/* hugetlb applies strict overcommit unless MAP_NORESERVE */
		if (file && is_file_hugepages(file))
			vm_flags |= VM_NORESERVE;
	}

	/* ... */
}
```

mmap_region

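mmap_region() carries out the mapping for the chosen virtual range. It checks the address-space limits and unmaps anything already in the range, which is the MAP_FIXED case. For private writable mappings it charges the memory commit. It then tries to merge with an adjacent vma, and otherwise allocates a new vma to record the mapping: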

```c
unsigned long mmap_region(struct file *file, unsigned long addr,
		unsigned long len, vm_flags_t vm_flags, unsigned long pgoff,
		struct list_head *uf)
{
	struct mm_struct *mm = current->mm;
	struct vm_area_struct *vma, *prev;
	int error;
	struct rb_node **rb_link, *rb_parent;
	unsigned long charged = 0;

	/* Check against address space limit. */
	if (!may_expand_vm(mm, vm_flags, len >> PAGE_SHIFT)) {
		unsigned long nr_pages;

		/*
		 * MAP_FIXED may remove pages of mappings that intersects with
		 * requested mapping. Account for the pages it would unmap.
		 */
		nr_pages = count_vma_pages_range(mm, addr, addr + len);

		if (!may_expand_vm(mm, vm_flags,
					(len >> PAGE_SHIFT) - nr_pages))
			return -ENOMEM;
	}

	/* Clear old maps */
	while (find_vma_links(mm, addr, addr + len, &prev, &rb_link,
			      &rb_parent)) {
		if (do_munmap(mm, addr, len, uf))
			return -ENOMEM;
	}

	/*
	 * Private writable mapping: check memory availability
	 */
	if (accountable_mapping(file, vm_flags)) {
		charged = len >> PAGE_SHIFT;
		if (security_vm_enough_memory_mm(mm, charged))
			return -ENOMEM;
		vm_flags |= VM_ACCOUNT;
	}

	/*
	 * Can we just expand an old mapping?
	 */
	vma = vma_merge(mm, prev, addr, addr + len, vm_flags,
			NULL, file, pgoff, NULL, NULL_VM_UFFD_CTX);
	if (vma)
		goto out;

	/*
	 * Determine the object being mapped and call the appropriate
	 * specific mapper. the address has already been validated, but
	 * not unmapped, but the maps are removed from the list.
	 */
	vma = vm_area_alloc(mm);
	if (!vma) {
		error = -ENOMEM;
		goto unacct_error;
	}

	vma->vm_start = addr;
	vma->vm_end = addr + len;
	vma->vm_flags = vm_flags;
	vma->vm_page_prot = vm_get_page_prot(vm_flags);
	vma->vm_pgoff = pgoff;

	if (file) {
		if (vm_flags & VM_DENYWRITE) {
			error = deny_write_access(file);
			if (error)
				goto free_vma;
		}
		if (vm_flags & VM_SHARED) {
			error = mapping_map_writable(file->f_mapping);
			if (error)
				goto allow_write_and_free_vma;
		}

		/* ->mmap() can change vma->vm_file, but must guarantee that
		 * vma_link() below can deny write-access if VM_DENYWRITE is set
		 * and map writably if VM_SHARED is set. This usually means the
		 * new file must not have been exposed to user-space, yet.
		 */
		vma->vm_file = get_file(file);
		error = call_mmap(file, vma);
		if (error)
			goto unmap_and_free_vma;

		/* Can addr have changed??
		 *
		 * Answer: Yes, several device drivers can do it in their
		 *         f_op->mmap method. -DaveM
		 * Bug: If addr is changed, prev, rb_link, rb_parent should
		 *      be updated for vma_link()
		 */
		WARN_ON_ONCE(addr != vma->vm_start);

		addr = vma->vm_start;
		vm_flags = vma->vm_flags;
	} else if (vm_flags & VM_SHARED) {
		error = shmem_zero_setup(vma);
		if (error)
			goto free_vma;
	} else {
		vma_set_anonymous(vma);
	}

	vma_link(mm, vma, prev, rb_link, rb_parent);
	/* Once vma denies write, undo our temporary denial count */
	if (file) {
		if (vm_flags & VM_SHARED)
			mapping_unmap_writable(file->f_mapping);
		if (vm_flags & VM_DENYWRITE)
			allow_write_access(file);
	}
	file = vma->vm_file;
out:
	perf_event_mmap(vma);

	vm_stat_account(mm, vm_flags, len >> PAGE_SHIFT);
	if (vm_flags & VM_LOCKED) {
		if ((vm_flags & VM_SPECIAL) || vma_is_dax(vma) ||
					is_vm_hugetlb_page(vma) ||
					vma == get_gate_vma(current->mm))
			vma->vm_flags &= VM_LOCKED_CLEAR_MASK;
		else
			mm->locked_vm += (len >> PAGE_SHIFT);
	}

	if (file)
		uprobe_mmap(vma);

	/*
	 * New (or expanded) vma always get soft dirty status.
	 * Otherwise user-space soft-dirty page tracker won't
	 * be able to distinguish situation when vma area unmapped,
	 * then new mapped in-place (which must be aimed as
	 * a completely new data area).
	 */
	vma->vm_flags |= VM_SOFTDIRTY;

	vma_set_page_prot(vma);

	return addr;

unmap_and_free_vma:
	vma->vm_file = NULL;
	fput(file);

	/* Undo any partial mapping done by a device driver. */
	unmap_region(mm, vma, prev, vma->vm_start, vma->vm_end);
	charged = 0;
	if (vm_flags & VM_SHARED)
		mapping_unmap_writable(file->f_mapping);
allow_write_and_free_vma:
	if (vm_flags & VM_DENYWRITE)
		allow_write_access(file);
free_vma:
	vm_area_free(vma);
unacct_error:
	if (charged)
		vm_unacct_memory(charged);
	return error;
}
```

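For a file mapping, call_mmap() invokes the file's f_op->mmap method. That method sets up the file-specific mapping of the file into the virtual range described by the vma.

For an anonymous mapping, no file is involved. A shared anonymous mapping is backed by a shmem file that shmem_zero_setup() creates. A private one is simply marked anonymous with vma_set_anonymous(). In every case, the new vma is then inserted into the process's mm with vma_link(). The exception is when vma_merge() was able to extend an existing vma instead.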
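The "Clear old maps" loop at the start of mmap_region() is what makes MAP_FIXED destructive: any existing mapping in the target range is unmapped first. The following minimal sketch demonstrates this from user space. map_fixed_replaces_old_page is a hypothetical helper.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Returns 1 if an anonymous MAP_FIXED mapping placed over the second page of
 * an existing mapping discarded that page's old contents while leaving the
 * first page intact; 0 otherwise; -1 if the initial mmap fails. */
static int map_fixed_replaces_old_page(void)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);
    size_t sz = (size_t)page;

    unsigned char *base = mmap(NULL, 2 * sz, PROT_READ | PROT_WRITE,
                               MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (base == MAP_FAILED)
        return -1;
    memset(base, 'A', 2 * sz);

    /* mmap_region() unmaps whatever already occupies [base + sz, base + 2 * sz). */
    void *again = mmap(base + sz, sz, PROT_READ | PROT_WRITE,
                       MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED, -1, 0);

    int ok = again == (void *)(base + sz) && base[0] == 'A' && base[sz] == 0;
    munmap(base, 2 * sz);
    return ok;
}
```

MAP_FIXED_NOREPLACE exists because of this behavior. It reports -EEXIST instead of silently discarding the old contents.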

vm_flags & VM_LOCKED

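If the vma is locked in memory (VM_LOCKED), or MAP_POPULATE was passed without MAP_NONBLOCK, do_mmap() stores the mapping length in *populate. vm_mmap_pgoff() then calls mm_populate() to allocate the physical pages right away: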

```c
unsigned long do_mmap(struct file *file, unsigned long addr,
			unsigned long len, unsigned long prot,
			unsigned long flags, vm_flags_t vm_flags,
			unsigned long pgoff, unsigned long *populate,
			struct list_head *uf)
{
	/* ... */

	if (!IS_ERR_VALUE(addr) &&
	    ((vm_flags & VM_LOCKED) ||
	     (flags & (MAP_POPULATE | MAP_NONBLOCK)) == MAP_POPULATE))
		*populate = len;
	return addr;
}
```
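The effect of *populate can be observed with mincore(2). The following is a minimal sketch that assumes Linux. It maps anonymous memory with and without MAP_POPULATE and counts the pages that mincore() reports as resident before the program touches them. resident_after_mmap is a hypothetical helper, and its 64-page limit is an arbitrary simplification.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Map npages of private anonymous memory, optionally with MAP_POPULATE, and
 * count how many pages mincore() reports as resident before any access.
 * Returns -1 on error or if npages exceeds the 64-entry vector. */
static long resident_after_mmap(size_t npages, int populate)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(page > 0);

    size_t len = npages * (size_t)page;
    int flags = MAP_PRIVATE | MAP_ANONYMOUS | (populate ? MAP_POPULATE : 0);
    void *p = mmap(NULL, len, PROT_READ | PROT_WRITE, flags, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    unsigned char vec[64];
    long resident = -1;
    if (npages <= sizeof vec && mincore(p, len, vec) == 0) {
        resident = 0;
        for (size_t i = 0; i < npages; i++)
            resident += vec[i] & 1;   /* low bit set = page resident */
    }
    munmap(p, len);
    return resident;
}
```

With MAP_POPULATE, all pages should be resident immediately. Without it, untouched anonymous pages have no page-table entries yet, so none are reported. MAP_LOCKED would take the VM_LOCKED branch instead, but it is subject to RLIMIT_MEMLOCK, so this sketch uses MAP_POPULATE.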