Docker Networking

First, remove all containers and images from Docker so that we start from a clean state

docker ps -a

docker rm -f `docker ps -aq`

docker images -a

docker image prune -a

docker rmi -f `docker images -aq`

Understanding docker0

How does Docker handle network access between containers?

[root@localhost mytomcat]# ll

total 375052

-rw-r--r--. 1 root root 11576317 Sep 28 06:59 apache-tomcat-9.0.54.tar.gz

-rw-r--r--. 1 root root 828 Sep 29 05:40 Dockerfile

-rw-r--r--. 1 root root 194042837 Oct 15 2021 jdk-8u202-linux-x64.tar.gz

-rw-r--r--. 1 root root 15 Sep 28 21:53 readme.txt

[root@localhost mytomcat]# docker build -t mytomcat:1.0 .

[root@localhost mytomcat]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mytomcat 1.0 8d5258351cbe 22 seconds ago 755MB

centos latest 5d0da3dc9764 13 days ago 231MB

[root@localhost mytomcat]# docker run -d -P --name tomcat01 mytomcat:1.0

8f52dd2abc8c08a9095ec78dd8bece98ef199485ffd9181afd6d6dcf6d3b47af

[root@localhost mytomcat]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8f52dd2abc8c mytomcat:1.0 "/bin/sh -c '$CATALI…" 3 seconds ago Up 2 seconds 0.0.0.0:49162->8080/tcp, :::49162->8080/tcp tomcat01

[root@localhost mytomcat]# docker exec -it 8f52dd2abc8c ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

# This interface (eth0) carries the address that Docker assigned to the container

262: eth0@if263: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

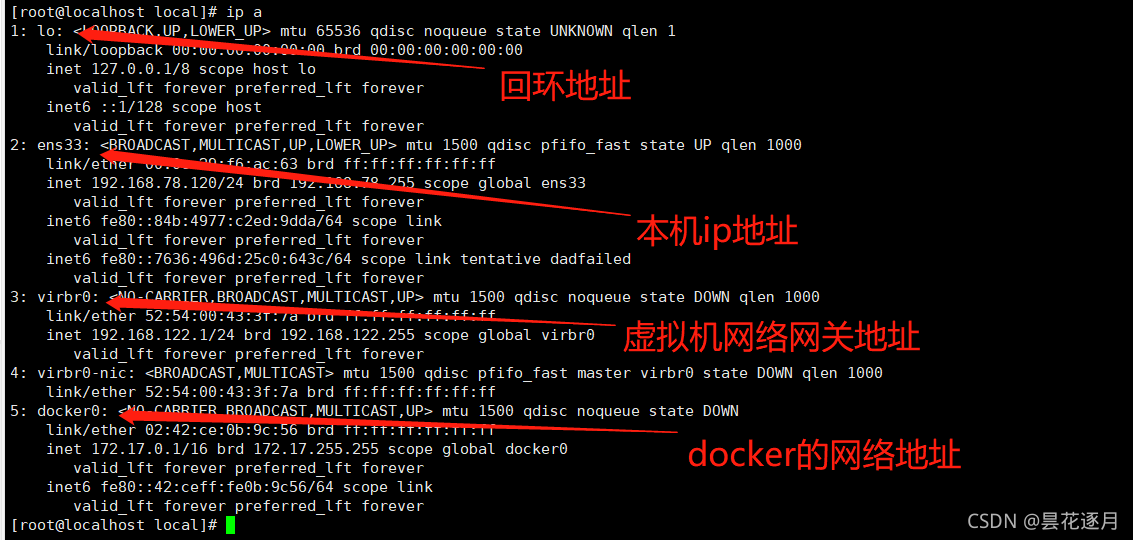

[root@localhost mytomcat]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:f6:ac:63 brd ff:ff:ff:ff:ff:ff

inet 192.168.78.120/24 brd 192.168.78.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::84b:4977:c2ed:9dda/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::7636:496d:25c0:643c/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 52:54:00:43:3f:7a brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN qlen 1000

link/ether 52:54:00:43:3f:7a brd ff:ff:ff:ff:ff:ff

5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP

link/ether 02:42:ce:0b:9c:56 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:ceff:fe0b:9c56/64 scope link

valid_lft forever preferred_lft forever

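# Interface 263 is the host-side end of the veth pair Docker created for the newly started container; it pairs with interface 262 (eth0) inside the container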

263: veth8c32440@if262: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP

link/ether fa:2d:c7:4a:dd:c1 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::f82d:c7ff:fe4a:ddc1/64 scope link

valid_lft forever preferred_lft forever

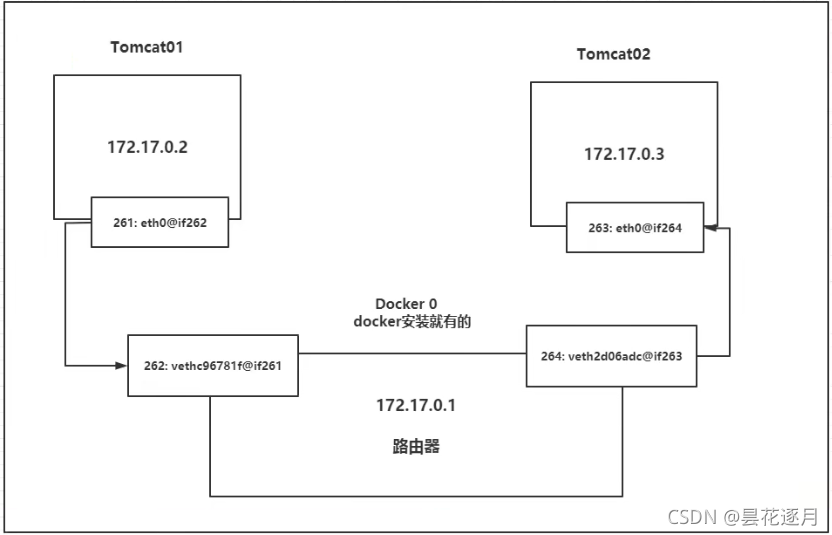

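How it works

1. Every time we start a container, Docker assigns it an IP address. As soon as Docker is installed, the host has a network interface named docker0.

docker0 works in bridge mode, and the underlying technique is the veth pair.

A veth pair is a pair of virtual network interfaces that are always created together: one end of each interface attaches to the protocol stack, and the other ends are connected to each other.

Because of this property, a veth pair acts as a bridge that connects virtual network devices.

OpenStack, connections between Docker containers, and OVS connections all use veth pairs.

Conclusion: tomcat01 and tomcat02 share the same bridge, docker0, which acts as a router (gateway) for both of them.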

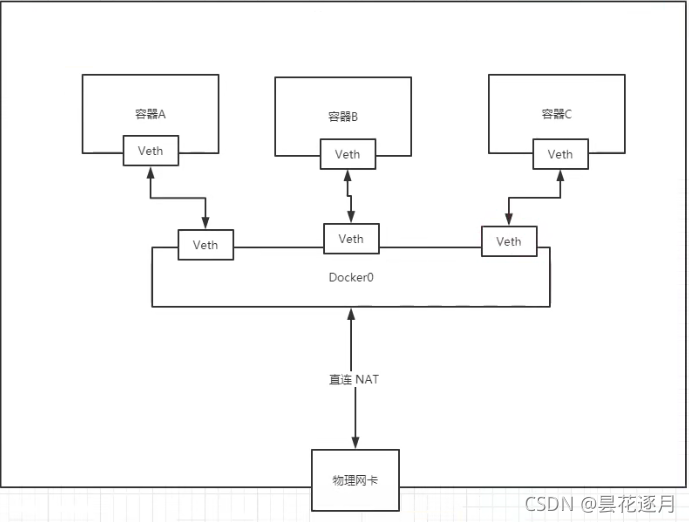
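To see which host interface belongs to which container, the `@ifN` suffix in the two `ip a` listings above can be matched up. The following is a minimal sketch, not part of the original notes; `peer_ifindex` is a hypothetical helper name, and the two sample lines are copied from the transcripts above.

```shell
# Extract the peer interface index from the first line of an `ip a` entry,
# e.g. "262: eth0@if263: <...>" yields 263. Prints nothing for interfaces
# that have no "@ifN" suffix (such as lo).
peer_ifindex() {
    printf '%s\n' "$1" | sed -n 's/^[0-9][0-9]*: [^@]*@if\([0-9][0-9]*\):.*/\1/p'
}

# Lines copied from the container-side and host-side `ip a` output.
container_line='262: eth0@if263: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default'
host_line='263: veth8c32440@if262: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP'

echo "container eth0 (262) peers with host interface $(peer_ifindex "$container_line")"
echo "host veth8c32440 (263) peers with container interface $(peer_ifindex "$host_line")"
```

Each end names the other end's index, which is what "created in pairs" looks like in practice.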

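Summary

Unless a network is specified, every container is attached to docker0, and Docker assigns it an available IP address by default.

Docker uses Linux bridging; on the host, docker0 is the bridge for Docker containers.

All network interfaces in Docker are virtual, and virtual interfaces forward traffic efficiently (for example, when transferring files over the internal network).

As soon as a container is removed, its veth pair is removed with it.

--link (no longer recommended)

Consider a scenario: we wrote a microservice configured with database url=ip:, and the database is replaced (so its IP changes) while the project keeps running. To handle this case, how can we reach a container by name instead of by IP?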

# Right now, containers can reach each other by IP

[root@localhost mytomcat]# docker exec -it b65612588084 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.096 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.070 ms

^C

--- 172.17.0.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1003ms

rtt min/avg/max/mdev = 0.070/0.083/0.096/0.013 ms

# However, containers cannot reach each other by container name

[root@localhost mytomcat]# docker exec -it b65612588084 ping tomcat01

ping: tomcat01: Name or service not known

[root@localhost mytomcat]#

# With --link, a container can be reached by its name

[root@localhost mytomcat]# docker run -d -P --name tomcat03 --link tomcat02 mytomcat:1.0

38dbebe09d96676ca2eb70202e51e4163f6f47573d51ba428cc7e6d8cca78b62

[root@localhost mytomcat]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.105 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.069 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.091 ms

^C

--- tomcat02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 0.069/0.088/0.105/0.016 ms

# --link is one-way; the reverse direction does not work

[root@localhost mytomcat]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

[root@localhost mytomcat]#

# Compare the two containers

# --link effectively adds an IP-to-hostname mapping to /etc/hosts inside the container

[root@localhost mytomcat]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 b65612588084

172.17.0.4 38dbebe09d96

[root@localhost mytomcat]# docker exec -it tomcat02 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 b65612588084

[root@localhost mytomcat]#

docker network --help

docker network ls

docker inspect network_id

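--link simply adds one IP-to-hostname mapping to the container's hosts file.

--link is no longer recommended because it is too clumsy; from here on we use custom networks.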
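The one-way behaviour follows directly from the hosts files shown above. As a rough illustration, the sketch below performs the same kind of lookup on abbreviated copies of the two files; `lookup_host` is a hypothetical helper, and a real resolver consults more than /etc/hosts.

```shell
# Print the IP mapped to a name in hosts-format text read from stdin.
# Prints nothing when the name is absent.
lookup_host() {
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    '
}

# Abbreviated copies of the /etc/hosts contents from the transcripts above.
tomcat03_hosts='127.0.0.1 localhost
172.17.0.3 tomcat02 b65612588084
172.17.0.4 38dbebe09d96'

tomcat02_hosts='127.0.0.1 localhost
172.17.0.3 b65612588084'

# tomcat03 was started with --link tomcat02, so the name is present there.
printf '%s\n' "$tomcat03_hosts" | lookup_host tomcat02   # prints 172.17.0.3
# tomcat02 has no entry for tomcat03, so nothing is printed.
printf '%s\n' "$tomcat02_hosts" | lookup_host tomcat03
```

Because the entry is written once when the linking container starts, only that container knows the name, which is why the reverse ping fails.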

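Use a custom network instead of docker0.

The problem with docker0: it does not support access by container name.

Custom networks

List all Docker networks: docker network ls

Network modes

bridge: bridged networking (the default; custom networks can also be created with the bridge driver)

none: no networking is configured

host: the container shares the host's network stack

container: the container shares another container's network (rarely used and very limited)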

docker run -d -P --name tomcat01 [--net bridge] mytomcat:1.0

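# docker0 traits: it is the default network; containers cannot be reached by name; --link enables name access but is inconvenient

# Create a custom network with the docker network create command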

[root@localhost mytomcat]# docker network create -d bridge --gateway 192.168.0.1 --subnet 192.168.0.0/16 mynet

1fddb5e124679554a3da918c61b90f25f0a758f6167ffa190d75cf44705e44d4

[root@localhost mytomcat]# docker network ls

NETWORK ID NAME DRIVER SCOPE

352038d3ef06 bridge bridge local

36e3e088fd84 host host local

1fddb5e12467 mynet bridge local

f34a91248f63 none null local

[root@localhost mytomcat]# docker inspect mynet

[

{

"Name": "mynet",

"Id": "1fddb5e124679554a3da918c61b90f25f0a758f6167ffa190d75cf44705e44d4",

"Created": "2021-09-29T07:30:29.384973584-07:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

Custom networks fix the shortcomings of docker0, and they do not implement name resolution by editing /etc/hosts.

[root@localhost mytomcat]# docker run -d -P --net mynet --name tomcat01 mytomcat:1.0

fe743acd6ee99cf8168b9bd0b0ccfa06ac1241e71c11d8115865067b398cf4a7

[root@localhost mytomcat]# docker run -d -P --net mynet --name tomcat02 mytomcat:1.0

f290fa931046e3879b6a222b65912d6947a139ace6a40556092f51061ac618f2

[root@localhost mytomcat]# docker run -d -P --net mynet --name tomcat03 mytomcat:1.0

bc76032b6e085fa7e835b552cd12e2e61ebf043f09f16b6e2960d1aebbc9a305

[root@localhost mytomcat]#

[root@localhost mytomcat]# docker exec -it tomcat02 ping tomcat01

PING tomcat01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.095 ms

64 bytes from tomcat01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.070 ms

^C

--- tomcat01 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1004ms

rtt min/avg/max/mdev = 0.070/0.082/0.095/0.015 ms

[root@localhost mytomcat]# docker exec -it tomcat02 ping tomcat03

PING tomcat03 (192.168.0.4) 56(84) bytes of data.

64 bytes from tomcat03.mynet (192.168.0.4): icmp_seq=1 ttl=64 time=0.058 ms

64 bytes from tomcat03.mynet (192.168.0.4): icmp_seq=2 ttl=64 time=0.076 ms

64 bytes from tomcat03.mynet (192.168.0.4): icmp_seq=3 ttl=64 time=0.053 ms

64 bytes from tomcat03.mynet (192.168.0.4): icmp_seq=4 ttl=64 time=0.146 ms

^C

--- tomcat03 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3003ms

rtt min/avg/max/mdev = 0.053/0.083/0.146/0.037 ms

[root@localhost mytomcat]#

[root@localhost mytomcat]# docker exec -it tomcat02 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.0.3 f290fa931046

[root@localhost mytomcat]#

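On a custom network, Docker maintains the mapping between container names and IPs for us (through its embedded DNS server rather than /etc/hosts), so this approach is recommended.

Benefit: different clusters use different subnets that are isolated from each other, which keeps each cluster safe and healthy.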
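To make the subnet separation concrete, the sketch below checks whether an address falls inside a CIDR block, using the addresses seen earlier (docker0 on 172.17.0.0/16, mynet on 192.168.0.0/16). The helper names `ip_to_int` and `ip_in_subnet` are hypothetical, and this only checks address arithmetic; the actual isolation between networks is enforced by Docker on the host, not by this calculation.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# The function body runs in a subshell so the IFS change stays local.
ip_to_int() (
    IFS=.
    set -- $1            # split "a.b.c.d" into $1..$4
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Exit 0 when the IP lies inside the CIDR block, non-zero otherwise.
ip_in_subnet() (
    ip=$1
    net=${2%/*}          # network address, e.g. 192.168.0.0
    bits=${2#*/}         # prefix length, e.g. 16
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
)

# tomcat01 on mynet versus a container on the default docker0 bridge.
ip_in_subnet 192.168.0.2 192.168.0.0/16 && echo "192.168.0.2 is inside mynet's subnet"
ip_in_subnet 172.17.0.2 192.168.0.0/16 || echo "172.17.0.2 is outside mynet's subnet"
```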

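Connecting networks

The goal is to let a container communicate with a network in a different subnet.

Once connected, the container is effectively placed in the other network as well.

The container then has multiple IP addresses (multiple virtual network interfaces), much like an Alibaba Cloud server that has both a public IP and a private IP.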

docker network connect --help

docker network connect mynet ntomcat02

docker inspect ntomcat02

docker exec -it ntomcat02 ping tomcat02

docker exec -it tomcat02 ping ntomcat02

Conclusion: for cross-network communication, use docker network connect to attach the container to the other network, making it a member of that network.
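The connect-then-verify steps can be wrapped in a small script. This is only a sketch under assumptions: `ntomcat02` is taken to be a running container on the default bridge and `tomcat02` a running container on `mynet`, as the commands above suggest; `run`, `connect_and_check`, and the `DRY_RUN` variable are hypothetical names. By default the script only prints the commands so they can be reviewed first.

```shell
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Attach a container to another network, then ping in both directions by name.
# Usage: connect_and_check NETWORK OUTSIDER MEMBER
connect_and_check() (
    network=$1
    outsider=$2      # container that is not yet on the network
    member=$3        # container already on the network
    run docker network connect "$network" "$outsider" &&
    run docker exec "$outsider" ping -c 2 "$member" &&
    run docker exec "$member" ping -c 2 "$outsider"
)

connect_and_check mynet ntomcat02 tomcat02
```

Running it with `DRY_RUN=0` executes the three commands against the local Docker daemon and stops at the first one that fails.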

Hands-on: deploying a Redis cluster

https://www.bilibili.com/video/BV1og4y1q7M4?p=38&spm_id_from=pageDriver