Installing Kubernetes (k8s) with kubeadm

I. Deployment preparation

Prepare three virtual machines; the master node needs at least 2 CPU cores and 2 GB of RAM.

master: 192.168.100.11

node1: 192.168.100.12

node2: 192.168.100.13

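1. Set the hostname on each machine (run the matching command on its own machine; su opens a new shell so the prompt shows the new name)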

hostnamectl set-hostname master

su

hostnamectl set-hostname node1

su

hostnamectl set-hostname node2

su

2. Disable the firewall, SELinux and swap on all machines

On all machines:

Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux:

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

Disable swap:

swapoff -a # temporary, until the next reboot

vim /etc/fstab # permanent: comment out the line that contains swap

free -m # verify: the Swap row should show all zeros
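
The manual edit of /etc/fstab can also be scripted. The following is a minimal sketch, assuming GNU sed and a standard fstab layout; the helper name comment_out_swap is made up for illustration and is not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
    local fstab="$1"
    # Lines that are already comments are skipped. Any other line containing a
    # whitespace-delimited "swap" field gets a leading '#'.
    # A backup copy is kept next to the file as <file>.bak.
    sed -i.bak -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Example (as root):
#   comment_out_swap /etc/fstab && swapoff -a
```

Taking the file path as an argument makes it easy to try the helper on a copy of fstab before touching the real one.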

Add the hostname-to-IP mappings to /etc/hosts (remember to set the hostnames first):

cat /etc/hosts

192.168.100.11 master

192.168.100.12 node1

192.168.100.13 node2
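
Adding the three entries by hand on every machine is easy to get wrong, so here is a small sketch of an idempotent append. The function name add_host_entry is hypothetical, and it only detects an existing entry when the line is exactly "IP NAME" with a single space.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless that exact line exists.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -x matches whole lines, -F treats the pattern as a fixed string.
    grep -qxF "$ip $name" "$file" 2>/dev/null || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root, on every machine):
#   add_host_entry /etc/hosts 192.168.100.11 master
#   add_host_entry /etc/hosts 192.168.100.12 node1
#   add_host_entry /etc/hosts 192.168.100.13 node2
```

Running it twice leaves the file unchanged the second time, which is convenient when the preparation steps are repeated after restoring a snapshot.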

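Synchronize the clocks (optional, but the three VMs must not drift more than a day apart, because the join token is only valid for one day):

yum install -y ntpdate

ntpdate -u ntp.api.bz

ntpdate ntp1.aliyun.com # alternative: Alibaba Cloud's NTP server, the one used here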

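Pass bridged IPv4 traffic to the iptables chains (the net.bridge.* keys only exist once the br_netfilter kernel module is loaded, for example with modprobe br_netfilter):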

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

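sysctl --system # apply the configuration

After this step, consider taking a VM snapshot so you can roll back to this point if something goes wrong later.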

II. Install Docker/kubeadm/kubelet on all nodes

1. Install Docker on all machines

Reference: https://blog.csdn.net/lv74134/article/details/120052307?spm=1001.2014.3001.5501

1.1 Installation

Step 1: Install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Step 2: Add the package repository

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Step 3: Point the repository at the Aliyun mirror

sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

Step 4: Refresh the cache and install Docker CE

sudo yum makecache fast

sudo yum -y install docker-ce

Step 5: Start the Docker service

sudo service docker start

1.2 Configure a registry mirror

sudo mkdir -p /etc/docker

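Replace https://xxxx.mirror.aliyuncs.com with the accelerator address assigned to your own Alibaba Cloud account (listed under the container and image service in the console). The note has to stay outside the file, because JSON does not allow comments.

sudo tee /etc/docker/daemon.json <<-'EOF'
{
"registry-mirrors": ["https://xxxx.mirror.aliyuncs.com"]
}
EOF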

sudo systemctl daemon-reload

sudo systemctl restart docker

sudo systemctl enable docker

1.3 Network tuning

vim /etc/sysctl.conf

net.ipv4.ip_forward=1

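Docker daemon configuration file (this is the same /etc/docker/daemon.json written in 1.2, so keep your own registry mirror address in it and restart Docker after editing):

vim /etc/docker/daemon.json

{
"graph": "/data/docker",
"storage-driver": "overlay2",
"insecure-registries": ["registry.access.redhat.com","quay.io"],
"registry-mirrors": ["https://lq"],
"bip": "172.168.80.50/24",
"exec-opts": ["native.cgroupdriver=systemd"],
"live-restore": true
}

What each key does:

"graph": "/data/docker" : data directory (newer Docker releases use "data-root" for this instead)

"storage-driver": "overlay2" : storage driver (LXC -> overlay -> overlay2)

"insecure-registries": ["registry.access.redhat.com", "quay.io"] : private registries

"registry-mirrors": ["https://lq"] : registry mirror

"bip": "172.168.80.50/24" : address of the Docker bridge network

"exec-opts": ["native.cgroupdriver=systemd"] : extra startup options (the cgroup driver)

"live-restore": true : containers started by Docker keep running when the Docker engine itself goes down (the two are decoupled)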
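
A missing comma in daemon.json is enough to stop the Docker daemon from starting, so it is worth checking the file before restarting. A minimal sketch, assuming python3 is installed (a stock CentOS 7 may only have python 2); the function name json_is_valid is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the given file parses as JSON.
# Assumes python3 is available on the machine.
json_is_valid() {
    python3 -c 'import json, sys; json.load(open(sys.argv[1]))' "$1" 2>/dev/null
}

# Example (as root): restart Docker only when the file is well-formed.
#   json_is_valid /etc/docker/daemon.json && systemctl restart docker
```

This only checks syntax. A key that Docker does not recognise still passes the check and is reported by the daemon at startup.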

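# Docker is now installed; this is a good point for another snapshot

2. Install kubeadm, kubelet and kubectl on all nodes

2.1 Add the Aliyun YUM repository

# define the package source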

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2.2 Install a specific version

[root@master ~]# yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0

[root@master ~]# rpm -qa | grep kube # if a newer version is installed, remove it with yum remove and install again

kubectl-1.15.0-0.x86_64

kubernetes-cni-0.8.7-0.x86_64

kubelet-1.15.0-0.x86_64

kubeadm-1.15.0-0.x86_64

[root@master ~]# systemctl enable kubelet

# start kubelet automatically on boot

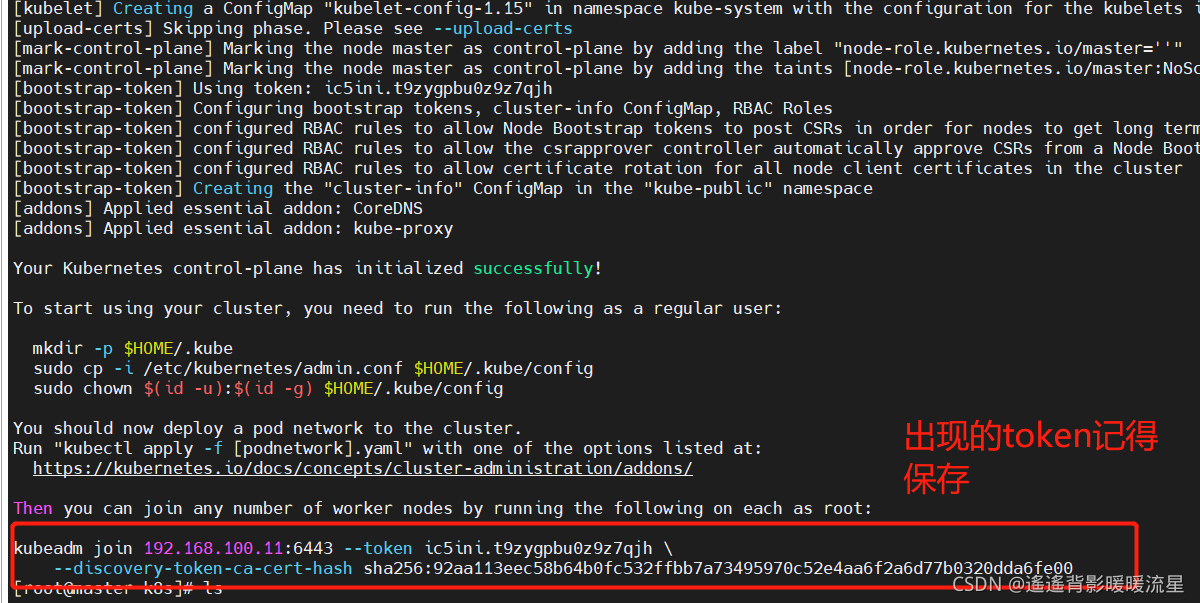

III. Initialize the cluster and install the network plugin

1. Run the initialization on the master

[root@master ~]# mkdir k8s && cd k8s

[root@master k8s]# kubeadm init \
> --apiserver-advertise-address=192.168.100.11 \
> --image-repository registry.aliyuncs.com/google_containers \
> --kubernetes-version v1.15.0 \
> --service-cidr=10.1.0.0/16 \
> --pod-network-cidr=10.244.0.0/16

The same command without the shell prompts, for copy and paste:

kubeadm init \
--apiserver-advertise-address=192.168.100.11 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.15.0 \
--service-cidr=10.1.0.0/16 \
--pod-network-cidr=10.244.0.0/16

What the flags mean:

--apiserver-advertise-address=192.168.100.11 # IP address of the master node

--image-repository registry.aliyuncs.com/google_containers # the default registry k8s.gcr.io is not reachable from mainland China, so the Aliyun mirror is used instead

--kubernetes-version v1.15.0 # version to install

--service-cidr=10.1.0.0/16 # address range for Services

--pod-network-cidr=10.244.0.0/16 # address range for pods

[root@master k8s]# cat token.wenjian

kubeadm join 192.168.100.11:6443 --token ic5ini.t9zygpbu0z9z7qjh \
--discovery-token-ca-cert-hash sha256:92aa113eec58b64b0fc532ffbb7a73495970c52e4aa6f2a6d77b0320dda6fe00

# The kubeadm join command printed at the end of the kubeadm init output was saved by hand into this file; the nodes need it to join the cluster.
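
Because the token expires, a saved join command stops working after a day. Running kubeadm token create --print-join-command on the master prints a fresh one. The value passed to --discovery-token-ca-cert-hash can also be recomputed from the cluster CA certificate. The sketch below wraps the openssl pipeline given in the kubeadm documentation; the function name ca_cert_hash is an illustrative assumption, and it expects an RSA CA key, which is what kubeadm generates by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "sha256:<hex>" discovery hash of a CA certificate.
ca_cert_hash() {
    local cert="$1" hex
    # Extract the public key, convert it to DER, hash it, keep only the hex digest.
    hex=$(openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')
    printf 'sha256:%s\n' "$hex"
}

# Example (on the master, as root):
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```

The printed value should match the hash in the join command that kubeadm init produced.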

[root@master k8s]# cd

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 4m12s v1.15.0

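2. Install the pod network plugin (flannel) on the master

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

# The file is hosted outside China and the download often fails; retrying a few times usually works.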

[root@master ~]# ls

anaconda-ks.cfg k8s 公共 视频 文档 音乐

initial-setup-ks.cfg kube-flannel.yml 模板 图片 下载 桌面

[root@master ~]# kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

[root@master ~]# kubectl get cs # check that the control-plane components are healthy

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@master ~]# kubectl get node # list the current nodes

NAME STATUS ROLES AGE VERSION

master Ready master 17m v1.15.0

IV. Join the worker nodes to the cluster

Run the following on node1 and node2

[root@node1 docker]# docker pull lizhenliang/flannel:v0.11.0-amd64

# pull the flannel image onto the node

v0.11.0-amd64: Pulling from lizhenliang/flannel

cd784148e348: Pull complete

04ac94e9255c: Pull complete

e10b013543eb: Pull complete

005e31e443b1: Pull complete

74f794f05817: Pull complete

Digest: sha256:bd76b84c74ad70368a2341c2402841b75950df881388e43fc2aca000c546653a

Status: Downloaded newer image for lizhenliang/flannel:v0.11.0-amd64

docker.io/lizhenliang/flannel:v0.11.0-amd64

[root@node1 docker]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.15.0 d235b23c3570 2 years ago 82.4MB

quay.io/coreos/flannel v0.11.0-amd64 ff281650a721 2 years ago 52.6MB

lizhenliang/flannel v0.11.0-amd64 ff281650a721 2 years ago 52.6MB

registry.aliyuncs.com/google_containers/pause 3.1 da86e6ba6ca1 3 years ago 742kB

[root@node1 docker]# kubeadm join 192.168.100.11:6443 --token ic5ini.t9zygpbu0z9z7qjh --discovery-token-ca-cert-hash sha256:92aa113eec58b64b0fc532ffbb7a73495970c52e4aa6f2a6d77b0320dda6fe00

# paste the join command that was generated on the master
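
A join command copied from a terminal or a web page easily picks up a stray space or line break inside the token. kubeadm bootstrap tokens have the fixed shape of 6 lowercase alphanumeric characters, a dot, then 16 more, so a quick check before joining can save a confusing error. A minimal sketch; the function name is_bootstrap_token is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the argument has the bootstrap token shape
# [a-z0-9]{6}.[a-z0-9]{16}; it does not check that the token is still valid.
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Example:
#   is_bootstrap_token 'ic5ini.t9zygpbu0z9z7qjh' && echo "token looks well-formed"
```

Whether the token is still accepted by the cluster can be checked on the master with kubeadm token list.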

Back on the master, check the cluster state

[root@master k8s]# kubectl get nodes

# list all nodes

NAME STATUS ROLES AGE VERSION

master Ready master 26m v1.15.0

node1 Ready <none> 5m54s v1.15.0

node2 Ready <none> 5m49s v1.15.0
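
Freshly joined nodes usually show NotReady for a short while until flannel is running on them. Rather than re-reading the table by eye, the output can be filtered. A small sketch that reads kubectl get nodes output on standard input; the function name not_ready_nodes is made up for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is therefore listed as well.
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME, $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example (on the master):
#   kubectl get nodes | not_ready_nodes
```

Empty output means every node reports Ready.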

[root@master k8s]# kubectl get pods --all-namespaces

# list the pods in all namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-bccdc95cf-bwvrt 1/1 Running 0 26m

kube-system coredns-bccdc95cf-j99hn 1/1 Running 0 26m

kube-system etcd-master 1/1 Running 0 25m

kube-system kube-apiserver-master 1/1 Running 0 26m

kube-system kube-controller-manager-master 1/1 Running 0 25m

kube-system kube-flannel-ds-amd64-gsl25 1/1 Running 0 6m31s

kube-system kube-flannel-ds-amd64-rf72f 1/1 Running 0 6m26s

kube-system kube-flannel-ds-amd64-xm7xx 1/1 Running 0 9m54s

kube-system kube-proxy-ff4rg 1/1 Running 0 26m

kube-system kube-proxy-r6tbz 1/1 Running 0 6m31s

kube-system kube-proxy-t9qw7 1/1 Running 0 6m26s

kube-system kube-scheduler-master 1/1 Running 0 25m

[root@master k8s]# kubectl get pod -n kube-system

# list the pods in one specific namespace

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-bwvrt 1/1 Running 0 42m

coredns-bccdc95cf-j99hn 1/1 Running 0 42m

etcd-master 1/1 Running 0 41m

kube-apiserver-master 1/1 Running 0 41m

kube-controller-manager-master 1/1 Running 0 41m

kube-flannel-ds-amd64-gsl25 1/1 Running 0 21m

kube-flannel-ds-amd64-rf72f 1/1 Running 0 21m

kube-flannel-ds-amd64-xm7xx 1/1 Running 0 25m

kube-proxy-ff4rg 1/1 Running 0 42m

kube-proxy-r6tbz 1/1 Running 0 21m

kube-proxy-t9qw7 1/1 Running 0 21m

kube-scheduler-master 1/1 Running 0 41m

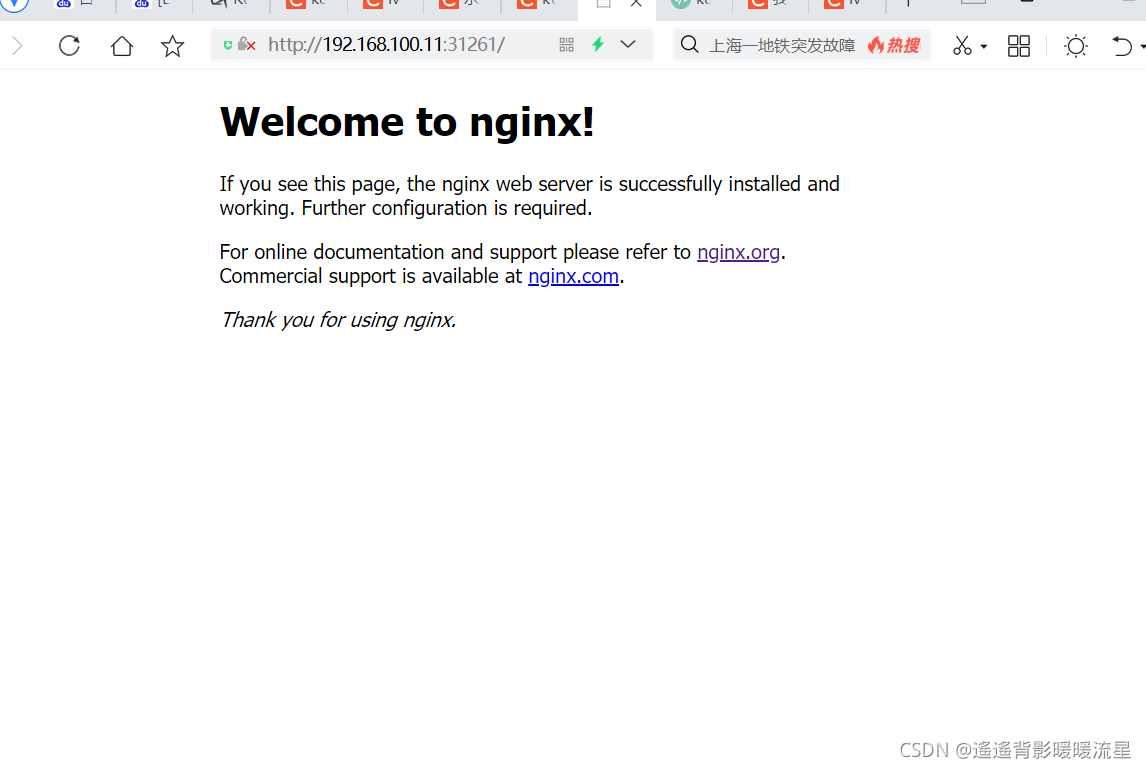

V. Create a workload

[root@master k8s]# kubectl create deployment nginx --image=daocloud.io/library/nginx

# create a Deployment, which starts one nginx pod

deployment.apps/nginx created

[root@master k8s]# kubectl expose deployment nginx --port=80 --type=NodePort

# expose port 80 through a NodePort service

service/nginx exposed

[root@master k8s]# kubectl get pod,svc

# check the status and the mapped port number

NAME READY STATUS RESTARTS AGE

pod/nginx-5ffd98bc9c-8wpcw 1/1 Running 0 51s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 136m

service/nginx NodePort 10.1.56.61 <none> 80:31261/TCP 30s

[root@master k8s]# curl -I 192.168.100.11:31261 # status code 200 means the request succeeded

HTTP/1.1 200 OK

Server: nginx/1.19.6

Date: Sun, 14 Nov 2021 15:20:26 GMT

Content-Type: text/html

Content-Length: 612
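
The node port (31261 here) is assigned by the cluster and will differ on another installation, so it helps to read it programmatically instead of copying it from the table. On a live cluster, kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}' prints it directly. The sketch below does the same from the PORT(S) column text; the function name node_port_of is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the node port from a PORT(S) field such as "80:31261/TCP".
node_port_of() {
    local field="$1"
    field="${field#*:}"            # drop the service port and the colon
    printf '%s\n' "${field%%/*}"   # drop the protocol suffix
}

# Example:
#   port=$(node_port_of '80:31261/TCP')
#   curl -I "192.168.100.11:${port}"
```

Because a NodePort service listens on every node, the same port should also answer on 192.168.100.12 and 192.168.100.13.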