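Basics

- KubeFATE v1.6.1

- Deployed with docker-compose

- The training and inference services run on the same server, and their Docker containers reach each other over the internal network; four servers are deployed in total.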

Write the ZooKeeper startup file and configuration file

# Contents of the ZooKeeper docker-compose.yml
version: '3'
networks:
  fate-network:
    external: true
    name: fate-network
services:
  zoo1:
    image: zookeeper
    restart: always
    container_name: zoo1
    expose:
      - 2181
    ports:
      - 2181:2181
      - 8000:2181
    networks:
      - fate-network
    volumes:
      - /data/projects/zookeeper/zoo1/data:/data
      - /data/projects/zookeeper/zoo1/datalog:/datalog
      - ./confs/zoo.cfg:/apache-zookeeper-3.7.0-bin/conf/zoo.cfg
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo2:
    image: zookeeper
    restart: always
    container_name: zoo2
    networks:
      - fate-network
    expose:
      - 2181
    ports:
      - 2182:2181
    volumes:
      - /data/projects/zookeeper/zoo2/data:/data
      - /data/projects/zookeeper/zoo2/datalog:/datalog
      - ./confs/zoo.cfg:/apache-zookeeper-3.7.0-bin/conf/zoo.cfg
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
  zoo3:
    image: zookeeper
    restart: always
    container_name: zoo3
    networks:
      - fate-network
    expose:
      - 2181
    ports:
      - 2183:2181
    volumes:
      - /data/projects/zookeeper/zoo3/data:/data
      - /data/projects/zookeeper/zoo3/datalog:/datalog
      - ./confs/zoo.cfg:/apache-zookeeper-3.7.0-bin/conf/zoo.cfg
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
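The ZOO_SERVERS value follows the ZooKeeper 3.5+ pattern server.<myid>=<host>:<quorum port>:<election port>;<client port>, and each container's ZOO_MY_ID must equal the N of its own server.N entry. A minimal sketch that builds this string for any list of hosts, plus the majority size an ensemble needs to stay available; the helper names build_zoo_servers and quorum_size are hypothetical:

```python
def build_zoo_servers(hosts, quorum_port=2888, election_port=3888, client_port=2181):
    """Hypothetical helper: build a ZOO_SERVERS value for the official image.

    myid values start at 1 and follow the order of `hosts`, so the container
    running hosts[i] must use ZOO_MY_ID = i + 1.
    """
    entries = [
        f"server.{myid}={host}:{quorum_port}:{election_port};{client_port}"
        for myid, host in enumerate(hosts, start=1)
    ]
    # Entries are separated by single spaces.
    return " ".join(entries)


def quorum_size(n):
    # An ensemble of n servers stays available while a strict majority is up.
    return n // 2 + 1


print(build_zoo_servers(["zoo1", "zoo2", "zoo3"]))
print(quorum_size(3))  # 2
```

With three nodes the ensemble keeps serving as long as two are up, which is why three (not two) ZooKeeper containers are deployed here.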

ZooKeeper configuration file (zoo.cfg) contents:

# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
#dataDir=/tmp/zookeeper
# the port at which the clients will connect
clientPort=2181
dataDir=/data/projects/zookeeper
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1
admin.enableServer=false
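initLimit and syncLimit are counted in ticks, so their real durations depend on tickTime: with tickTime=2000, initLimit=10 gives followers 20 s to connect and sync to the leader, and syncLimit=5 lets a follower lag by at most 10 s. A minimal sketch that reads a zoo.cfg-style file and derives both limits in milliseconds; it also shows that a misspelled key (for example "ticktickTime") leaves tickTime unset. parse_zoo_cfg and limits_ms are hypothetical names:

```python
def parse_zoo_cfg(text):
    """Read key=value lines, skipping blank lines and '#' comment lines."""
    cfg = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            cfg[key.strip()] = value.strip()
    return cfg


def limits_ms(cfg):
    # Both limits are multiples of tickTime, so a missing tickTime is fatal here.
    if "tickTime" not in cfg:
        raise KeyError("tickTime is not set (check zoo.cfg for typos)")
    tick = int(cfg["tickTime"])
    return {
        "init_ms": int(cfg["initLimit"]) * tick,
        "sync_ms": int(cfg["syncLimit"]) * tick,
    }


sample = """
# The number of milliseconds of each tick
tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181
dataDir=/data/projects/zookeeper
admin.enableServer=false
"""
print(limits_ms(parse_zoo_cfg(sample)))  # {'init_ms': 20000, 'sync_ms': 10000}
```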

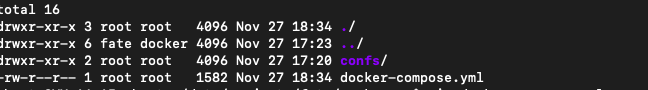

目录结构如图:

Start the containers directly with docker-compose up -d and verify them in the logs.
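Besides the logs, each node can be asked for its role with ZooKeeper's srvr four-letter command, which is in ZooKeeper's default 4lw.commands.whitelist. A minimal sketch, assuming the host ports 2181, 2182 and 2183 mapped in the compose file above; query_srvr and parse_mode are hypothetical names:

```python
import socket


def query_srvr(host, port, timeout=3.0):
    """Send the 'srvr' four-letter word and return the raw text reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        # The server closes the connection after answering.
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(srvr_output):
    """Return the value of the 'Mode:' line (leader/follower/standalone), or None."""
    for line in srvr_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None
```

For example, calling parse_mode(query_srvr("127.0.0.1", port)) for each of the three ports should report one leader and two followers on a healthy ensemble; if every node reports standalone, the ZOO_SERVERS settings were not applied.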

Modify the FATE serving-server and serving-proxy configuration parameters

serving-server configuration file:

#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#
port=8000
#serviceRoleName=serving
# cache
#remoteModelInferenceResultCacheSwitch=false
#cache.type=local
#model.cache.path=
# local cache
#local.cache.maxsize=10000
#local.cache.expire=30
#local.cache.interval=3
# external cache
redis.ip=redis
redis.port=6379
### configure this parameter to use cluster mode
#redis.cluster.nodes=127.0.0.1:6379,127.0.0.1:6380,127.0.0.1:6381,127.0.0.1:6382,127.0.0.1:6383,127.0.0.1:6384
### this password is common in stand-alone mode and cluster mode
redis.password=fate_dev
#redis.timeout=10
#redis.expire=3000
#redis.maxTotal=100
#redis.maxIdle=100
# external subsystem
# must be disabled, so keep it commented out:
# proxy=serving-proxy:8879
# adapter
feature.single.adaptor=com.webank.ai.fate.serving.adaptor.dataaccess.MockAdapter
feature.batch.adaptor=com.webank.ai.fate.serving.adaptor.dataaccess.MockBatchAdapter
# model transfer
model.transfer.url=http://172.21.16.15:9380/v1/model/transfer
# zk router (modified: zk.url, useRegister, useZkRouter)
zk.url=zoo1:2181,zoo2:2181,zoo3:2181
useRegister=true
useZkRouter=true
# zk acl
#acl.enable=false
#acl.username=
#acl.password=
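Assuming these files are read with standard Java .properties rules, '#' starts a comment only as the first non-blank character of a line; a note such as "# modified" written after a value on the same line becomes part of the value, so "useRegister=true # modified" would no longer read as plain true. A simplified sketch (it only handles '=' as the separator) that flags such values; load_properties and inline_comment_keys are hypothetical names:

```python
def load_properties(text):
    """Simplified .properties reader: full-line '#'/'!' comments, 'key=value' pairs."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        # The rest of the line after the first '=' is the value (blanks trimmed here).
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def inline_comment_keys(props):
    # A value that still contains '#' most likely carries a trailing comment.
    return sorted(k for k, v in props.items() if "#" in v)


text = """
useRegister=true #modified
# modified
useZkRouter=true
"""
props = load_properties(text)
print(props["useRegister"])        # true #modified
print(inline_comment_keys(props))  # ['useRegister']
```

This is why the "modified" notes belong on their own comment lines.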

serving-proxy configuration file changes:

# limitations under the License.
#
# coordinator same as Party ID
coordinator=10000
server.port=8059
#inference.service.name=serving
#random, consistent
#routeType=random
#route.table=/data/projects/fate-serving/serving-proxy/conf/route_table.json
#auth.file=/data/projects/fate-serving/serving-proxy/conf/auth_config.json
# zk router
#useZkRouter=true
# modified: useRegister, useZkRouter, zk.url
useRegister=true
useZkRouter=true
zk.url=zoo1:2181,zoo2:2181,zoo3:2181
# zk acl
#acl.enable=false
#acl.username=
#acl.password=
# modified: every setting from here to the end of the file
# intra-partyid port
proxy.grpc.intra.port=8879
# inter-partyid port
proxy.grpc.inter.port=8869
# grpc
proxy.grpc.inference.timeout=3000
proxy.grpc.inference.async.timeout=1000
proxy.grpc.unaryCall.timeout=3000
proxy.grpc.threadpool.coresize=50
proxy.grpc.threadpool.maxsize=100
proxy.grpc.threadpool.queuesize=10
proxy.async.timeout=5000
proxy.async.coresize=10
proxy.async.maxsize=100
proxy.grpc.batch.inference.timeout=10000
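serving-server, serving-proxy and serving-admin all have to point at the same ZooKeeper ensemble through zk.url, and the two serving components also need useRegister and useZkRouter enabled. A minimal consistency-check sketch, assuming each file has already been loaded into a plain dict of strings; parse_zk_url, check_zk_settings and the service names used as dict keys are hypothetical:

```python
REQUIRED_FLAGS = ("useRegister", "useZkRouter")


def parse_zk_url(url):
    """'zoo1:2181,zoo2:2181' -> [('zoo1', 2181), ('zoo2', 2181)]"""
    nodes = []
    for item in url.split(","):
        host, _, port = item.strip().rpartition(":")
        nodes.append((host, int(port)))
    return nodes


def check_zk_settings(services, flag_services):
    """services: {name: {key: value}}; flag_services: names that must also
    enable registration and ZK routing. Returns a list of problem strings."""
    problems = []
    ensembles = {}
    for name, props in services.items():
        url = props.get("zk.url")
        if url is None:
            problems.append(f"{name}: zk.url is not set")
        else:
            # Order of nodes does not matter, so compare sorted lists.
            ensembles[name] = sorted(parse_zk_url(url))
    if len({tuple(nodes) for nodes in ensembles.values()}) > 1:
        problems.append(f"zk.url differs between services: {ensembles}")
    for name in flag_services:
        for flag in REQUIRED_FLAGS:
            if services[name].get(flag) != "true":
                problems.append(f"{name}: {flag} should be true")
    return problems
```

An empty list means the three components agree on the ensemble and routing is switched on where it is needed.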

Write the serving-admin startup and configuration files

# serving-admin docker-compose.yml
version: '3'
networks:
  fate-network:
    external: true
    name: confs-10000_fate-network
services:
  serving-admin:
    image: "federatedai/serving-admin:2.0.4-release"
    volumes:
      - ./confs/serving-admin/conf/application.properties:/data/projects/fate/serving-admin/conf/application.properties
    ports:
      - "9300:8350"
    networks:
      - fate-network
    restart: always
    container_name: serving-admin

Configuration file (application.properties):

server.port=8350
# cache
#local.cache.expire=300
# zk
#zk.url=zoo1:2181
zk.url=zoo1:2181,zoo2:2181,zoo3:2181
# zk acl
#acl.enable=false
#acl.username=
#acl.password=
# grpc
#grpc.timeout=5000
# username & password
admin.username=admin
admin.password=admin

Start it directly with docker-compose up -d and verify it in the logs. Note: serving-admin must be started last, only after ZooKeeper and FATE-Serving have both started successfully.
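To respect this start order in a script, one option is to wait until the ZooKeeper ports accept TCP connections before running docker-compose up -d for serving-admin. A minimal sketch; wait_for_port is a hypothetical helper, and the hosts and ports to poll (for example 2181, 2182 and 2183 on the ZooKeeper host) are assumptions to adapt:

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Poll until a TCP connection to host:port succeeds; False after timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, all(wait_for_port("127.0.0.1", p) for p in (2181, 2182, 2183)) blocks until all three nodes listen. An open port only shows that the process is listening, so the logs remain the authoritative check.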

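Then open ip:9300 in a browser (the compose file maps host port 9300 to the container's port 8350) and log in with the username and password from the configuration file.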