Kubernetes CKA Certified Administrator Notes: Kubernetes Monitoring and Logging

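1. Viewing Cluster Resource Status

Check the status of the master components (componentstatuses is deprecated as of v1.19):

kubectl get cs

Check node status:

kubectl get node

Show the URLs of the services proxied by the API server:

kubectl cluster-info

Dump detailed cluster information:

kubectl cluster-info dump

Show the details of a resource:

kubectl describe <resource> <name>

Watch a resource for changes:

kubectl get pod <pod-name> --watch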
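
The node table printed by kubectl get node is easy to check from a script. The sketch below is one possible way to do it, not part of the original notes: the function name not_ready_nodes is made up for illustration. The first three sample rows are copied from the kubectl get node output recorded later in these notes, and the k8s-node3 row is hypothetical.

```shell
#!/bin/sh
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Reads the table produced by `kubectl get node` on stdin.
# Note: a cordoned node shows "Ready,SchedulingDisabled" and is reported too.
not_ready_nodes() {
  # NR > 1 skips the header row; NF skips blank lines; $2 is STATUS.
  awk 'NR > 1 && NF && $2 != "Ready" { print $1 }'
}

# Sample input. The k8s-node3 row is hypothetical and only demonstrates
# what a failing node looks like; the other rows come from the transcript.
sample='NAME STATUS ROLES AGE VERSION
k8s-master Ready master 7d6h v1.19.0
k8s-node1 Ready <none> 7d5h v1.19.0
k8s-node2 Ready <none> 7d5h v1.19.0
k8s-node3 NotReady <none> 1d v1.19.0'

printf '%s\n' "$sample" | not_ready_nodes
```

Against a live cluster the same function can be fed directly: kubectl get node | not_ready_nodes.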

[root@k8s-master ~]# kubectl --help

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Documentation of resources

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a Deployment, ReplicaSet or Replication Controller

autoscale Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster info

top Display Resource (CPU/Memory/Storage) usage.

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers.

auth Inspect authorization

Advanced Commands:

diff Diff live version against would-be applied version

apply Apply a configuration to a resource by filename or stdin

patch Update field(s) of a resource using strategic merge patch

replace Replace a resource by filename or stdin

wait Experimental: Wait for a specific condition on one or many resources.

convert Convert config files between different API versions

kustomize Build a kustomization target from a directory or a remote url.

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash or zsh)

Other Commands:

alpha Commands for features in alpha

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins.

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

[root@k8s-master ~]# kubectl api-resources

NAME SHORTNAMES APIGROUP NAMESPACED KIND

bindings true Binding

componentstatuses cs false ComponentStatus

configmaps cm true ConfigMap

endpoints ep true Endpoints

events ev true Event

limitranges limits true LimitRange

namespaces ns false Namespace

nodes no false Node

persistentvolumeclaims pvc true PersistentVolumeClaim

persistentvolumes pv false PersistentVolume

pods po true Pod

podtemplates true PodTemplate

replicationcontrollers rc true ReplicationController

resourcequotas quota true ResourceQuota

secrets true Secret

serviceaccounts sa true ServiceAccount

services svc true Service

mutatingwebhookconfigurations admissionregistration.k8s.io false MutatingWebhookConfiguration

validatingwebhookconfigurations admissionregistration.k8s.io false ValidatingWebhookConfiguration

customresourcedefinitions crd,crds apiextensions.k8s.io false CustomResourceDefinition

apiservices apiregistration.k8s.io false APIService

controllerrevisions apps true ControllerRevision

daemonsets ds apps true DaemonSet

deployments deploy apps true Deployment

replicasets rs apps true ReplicaSet

statefulsets sts apps true StatefulSet

tokenreviews authentication.k8s.io false TokenReview

localsubjectaccessreviews authorization.k8s.io true LocalSubjectAccessReview

selfsubjectaccessreviews authorization.k8s.io false SelfSubjectAccessReview

selfsubjectrulesreviews authorization.k8s.io false SelfSubjectRulesReview

subjectaccessreviews authorization.k8s.io false SubjectAccessReview

horizontalpodautoscalers hpa autoscaling true HorizontalPodAutoscaler

cronjobs cj batch true CronJob

jobs batch true Job

certificatesigningrequests csr certificates.k8s.io false CertificateSigningRequest

leases coordination.k8s.io true Lease

bgpconfigurations crd.projectcalico.org false BGPConfiguration

bgppeers crd.projectcalico.org false BGPPeer

blockaffinities crd.projectcalico.org false BlockAffinity

clusterinformations crd.projectcalico.org false ClusterInformation

felixconfigurations crd.projectcalico.org false FelixConfiguration

globalnetworkpolicies crd.projectcalico.org false GlobalNetworkPolicy

globalnetworksets crd.projectcalico.org false GlobalNetworkSet

hostendpoints crd.projectcalico.org false HostEndpoint

ipamblocks crd.projectcalico.org false IPAMBlock

ipamconfigs crd.projectcalico.org false IPAMConfig

ipamhandles crd.projectcalico.org false IPAMHandle

ippools crd.projectcalico.org false IPPool

kubecontrollersconfigurations crd.projectcalico.org false KubeControllersConfiguration

networkpolicies crd.projectcalico.org true NetworkPolicy

networksets crd.projectcalico.org true NetworkSet

endpointslices discovery.k8s.io true EndpointSlice

events ev events.k8s.io true Event

ingresses ing extensions true Ingress

ingressclasses networking.k8s.io false IngressClass

ingresses ing networking.k8s.io true Ingress

networkpolicies netpol networking.k8s.io true NetworkPolicy

runtimeclasses node.k8s.io false RuntimeClass

poddisruptionbudgets pdb policy true PodDisruptionBudget

podsecuritypolicies psp policy false PodSecurityPolicy

clusterrolebindings rbac.authorization.k8s.io false ClusterRoleBinding

clusterroles rbac.authorization.k8s.io false ClusterRole

rolebindings rbac.authorization.k8s.io true RoleBinding

roles rbac.authorization.k8s.io true Role

priorityclasses pc scheduling.k8s.io false PriorityClass

csidrivers storage.k8s.io false CSIDriver

csinodes storage.k8s.io false CSINode

storageclasses sc storage.k8s.io false StorageClass

volumeattachments storage.k8s.io false VolumeAttachment

[root@k8s-master ~]# kubectl cluster-info

Kubernetes master is running at https://10.0.0.61:6443

KubeDNS is running at https://10.0.0.61:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 3d23h

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 3d23h

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 3d23h

web-96d5df5c8-ghb6g 1/1 Running 1 6d17h

[root@k8s-master ~]# kubectl describe web-96d5df5c8-ghb6g

error: the server doesn't have a resource type "web-96d5df5c8-ghb6g"

[root@k8s-master ~]# kubectl describe pod web-96d5df5c8-ghb6g

Name: web-96d5df5c8-ghb6g

Namespace: default

Priority: 0

Node: k8s-node1/10.0.0.62

Start Time: Mon, 22 Nov 2021 11:30:03 +0800

Labels: app=web

pod-template-hash=96d5df5c8

Annotations: cni.projectcalico.org/podIP: 10.244.36.73/32

cni.projectcalico.org/podIPs: 10.244.36.73/32

Status: Running

IP: 10.244.36.73

IPs:

IP: 10.244.36.73

Controlled By: ReplicaSet/web-96d5df5c8

Containers:

nginx:

Container ID: docker://ee0b322c28e2879554f2f27d8865437b95ba0ab1f2c6b8b1489393cf3e6c1fa8

Image: nginx

Image ID: docker-pullable://nginx@sha256:097c3a0913d7e3a5b01b6c685a60c03632fc7a2b50bc8e35bcaa3691d788226e

Port: <none>

Host Port: <none>

State: Running

Started: Fri, 26 Nov 2021 05:58:56 +0800

Last State: Terminated

Reason: Error

Exit Code: 255

Started: Mon, 22 Nov 2021 11:30:28 +0800

Finished: Fri, 26 Nov 2021 05:57:23 +0800

Ready: True

Restart Count: 1

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-8grtj (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-8grtj:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-8grtj

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events: <none>

[root@k8s-master ~]# kubectl describe node

Name: k8s-master

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k8s-master

kubernetes.io/os=linux

node-role.kubernetes.io/master=

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 172.16.1.61/24

projectcalico.org/IPv4IPIPTunnelAddr: 10.244.235.192

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 21 Nov 2021 23:18:39 +0800

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

HolderIdentity: k8s-master

AcquireTime: <unset>

RenewTime: Mon, 29 Nov 2021 05:19:23 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

NetworkUnavailable False Fri, 26 Nov 2021 05:58:25 +0800 Fri, 26 Nov 2021 05:58:25 +0800 CalicoIsUp Calico is running on this node

MemoryPressure False Mon, 29 Nov 2021 05:18:36 +0800 Sun, 21 Nov 2021 23:18:34 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Mon, 29 Nov 2021 05:18:36 +0800 Sun, 21 Nov 2021 23:18:34 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Mon, 29 Nov 2021 05:18:36 +0800 Sun, 21 Nov 2021 23:18:34 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Mon, 29 Nov 2021 05:18:36 +0800 Sun, 21 Nov 2021 23:37:59 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 10.0.0.61

Hostname: k8s-master

Capacity:

cpu: 2

ephemeral-storage: 30185064Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1863020Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 27818554937

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1760620Ki

pods: 110

System Info:

Machine ID: c20304a03ec54a0fa8aab6469d0a16dc

System UUID: 57654D56-6399-91DA-1188-C71724A08E29

Boot ID: 09c44076-1aa1-46a0-a1ad-8699d13ee2e6

Kernel Version: 3.10.0-1160.45.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://20.10.11

Kubelet Version: v1.19.0

Kube-Proxy Version: v1.19.0

PodCIDR: 10.244.0.0/24

PodCIDRs: 10.244.0.0/24

Non-terminated Pods: (7 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

kube-system calico-kube-controllers-97769f7c7-z6npb 0 (0%) 0 (0%) 0 (0%) 0 (0%) 7d5h

kube-system calico-node-vqzdj 250m (12%) 0 (0%) 0 (0%) 0 (0%) 7d5h

kube-system etcd-k8s-master 0 (0%) 0 (0%) 0 (0%) 0 (0%) 7d6h

kube-system kube-apiserver-k8s-master 250m (12%) 0 (0%) 0 (0%) 0 (0%) 7d6h

kube-system kube-controller-manager-k8s-master 200m (10%) 0 (0%) 0 (0%) 0 (0%) 6d18h

kube-system kube-proxy-tvzpd 0 (0%) 0 (0%) 0 (0%) 0 (0%) 7d6h

kube-system kube-scheduler-k8s-master 100m (5%) 0 (0%) 0 (0%) 0 (0%) 6d18h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 800m (40%) 0 (0%)

memory 0 (0%) 0 (0%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events: <none>

Name: k8s-node1

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k8s-node1

kubernetes.io/os=linux

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 172.16.1.62/24

projectcalico.org/IPv4IPIPTunnelAddr: 10.244.36.64

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 21 Nov 2021 23:23:18 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: k8s-node1

AcquireTime: <unset>

RenewTime: Mon, 29 Nov 2021 05:19:23 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

NetworkUnavailable False Fri, 26 Nov 2021 05:58:30 +0800 Fri, 26 Nov 2021 05:58:30 +0800 CalicoIsUp Calico is running on this node

MemoryPressure False Mon, 29 Nov 2021 05:16:45 +0800 Sun, 21 Nov 2021 23:23:18 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Mon, 29 Nov 2021 05:16:45 +0800 Sun, 21 Nov 2021 23:23:18 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Mon, 29 Nov 2021 05:16:45 +0800 Sun, 21 Nov 2021 23:23:18 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Mon, 29 Nov 2021 05:16:45 +0800 Fri, 26 Nov 2021 05:58:09 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 10.0.0.62

Hostname: k8s-node1

Capacity:

cpu: 2

ephemeral-storage: 30185064Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1863020Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 27818554937

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1760620Ki

pods: 110

System Info:

Machine ID: c20304a03ec54a0fa8aab6469d0a16dc

System UUID: 153A4D56-390D-E3C5-B1BB-446F0639112A

Boot ID: 2f3a132d-3bf6-4186-ab1f-ea88e40e96ac

Kernel Version: 3.10.0-1160.45.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://20.10.11

Kubelet Version: v1.19.0

Kube-Proxy Version: v1.19.0

PodCIDR: 10.244.1.0/24

PodCIDRs: 10.244.1.0/24

Non-terminated Pods: (7 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

default my-dep-5f8dfc8c78-dvxp8 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d23h

default my-dep-5f8dfc8c78-f4ln4 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d23h

default web-96d5df5c8-ghb6g 0 (0%) 0 (0%) 0 (0%) 0 (0%) 6d17h

kube-system calico-node-4pwdc 250m (12%) 0 (0%) 0 (0%) 0 (0%) 7d5h

kube-system coredns-6d56c8448f-tbsmv 100m (5%) 0 (0%) 70Mi (4%) 170Mi (9%) 7d6h

kube-system kube-proxy-q2xfq 0 (0%) 0 (0%) 0 (0%) 0 (0%) 7d5h

test my-dep-5f8dfc8c78-77cld 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d22h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 350m (17%) 0 (0%)

memory 70Mi (4%) 170Mi (9%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events: <none>

Name: k8s-node2

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k8s-node2

kubernetes.io/os=linux

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 172.16.1.63/24

projectcalico.org/IPv4IPIPTunnelAddr: 10.244.169.128

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 21 Nov 2021 23:23:27 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: k8s-node2

AcquireTime: <unset>

RenewTime: Mon, 29 Nov 2021 05:19:23 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

NetworkUnavailable False Fri, 26 Nov 2021 05:59:03 +0800 Fri, 26 Nov 2021 05:59:03 +0800 CalicoIsUp Calico is running on this node

MemoryPressure False Mon, 29 Nov 2021 05:16:14 +0800 Sun, 21 Nov 2021 23:23:27 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Mon, 29 Nov 2021 05:16:14 +0800 Sun, 21 Nov 2021 23:23:27 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Mon, 29 Nov 2021 05:16:14 +0800 Sun, 21 Nov 2021 23:23:27 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Mon, 29 Nov 2021 05:16:14 +0800 Fri, 26 Nov 2021 05:58:46 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 10.0.0.63

Hostname: k8s-node2

Capacity:

cpu: 2

ephemeral-storage: 30185064Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1863020Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 27818554937

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1760620Ki

pods: 110

System Info:

Machine ID: c20304a03ec54a0fa8aab6469d0a16dc

System UUID: 46874D56-AB2E-7867-1BD5-C67713201686

Boot ID: a8891669-cf69-4f0c-bcd9-400c059e3b2d

Kernel Version: 3.10.0-1160.45.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://20.10.11

Kubelet Version: v1.19.0

Kube-Proxy Version: v1.19.0

PodCIDR: 10.244.2.0/24

PodCIDRs: 10.244.2.0/24

Non-terminated Pods: (8 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

default my-dep-5f8dfc8c78-j9fqp 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d23h

kube-system calico-node-9r6zd 250m (12%) 0 (0%) 0 (0%) 0 (0%) 7d5h

kube-system coredns-6d56c8448f-gcgrh 100m (5%) 0 (0%) 70Mi (4%) 170Mi (9%) 7d6h

kube-system kube-proxy-5qpgc 0 (0%) 0 (0%) 0 (0%) 0 (0%) 7d5h

kubernetes-dashboard dashboard-metrics-scraper-7b59f7d4df-jxb4b 0 (0%) 0 (0%) 0 (0%) 0 (0%) 6d13h

kubernetes-dashboard kubernetes-dashboard-5dbf55bd9d-zpr7t 0 (0%) 0 (0%) 0 (0%) 0 (0%) 6d13h

test my-dep-5f8dfc8c78-58sdk 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d22h

test my-dep-5f8dfc8c78-965w7 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d22h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 350m (17%) 0 (0%)

memory 70Mi (4%) 170Mi (9%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events: <none>

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7d6h

my-dep NodePort 10.111.199.51 <none> 80:31734/TCP 3d23h

web NodePort 10.96.132.243 <none> 80:31340/TCP 6d17h

[root@k8s-master ~]# kubectl describe svc web

Name: web

Namespace: default

Labels: app=web

Annotations: <none>

Selector: app=web

Type: NodePort

IP: 10.96.132.243

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 31340/TCP

Endpoints: 10.244.36.73:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

[root@k8s-master ~]# kubectl get pods -w

NAME READY STATUS RESTARTS AGE

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 3d23h

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 3d23h

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 3d23h

web-96d5df5c8-ghb6g 1/1 Running 1 6d17h

^C[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 7d6h v1.19.0

k8s-node1 Ready <none> 7d5h v1.19.0

k8s-node2 Ready <none> 7d5h v1.19.0

[root@k8s-master ~]# kubectl describe node k8s-node1

Name: k8s-node1

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=k8s-node1

kubernetes.io/os=linux

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

projectcalico.org/IPv4Address: 172.16.1.62/24

projectcalico.org/IPv4IPIPTunnelAddr: 10.244.36.64

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sun, 21 Nov 2021 23:23:18 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: k8s-node1

AcquireTime: <unset>

RenewTime: Mon, 29 Nov 2021 05:22:53 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

NetworkUnavailable False Fri, 26 Nov 2021 05:58:30 +0800 Fri, 26 Nov 2021 05:58:30 +0800 CalicoIsUp Calico is running on this node

MemoryPressure False Mon, 29 Nov 2021 05:21:48 +0800 Sun, 21 Nov 2021 23:23:18 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Mon, 29 Nov 2021 05:21:48 +0800 Sun, 21 Nov 2021 23:23:18 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Mon, 29 Nov 2021 05:21:48 +0800 Sun, 21 Nov 2021 23:23:18 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Mon, 29 Nov 2021 05:21:48 +0800 Fri, 26 Nov 2021 05:58:09 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 10.0.0.62

Hostname: k8s-node1

Capacity:

cpu: 2

ephemeral-storage: 30185064Ki

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1863020Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 27818554937

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 1760620Ki

pods: 110

System Info:

Machine ID: c20304a03ec54a0fa8aab6469d0a16dc

System UUID: 153A4D56-390D-E3C5-B1BB-446F0639112A

Boot ID: 2f3a132d-3bf6-4186-ab1f-ea88e40e96ac

Kernel Version: 3.10.0-1160.45.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://20.10.11

Kubelet Version: v1.19.0

Kube-Proxy Version: v1.19.0

PodCIDR: 10.244.1.0/24

PodCIDRs: 10.244.1.0/24

Non-terminated Pods: (7 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

default my-dep-5f8dfc8c78-dvxp8 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d23h

default my-dep-5f8dfc8c78-f4ln4 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d23h

default web-96d5df5c8-ghb6g 0 (0%) 0 (0%) 0 (0%) 0 (0%) 6d17h

kube-system calico-node-4pwdc 250m (12%) 0 (0%) 0 (0%) 0 (0%) 7d5h

kube-system coredns-6d56c8448f-tbsmv 100m (5%) 0 (0%) 70Mi (4%) 170Mi (9%) 7d6h

kube-system kube-proxy-q2xfq 0 (0%) 0 (0%) 0 (0%) 0 (0%) 7d5h

test my-dep-5f8dfc8c78-77cld 0 (0%) 0 (0%) 0 (0%) 0 (0%) 3d22h

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 350m (17%) 0 (0%)

memory 70Mi (4%) 170Mi (9%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events: <none>

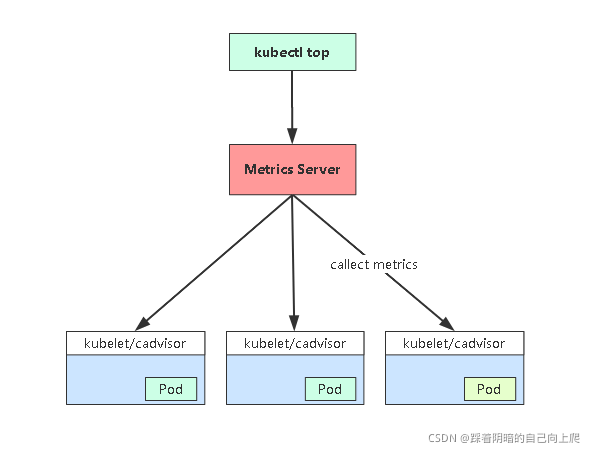

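2. Monitoring Cluster Resource Utilization

Metrics Server + cAdvisor monitor cluster resource consumption.

Metrics Server is a cluster-wide aggregator of resource usage data and is deployed as an application inside the cluster. It collects metrics from the kubelet API on each node and is registered with the master API server through the Kubernetes aggregation layer.

Deploying Metrics Server: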

# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.7/components.yaml

# vi components.yaml

...

      containers:
      - name: metrics-server
        image: lizhenliang/metrics-server:v0.3.7
        imagePullPolicy: IfNotPresent
        args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-insecure-tls  # do not verify the HTTPS certificate presented by the kubelet
        - --kubelet-preferred-address-types=InternalIP  # connect to the kubelet using the node IP

...

Project repository: https://github.com/kubernetes-sigs/metrics-server

View node resource consumption:

kubectl top node <node name>

View Pod resource consumption:

kubectl top pod <pod name>
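
Once the Metrics API is available, the table from kubectl top node can be filtered to flag busy nodes. The following is a minimal sketch under stated assumptions: the function name high_memory_nodes and the 68 percent limit are illustrative choices, and the sample rows are the kubectl top node output recorded further down in these notes.

```shell
#!/bin/sh
# Print nodes whose MEMORY% (5th column of `kubectl top node`) exceeds a limit.
# Usage: high_memory_nodes LIMIT_PERCENT   (table is read from stdin)
high_memory_nodes() {
  awk -v limit="$1" 'NR > 1 && NF >= 5 {
    pct = $5
    sub(/%$/, "", pct)                 # strip the trailing percent sign
    if (pct + 0 > limit + 0) print $1, $5
  }'
}

# Sample rows copied from the transcript in these notes.
sample='NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
k8s-master 278m 13% 1193Mi 69%
k8s-node1 173m 8% 1153Mi 67%
k8s-node2 158m 7% 1197Mi 69%'

# The limit of 68 is an arbitrary value chosen for the demonstration.
printf '%s\n' "$sample" | high_memory_nodes 68
```

With the sample data this lists k8s-master and k8s-node2, both at 69%. On a live cluster the input would come from kubectl top node | high_memory_nodes 68.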

[root@k8s-master ~]# kubectl top pod

error: Metrics API not available

[root@k8s-master ~]# rz -E

rz waiting to receive.

[root@k8s-master ~]# vi metrics-server.yaml

[root@k8s-master ~]# kubectl apply -f metrics-server.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

Warning: apiregistration.k8s.io/v1beta1 APIService is deprecated in v1.19+, unavailable in v1.22+; use apiregistration.k8s.io/v1 APIService

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-z6npb 0/1 Running 1 7d6h

calico-node-4pwdc 1/1 Running 1 7d6h

calico-node-9r6zd 1/1 Running 1 7d6h

calico-node-vqzdj 1/1 Running 1 7d6h

coredns-6d56c8448f-gcgrh 1/1 Running 1 7d6h

coredns-6d56c8448f-tbsmv 1/1 Running 1 7d6h

etcd-k8s-master 1/1 Running 1 7d6h

kube-apiserver-k8s-master 1/1 Running 3 7d6h

kube-controller-manager-k8s-master 0/1 Running 8 6d19h

kube-proxy-5qpgc 1/1 Running 1 7d6h

kube-proxy-q2xfq 1/1 Running 1 7d6h

kube-proxy-tvzpd 1/1 Running 1 7d6h

kube-scheduler-k8s-master 0/1 Running 8 6d19h

metrics-server-84f9866fdf-kt2nb 0/1 ContainerCreating 0 47s

[root@k8s-master ~]# kubectl top pod

Error from server (ServiceUnavailable): the server is currently unable to handle the request (get pods.metrics.k8s.io)

[root@k8s-master ~]# kubectl get apiservice

NAME SERVICE AVAILABLE AGE

v1. Local True 7d6h

v1.admissionregistration.k8s.io Local True 7d6h

v1.apiextensions.k8s.io Local True 7d6h

v1.apps Local True 7d6h

v1.authentication.k8s.io Local True 7d6h

v1.authorization.k8s.io Local True 7d6h

v1.autoscaling Local True 7d6h

v1.batch Local True 7d6h

v1.certificates.k8s.io Local True 7d6h

v1.coordination.k8s.io Local True 7d6h

v1.crd.projectcalico.org Local True 18h

v1.events.k8s.io Local True 7d6h

v1.networking.k8s.io Local True 7d6h

v1.rbac.authorization.k8s.io Local True 7d6h

v1.scheduling.k8s.io Local True 7d6h

v1.storage.k8s.io Local True 7d6h

v1beta1.admissionregistration.k8s.io Local True 7d6h

v1beta1.apiextensions.k8s.io Local True 7d6h

v1beta1.authentication.k8s.io Local True 7d6h

v1beta1.authorization.k8s.io Local True 7d6h

v1beta1.batch Local True 7d6h

v1beta1.certificates.k8s.io Local True 7d6h

v1beta1.coordination.k8s.io Local True 7d6h

v1beta1.discovery.k8s.io Local True 7d6h

v1beta1.events.k8s.io Local True 7d6h

v1beta1.extensions Local True 7d6h

v1beta1.metrics.k8s.io kube-system/metrics-server False (MissingEndpoints) 2m7s

v1beta1.networking.k8s.io Local True 7d6h

v1beta1.node.k8s.io Local True 7d6h

v1beta1.policy Local True 7d6h

v1beta1.rbac.authorization.k8s.io Local True 7d6h

v1beta1.scheduling.k8s.io Local True 7d6h

v1beta1.storage.k8s.io Local True 7d6h

v2beta1.autoscaling Local True 7d6h

v2beta2.autoscaling Local True 7d6h

[root@k8s-master ~]# kubectl get apiservice

NAME SERVICE AVAILABLE AGE

v1. Local True 7d6h

v1.admissionregistration.k8s.io Local True 7d6h

v1.apiextensions.k8s.io Local True 7d6h

v1.apps Local True 7d6h

v1.authentication.k8s.io Local True 7d6h

v1.authorization.k8s.io Local True 7d6h

v1.autoscaling Local True 7d6h

v1.batch Local True 7d6h

v1.certificates.k8s.io Local True 7d6h

v1.coordination.k8s.io Local True 7d6h

v1.crd.projectcalico.org Local True 18h

v1.events.k8s.io Local True 7d6h

v1.networking.k8s.io Local True 7d6h

v1.rbac.authorization.k8s.io Local True 7d6h

v1.scheduling.k8s.io Local True 7d6h

v1.storage.k8s.io Local True 7d6h

v1beta1.admissionregistration.k8s.io Local True 7d6h

v1beta1.apiextensions.k8s.io Local True 7d6h

v1beta1.authentication.k8s.io Local True 7d6h

v1beta1.authorization.k8s.io Local True 7d6h

v1beta1.batch Local True 7d6h

v1beta1.certificates.k8s.io Local True 7d6h

v1beta1.coordination.k8s.io Local True 7d6h

v1beta1.discovery.k8s.io Local True 7d6h

v1beta1.events.k8s.io Local True 7d6h

v1beta1.extensions Local True 7d6h

v1beta1.metrics.k8s.io kube-system/metrics-server True 3m58s

v1beta1.networking.k8s.io Local True 7d6h

v1beta1.node.k8s.io Local True 7d6h

v1beta1.policy Local True 7d6h

v1beta1.rbac.authorization.k8s.io Local True 7d6h

v1beta1.scheduling.k8s.io Local True 7d6h

v1beta1.storage.k8s.io Local True 7d6h

v2beta1.autoscaling Local True 7d6h

v2beta2.autoscaling Local True 7d6h

[root@k8s-master ~]# kubectl top pod

NAME CPU(cores) MEMORY(bytes)

my-dep-5f8dfc8c78-dvxp8 2m 178Mi

my-dep-5f8dfc8c78-f4ln4 2m 169Mi

my-dep-5f8dfc8c78-j9fqp 2m 188Mi

web-96d5df5c8-ghb6g 0m 4Mi

[root@k8s-master ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 278m 13% 1193Mi 69%

k8s-node1 173m 8% 1153Mi 67%

k8s-node2 158m 7% 1197Mi 69%

[root@k8s-master ~]# kubectl describe apiservice v1beta1.metrics.k8s.io

Name: v1beta1.metrics.k8s.io

Namespace:

Labels: <none>

Annotations: <none>

API Version: apiregistration.k8s.io/v1

Kind: APIService

Metadata:

Creation Timestamp: 2021-11-28T21:40:06Z

Resource Version: 834047

Self Link: /apis/apiregistration.k8s.io/v1/apiservices/v1beta1.metrics.k8s.io

UID: 4158e4cb-677e-4fbf-8cd4-24a2c8e417d3

Spec:

Group: metrics.k8s.io

Group Priority Minimum: 100

Insecure Skip TLS Verify: true

Service:

Name: metrics-server

Namespace: kube-system

Port: 443

Version: v1beta1

Version Priority: 100

Status:

Conditions:

Last Transition Time: 2021-11-28T21:43:04Z

Message: all checks passed

Reason: Passed

Status: True

Type: Available

Events: <none>
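
The `Available` condition shown in the describe output can also be read directly, which is handy in scripts that wait for metrics-server to become ready. A minimal sketch, assuming kubectl's JSONPath output; `apiservice_available` is a hypothetical helper name.

```shell
# Print the status of the "Available" condition of an APIService ("True" when healthy).
# apiservice_available is a hypothetical helper, not part of kubectl.
apiservice_available() {
  kubectl get apiservice "$1" \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}'
}

# Usage:
#   apiservice_available v1beta1.metrics.k8s.io
```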

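3. Managing K8s component logs

- Logs of the K8s system components

- Logs of applications deployed in the K8s cluster

  - Standard output

  - Log files

Components managed by the systemd daemon:

journalctl -u kubelet

Components deployed as Pods:

kubectl logs kube-proxy-btz4p -n kube-system

System log: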

/var/log/messages

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-z6npb 0/1 Running 1 7d6h

calico-node-4pwdc 1/1 Running 1 7d6h

calico-node-9r6zd 1/1 Running 1 7d6h

calico-node-vqzdj 1/1 Running 1 7d6h

coredns-6d56c8448f-gcgrh 1/1 Running 1 7d6h

coredns-6d56c8448f-tbsmv 1/1 Running 1 7d6h

etcd-k8s-master 1/1 Running 1 7d6h

kube-apiserver-k8s-master 1/1 Running 3 7d6h

kube-controller-manager-k8s-master 1/1 Running 8 6d19h

kube-proxy-5qpgc 1/1 Running 1 7d6h

kube-proxy-q2xfq 1/1 Running 1 7d6h

kube-proxy-tvzpd 1/1 Running 1 7d6h

kube-scheduler-k8s-master 1/1 Running 8 6d19h

metrics-server-84f9866fdf-kt2nb 1/1 Running 0 8m48s

[root@k8s-master ~]# kubectl logs kube-proxy-5qpgc -n kube-system

I1125 21:58:52.916782 1 node.go:136] Successfully retrieved node IP: 10.0.0.63

I1125 21:58:52.916870 1 server_others.go:111] kube-proxy node IP is an IPv4 address (10.0.0.63), assume IPv4 operation

W1125 21:58:55.279414 1 server_others.go:579] Unknown proxy mode "", assuming iptables proxy

I1125 21:58:55.279484 1 server_others.go:186] Using iptables Proxier.

I1125 21:58:55.279802 1 server.go:650] Version: v1.19.0

I1125 21:58:55.280128 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_max' to 131072

I1125 21:58:55.280151 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I1125 21:58:55.280431 1 conntrack.go:83] Setting conntrack hashsize to 32768

I1125 21:58:55.284637 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400

I1125 21:58:55.284685 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600

I1125 21:58:55.285024 1 config.go:315] Starting service config controller

I1125 21:58:55.285036 1 shared_informer.go:240] Waiting for caches to sync for service config

I1125 21:58:55.285053 1 config.go:224] Starting endpoint slice config controller

I1125 21:58:55.285057 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

I1125 21:58:55.385243 1 shared_informer.go:247] Caches are synced for endpoint slice config

I1125 21:58:55.389779 1 shared_informer.go:247] Caches are synced for service config

[root@k8s-master ~]# journalctl -u kubelet

-- Logs begin at Fri 2021-11-26 05:56:08 CST, end at Mon 2021-11-29 05:40:22 CST. --

Nov 26 05:56:34 k8s-master systemd[1]: Started kubelet: The Kubernetes Node Agent.

Nov 26 05:57:18 k8s-master kubelet[1437]: I1126 05:57:18.855799 1437 server.go:411] Version: v1.19.0

Nov 26 05:57:18 k8s-master kubelet[1437]: I1126 05:57:18.856204 1437 server.go:831] Client rotation is

Nov 26 05:57:18 k8s-master kubelet[1437]: I1126 05:57:18.941372 1437 certificate_store.go:130] Loading

Nov 26 05:57:19 k8s-master kubelet[1437]: I1126 05:57:19.204101 14

···
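
Both `kubectl logs` for the components and `journalctl -u kubelet` print klog-style lines whose first letter is the severity (I, W, E, F). A minimal sketch for keeping only selected severities, assuming the header layout seen above (letter, four-digit date, timestamp with microseconds); `klog_filter` is a hypothetical helper name.

```shell
# Keep only klog lines whose severity letter is in $1 (any of I, W, E, F), reading stdin.
# klog_filter is a hypothetical helper; it matches the header at line start or after whitespace,
# so it works for both raw component logs and journalctl-prefixed lines.
klog_filter() {
  grep -E "(^|[[:space:]])[$1][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{6} "
}

# Usage:
#   kubectl logs kube-proxy-5qpgc -n kube-system | klog_filter WEF
#   journalctl -u kubelet --no-pager | klog_filter WEF
```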

[root@k8s-master ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-97769f7c7-z6npb 0/1 Running 1 7d14h 10.244.235.194 k8s-master <none> <none>

calico-node-4pwdc 1/1 Running 1 7d14h 10.0.0.62 k8s-node1 <none> <none>

calico-node-9r6zd 1/1 Running 1 7d14h 10.0.0.63 k8s-node2 <none> <none>

calico-node-vqzdj 1/1 Running 1 7d14h 10.0.0.61 k8s-master <none> <none>

coredns-6d56c8448f-gcgrh 1/1 Running 1 7d15h 10.244.169.137 k8s-node2 <none> <none>

coredns-6d56c8448f-tbsmv 1/1 Running 1 7d15h 10.244.36.76 k8s-node1 <none> <none>

etcd-k8s-master 1/1 Running 1 7d15h 10.0.0.61 k8s-master <none> <none>

kube-apiserver-k8s-master 1/1 Running 3 7d15h 10.0.0.61 k8s-master <none> <none>

kube-controller-manager-k8s-master 1/1 Running 8 7d3h 10.0.0.61 k8s-master <none> <none>

kube-proxy-5qpgc 1/1 Running 1 7d15h 10.0.0.63 k8s-node2 <none> <none>

kube-proxy-q2xfq 1/1 Running 1 7d15h 10.0.0.62 k8s-node1 <none> <none>

kube-proxy-tvzpd 1/1 Running 1 7d15h 10.0.0.61 k8s-master <none> <none>

kube-scheduler-k8s-master 1/1 Running 8 7d3h 10.0.0.61 k8s-master <none> <none>

metrics-server-84f9866fdf-kt2nb 1/1 Running 0 8h 10.244.36.77 k8s-node1 <none> <none>

[root@k8s-node2 ~]# docker ps|grep kube-proxy

4e41b2e09542 bc9c328f379c "/usr/local/bin/kube…" 3 days ago Up 3 days k8s_kube-proxy_kube-proxy-5qpgc_kube-system_30dbaf0f-20e7-4eea-9cf1-b86a8989ff0b_1

82fbe66c78f8 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 3 days ago Up 3 days k8s_POD_kube-proxy-5qpgc_kube-system_30dbaf0f-20e7-4eea-9cf1-b86a8989ff0b_1

[root@k8s-node2 ~]# cd /var/lib/docker/containers/4e41b2e09542fcd3f101ac502edc4b1a61cb676889b1322e87f27a8958c80691/

[root@k8s-node2 4e41b2e09542fcd3f101ac502edc4b1a61cb676889b1322e87f27a8958c80691]# ls

4e41b2e09542fcd3f101ac502edc4b1a61cb676889b1322e87f27a8958c80691-json.log config.v2.json mounts

checkpoints hostconfig.json

[root@k8s-node2 4e41b2e09542fcd3f101ac502edc4b1a61cb676889b1322e87f27a8958c80691]# cat 4e41b2e09542fcd3f101ac502edc4b1a61cb676889b1322e87f27a8958c80691-json.log

{"log":"I1125 21:58:52.916782 1 node.go:136] Successfully retrieved node IP: 10.0.0.63\n","stream":"stderr","time":"2021-11-25T21:58:52.917118595Z"}

{"log":"I1125 21:58:52.916870 1 server_others.go:111] kube-proxy node IP is an IPv4 address (10.0.0.63), assume IPv4 operation\n","stream":"stderr","time":"2021-11-25T21:58:52.917150453Z"}

{"log":"W1125 21:58:55.279414 1 server_others.go:579] Unknown proxy mode \"\", assuming iptables proxy\n","stream":"stderr","time":"2021-11-25T21:58:55.282090971Z"}

{"log":"I1125 21:58:55.279484 1 server_others.go:186] Using iptables Proxier.\n","stream":"stderr","time":"2021-11-25T21:58:55.282114351Z"}

{"log":"I1125 21:58:55.279802 1 server.go:650] Version: v1.19.0\n","stream":"stderr","time":"2021-11-25T21:58:55.28211819Z"}

{"log":"I1125 21:58:55.280128 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_max' to 131072\n","stream":"stderr","time":"2021-11-25T21:58:55.282121099Z"}

{"log":"I1125 21:58:55.280151 1 conntrack.go:52] Setting nf_conntrack_max to 131072\n","stream":"stderr","time":"2021-11-25T21:58:55.282123805Z"}

{"log":"I1125 21:58:55.280431 1 conntrack.go:83] Setting conntrack hashsize to 32768\n","stream":"stderr","time":"2021-11-25T21:58:55.282126419Z"}

{"log":"I1125 21:58:55.284637 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400\n","stream":"stderr","time":"2021-11-25T21:58:55.286848007Z"}

{"log":"I1125 21:58:55.284685 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600\n","stream":"stderr","time":"2021-11-25T21:58:55.286873385Z"}

{"log":"I1125 21:58:55.285024 1 config.go:315] Starting service config controller\n","stream":"stderr","time":"2021-11-25T21:58:55.286877186Z"}

{"log":"I1125 21:58:55.285036 1 shared_informer.go:240] Waiting for caches to sync for service config\n","stream":"stderr","time":"2021-11-25T21:58:55.286879992Z"}

{"log":"I1125 21:58:55.285053 1 config.go:224] Starting endpoint slice config controller\n","stream":"stderr","time":"2021-11-25T21:58:55.286882683Z"}

{"log":"I1125 21:58:55.285057 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config\n","stream":"stderr","time":"2021-11-25T21:58:55.286885414Z"}

{"log":"I1125 21:58:55.385243 1 shared_informer.go:247] Caches are synced for endpoint slice config \n","stream":"stderr","time":"2021-11-25T21:58:55.39116737Z"}

{"log":"I1125 21:58:55.389779 1 shared_informer.go:247] Caches are synced for service config \n","stream":"stderr","time":"2021-11-25T21:58:55.391190784Z"}

[root@k8s-master ~]# kubectl logs kube-proxy-5qpgc -n kube-system

I1125 21:58:52.916782 1 node.go:136] Successfully retrieved node IP: 10.0.0.63

I1125 21:58:52.916870 1 server_others.go:111] kube-proxy node IP is an IPv4 address (10.0.0.63), assume IPv4 operation

W1125 21:58:55.279414 1 server_others.go:579] Unknown proxy mode "", assuming iptables proxy

I1125 21:58:55.279484 1 server_others.go:186] Using iptables Proxier.

I1125 21:58:55.279802 1 server.go:650] Version: v1.19.0

I1125 21:58:55.280128 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_max' to 131072

I1125 21:58:55.280151 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I1125 21:58:55.280431 1 conntrack.go:83] Setting conntrack hashsize to 32768

I1125 21:58:55.284637 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400

I1125 21:58:55.284685 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600

I1125 21:58:55.285024 1 config.go:315] Starting service config controller

I1125 21:58:55.285036 1 shared_informer.go:240] Waiting for caches to sync for service config

I1125 21:58:55.285053 1 config.go:224] Starting endpoint slice config controller

I1125 21:58:55.285057 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

I1125 21:58:55.385243 1 shared_informer.go:247] Caches are synced for endpoint slice config

I1125 21:58:55.389779 1 shared_informer.go:247] Caches are synced for service config

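4. Managing K8s application logs

View a container's standard output logs:

kubectl logs <pod-name>

kubectl logs -f <pod-name>

kubectl logs -f <pod-name> -c <container-name>

Path of the standard output log on the host:

/var/lib/docker/containers/<container-id>/<container-id>-json.log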
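
The host path can be assembled from the full container ID, as in the kube-proxy walkthrough earlier. A minimal sketch, assuming Docker's default data root `/var/lib/docker` and the json-file logging driver; `docker_log_path` is a hypothetical helper name.

```shell
# Build the json-file log path for a full (64-character) container ID.
# Assumes Docker's default data root /var/lib/docker and the json-file logging driver.
# docker_log_path is a hypothetical helper, not a docker subcommand.
docker_log_path() {
  printf '/var/lib/docker/containers/%s/%s-json.log\n' "$1" "$1"
}

# Usage on the node that runs the container:
#   id=$(docker ps --no-trunc -q --filter name=k8s_kube-proxy)
#   tail -f "$(docker_log_path "$id")"
# Docker can also report the path itself:
#   docker inspect --format '{{.LogPath}}' "$id"
```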

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 4d

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 4d

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 4d

web-96d5df5c8-ghb6g 1/1 Running 1 6d18h

[root@k8s-master ~]# kubectl logs web-96d5df5c8-ghb6g

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2021/11/25 21:58:58 [notice] 1#1: using the "epoll" event method

2021/11/25 21:58:58 [notice] 1#1: nginx/1.21.4

2021/11/25 21:58:58 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)

2021/11/25 21:58:58 [notice] 1#1: OS: Linux 3.10.0-1160.45.1.el7.x86_64

2021/11/25 21:58:58 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:104

[root@k8s-master ~]# kubectl run nginx-php --image=lizhenliang/nginx-php

pod/nginx-php created

[root@k8s-master ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 4d9h

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 4d9h

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 4d9h

nginx-php 1/1 Running 0 2m37s

web-96d5df5c8-ghb6g 1/1 Running 1 7d3h

[root@k8s-master ~]# kubectl exec -it nginx-php -- bash

[root@nginx-php local]# cd nginx/logs/

[root@nginx-php logs]# ls

access.log error.log

[root@nginx-php logs]# tail -f access.log

10.0.0.62 - - [29/Nov/2021:15:00:50 +0800] "GET / HTTP/1.1" 403 146 "-" "curl/7.29.0"

10.0.0.62 - - [29/Nov/2021:15:02:52 +0800] "GET /status.html HTTP/1.1" 200 3 "-" "curl/7.29.0"

[root@k8s-node1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 4d9h 10.244.36.74 k8s-node1 <none> <none>

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 4d9h 10.244.36.75 k8s-node1 <none> <none>

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 4d9h 10.244.169.139 k8s-node2 <none> <none>

nginx-php 1/1 Running 0 4m27s 10.244.36.78 k8s-node1 <none> <none>

web-96d5df5c8-ghb6g 1/1 Running 1 7d3h 10.244.36.73 k8s-node1 <none> <none>

[root@k8s-node1 ~]# curl 10.244.36.78

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx</center>

</body>

</html>

[root@k8s-node1 ~]# curl 10.244.36.78/status.html

ok

[root@k8s-master ~]# vi web.yaml

apiVersion: v1
kind: Pod
metadata:
  name: web2
spec:
  containers:
  - name: web
    image: lizhenliang/nginx-php
    volumeMounts:
    - name: logs
      mountPath: /usr/local/nginx/logs
  volumes:
  - name: logs
    emptyDir: {}

[root@k8s-master ~]# kubectl apply -f web.yaml

pod/web2 created

[root@k8s-master ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 4d9h

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 4d9h

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 4d9h

nginx-php 1/1 Running 0 18m

web-96d5df5c8-ghb6g 1/1 Running 1 7d3h

web2 1/1 Running 0 96s

[root@k8s-master ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 4d9h 10.244.36.74 k8s-node1 <none> <none>

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 4d9h 10.244.36.75 k8s-node1 <none> <none>

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 4d9h 10.244.169.139 k8s-node2 <none> <none>

nginx-php 1/1 Running 0 18m 10.244.36.78 k8s-node1 <none> <none>

web-96d5df5c8-ghb6g 1/1 Running 1 7d3h 10.244.36.73 k8s-node1 <none> <none>

web2 1/1 Running 0 102s 10.244.169.143 k8s-node2 <none> <none>

[root@k8s-master ~]# curl 10.244.169.143

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx</center>

</body>

</html>

[root@k8s-master ~]# curl 10.244.169.143/status.html

ok

[root@k8s-node2 ~]# docker ps|grep web2

1aa9b982094d lizhenliang/nginx-php "docker-entrypoint.s…" About a minute ago Up About a minute k8s_web_web2_default_b78138a1-eea8-4073-b122-2a974c0d7d5f_0

903b19602f50 registry.aliyuncs.com/google_containers/pause:3.2 "/pause" 2 minutes ago Up 2 minutes k8s_POD_web2_default_b78138a1-eea8-4073-b122-2a974c0d7d5f_0

[root@k8s-node2 ~]# cd /var/lib/kubelet/pods/b78138a1-eea8-4073-b122-2a974c0d7d5f/

[root@k8s-node2 b78138a1-eea8-4073-b122-2a974c0d7d5f]# cd

[root@k8s-node2 ~]# cd /var/lib/kubelet/pods/b78138a1-eea8-4073-b122-2a974c0d7d5f/volumes/kubernetes.io~empty-dir/

[root@k8s-node2 kubernetes.io~empty-dir]# ls

logs

[root@k8s-node2 kubernetes.io~empty-dir]# cd logs/

[root@k8s-node2 logs]# ls

access.log error.log

[root@k8s-node2 logs]# cat access.log

[root@k8s-node2 logs]# tail -f access.log

10.244.235.192 - - [29/Nov/2021:15:53:58 +0800] "GET / HTTP/1.1" 403 146 "-" "curl/7.29.0"

10.244.235.192 - - [29/Nov/2021:15:54:30 +0800] "GET /status.html HTTP/1.1" 200 3 "-" "curl/7.29.0"

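Log request path: kubectl -> apiserver -> kubelet -> container

Application logs that a container writes to files can be exposed on the host by mounting an emptyDir volume at the log directory. An emptyDir lives only as long as the Pod stays on that node, so the files are removed when the Pod is deleted.

Path on the host:

/var/lib/kubelet/pods/<pod-id>/volumes/kubernetes.io~empty-dir/logs/access.log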
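
The `<pod-id>` in this path is the Pod UID, which can be looked up with kubectl rather than through `docker ps`. A minimal sketch, assuming the kubelet's default root directory `/var/lib/kubelet`; `emptydir_host_path` is a hypothetical helper name.

```shell
# Build the host directory that backs an emptyDir volume of a Pod.
# Assumes the kubelet's default root directory /var/lib/kubelet.
# emptydir_host_path is a hypothetical helper, not a kubectl feature.
emptydir_host_path() {
  pod_uid=$1
  volume_name=$2
  printf '/var/lib/kubelet/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' "$pod_uid" "$volume_name"
}

# Usage: look up the Pod UID, then inspect the directory on the node that runs the Pod.
#   uid=$(kubectl get pod web2 -o jsonpath='{.metadata.uid}')
#   ls "$(emptydir_host_path "$uid" logs)"
```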

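Create a sidecar container in the Pod that reads the application container's log file and prints it to standard output: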

apiVersion: v1
kind: Pod
metadata:
  name: logs
spec:
  containers:
  - name: web
    image: lizhenliang/nginx-php
    volumeMounts:
    - name: logs
      mountPath: /usr/local/nginx/logs
  - name: log
    image: busybox
    args: [/bin/sh, -c, 'tail -f /opt/access.log']
    volumeMounts:
    - name: logs
      mountPath: /opt
  volumes:
  - name: logs
    emptyDir: {}

[root@k8s-master ~]# kubectl apply -f pod.yaml

pod/logs created

[root@k8s-master ~]# kubectl get po -w

NAME READY STATUS RESTARTS AGE

logs 0/2 ContainerCreating 0 19s

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 4d10h

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 4d10h

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 4d10h

nginx-php 1/1 Running 0 67m

web-96d5df5c8-ghb6g 1/1 Running 1 7d4h

web2 1/1 Running 0 50m

logs 2/2 Running 0 32s

^C[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

logs 2/2 Running 0 103s 10.244.36.79 k8s-node1 <none> <none>

my-dep-5f8dfc8c78-dvxp8 1/1 Running 1 4d10h 10.244.36.74 k8s-node1 <none> <none>

my-dep-5f8dfc8c78-f4ln4 1/1 Running 1 4d10h 10.244.36.75 k8s-node1 <none> <none>

my-dep-5f8dfc8c78-j9fqp 1/1 Running 1 4d10h 10.244.169.139 k8s-node2 <none> <none>

nginx-php 1/1 Running 0 69m 10.244.36.78 k8s-node1 <none> <none>

web-96d5df5c8-ghb6g 1/1 Running 1 7d4h 10.244.36.73 k8s-node1 <none> <none>

web2 1/1 Running 0 52m 10.244.169.143 k8s-node2 <none> <none>

[root@k8s-master ~]# curl 10.244.36.79/status.html

ok

[root@k8s-master ~]# kubectl exec -it logs -- bash

Defaulting container name to web.

Use 'kubectl describe pod/logs -n default' to see all of the containers in this pod.

[root@logs local]# exit

exit

[root@k8s-master ~]# kubectl exec -it logs -c web -- bash

[root@logs local]# cd nginx/logs/

[root@logs logs]# ls

access.log error.log

[root@logs logs]# cat access.log

10.244.235.192 - - [29/Nov/2021:16:07:28 +0800] "GET /status.html HTTP/1.1" 200 3 "-" "curl/7.29.0"

[root@logs logs]# exit

exit

[root@k8s-master ~]# kubectl exec -it logs -c log -- sh

/ # cd opt/ls

sh: cd: can't cd to opt/ls: No such file or directory

/ # cd opt/

/opt # ls

access.log error.log

/opt # cat access.log

10.244.235.192 - - [29/Nov/2021:16:07:28 +0800] "GET /status.html HTTP/1.1" 200 3 "-" "curl/7.29.0"

[root@k8s-master ~]# kubectl logs logs -c log

10.244.235.192 - - [29/Nov/2021:16:07:28 +0800] "GET /status.html HTTP/1.1" 200 3 "-" "curl/7.29.0"

[root@k8s-node1 ~]# curl 10.244.36.79

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx</center>

</body>

</html>

[root@k8s-node1 ~]# curl 10.244.36.79/status.html

ok

[root@k8s-master ~]# kubectl logs logs

error: a container name must be specified for pod logs, choose one of: [web log]

[root@k8s-master ~]# kubectl logs logs -c log -f

10.244.235.192 - - [29/Nov/2021:16:07:28 +0800] "GET /status.html HTTP/1.1" 200 3 "-" "curl/7.29.0"

10.0.0.62 - - [29/Nov/2021:16:13:36 +0800] "GET / HTTP/1.1" 403 146 "-" "curl/7.29.0"

10.0.0.62 - - [29/Nov/2021:16:13:59 +0800] "GET /status.html HTTP/1.1" 200 3 "-" "curl/7.29.0"
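
Because `kubectl logs` on a multi-container Pod needs a container name, it can be convenient to loop over all containers. A minimal sketch, assuming the container names are read from the Pod spec with JSONPath; `pod_logs_all` is a hypothetical helper name.

```shell
# Print the last N log lines of every container in a Pod, one labeled section per container.
# pod_logs_all is a hypothetical helper, not part of kubectl.
pod_logs_all() {
  pod=$1
  lines=${2:-20}
  # The JSONPath expression yields the container names separated by spaces.
  for c in $(kubectl get pod "$pod" -o jsonpath='{.spec.containers[*].name}'); do
    echo "== $pod/$c =="
    kubectl logs "$pod" -c "$c" --tail="$lines"
  done
}

# Usage:
#   pod_logs_all logs 5
```

kubectl also has a built-in `--all-containers=true` flag for `kubectl logs`; the loop above only adds a per-container header.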

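Homework

1. View the pod's logs and write the lines that contain Error to the specified file

- Pod name: web

- File: /opt/web

kubectl logs web | grep -i error > /opt/web

2. Find the pod with the given label that uses the most CPU and record it in the specified file

- Label: app=web

- File: /opt/cpu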

kubectl top pods -l app=web --sort-by="cpu" > /opt/cpu
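
The command above writes the whole sorted table to the file. If only the name of the busiest pod is wanted, the first data row can be picked out. A minimal sketch, assuming `--sort-by=cpu` lists the highest consumer first and that a header row is present; `top_row_name` is a hypothetical helper name.

```shell
# Print the first column of the first data row (the row after the header) from stdin.
# top_row_name is a hypothetical helper, not part of kubectl.
top_row_name() {
  awk 'NR == 2 { print $1 }'
}

# Usage:
#   kubectl top pods -l app=web --sort-by=cpu | top_row_name > /opt/cpu
```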

3. Create a sidecar container in a Pod that reads the application container's logs

apiVersion: v1
kind: Pod
metadata:
  name: log-counter
spec:
  containers:
  - name: web
    image: busybox
    command: ["/bin/sh","-c","for i in $(seq 1 100);do echo $i >> /var/log/access.log;sleep 1;done"]
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  - name: log
    image: busybox
    command: ["/bin/sh","-c","tail -f /var/log/access.log"]
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  volumes:
  - name: varlog
    emptyDir: {}
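
The writer loop can be tried locally before it is put into a Pod. Brace ranges such as `{1..100}` are a bash feature and are not expanded by a plain POSIX `sh` such as the one in busybox, so the sketch below uses `seq` instead. It only simulates the shared-file pattern in a temporary directory (an assumption for illustration) and does not talk to a cluster.

```shell
# Local simulation of the writer container: append 1..5 to a log file in a temp directory.
logdir=$(mktemp -d)
for i in $(seq 1 5); do
  echo "$i" >> "$logdir/access.log"
done

# Read the file the way the sidecar would (without -f, so the command returns).
tail -n 2 "$logdir/access.log"

# In the cluster, the sidecar's output would be read with:
#   kubectl logs log-counter -c log
```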