This lab was carried out on CentOS 7.

Part 1: Install Docker

This part installs Docker. If Docker is already installed on the machine, skip it.

[root@VM-48-22-centos ~]# systemctl stop firewalld

[root@VM-48-22-centos ~]# systemctl disable firewalld

[root@VM-48-22-centos ~]# systemctl status firewalld

[root@VM-48-22-centos ~]# setenforce 0

[root@VM-48-22-centos ~]# getenforce

[root@VM-48-22-centos ~]# yum -y update

[root@VM-48-22-centos ~]# mkdir /etc/yum.repos.d/oldrepo

[root@VM-48-22-centos ~]# mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/oldrepo/

[root@VM-48-22-centos ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@VM-48-22-centos ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@VM-48-6-centos ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@VM-48-22-centos ~]# yum clean all

[root@VM-48-22-centos ~]# yum makecache fast

[root@VM-48-22-centos ~]# yum list docker-ce --showduplicates | sort -r

[root@VM-48-22-centos ~]# yum -y install docker-ce

[root@VM-48-22-centos ~]# systemctl start docker

[root@VM-48-22-centos ~]# systemctl enable docker

[root@VM-48-22-centos ~]# ps -ef | grep docker

[root@VM-48-22-centos ~]# docker version

If this step prints the Docker version, Docker has been installed successfully.

To speed up later image pulls, add a registry mirror:

[root@VM-48-22-centos ~]# vi /etc/docker/daemon.json

{

"registry-mirrors": ["https://x3n9jrcg.mirror.aliyuncs.com"]

}
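As an alternative to editing the file interactively in vi, the same content can be written non-interactively. The following is a minimal sketch and not part of the original steps: the function name write_daemon_json and the scratch directory are hypothetical, and the mirror URL is the one shown above. On a real host the target would be /etc/docker/daemon.json.

```shell
#!/bin/sh
# Hypothetical helper: write a Docker daemon.json containing one registry mirror.
# $1 = target file, $2 = mirror URL
# Note: this overwrites the target file if it already exists.
write_daemon_json() {
  target="$1"
  mirror="$2"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}

# Demonstration against a scratch directory so nothing under /etc is touched.
demo_dir="$(mktemp -d)"
write_daemon_json "$demo_dir/docker/daemon.json" "https://x3n9jrcg.mirror.aliyuncs.com"
cat "$demo_dir/docker/daemon.json"
```

Because the helper overwrites the file, it is only suitable when daemon.json holds no other settings that need to be preserved.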

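Reload the systemd configuration and restart Docker so that the mirror takes effect.

[root@VM-48-22-centos ~]# systemctl daemon-reload

[root@VM-48-22-centos ~]# systemctl restart docker

Some resources will be fetched with git later, so install git. To make sure GitHub can be reached, also edit the hosts file.

[root@VM-48-22-centos ~]# yum -y install git

[root@VM-48-22-centos ~]# vi /etc/hosts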

Add the following line:

192.30.255.112 github.com git
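If the hosts entry should be added from a script rather than in vi, it helps to avoid appending the same line twice. The sketch below is one possible way to do that; the function name add_host_entry is hypothetical, and the demonstration writes to a scratch file instead of /etc/hosts.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a hosts-style file only if it is not already there.
# $1 = hosts file, $2 = full entry line
add_host_entry() {
  hosts_file="$1"
  entry="$2"
  # -x: match whole lines only, -F: treat the entry as a fixed string
  if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
    printf '%s\n' "$entry" >> "$hosts_file"
  fi
}

# Demonstration on a scratch file; on the real machine the target would be /etc/hosts.
demo_hosts="$(mktemp)"
add_host_entry "$demo_hosts" "192.30.255.112 github.com git"
add_host_entry "$demo_hosts" "192.30.255.112 github.com git"
cat "$demo_hosts"
```

The second call is a no-op, so the scratch file ends up with a single line.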

Part 2: Install Docker-Compose

This part installs Docker-Compose. If it is already installed, skip it.

[root@VM-48-6-centos ~]# curl -L https://get.daocloud.io/docker/compose/releases/download/1.27.4/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 423 100 423 0 0 476 0 --:--:-- --:--:-- --:--:-- 476

100 11.6M 100 11.6M 0 0 6676k 0 0:00:01 0:00:01 --:--:-- 6676k

[root@VM-48-6-centos ~]# chmod +x /usr/local/bin/docker-compose

[root@VM-48-6-centos ~]# docker-compose --version

docker-compose version 1.27.4, build 40524192

If the docker-compose version number is printed, the installation succeeded.
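The download URL in the curl command above is assembled from the output of uname -s and uname -m, so the binary matches the operating system and CPU architecture of the machine. The following sketch only prints the resulting URL and downloads nothing; the function name compose_url is hypothetical, while the mirror host and version are the ones used above.

```shell
#!/bin/sh
# Hypothetical helper: build the docker-compose download URL for this machine.
# $1 = docker-compose version, e.g. 1.27.4
compose_url() {
  version="$1"
  os="$(uname -s)"    # kernel name, e.g. Linux
  arch="$(uname -m)"  # machine hardware name, e.g. x86_64
  printf 'https://get.daocloud.io/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
    "$version" "$os" "$arch"
}

compose_url 1.27.4
```

On an x86_64 Linux host the file name at the end of the URL resolves to docker-compose-Linux-x86_64.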

Part 3: Install docker-spark

Now set up the Spark environment in Docker. First download the compose file and the Hadoop environment file:

[root@VM-48-6-centos ~]# wget https://raw.githubusercontent.com/zq2599/blog_demos/master/sparkdockercomposefiles/docker-compose.yml

[root@VM-48-6-centos ~]# wget https://raw.githubusercontent.com/zq2599/blog_demos/master/sparkdockercomposefiles/hadoop.env

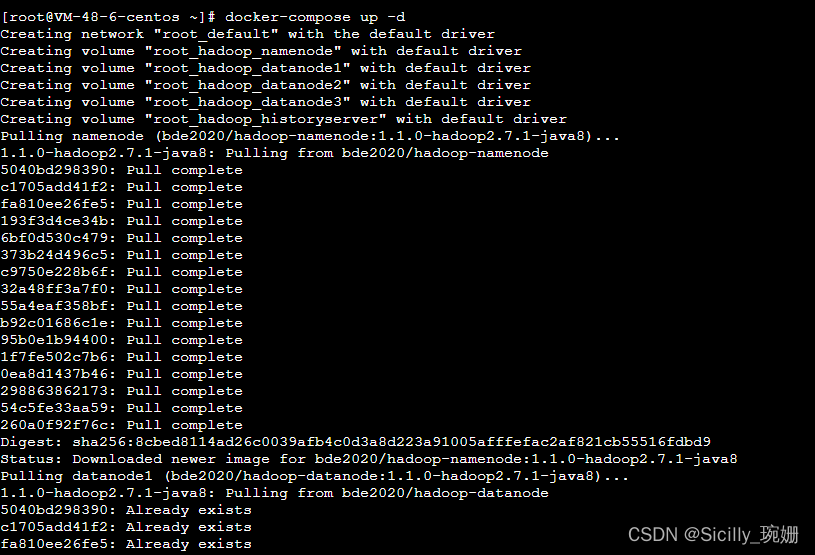

[root@VM-48-6-centos ~]# docker-compose up -d

The images start to download. Wait until all images have been pulled.

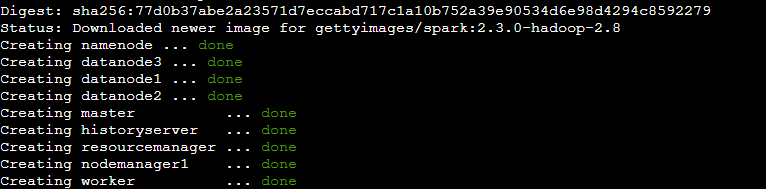

The running containers can now be listed:

[root@VM-48-6-centos ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2109d9181081 gettyimages/spark:2.3.0-hadoop-2.8 "bin/spark-class org…" 2 hours ago Up 2 hours 7012-7015/tcp, 8881/tcp, 0.0.0.0:8081->8081/tcp, :::8081->8081/tcp worker

38f1e94ac3f6 bde2020/hadoop-nodemanager:1.1.0-hadoop2.7.1-java8 "/entrypoint.sh /run…" 2 hours ago Up 2 hours (healthy) 8042/tcp nodemanager1

cf49696d67d6 bde2020/hadoop-resourcemanager:1.1.0-hadoop2.7.1-java8 "/entrypoint.sh /run…" 2 hours ago Up 2 hours (healthy) 8088/tcp resourcemanager

0d1a21c3caa4 bde2020/hadoop-historyserver:1.1.0-hadoop2.7.1-java8 "/entrypoint.sh /run…" 2 hours ago Up 2 hours (healthy) 8188/tcp historyserver

4c6ab11527a8 gettyimages/spark:2.3.0-hadoop-2.8 "bin/spark-class org…" 2 hours ago Up 2 hours 0.0.0.0:4040->4040/tcp, :::4040->4040/tcp, 0.0.0.0:6066->6066/tcp, :::6066->6066/tcp, 0.0.0.0:7077->7077/tcp, :::7077->7077/tcp, 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp, 7001-7005/tcp master

82111784c49d bde2020/hadoop-datanode:1.1.0-hadoop2.7.1-java8 "/entrypoint.sh /run…" 2 hours ago Up 2 hours (healthy) 50075/tcp datanode2

23fd1c61f33c bde2020/hadoop-datanode:1.1.0-hadoop2.7.1-java8 "/entrypoint.sh /run…" 2 hours ago Up 2 hours (healthy) 50075/tcp datanode1

6ebfca37650f bde2020/hadoop-datanode:1.1.0-hadoop2.7.1-java8 "/entrypoint.sh /run…" 2 hours ago Up 2 hours (healthy) 50075/tcp datanode3

2e6d48ba2efe bde2020/hadoop-namenode:1.1.0-hadoop2.7.1-java8 "/entrypoint.sh /run…" 2 hours ago Up 2 hours (healthy) 0.0.0.0:50070->50070/tcp, :::50070->50070/tcp namenode
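With nine containers in the listing, it is easy to overlook one that failed to start. The sketch below compares a list of container names against the names seen in the docker ps output above and prints the ones that are missing. The function name missing_containers and the variable EXPECTED are hypothetical; the demonstration uses a hard-coded list so that it runs without Docker.

```shell
#!/bin/sh
# Container names taken from the docker ps listing above.
EXPECTED="master worker namenode datanode1 datanode2 datanode3 resourcemanager nodemanager1 historyserver"

# Hypothetical helper: read container names from stdin (one per line)
# and print every expected name that is not among them.
missing_containers() {
  running="$(cat)"
  for name in $EXPECTED; do
    # -x: whole-line match, -F: fixed string, so "datanode1" does not match "datanode10"
    if ! printf '%s\n' "$running" | grep -qxF "$name"; then
      printf '%s\n' "$name"
    fi
  done
}

# Demonstration with a hard-coded list in which worker and datanode3 are absent.
printf '%s\n' master namenode datanode1 datanode2 resourcemanager nodemanager1 historyserver \
  | missing_containers
```

On the real host the input would come from Docker itself, for example docker ps --format '{{.Names}}' | missing_containers. Empty output means every expected container is running.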

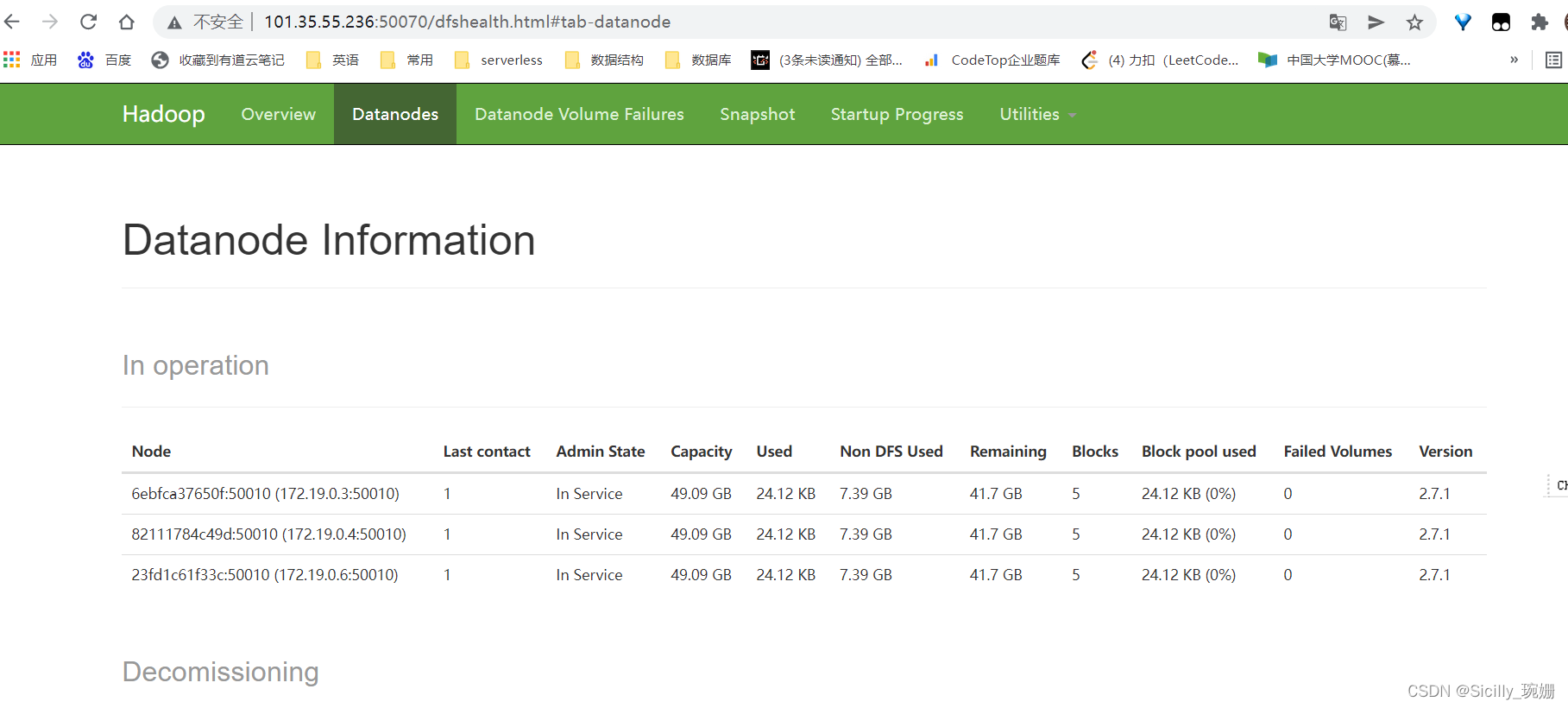

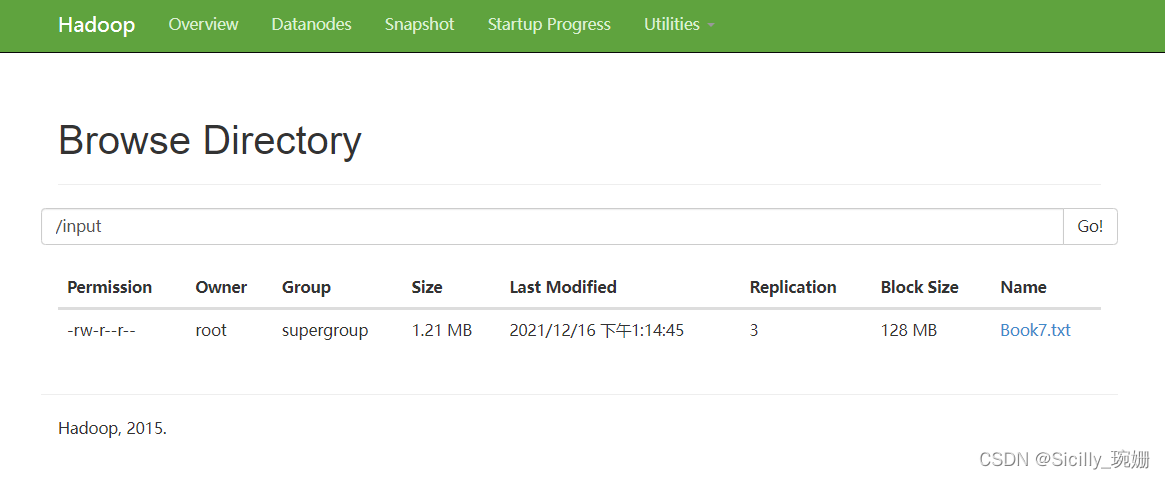

View HDFS in the browser:

http://101.35.55.236:50070/ (replace 101.35.55.236 with the IP address of your machine)

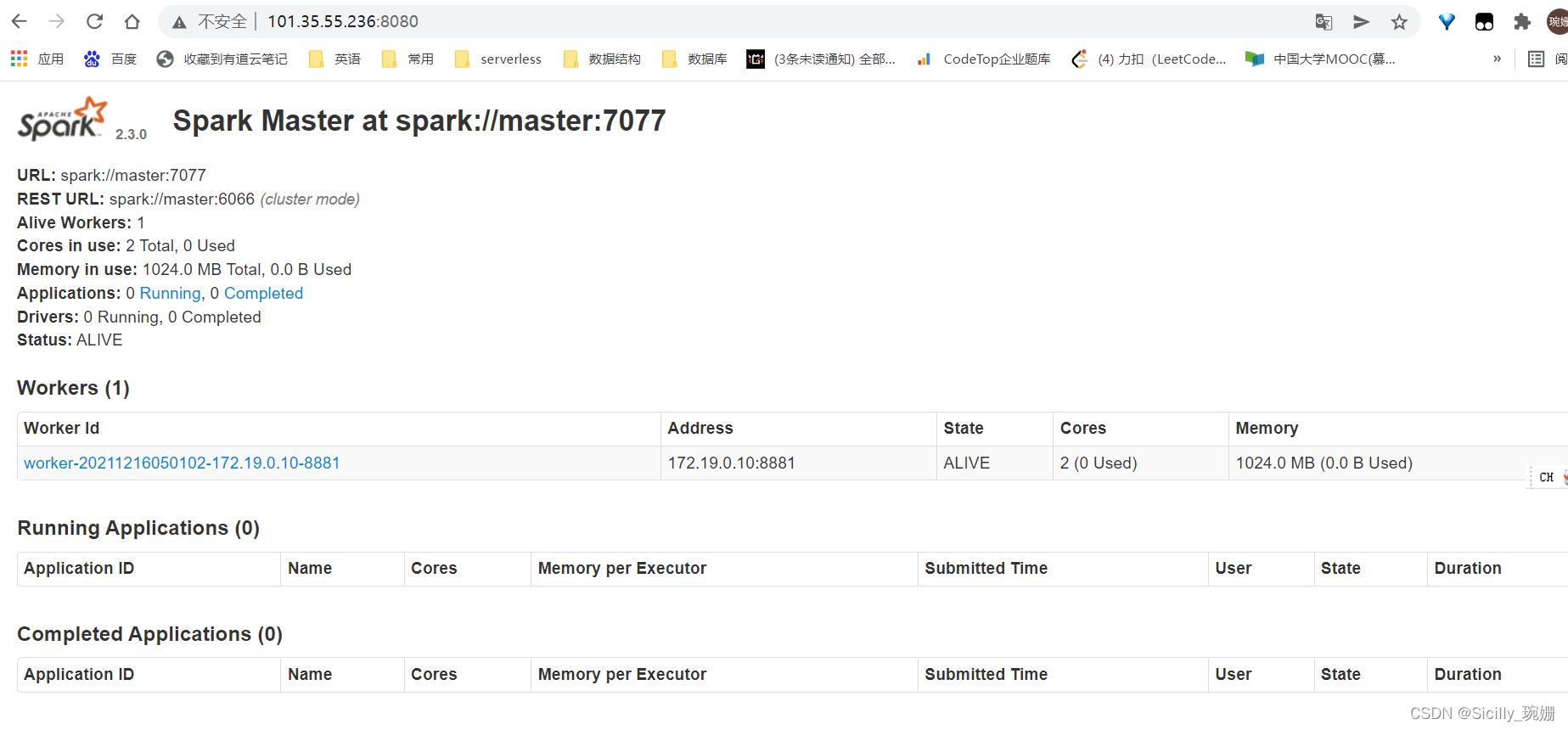

View Spark in the browser:

http://101.35.55.236:8080/ (replace 101.35.55.236 with the IP address of your machine)

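Part 4: Run the WordCount program

Prepare a txt file (mine is Book7.txt).

Upload the txt file to the input_files directory on the host. This directory is mounted into the namenode container, so the file automatically appears inside the container.

Create the /input directory in HDFS through the namenode container.

Upload the file from inside the container to HDFS.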

[root@VM-48-6-centos ~]# ll

total 24

drwxr-xr-x 4 root root 4096 Dec 16 13:00 conf

drwxr-xr-x 2 root root 4096 Dec 16 13:00 data

-rw-r--r-- 1 root root 3046 Dec 16 12:56 docker-compose.yml

-rw-r--r-- 1 root root 1189 Dec 16 12:57 hadoop.env

drwxr-xr-x 2 root root 4096 Dec 16 13:00 input_files

drwxr-xr-x 2 root root 4096 Dec 16 13:00 jars

[root@VM-48-6-centos ~]# cd input_files/

[root@VM-48-6-centos input_files]# ls

Book7.txt

[root@VM-48-6-centos input_files]# docker exec namenode hdfs dfs -mkdir /input

[root@VM-48-6-centos input_files]# cd ..

[root@VM-48-6-centos ~]# docker exec namenode hdfs dfs -put /input_files/Book7.txt /input

Check the HDFS page in the browser to confirm that the file has been uploaded.

Method 1: run in spark-shell

[root@VM-48-6-centos ~]# docker exec -it master spark-shell --executor-memory 512M --total-executor-cores 2

2021-12-16 05:19:59 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://localhost:4040

Spark context available as 'sc' (master = spark://master:7077, app id = app-20211216052007-0000).

Spark session available as 'spark'.

Welcome to

      ____              __

     / __/__  ___ _____/ /__

    _\ \/ _ \/ _ `/ __/  '_/

   /___/ .__/\_,_/_/ /_/\_\   version 2.3.0

      /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_131)

Type in expressions to have them evaluated.

Type :help for more information.

scala> sc.textFile("hdfs://namenode:8020/input/Book7.txt").flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _).sortBy(_._2,false).take(10).foreach(println)

(,12132)

(the,10349)

(and,5955)

(to,4871)

(of,4138)

(a,3442)

(he,2844)

(Harry,2735)

(was,2674)

(his,2492)
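The first entry of the result, (,12132), is the empty string. This is most likely because split(" ") produces an empty token wherever the text contains consecutive spaces, a leading space, or an empty line. Adding a step such as .filter(_.nonEmpty) after flatMap would drop these tokens. The effect can be reproduced locally with a shell pipeline that mirrors the same split, count, sort and take steps. This is only an analogue for illustration, not Spark code: the function name top_words is hypothetical, and unlike Java's split, tr also keeps trailing empty tokens.

```shell
#!/bin/sh
# Hypothetical helper: print the N most frequent space-separated tokens of a file
# in the same "(word,count)" form as the spark-shell output.
# $1 = text file, $2 = number of entries
top_words() {
  tr ' ' '\n' < "$1" \
    | sort \
    | uniq -c \
    | sort -rn \
    | awk '{ print "(" $2 "," $1 ")" }' \
    | head -n "$2"
}

# Demonstration: three consecutive spaces yield two empty tokens.
sample="$(mktemp)"
printf 'a   a b a\n' > "$sample"
top_words "$sample" 3
```

For the sample line this prints (a,3), (,2) and (b,1), one per line, which shows the empty token being counted like any other word.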

After it finishes, press Ctrl+C (or type :quit) to leave spark-shell.

Method 2: run with spark-submit

A jar package needs to be prepared first:

[root@VM-48-6-centos ~]# ls

conf data docker-compose.yml hadoop.env input_files jars

[root@VM-48-6-centos ~]# cd jars

[root@VM-48-6-centos jars]# wget https://raw.githubusercontent.com/zq2599/blog_demos/master/sparkdockercomposefiles/sparkwordcount-1.0-SNAPSHOT.jar

--2021-12-16 13:35:52-- https://raw.githubusercontent.com/zq2599/blog_demos/master/sparkdockercomposefiles/sparkwordcount-1.0-SNAPSHOT.jar

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 5915 (5.8K) [application/octet-stream]

Saving to: ‘sparkwordcount-1.0-SNAPSHOT.jar’

100%[======================================================================================================================>] 5,915 --.-K/s in 0s

2021-12-16 13:35:54 (90.7 MB/s) - ‘sparkwordcount-1.0-SNAPSHOT.jar’ saved [5915/5915]

[root@VM-48-6-centos jars]# docker exec -it master spark-submit \

> --class com.bolingcavalry.sparkwordcount.WordCount \

> --executor-memory 512m \

> --total-executor-cores 2 \

> /root/jars/sparkwordcount-1.0-SNAPSHOT.jar \

> namenode \

> 8020 \

> Book7.txt

When the job finishes it prints a lot of output, including:

2021-12-16 05:36:25 INFO DAGScheduler:54 - ResultStage 4 (take at WordCount.java:78) finished in 0.157 s

2021-12-16 05:36:25 INFO DAGScheduler:54 - Job 1 finished: take at WordCount.java:78, took 0.442847 s

2021-12-16 05:36:25 INFO WordCount:90 - top 10 word :

12132

the 10349

and 5955

to 4871

of 4138

a 3442

he 2844

Harry 2735

was 2674

his 2492

References

Installing docker-compose

https://www.cnblogs.com/xiao987334176/p/12377113.html

Setting up a Spark cluster in Docker

https://blog.csdn.net/boling_cavalry/article/details/86851069

https://blog.csdn.net/weixin_42588332/article/details/119515003