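Kubernetes Deployment

Overview

This article walks through deploying Kubernetes (k8s for short) with kubeadm, a simple and convenient installer. The version used is 1.19.0.

k8s gets talked about all the time, but many of the tutorials I found online are disorganized. Following them, I kept running into missing files or images that could not be downloaded because of the GFW, so the installation failed again and again. I recently went through the process once more, wrote down my own deployment steps, and am sharing them here in the hope that they give anyone currently deploying k8s a useful reference.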

Deployment Environment

Server Information

| Hostname | IP | Hardware (CPU cores / memory) | OS |
|---|---|---|---|
| k8s-master | 172.18.1.60 | 4C/4G | CentOS 7.5 |
| k8s-node1 | 172.18.1.61 | 4C/4G | CentOS 7.5 |
| k8s-node2 | 172.18.1.62 | 4C/4G | CentOS 7.5 |

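Base Configuration

All three servers in the cluster need the following configuration.

Disable the firewall

To simplify cluster setup, the firewall is disabled in this lab environment.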

systemctl stop firewalld

systemctl disable firewalld

iptables -F

Disable SELinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

setenforce 0

Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

mount -a
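
The sed command above comments out every line that contains "swap", including lines that are already commented, so running it twice stacks extra # characters. As a minimal sketch, the variant below only touches active entries and can be re-run safely. The helper names disable_swap_entries and count_active_swap_entries are made up for this example, and GNU sed (as shipped with CentOS) is assumed.

```shell
#!/bin/sh
# Hypothetical helpers for this article, not standard tools.

# Comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so re-running is harmless.
disable_swap_entries() {
    fstab_file="$1"
    sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_file"
}

# Print how many swap entries are still active (uncommented).
count_active_swap_entries() {
    grep -E -c '^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]' "$1" || true
}
```

On a real node this would be called as disable_swap_entries /etc/fstab after swapoff -a, and count_active_swap_entries /etc/fstab should then print 0.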

Configure the hosts file

cat >> /etc/hosts <<EOF

172.18.1.60 k8s-master

172.18.1.61 k8s-node1

172.18.1.62 k8s-node2

EOF
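
Because cat >> appends, re-running this step adds the same three lines to /etc/hosts again. A small sketch of an idempotent alternative follows; ensure_host_entry is a hypothetical helper name, and it only checks for an exact "IP name" line.

```shell
#!/bin/sh
# ensure_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless that exact line is already present.
# (Hypothetical helper written for this article.)
ensure_host_entry() {
    hosts_file="$1"
    ip="$2"
    name="$3"
    # -x matches the whole line, -F treats the pattern as a fixed string.
    if ! grep -q -x -F -e "$ip $name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}
```

For the servers in this article it would be called three times, for example ensure_host_entry /etc/hosts 172.18.1.60 k8s-master, and likewise for k8s-node1 and k8s-node2.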

Pass bridged traffic to iptables

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
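
The net.bridge.* keys only exist while the br_netfilter kernel module is loaded, so on a fresh host sysctl --system may report them as unknown. The sketch below shows one way to load the module persistently and to check that a sysctl file sets both keys. Both function names are hypothetical, and load_br_netfilter needs root, so it is only defined here and not called.

```shell
#!/bin/sh
# Load br_netfilter now and on every boot (run as root on each node).
# /etc/modules-load.d is read by systemd at boot; the file name k8s.conf
# is an arbitrary choice for this example.
load_br_netfilter() {
    modprobe br_netfilter
    echo br_netfilter > /etc/modules-load.d/k8s.conf
}

# bridge_sysctl_ok FILE
# Return 0 only if FILE sets both bridge-nf-call keys to 1.
bridge_sysctl_ok() {
    conf_file="$1"
    for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
        grep -E -q "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$conf_file" || return 1
    done
    return 0
}
```

After load_br_netfilter and sysctl --system, running bridge_sysctl_ok /etc/sysctl.d/k8s.conf gives a quick confirmation that the file written above is complete.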

Configure time synchronization

yum install ntpdate -y

ntpdate time.windows.com

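Install Docker

Note: the k8s version used here is 1.19, and the newest Docker release it supports is 19.03, so install that specific Docker version; otherwise later steps may fail.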

curl https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo

yum install -y docker-ce-19.03.0

systemctl enable docker && systemctl start docker

Configure a Docker registry mirror

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://t48ldfx1.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload

systemctl restart docker

Configure the k8s yum repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

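The base configuration above must be applied on every server in the cluster.

k8s master configuration

Install kubelet, kubeadm, and kubectl

This guide uses k8s 1.19.0. Other versions are untested; in principle the deployment steps should be the same, but the supported Docker versions may differ, so check which Docker versions your k8s release supports.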

yum install -y kubelet-1.19.0 kubeadm-1.19.0 kubectl-1.19.0

systemctl enable kubelet

Deploy the Kubernetes master

kubeadm init \

--apiserver-advertise-address=172.18.1.60 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.19.0 \

--service-cidr=11.62.0.0/16 \

--pod-network-cidr=11.55.0.0/16

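Parameters

--apiserver-advertise-address: the address the API server advertises to the rest of the cluster

--image-repository: the default image registry, k8s.gcr.io, is not reachable from mainland China, so the Alibaba Cloud mirror is specified here

--kubernetes-version: the k8s version, matching the packages installed above

--service-cidr: the cluster-internal virtual network used for Service IPs, which give Pods a single entry point

--pod-network-cidr: the Pod network; it must match the value in the CNI network plugin YAML deployed later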
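
The service and Pod ranges should not overlap each other or the network the nodes live on (172.18.1.x here). As a rough sketch, the following POSIX shell functions test two IPv4 CIDR blocks for overlap; ip_to_int and cidrs_overlap are names invented for this example, and 64-bit shell arithmetic is assumed.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    # Intentional word splitting on "." to get the four octets.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidrs_overlap A B
# Return 0 if the two IPv4 CIDR blocks share at least one address.
cidrs_overlap() {
    net_a=$(ip_to_int "${1%/*}")
    len_a=${1#*/}
    net_b=$(ip_to_int "${2%/*}")
    len_b=${2#*/}
    # Two blocks overlap exactly when they agree under the shorter prefix.
    if [ "$len_a" -lt "$len_b" ]; then len=$len_a; else len=$len_b; fi
    mask=$(( (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
    [ $(( $net_a & $mask )) -eq $(( $net_b & $mask )) ]
}

# Check the two ranges passed to kubeadm init in this article.
if cidrs_overlap 11.62.0.0/16 11.55.0.0/16; then
    echo "service and pod CIDRs overlap"
else
    echo "service and pod CIDRs do not overlap"
fi
```

The same function can compare each range against the node subnet before kubeadm init is run, for example cidrs_overlap 11.55.0.0/16 172.18.1.0/24 (the /24 node subnet is an assumption; the article only lists the host IPs).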

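This command may time out because of network problems; if it does, simply run it again. If the rerun stops at the preflight checks because files from the failed attempt already exist, run kubeadm reset first.

While it runs, the docker images command shows the images that have been downloaded: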

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.19.0 bc9c328f379c 16 months ago 118MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.19.0 1b74e93ece2f 16 months ago 119MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.19.0 09d665d529d0 16 months ago 111MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.19.0 cbdc8369d8b1 16 months ago 45.7MB

registry.aliyuncs.com/google_containers/etcd 3.4.9-1 d4ca8726196c 18 months ago 253MB

registry.aliyuncs.com/google_containers/coredns 1.7.0 bfe3a36ebd25 18 months ago 45.2MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 22 months ago 683kB

On success, the output looks similar to the following:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.18.1.60:6443 --token qdgxzt.gs2ickopckav5hz1 \

--discovery-token-ca-cert-hash sha256:eb1a33df519a2b61ed906a4b5bee7021567c91524cfe08ba6ecc5b97202fecc6

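The output above explains how to connect to the k8s cluster and how to add worker nodes to it.

Create a k8s admin user

useradd kadmin

Configure cluster access for the user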

mkdir -p /home/kadmin/.kube

cp /etc/kubernetes/admin.conf /home/kadmin/.kube/config

chown kadmin:kadmin -R /home/kadmin/.kube

Verify the configuration

As the kadmin user, run kubectl get ns:

su - kadmin

$ kubectl get ns

NAME STATUS AGE

default Active 8m51s

kube-node-lease Active 8m54s

kube-public Active 8m54s

kube-system Active 8m55s

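The four default k8s namespaces are listed.

k8s node configuration

Official reference:

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/#config-file

Install kubelet and kubeadm

This guide uses k8s 1.19.0. Other versions are untested, but in principle the deployment steps should be the same.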

yum install -y kubelet-1.19.0 kubeadm-1.19.0

systemctl enable kubelet

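Join the k8s cluster

Use the token printed when the master finished initializing to join the existing k8s cluster.

If the token has expired (by default after 24 hours), generate a new join command on the master as follows.

Official reference: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-join/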

kubeadm token create --print-join-command

Run the kubeadm join command on each node

kubeadm join 172.18.1.60:6443 --token qdgxzt.gs2ickopckav5hz1 \

--discovery-token-ca-cert-hash sha256:eb1a33df519a2b61ed906a4b5bee7021567c91524cfe08ba6ecc5b97202fecc6
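
A join command that was truncated while copying it between terminals fails with a fairly opaque error. The sketch below checks the two values before they are used: the token pattern is the one given in the kubeadm documentation, and the openssl pipeline in ca_cert_hash follows the kubeadm join reference for recomputing the CA hash on the master. The function names themselves are made up for this example.

```shell
#!/bin/sh
# Return 0 if the argument looks like a kubeadm bootstrap token
# ([a-z0-9]{6}.[a-z0-9]{16}).
valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -E -q -x '[a-z0-9]{6}\.[a-z0-9]{16}'
}

# Return 0 if the argument looks like a discovery hash (sha256:<64 hex digits>).
valid_ca_cert_hash() {
    printf '%s\n' "$1" | grep -E -q -x 'sha256:[0-9a-f]{64}'
}

# ca_cert_hash CERT
# Recompute the discovery hash from a CA certificate; on the master the
# certificate is /etc/kubernetes/pki/ca.crt. Defined only, not called here.
ca_cert_hash() {
    printf 'sha256:'
    openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

On the master, ca_cert_hash /etc/kubernetes/pki/ca.crt should print the same value that follows --discovery-token-ca-cert-hash in the join command.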

Wait for the join to finish. When it completes, the following message is printed:

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Configure the cluster network

Calico is used here. Project introduction: https://docs.projectcalico.org/getting-started/kubernetes/quickstart

curl https://docs.projectcalico.org/manifests/calico.yaml -o /tmp/calico.yaml

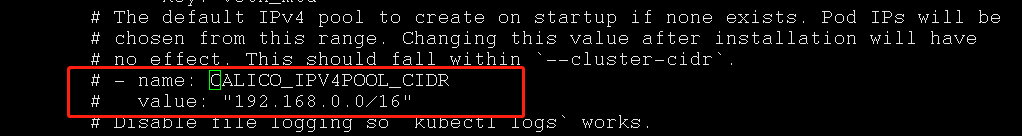

Uncomment CALICO_IPV4POOL_CIDR and set its value to the pod-network-cidr passed to kubeadm init (11.55.0.0/16 here).
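
The edit can also be scripted instead of done by hand. This is a sketch under an assumption: it expects the downloaded manifest to contain the two commented lines "# - name: CALICO_IPV4POOL_CIDR" and "#   value: "192.168.0.0/16"" in that exact form, which may differ between Calico releases, so check the file before relying on it. set_calico_pool_cidr is a hypothetical helper name, and GNU sed is assumed.

```shell
#!/bin/sh
# set_calico_pool_cidr MANIFEST CIDR
# Uncomment the CALICO_IPV4POOL_CIDR entry and replace the default pool.
# Assumes the commented default is "192.168.0.0/16" (see the note above).
set_calico_pool_cidr() {
    manifest="$1"
    cidr="$2"
    sed -i -E \
        -e 's|^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)[[:space:]]*$|\1\2|' \
        -e "s|^([[:space:]]*)#   value: \"192\.168\.0\.0/16\"[[:space:]]*\$|\1  value: \"$cidr\"|" \
        "$manifest"
}
```

For this article the call would be set_calico_pool_cidr /tmp/calico.yaml 11.55.0.0/16, followed by grep -A1 CALICO_IPV4POOL_CIDR /tmp/calico.yaml to confirm that both lines are uncommented and still aligned.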

Apply the manifest on k8s-master

kubectl apply -f /tmp/calico.yaml

Output

$ kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

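Deploy the k8s dashboard

Get the manifest

Get the recommended manifest for the k8s dashboard.

Official manifest (may require a proxy to reach from mainland China):

https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

I use a version modified from the official manifest. By default it exposes the dashboard on port 30001 of the node servers; to use a different port, change the value of nodePort.

Manifest: https://gitee.com/xiaojinran/k8s/blob/master/k8s-dashboard/dashboard.yaml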

spec:

  type: NodePort

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30001

  selector:

    k8s-app: kubernetes-dashboard

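Apply the dashboard manifest

The gitee blob link is an HTML page rather than the YAML file itself, so the commands below download from the corresponding raw path (assuming gitee's usual /raw/ URL layout).

curl https://gitee.com/xiaojinran/k8s/raw/master/k8s-dashboard/dashboard.yaml -o /tmp/k8s-dashboard.yaml

kubectl apply -f /tmp/k8s-dashboard.yaml

Output

$ curl https://gitee.com/xiaojinran/k8s/raw/master/k8s-dashboard/dashboard.yaml -o /tmp/k8s-dashboard.yaml

$ kubectl apply -f /tmp/k8s-dashboard.yaml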

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Create a login token

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
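
The describe command prints the whole secret, while the login page only needs the token value. As a small sketch, the filter below keeps just that value; extract_token is a hypothetical helper that reads kubectl describe output on standard input and relies on the "token:" label that the command prints in front of the value.

```shell
#!/bin/sh
# Read `kubectl describe secret` output on stdin and print only the token.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Usage on the master (not executed here):
#   kubectl describe secrets -n kube-system \
#       $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') \
#       | extract_token
```

The printed value can be pasted directly into the token field of the dashboard login page.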

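Log in to the k8s dashboard

Overview

Cluster node information

Verify the k8s cluster

Create an nginx test service

Let's deploy a simple nginx service from the dashboard.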

In the dashboard, click the create button

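Choose to create from a form

Fill in the app name, container image, and number of pods, expose container port 80 on port 80 of the cluster (internal IP), then click Deploy.

After a short wait, the deployment status appears.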

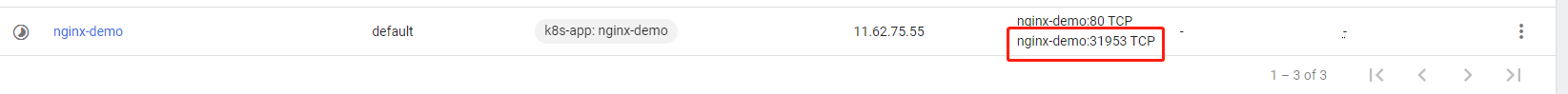

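Check the mapped port number

Access the nginx service

The nginx service can be reached at the IP of any node in the cluster on port 31953.

Summary

That covers deploying a k8s cluster with kubeadm. The method is simple and practical, and the cluster comes up without much configuration. Both k8s 1.19 and 1.20 can currently be deployed with this process. If you run into other problems during deployment, feel free to leave a comment.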