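handle_mm_fault is the architecture-independent part of page fault handling, and it is the kernel's core page-fault function. It takes three inputs: the virtual address that faulted, the vma located for it in the preceding (architecture-specific) steps, and the flags converted from the hardware error code, which encode the specific kind of fault. Its interface is as follows: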

```c
vm_fault_t handle_mm_fault(struct vm_area_struct *vma, unsigned long address,
		unsigned int flags);
```

Parameters:

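- struct vm_area_struct *vma: the vma that contains the faulting address

- unsigned long address: the address that caused the page fault

- unsigned int flags: FAULT_FLAG_xxx flags converted from the error code reported by the hardware

Return value:

- vm_fault_t: a bitmask of VM_FAULT_xxx values describing the result. 0 means the fault was handled successfully. Nonzero bits either report an error (for example VM_FAULT_OOM, VM_FAULT_SIGBUS, VM_FAULT_SIGSEGV) or carry extra information that is not an error (for example VM_FAULT_MAJOR, VM_FAULT_RETRY).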

For details on vm_fault_t and the flags, see "Linux Matters: page fault (AMD64 architecture) (user space) (2)".
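The following is a small user-space model of how a caller might classify a vm_fault_t, to make the return-value convention concrete. It makes two assumptions. First, the bit values are meant to mirror enum vm_fault_reason and VM_FAULT_ERROR in include/linux/mm_types.h of 5.8, so check them against your own tree. Second, fault_failed() and signal_for_fault() are hypothetical helpers. The second one loosely follows the branching of the x86 mm_fault_error().

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <signal.h>
#include <stdbool.h>

/*
 * User-space model of handle_mm_fault()'s vm_fault_t result.
 * Assumption: values mirror enum vm_fault_reason in 5.8's
 * include/linux/mm_types.h; check them against your kernel tree.
 */
typedef unsigned int vm_fault_t;

#define VM_FAULT_OOM            0x000001u
#define VM_FAULT_SIGBUS         0x000002u
#define VM_FAULT_MAJOR          0x000004u
#define VM_FAULT_WRITE          0x000008u
#define VM_FAULT_HWPOISON       0x000010u
#define VM_FAULT_HWPOISON_LARGE 0x000020u
#define VM_FAULT_SIGSEGV        0x000040u
#define VM_FAULT_NOPAGE         0x000100u
#define VM_FAULT_LOCKED         0x000200u
#define VM_FAULT_RETRY          0x000400u
#define VM_FAULT_FALLBACK       0x000800u

/* Bits that mean the fault could not be resolved. */
#define VM_FAULT_ERROR (VM_FAULT_OOM | VM_FAULT_SIGBUS | VM_FAULT_SIGSEGV | \
			VM_FAULT_HWPOISON | VM_FAULT_HWPOISON_LARGE | \
			VM_FAULT_FALLBACK)

/* Hypothetical helper: true if the result reports a failure. */
static bool fault_failed(vm_fault_t ret)
{
	return (ret & VM_FAULT_ERROR) != 0;
}

/*
 * Hypothetical helper loosely following x86 mm_fault_error():
 * returns the signal for a failed user fault, 0 for the OOM path,
 * or -1 for a combination the kernel would treat as a bug.
 */
static int signal_for_fault(vm_fault_t ret)
{
	assert(fault_failed(ret));	/* only meaningful for errors */
	if (ret & VM_FAULT_OOM)
		return 0;		/* kernel calls pagefault_out_of_memory() */
	if (ret & (VM_FAULT_SIGBUS | VM_FAULT_HWPOISON | VM_FAULT_HWPOISON_LARGE))
		return SIGBUS;
	if (ret & VM_FAULT_SIGSEGV)
		return SIGSEGV;
	return -1;
}
```

For example, VM_FAULT_MAJOR | VM_FAULT_LOCKED counts as a success: it is a major fault that returned a locked page. VM_FAULT_SIGSEGV, by contrast, ends in SIGSEGV for the user task.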

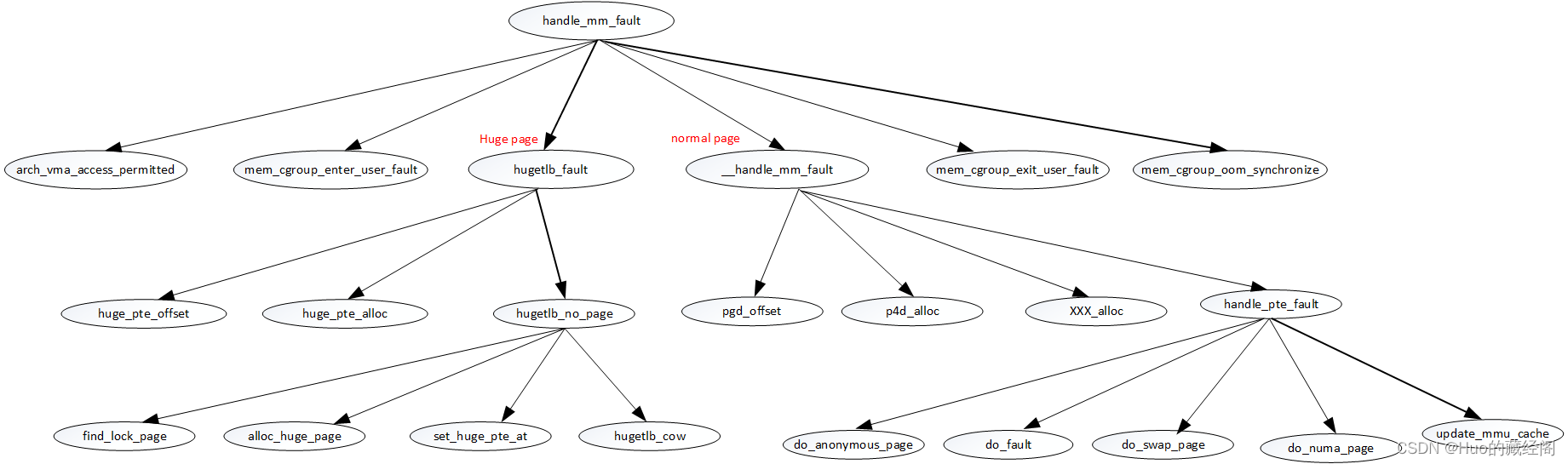

handle_mm_fault call relationships

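handle_mm_fault is fairly complex because it has to support many different scenarios, and it is arguably one of the most complex code paths in the kernel. The book *Understanding the Linux Virtual Memory Manager* is based on the 2.4 kernel, which lacked many of the features involved here. The call relationships described in this article are therefore updated against the 5.8.10 kernel source.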

handle_mm_fault

The handle_mm_fault source:

```c
/*
 * By the time we get here, we already hold the mm semaphore
 *
 * The mmap_lock may have been released depending on flags and our
 * return value. See filemap_fault() and __lock_page_or_retry().
 */
vm_fault_t handle_mm_fault(struct vm_area_struct *vma, unsigned long address,
		unsigned int flags)
{
	vm_fault_t ret;

	__set_current_state(TASK_RUNNING);

	count_vm_event(PGFAULT);
	count_memcg_event_mm(vma->vm_mm, PGFAULT);

	/* do counter updates before entering really critical section. */
	check_sync_rss_stat(current);

	if (!arch_vma_access_permitted(vma, flags & FAULT_FLAG_WRITE,
					    flags & FAULT_FLAG_INSTRUCTION,
					    flags & FAULT_FLAG_REMOTE))
		return VM_FAULT_SIGSEGV;

	/*
	 * Enable the memcg OOM handling for faults triggered in user
	 * space. Kernel faults are handled more gracefully.
	 */
	if (flags & FAULT_FLAG_USER)
		mem_cgroup_enter_user_fault();

	if (unlikely(is_vm_hugetlb_page(vma)))
		ret = hugetlb_fault(vma->vm_mm, vma, address, flags);
	else
		ret = __handle_mm_fault(vma, address, flags);

	if (flags & FAULT_FLAG_USER) {
		mem_cgroup_exit_user_fault();
		/*
		 * The task may have entered a memcg OOM situation but
		 * if the allocation error was handled gracefully (no
		 * VM_FAULT_OOM), there is no need to kill anything.
		 * Just clean up the OOM state peacefully.
		 */
		if (task_in_memcg_oom(current) && !(ret & VM_FAULT_OOM))
			mem_cgroup_oom_synchronize(false);
	}

	return ret;
}
```

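- Set the current task's state to TASK_RUNNING.

- Account the page fault: count_vm_event(PGFAULT) bumps the global PGFAULT event counter, and count_memcg_event_mm() bumps the same event for the task's memory cgroup.

- check_sync_rss_stat(): this is tied to split page-table locks. On multi-core systems a single page-table spinlock becomes contended: while one CPU holds it, the others spin waiting for it. To reduce this, the kernel can use finer-grained (split) locks. They are enabled when CONFIG_NR_CPUS (the maximum number of CPUs the kernel is built for) is at least CONFIG_SPLIT_PTLOCK_CPUS, and the two values are configured independently. With split locks the kernel also enables SPLIT_RSS_COUNTING: RSS changes are first accumulated in per-task counters (current->rss_stat) rather than in the shared mm counters. check_sync_rss_stat() counts fault events. Once more than TASK_RSS_EVENTS_THRESH events have accumulated, it calls sync_mm_rss() to fold the per-task counters into the mm. Without split locks it is an empty function.

- arch_vma_access_permitted() lets the architecture veto the access (on x86 this is where memory protection keys are checked). If the access is refused, return VM_FAULT_SIGSEGV.

- For a fault triggered from user space (FAULT_FLAG_USER), mem_cgroup_enter_user_fault() sets current->in_user_fault to 1. This enables memcg OOM handling for this fault; as the code comment notes, kernel faults are handled more gracefully.

- is_vm_hugetlb_page() checks whether vma->vm_flags has VM_HUGETLB set, i.e. whether the vma is a hugetlb huge page mapping.

- If it is, the fault is handled by hugetlb_fault().

- Otherwise, __handle_mm_fault() handles it.

- After a user fault has been handled, mem_cgroup_exit_user_fault() clears in_user_fault again.

- The task may have entered a memcg OOM state (task_in_memcg_oom()) while the result does not contain VM_FAULT_OOM. In that case the allocation failure was handled gracefully, so mem_cgroup_oom_synchronize(false) simply cleans up the OOM state and nothing is killed.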

__handle_mm_fault

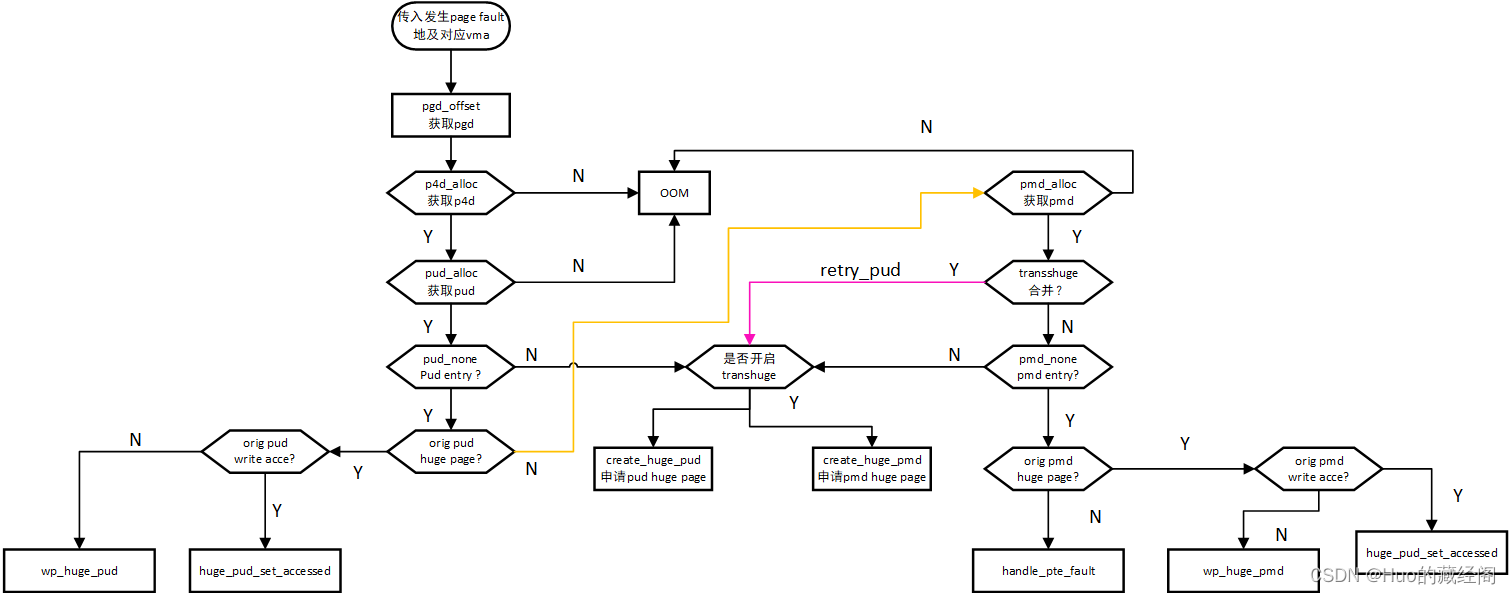

__handle_mm_fault handles faults on regular (non-hugetlb) pages and contains most of the fault-handling logic.

Its logic essentially follows the five-level page-table walk:

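- pgd_offset(): locate the pgd entry for the address in the process's pgd table.

- p4d_alloc(): get the p4d entry from the pgd entry and the address. If the p4d table does not exist, __p4d_alloc() allocates a new one from physical memory. If the allocation fails, the fault returns VM_FAULT_OOM.

- pud_alloc(): get the pud entry from the p4d entry and the address. If the pud table does not exist, __pud_alloc() allocates a new pud table.

- At the retry_pud label, check whether transparent huge pages (THP) are enabled for the vma. If they are and the pud entry is empty (pud_none), call create_huge_pud() to try to map a pud-level huge page (1 GiB on x86-64) directly. Unless it returns VM_FAULT_FALLBACK, its result is returned. Anonymous memory has no pud-level THP in this kernel, so this path mainly serves vmas whose vm_ops implement huge_fault.

- If the pud entry already exists, check whether it maps a huge page (pud_trans_huge() or pud_devmap()). If it does, and the fault is a write (FAULT_FLAG_WRITE) but the pud entry is not writable, call wp_huge_pud(). This mainly serves copy-on-write (COW): for example, after fork() the child inherits the parent's memory mapped read-only, so a write by either process to that inherited or shared memory triggers COW. If no write is involved, huge_pud_set_accessed() just marks the entry accessed and returns.

- In the remaining cases the walk continues at the pmd level. These are: THP is disabled, create_huge_pud() fell back, or the pud entry points to a regular pmd table.

- pmd_alloc(): get the pmd entry, allocating a new pmd table if none exists.

- pud_trans_unstable(): check whether a concurrent huge-pud fault raced with pmd_alloc() and turned the pud into a huge entry. If so, jump back to retry_pud.

- If the pmd entry is empty (pmd_none) and THP is enabled for the vma, call create_huge_pmd() to try a pmd-level huge page (2 MiB on x86-64).

- If the pmd entry exists, check its attributes. If it maps a huge page (pmd_trans_huge() or pmd_devmap()) and the fault is a write but the pmd is not writable, call wp_huge_pmd(). A PROT_NONE huge pmd in an accessible vma is first sent to do_huge_pmd_numa_page().

- If the pmd entry is not a huge page, it points to a regular pte table, and handling continues in handle_pte_fault().

- handle_pte_fault() dispatches the fault according to the attributes of the vma.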
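The following sketch shows what "five levels" means for the address itself by splitting a virtual address into the index used at each level. It assumes x86-64 with 5-level paging (LA57): 4 KiB pages, 512 entries per table, and shifts of 12/21/30/39/48 bits. With 4-level paging the p4d level is folded into the pgd. The helper names table_index() and split_address() are hypothetical, not kernel functions.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Assumption: x86-64 with 5-level paging (LA57), 4 KiB pages and
 * 512 entries per table. Helper names are hypothetical.
 */
#define PAGE_SHIFT	12
#define PMD_SHIFT	21
#define PUD_SHIFT	30
#define P4D_SHIFT	39
#define PGDIR_SHIFT	48
#define PTRS_PER_TABLE	512u

struct walk_indices {
	unsigned int pgd, p4d, pud, pmd, pte;	/* index into each table */
	uint64_t offset;			/* byte offset inside the page */
};

/* Each level consumes 9 address bits starting at its shift. */
static unsigned int table_index(uint64_t addr, unsigned int shift)
{
	assert(shift >= PAGE_SHIFT && shift <= PGDIR_SHIFT);
	return (unsigned int)((addr >> shift) & (PTRS_PER_TABLE - 1));
}

static struct walk_indices split_address(uint64_t addr)
{
	struct walk_indices w = {
		.pgd = table_index(addr, PGDIR_SHIFT),
		.p4d = table_index(addr, P4D_SHIFT),
		.pud = table_index(addr, PUD_SHIFT),
		.pmd = table_index(addr, PMD_SHIFT),
		.pte = table_index(addr, PAGE_SHIFT),
		.offset = addr & ((UINT64_C(1) << PAGE_SHIFT) - 1),
	};
	return w;
}
```

The same arithmetic gives the huge page size at each level: one pmd entry spans 1 << 21 bytes (2 MiB), and one pud entry spans 1 << 30 bytes (1 GiB).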

struct vm_fault

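Besides handling transparent huge pages and huge pages during the page-table walk, __handle_mm_fault has another important job. It fills in struct vm_fault, which gathers the information that later fault-handling steps need, and those steps also store their results in it. The structure is defined as follows: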

```c
/*
 * vm_fault is filled by the the pagefault handler and passed to the vma's
 * ->fault function. The vma's ->fault is responsible for returning a bitmask
 * of VM_FAULT_xxx flags that give details about how the fault was handled.
 *
 * MM layer fills up gfp_mask for page allocations but fault handler might
 * alter it if its implementation requires a different allocation context.
 *
 * pgoff should be used in favour of virtual_address, if possible.
 */
struct vm_fault {
	struct vm_area_struct *vma;	/* Target VMA */
	unsigned int flags;		/* FAULT_FLAG_xxx flags */
	gfp_t gfp_mask;			/* gfp mask to be used for allocations */
	pgoff_t pgoff;			/* Logical page offset based on vma */
	unsigned long address;		/* Faulting virtual address */
	pmd_t *pmd;			/* Pointer to pmd entry matching
					 * the 'address' */
	pud_t *pud;			/* Pointer to pud entry matching
					 * the 'address'
					 */
	pte_t orig_pte;			/* Value of PTE at the time of fault */

	struct page *cow_page;		/* Page handler may use for COW fault */
	struct page *page;		/* ->fault handlers should return a
					 * page here, unless VM_FAULT_NOPAGE
					 * is set (which is also implied by
					 * VM_FAULT_ERROR).
					 */
	/* These three entries are valid only while holding ptl lock */
	pte_t *pte;			/* Pointer to pte entry matching
					 * the 'address'. NULL if the page
					 * table hasn't been allocated.
					 */
	spinlock_t *ptl;		/* Page table lock.
					 * Protects pte page table if 'pte'
					 * is not NULL, otherwise pmd.
					 */
	pgtable_t prealloc_pte;		/* Pre-allocated pte page table.
					 * vm_ops->map_pages() calls
					 * alloc_set_pte() from atomic context.
					 * do_fault_around() pre-allocates
					 * page table to avoid allocation from
					 * atomic context.
					 */
};
```

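- struct vm_area_struct *vma: the vma that the address belongs to.

- unsigned int flags: FAULT_FLAG_xxx flags, converted from the hardware error code in do_user_addr_fault() (see "Linux Matters: page fault (AMD64 architecture) (user space) (2)").

- gfp_t gfp_mask: GFP flags used for memory allocations. __handle_mm_fault() derives them from the vma via __get_fault_gfp_mask(vma).

- pgoff_t pgoff: the logical page offset of the fault within the mapping, computed from the vma by linear_page_index(). For a file mapping this is the page index in the file.

- unsigned long address: the faulting virtual address, page-aligned by __handle_mm_fault().

- pmd_t *pmd: pointer to the pmd entry for the address.

- pud_t *pud: pointer to the pud entry for the address.

- pte_t orig_pte: the value of the pte found for the address at the time of the fault.

- struct page *cow_page: a page the handler may use for a COW fault.

- struct page *page: the page returned by the ->fault handler, unless VM_FAULT_NOPAGE is set.

- pte_t *pte: pointer to the pte entry for the address, or NULL if the page table has not been allocated.

- spinlock_t *ptl: the page table lock. It protects the pte table when pte is not NULL, otherwise the pmd.

- pgtable_t prealloc_pte: a pre-allocated pte page table. do_fault_around() allocates it in advance so that vm_ops->map_pages() does not have to allocate from atomic context.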

__handle_mm_fault initializes struct vm_fault as follows:

```c
static vm_fault_t __handle_mm_fault(struct vm_area_struct *vma,
		unsigned long address, unsigned int flags)
{
	struct vm_fault vmf = {
		.vma = vma,
		.address = address & PAGE_MASK,
		.flags = flags,
		.pgoff = linear_page_index(vma, address),
		.gfp_mask = __get_fault_gfp_mask(vma),
	};
	... ...
}
```
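The two computed fields in this initializer are simple arithmetic, and the sketch below reproduces them in user space. It assumes 4 KiB pages. struct vma_model is a hypothetical stand-in that holds only vm_start, vm_end and vm_pgoff. model_linear_page_index() is also hypothetical, but it uses the same formula as linear_page_index() for non-hugetlb vmas.

```c
#include <assert.h>

/* Assumption: 4 KiB pages; names mirror the kernel macros. */
#define PAGE_SHIFT	12
#define PAGE_SIZE	(1UL << PAGE_SHIFT)
#define PAGE_MASK	(~(PAGE_SIZE - 1))

/* Hypothetical stand-in for struct vm_area_struct. */
struct vma_model {
	unsigned long vm_start;	/* first address of the mapping */
	unsigned long vm_end;	/* first address past the mapping */
	unsigned long vm_pgoff;	/* offset of vm_start in the file, in pages */
};

/* Same formula as linear_page_index() for non-hugetlb vmas. */
static unsigned long model_linear_page_index(const struct vma_model *vma,
					     unsigned long address)
{
	assert(address >= vma->vm_start && address < vma->vm_end);
	return ((address - vma->vm_start) >> PAGE_SHIFT) + vma->vm_pgoff;
}

/* The value __handle_mm_fault() stores in vmf.address. */
static unsigned long model_fault_address(unsigned long address)
{
	return address & PAGE_MASK;
}
```

For example, suppose a file is mapped with mmap() at file offset 64 KiB, so vm_pgoff is 16. A fault at vm_start + 0x3abc then gets address vm_start + 0x3000 and pgoff 3 + 16 = 19.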

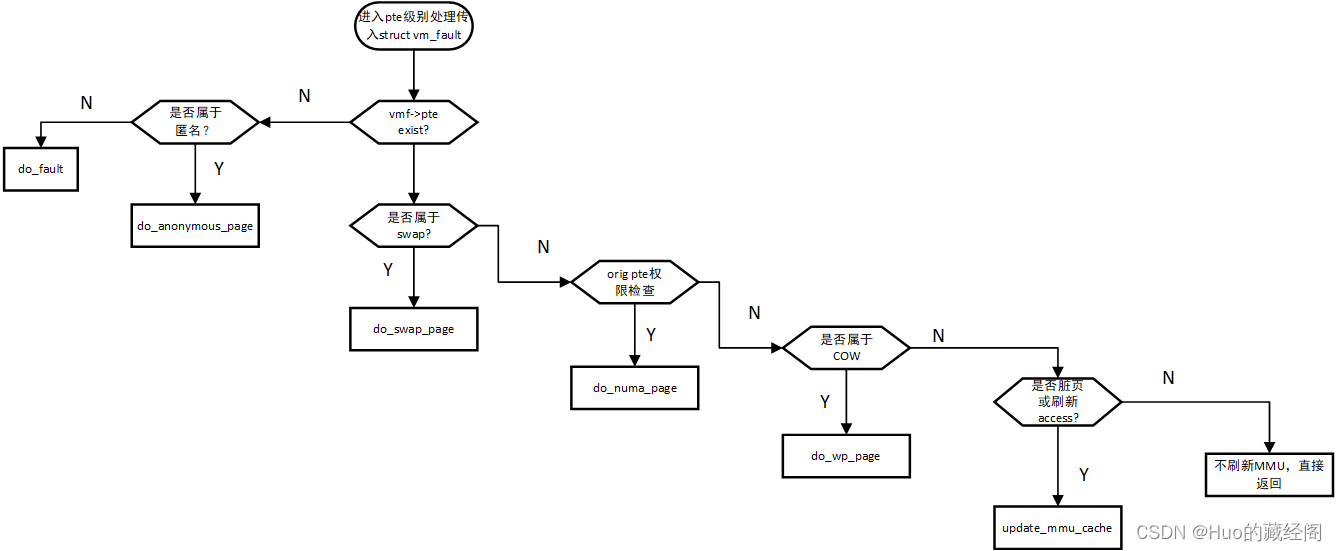

handle_pte_fault

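handle_pte_fault is the last level of the page-table walk during fault handling. Based on the pte that was found and the flags in the vma describing how the memory is used, it dispatches each scenario to a different handler. The main logic is best explained alongside the code: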

```c
/*
 * These routines also need to handle stuff like marking pages dirty
 * and/or accessed for architectures that don't do it in hardware (most
 * RISC architectures). The early dirtying is also good on the i386.
 *
 * There is also a hook called "update_mmu_cache()" that architectures
 * with external mmu caches can use to update those (ie the Sparc or
 * PowerPC hashed page tables that act as extended TLBs).
 *
 * We enter with non-exclusive mmap_lock (to exclude vma changes, but allow
 * concurrent faults).
 *
 * The mmap_lock may have been released depending on flags and our return value.
 * See filemap_fault() and __lock_page_or_retry().
 */
static vm_fault_t handle_pte_fault(struct vm_fault *vmf)
{
	pte_t entry;

	if (unlikely(pmd_none(*vmf->pmd))) {
		/*
		 * Leave __pte_alloc() until later: because vm_ops->fault may
		 * want to allocate huge page, and if we expose page table
		 * for an instant, it will be difficult to retract from
		 * concurrent faults and from rmap lookups.
		 */
		vmf->pte = NULL;
	} else {
		/* See comment in pte_alloc_one_map() */
		if (pmd_devmap_trans_unstable(vmf->pmd))
			return 0;
		/*
		 * A regular pmd is established and it can't morph into a huge
		 * pmd from under us anymore at this point because we hold the
		 * mmap_lock read mode and khugepaged takes it in write mode.
		 * So now it's safe to run pte_offset_map().
		 */
		vmf->pte = pte_offset_map(vmf->pmd, vmf->address);
		vmf->orig_pte = *vmf->pte;

		/*
		 * some architectures can have larger ptes than wordsize,
		 * e.g.ppc44x-defconfig has CONFIG_PTE_64BIT=y and
		 * CONFIG_32BIT=y, so READ_ONCE cannot guarantee atomic
		 * accesses. The code below just needs a consistent view
		 * for the ifs and we later double check anyway with the
		 * ptl lock held. So here a barrier will do.
		 */
		barrier();
		if (pte_none(vmf->orig_pte)) {
			pte_unmap(vmf->pte);
			vmf->pte = NULL;
		}
	}

	if (!vmf->pte) {
		if (vma_is_anonymous(vmf->vma))
			return do_anonymous_page(vmf);
		else
			return do_fault(vmf);
	}

	if (!pte_present(vmf->orig_pte))
		return do_swap_page(vmf);

	if (pte_protnone(vmf->orig_pte) && vma_is_accessible(vmf->vma))
		return do_numa_page(vmf);

	vmf->ptl = pte_lockptr(vmf->vma->vm_mm, vmf->pmd);
	spin_lock(vmf->ptl);
	entry = vmf->orig_pte;
	if (unlikely(!pte_same(*vmf->pte, entry))) {
		update_mmu_tlb(vmf->vma, vmf->address, vmf->pte);
		goto unlock;
	}
	if (vmf->flags & FAULT_FLAG_WRITE) {
		if (!pte_write(entry))
			return do_wp_page(vmf);
		entry = pte_mkdirty(entry);
	}
	entry = pte_mkyoung(entry);
	if (ptep_set_access_flags(vmf->vma, vmf->address, vmf->pte, entry,
				vmf->flags & FAULT_FLAG_WRITE)) {
		update_mmu_cache(vmf->vma, vmf->address, vmf->pte);
	} else {
		/* Skip spurious TLB flush for retried page fault */
		if (vmf->flags & FAULT_FLAG_TRIED)
			goto unlock;
		/*
		 * This is needed only for protection faults but the arch code
		 * is not yet telling us if this is a protection fault or not.
		 * This still avoids useless tlb flushes for .text page faults
		 * with threads.
		 */
		if (vmf->flags & FAULT_FLAG_WRITE)
			flush_tlb_fix_spurious_fault(vmf->vma, vmf->address);
	}
unlock:
	pte_unmap_unlock(vmf->pte, vmf->ptl);
	return 0;
}
```

Main steps:

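- Fill in vmf->pte and vmf->orig_pte for the later steps. If the pmd entry is empty, vmf->pte is NULL. Otherwise, map the pte, copy its value into vmf->orig_pte, and reset vmf->pte to NULL if the entry is empty (pte_none).

- If vmf->pte is NULL, the virtual address has no mapping yet, so a pte and the backing physical page must be allocated.

- For an anonymous mapping (vma_is_anonymous(), i.e. vma->vm_ops is NULL), call do_anonymous_page() and return. Memory obtained through malloc() or an anonymous mmap() is anonymous, and most faults fall into this case.

- If vma->vm_ops is not NULL, the mapping is not anonymous: call do_fault() and return. do_fault() ultimately invokes the fault callback in vma->vm_ops. Non-anonymous mappings are typically file mappings created by mmap() with an fd argument. Besides ordinary file I/O, drivers use them to expose special memory regions, registering their own vm_ops as needed.

- If vmf->pte is not NULL, a pte exists for the address. If pte_present() shows the page is not present, it has probably been swapped out. do_swap_page() then loads its contents from disk back into memory and returns.

- If the page is present but the pte is PROT_NONE (pte_protnone()) while the vma permits access (vma_is_accessible()), this is a NUMA hinting fault. Automatic NUMA balancing deliberately makes ptes inaccessible to sample which node uses the page, so do_numa_page() is called and returns.

- Otherwise, take the page table lock (vmf->ptl) and recheck with pte_same() that the pte still equals vmf->orig_pte. If another thread changed it in the meantime, call update_mmu_tlb(), unlock and return. Next, check whether the fault was caused by a write. If it was and the pte is not writable, this is typically a COW case, handled by do_wp_page(). If it was a write to a writable pte, the entry is marked dirty with pte_mkdirty().

- In the remaining case the virtual address already has a physical mapping, and the fault only requires updating the accessed and dirty bits. pte_mkyoung() sets the accessed bit, and ptep_set_access_flags() writes the entry back. If the entry changed, update_mmu_cache() updates the architecture's MMU caches. If nothing changed, a write fault calls flush_tlb_fix_spurious_fault() to flush a possibly stale TLB entry; this is skipped for retried faults (FAULT_FLAG_TRIED).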
