108.1 演示环境介绍

- CDSW版本:1.2或者1.3以上即可

108.2 操作演示

1.启用GPU的限制

- 加载nvidia.ko模块,创建/dev/nvidiactl设备,在/dev/nvidia0下创建设备列表

- 同时还会创建/dev/nvidia-uvm和/dev/nvidia-uvm-tools 设备,并给 /etc/rc.modules分配执行权限

- 在所有GPU服务器下运行一次以下命令:

# Manually load the required NVIDIA modules

sudo cat >> /etc/rc.modules <<EOMSG

/usr/bin/nvidia-smi

/usr/bin/nvidia-modprobe -u -c=0

EOMSG

# Set execute permission for /etc/rc.modules

sudo chmod +x /etc/rc.modules

2.启用GPU

- 设置操作系统和Kernel

- 更新并重启机器

sudo yum update -y

sudo reboot

- Development Tools和kernel-devel包的安装

- 需要在所有GPU节点上执行

sudo yum groupinstall -y "Development tools"

sudo yum install -y kernel-devel-`uname -r`

- 安装NVIDIA驱动在GPU节点上

- 根据需要修改NVIDIA_DRIVER_VERSION参数。

wget http://us.download.nvidia.com/.../NVIDIA-Linux-x86_64-<driver_version>.run

export NVIDIA_DRIVER_VERSION=<driver_version>

chmod 755 ./NVIDIA-Linux-x86_64-$NVIDIA_DRIVER_VERSION.run

./NVIDIA-Linux-x86_64-$NVIDIA_DRIVER_VERSION.run -asq

- 验证驱动程序是否正确安装:

/usr/bin/nvidia-smi

- 启用Docker NVIDIA Volumes

- 下载nvidia-docker,注意是否跟环境相对应

wget https://github.com/NVIDIA/nvidia-docker/releases/download/v1.0.1/nvidia-docker-1.0.1-1.x86_64.rpm

sudo yum install -y nvidia-docker-1.0.1-1.x86_64.rpm

- 启动所需的服务和插件

systemctl start nvidia-docker

systemctl enable nvidia-docker

- 运行一个小的容器来创建Docker卷结构

sudo nvidia-docker run --rm nvidia/cuda:9.1 nvidia-smi

- 确保以下目录已经创建

/var/lib/nvidia-docker/volumes/nvidia_driver/$NVIDIA_DRIVER_VERSION/

- 验证CDSW是否可以访问GPU

sudo docker run --net host \

--device=/dev/nvidiactl \

--device=/dev/nvidia-uvm \

--device=/dev/nvidia0 \

-v /var/lib/nvidia-docker/volumes/nvidia_driver/$NVIDIA_DRIVER_VERSION/:/usr/local/nvidia/ \

-it nvidia/cuda:9.1 \

/usr/local/nvidia/bin/nvidia-smi

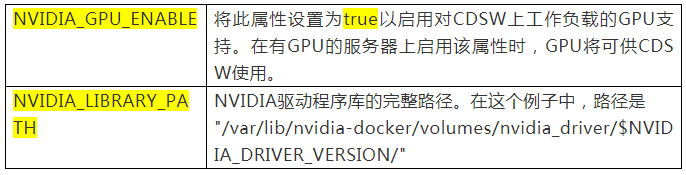

- 在所有CDSW节点上的/etc/cdsw/config/cdsw.conf配置文件中设置以下参数

- 必须确保所有节点上的cdsw.conf是相同的,无论该节点是否安装了GPU

- 必须确保所有节点上的cdsw.conf是相同的,无论该节点是否安装了GPU

- 重启CDSW

cdsw restart

- 如修改了工作节点上的cdsw.conf,需运行以下命令以确保更改生效:

cdsw reset

cdsw join

- GPU的节点

cdsw status

- 创建定制的CUDA引擎镜像

- cuda.Dockerfile

FROM docker.repository.cloudera.com/cdsw/engine:4

RUN NVIDIA_GPGKEY_SUM=d1be581509378368edeec8c1eb2958702feedf3bc3d17011adbf24efacce4ab5 && \

NVIDIA_GPGKEY_FPR=ae09fe4bbd223a84b2ccfce3f60f4b3d7fa2af80 && \

apt-key adv --fetch-keys http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/7fa2af80.pub && \

apt-key adv --export --no-emit-version -a $NVIDIA_GPGKEY_FPR | tail -n +5 > cudasign.pub && \

echo "$NVIDIA_GPGKEY_SUM cudasign.pub" | sha256sum -c --strict - && rm cudasign.pub && \

echo "deb http://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64 /" > /etc/apt/sources.list.d/cuda.list

ENV CUDA_VERSION 8.0.61

LABEL com.nvidia.cuda.version="${CUDA_VERSION}"

ENV CUDA_PKG_VERSION 8-0=$CUDA_VERSION-1

RUN apt-get update && apt-get install -y --no-install-recommends \

cuda-nvrtc-$CUDA_PKG_VERSION \

cuda-nvgraph-$CUDA_PKG_VERSION \

cuda-cusolver-$CUDA_PKG_VERSION \

cuda-cublas-8-0=8.0.61.2-1 \

cuda-cufft-$CUDA_PKG_VERSION \

cuda-curand-$CUDA_PKG_VERSION \

cuda-cusparse-$CUDA_PKG_VERSION \

cuda-npp-$CUDA_PKG_VERSION \

cuda-cudart-$CUDA_PKG_VERSION && \

ln -s cuda-8.0 /usr/local/cuda && \

rm -rf /var/lib/apt/lists/*

RUN echo "/usr/local/cuda/lib64" >> /etc/ld.so.conf.d/cuda.conf && \

ldconfig

RUN echo "/usr/local/nvidia/lib" >> /etc/ld.so.conf.d/nvidia.conf && \

echo "/usr/local/nvidia/lib64" >> /etc/ld.so.conf.d/nvidia.conf

ENV PATH /usr/local/nvidia/bin:/usr/local/cuda/bin:${PATH}

ENV LD_LIBRARY_PATH /usr/local/nvidia/lib:/usr/local/nvidia/lib64

RUN echo "deb http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1604/x86_64 /" > /etc/apt/sources.list.d/nvidia-ml.list

ENV CUDNN_VERSION 6.0.21

LABEL com.nvidia.cudnn.version="${CUDNN_VERSION}"

RUN apt-get update && apt-get install -y --no-install-recommends \

libcudnn6=$CUDNN_VERSION-1+cuda8.0 && \

rm -rf /var/lib/apt/lists/*

- 从cuda.Dockerfile生成一个自定义引擎镜像(custom engine image):

docker build --network host -t <company-registry>/cdsw-cuda:2 . -f cuda.Dockerfile

- 把这个新的引擎镜像push到公共Docker注册表,便可以用于CDSW工作负载:

docker push <company-registry>/cdsw-cuda:2

3.TensorFlow示例

- 打开CDSW控制台,启动一个Python引擎,安装TensorFlow

- Python 2

!pip install tensorflow-gpu

- Python 3

!pip3 install tensorflow-gpu

- 安装后必需要重启会话

- 使用以下示例代码创建一个新文件,该示例的后半部分列出了该引擎的所有可用GPU

import tensorflow as tf

a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')

b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')

c = tf.matmul(a, b)

# Creates a session with log_device_placement set to True.

sess = tf.Session(config=tf.ConfigProto(log_device_placement=True))

# Runs the operation.

print(sess.run(c))

# Prints a list of GPUs available

from tensorflow.python.client import device_lib

def get_available_gpus():

local_device_protos = device_lib.list_local_devices()

return [x.name for x in local_device_protos if x.device_type == 'GPU']

print get_available_gpus()

大数据视频推荐:

CSDN

大数据语音推荐:

企业级大数据技术应用

大数据机器学习案例之推荐系统

自然语言处理

大数据基础

人工智能:深度学习入门到精通