K8S + Dashboard Deployment

1. Pre-installation notes

Servers must run CentOS 7.2 or later; we use Kubernetes 1.15.*.

Docker-ce v18.06.3, Etcd 3.2.9, Flanneld v0.7.0-amd64

TLS-authenticated communication between all components (etcd, the Kubernetes master, and the nodes)

RBAC authorization for kubedns and the dashboard

Notes:

The installed Docker version must match the Kubernetes version, otherwise you will run into problems. Validated Docker versions per Kubernetes release:

Kubernetes 1.15.2 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.15.1 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.15.0 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.14.5 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.14.4 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.14.3 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.14.2 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.14.1 --> Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, 18.09

Kubernetes 1.14.0 --> Docker 1.1

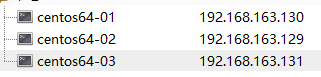
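The compatibility list above can be checked mechanically before going further. The following is a minimal sketch assuming a POSIX shell. `docker_version_validated` is a hypothetical helper. It reduces a version string to its major.minor part and compares that against the Docker releases listed for Kubernetes 1.14/1.15, treating "1.13.1" as "1.13".

```shell
#!/bin/sh
# Hypothetical helper: succeed if a Docker version string belongs to one of
# the Docker releases listed above as validated for Kubernetes 1.14/1.15.
docker_version_validated() {
  # "18.06.3-ce" -> "18.06": keep only the first two dot-separated fields
  major_minor=$(printf '%s\n' "$1" | cut -d. -f1-2)
  case "$major_minor" in
    1.13|17.03|17.06|17.09|18.06|18.09) return 0 ;;
    *) return 1 ;;
  esac
}
```

Once Docker is installed, it could be used as `docker_version_validated "$(docker version --format '{{.Server.Version}}')" && echo "Docker version OK"`.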

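2. Environment preparation

Three virtual machines, each with 2 CPU cores, 2 GB RAM (the minimum) and a 20 GB disk, i.e. the default minimal VM configuration.

Log in to each of the three servers and set its hostname (run each command on its own server):

```shell
hostnamectl set-hostname k8s-master  # master node
hostnamectl set-hostname k8s-node1   # node1
hostnamectl set-hostname k8s-node2   # node2
```

| Hostname | IP | Role |
|---|---|---|
| k8s-master | 192.168.163.130 | Master node |
| k8s-node1 | 192.168.163.129 | Worker node 1 |
| k8s-node2 | 192.168.163.131 | Worker node 2 |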

3. Initialize the environment

Run the following steps on all three servers.

Disable SELinux:

```shell
setenforce 0
sed -i 's@^\(SELINUX=\).*@\1disabled@' /etc/selinux/config
```

Stop and disable the firewall and iptables:

```shell
systemctl stop firewalld.service
systemctl disable firewalld.service
systemctl disable iptables
systemctl stop iptables
```

Configure hosts:

```shell
vim /etc/hosts
```

Add the following entries:

```
192.168.163.130 k8s-master
192.168.163.129 k8s-node1
192.168.163.131 k8s-node2
```
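Because these entries must be added on all three servers, it can help to script the edit so that re-running it does not duplicate lines. The sketch below uses a hypothetical helper, `add_hosts_entries`, and assumes each entry is written exactly as it should appear in the file.

```shell
#!/bin/sh
# Hypothetical helper: append "IP hostname" lines to a hosts file,
# skipping any line that is already present (safe to re-run).
add_hosts_entries() {
  hosts_file=$1
  shift
  for entry in "$@"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    if ! grep -qxF "$entry" "$hosts_file"; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done
}
```

Usage on each node: `add_hosts_entries /etc/hosts "192.168.163.130 k8s-master" "192.168.163.129 k8s-node1" "192.168.163.131 k8s-node2"`.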

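4. Install Docker

Back up the existing repository file:

```shell
sudo mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
```

Switch the OS repository to the Alibaba Cloud mirror:

```shell
curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
```

Install commonly used tools:

```shell
yum -y install conntrack ipvsadm ipset jq sysstat curl iptables libseccomp wget lrzsz nmap lsof net-tools zip unzip vim telnet
```

Install dependencies:

```shell
yum install -y yum-utils device-mapper-persistent-data lvm2
```

Add the Alibaba Cloud Docker repository:

```shell
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

Clear the cache, rebuild it, and list the repositories again:

```shell
yum clean all
yum makecache
yum repolist
```

The repository list should now show that the repositories point to the Alibaba Cloud mirrors (aliyun.com).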

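Install Docker on all three servers

List the available Docker versions, then install the one you need:

```shell
yum list docker-ce --showduplicates | sort -r
```

Install version 18.06.3, which matches K8s 1.15.*:

```shell
sudo yum install docker-ce-18.06.3.ce-3.el7
```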

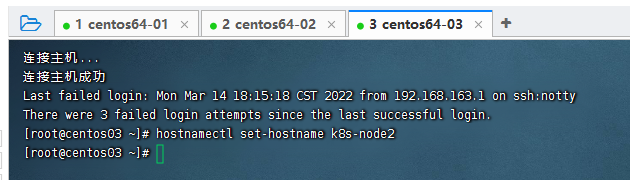

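Check the Docker version to verify the installation:

```shell
docker --version
```

Start Docker:

```shell
sudo chkconfig docker on  # enable start at boot
systemctl start docker
```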

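Switch the Docker registry to a domestic mirror

On some systems daemon.json is not created during installation; if so, run:

```shell
mkdir -p /etc/docker
touch /etc/docker/daemon.json
vim /etc/docker/daemon.json
```

Add the following content. `native.cgroupdriver=systemd` makes Docker use the systemd cgroup driver, which kubeadm recommends for the kubelet:

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["<one of the public mirror addresses below>"]
}
```

Pick any one of the following three public mirrors; you can also use another public mirror or run your own registry:

- https://docker.mirrors.ustc.edu.cn
- https://registry.docker-cn.com
- http://hub-mirror.c.163.com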

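After editing, reload systemd and restart Docker so the configuration takes effect:

```shell
systemctl daemon-reload
sudo systemctl restart docker.service
```

Afterwards, check the Docker status and details:

```shell
service docker status
docker info
```

If Docker fails to start, daemon.json is most likely the culprit. I checked the file several times: because I had copied its content from elsewhere, the punctuation was full-width Chinese punctuation and some words were misspelled. Check the following:

1. All punctuation is ASCII (English) punctuation
2. All keys are spelled correctly
3. The file is valid JSON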
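The first and third checks above can be automated before restarting Docker. This is a sketch with a hypothetical helper `check_daemon_json`. It flags any non-ASCII byte, such as a full-width quote or colon pasted from a web page. If `python3` happens to be installed, it also parses the file with `python3 -m json.tool`; that is an assumption, so the JSON check is skipped when python3 is absent.

```shell
#!/bin/sh
# Hypothetical helper: sanity-check a Docker daemon.json before restarting Docker.
check_daemon_json() {
  file=${1:-/etc/docker/daemon.json}
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
  # In the C locale every byte above 0x7F is outside [:print:] and [:space:],
  # so this prints (with line numbers) any line containing non-ASCII bytes.
  if LC_ALL=C grep -n '[^[:print:][:space:]]' "$file"; then
    echo "non-ASCII characters found in $file" >&2
    return 1
  fi
  # Optional JSON syntax check, only if python3 is available.
  if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$file" >/dev/null || return 1
  fi
  return 0
}
```

Usage: `check_daemon_json && systemctl restart docker`.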

Once all three machines have completed the steps above and Docker is running normally, we can start installing the K8S cluster.

5. Install K8S

As before, run the following steps on all three servers.

Add the Kubernetes yum repository (the two gpgkey URLs go on one line, separated by a space):

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

Import the public keys:

```shell
wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
rpm --import yum-key.gpg
wget https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
rpm --import rpm-package-key.gpg
```

Install:

```shell
yum install -y kubelet-1.15.9-0 kubeadm-1.15.9-0 kubectl-1.15.9-0
```

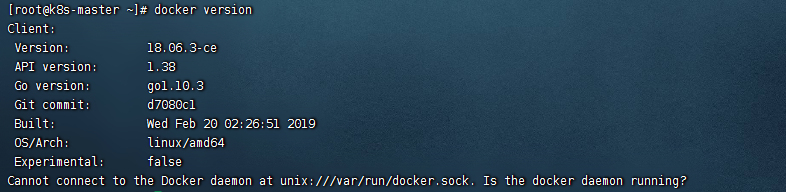

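At this point the installation failed for me.

Fix:

```shell
vim /etc/yum.repos.d/kubernetes.repo
```

Set repo_gpgcheck=0 to skip the repository metadata signature check, then run the install command again.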
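The manual vim edit can also be done with sed. In this sketch, `disable_repo_gpgcheck` is a hypothetical helper. It only changes `repo_gpgcheck` (the signature check on repository metadata). `gpgcheck`, which verifies each package's signature against the keys imported above, stays enabled.

```shell
#!/bin/sh
# Hypothetical helper: turn repo_gpgcheck=1 into repo_gpgcheck=0 in a yum .repo file,
# leaving gpgcheck (package signature verification) untouched.
disable_repo_gpgcheck() {
  repo_file=${1:-/etc/yum.repos.d/kubernetes.repo}
  sed -i 's/^repo_gpgcheck=1$/repo_gpgcheck=0/' "$repo_file"
}
```

Usage: `disable_repo_gpgcheck && yum install -y kubelet-1.15.9-0 kubeadm-1.15.9-0 kubectl-1.15.9-0`.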

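Start

The day after deployment, rebooting the VMs produced this error: The connection to the server 192.168.163.130:6443 was refused - did you specify the right host or port? I therefore recommend completing steps ① and ② below now.

① Disable swap. By default the kubelet refuses to start while swap is enabled (since Kubernetes 1.8), so turn swap off on the VMs:

```shell
# I ran this on all three machines, otherwise a swap error is reported. This only lasts until the next reboot.
swapoff -a
# Permanent: turn swap off now, then remove the swap entry from /etc/fstab so it stays off after reboots
swapoff -a
vi /etc/fstab
```

Comment out the swap entry in /etc/fstab (in my case, the last line).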
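Commenting out the swap line can also be scripted so it works regardless of where the entry sits in the file. The sketch below uses a hypothetical helper, `comment_out_swap`. It assumes a standard fstab layout in which a swap entry has `swap` as a whitespace-separated field. GNU `sed -i` is assumed, as on CentOS 7.

```shell
#!/bin/sh
# Hypothetical helper: prefix '#' to every active fstab line that has a
# whitespace-separated "swap" field. Already-commented lines are skipped,
# so running it twice is harmless.
comment_out_swap() {
  fstab=${1:-/etc/fstab}
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Usage: `swapoff -a && comment_out_swap /etc/fstab`.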

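② Configure the environment variable

Adapt this to your situation; here I record how to set the variable on Linux.

Option 1: edit the file

```shell
vim /etc/profile
```

Add a new environment variable at the bottom: export KUBECONFIG=/etc/kubernetes/admin.conf

Option 2: append to the file directly

```shell
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile
```

Reload the configuration so it takes effect:

```shell
source /etc/profile
```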

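Enable kubelet at boot and check its status:

```shell
# Enable and start kubelet
systemctl enable kubelet && systemctl start kubelet
# Check its status
systemctl status kubelet
```

Until `kubeadm init` (or `kubeadm join`) has run, kubelet keeps restarting every few seconds; this is expected.

Notes:

kubectl is a command-line tool, so it does not need to be started.

kubeadm is the cluster bootstrap tool and does not need to be started either.

kubelet is the node agent and must be started on every node.

Note: every step so far must be performed on all machines in the cluster, including installing kubectl, kubeadm, and kubelet and starting kubelet.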

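Initialize the K8S cluster

Run the following command on the master node only, not on the other nodes.

Replace the master IP and hostname in the command with your own; my master's hostname is k8s-master.

```shell
kubeadm init --kubernetes-version=v1.15.9 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.163.130 --apiserver-cert-extra-sans=192.168.163.130,k8s-master --image-repository registry.aliyuncs.com/google_containers
# If initialization fails (it is also slow on low-spec machines), reset with kubeadm reset and run the command above again
```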

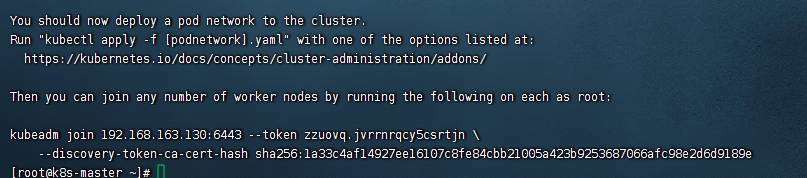

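Initialization takes about half a minute and then reports success. Save the node-join command and token it prints; you will need them later:

```shell
kubeadm join 192.168.163.130:6443 --token zzuovq.jvrrnrqcy5csrtjn \
    --discovery-token-ca-cert-hash sha256:1a33c4af14927ee16107c8fe84cbb21005a423b9253687066afc98e2d6d9189e
```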
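If the saved join command is lost or its token has expired (bootstrap tokens expire after 24 hours by default), running `kubeadm token create --print-join-command` on the master prints a fresh one. You can also recompute the `--discovery-token-ca-cert-hash` value from the cluster CA certificate, using the openssl pipeline described in the kubeadm documentation. Below, that pipeline is wrapped in a hypothetical helper, `ca_cert_hash`. The sketch assumes an RSA CA key (kubeadm's default) and the default CA path `/etc/kubernetes/pki/ca.crt`.

```shell
#!/bin/sh
# Hypothetical helper: print the SHA-256 hash of the CA certificate's public key,
# i.e. the value that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  ca_crt=${1:-/etc/kubernetes/pki/ca.crt}
  # Extract the public key, convert it to DER, hash it, keep only the hex digest.
  openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Usage on the master: `echo "sha256:$(ca_cert_hash)"`.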

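Parameter meanings:

- --kubernetes-version: the Kubernetes control-plane version for kubeadm to deploy
- --pod-network-cidr: the network the Pods belong to
- --image-repository: the registry to pull the control-plane images from
- --service-cidr: the Service network range (the virtual IPs used for load balancing)
- --ignore-preflight-errors=Swap/all: ignore the swap error / all preflight errors
- --apiserver-advertise-address: which Master interface to use for communicating with the other cluster nodes. If the Master has several interfaces, specify it explicitly; otherwise kubeadm picks the interface that has the default gateway.
- --pod-network-cidr: the Pod network range. Kubernetes supports many network solutions, and each has its own requirements for --pod-network-cidr. We set 10.244.0.0/16 because we use flannel, and this value must match the "Network" setting in flannel's net-conf.json (10.244.0.0/16 by default). Later we will switch to other network solutions, such as Canal.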
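The same `kubeadm init` flags can be kept in a configuration file, which is easier to review and reuse. This sketch assumes the `kubeadm.k8s.io/v1beta2` config API that ships with kubeadm 1.15. `write_kubeadm_config` is a hypothetical helper, and its values mirror the init command above. Compare the output with `kubeadm config print init-defaults` on your version before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: write a kubeadm config equivalent to the init flags above.
write_kubeadm_config() {
  out=${1:-kubeadm-config.yaml}
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.163.130   # --apiserver-advertise-address
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.9            # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
apiServer:
  certSANs:                           # --apiserver-cert-extra-sans
  - 192.168.163.130
  - k8s-master
networking:
  podSubnet: 10.244.0.0/16            # --pod-network-cidr
EOF
}
```

Usage: `write_kubeadm_config && kubeadm init --config kubeadm-config.yaml`.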

6. Configure K8S

Configure the current user as a cluster administrator (without this, kubectl will not work in later sessions):

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

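Deploy flannel (i.e., configure the Pod network)

Unfortunately the online manifest cannot be reached (it is blocked), so create the file locally from its source instead. The online command would be:

```shell
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```

Instead, run the following directly: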

```shell
cat <<EOF > kube-flannel.yml
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
EOF
```

Then apply it:

```shell
kubectl apply -f kube-flannel.yml
```

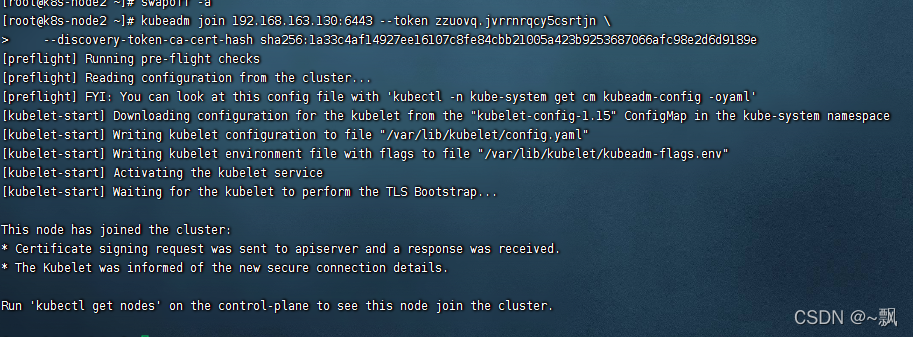

Join the worker nodes

On the two worker nodes, run the join command saved during initialization. Any server added later joins the cluster the same way, by running this command on the new node:

```shell
kubeadm join 192.168.163.130:6443 --token zzuovq.jvrrnrqcy5csrtjn \
    --discovery-token-ca-cert-hash sha256:1a33c4af14927ee16107c8fe84cbb21005a423b9253687066afc98e2d6d9189e
```

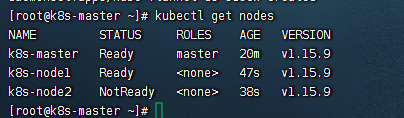

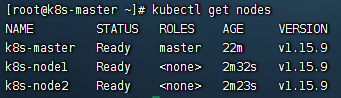

Once the nodes have joined, list all nodes with kubectl get nodes:

```shell
kubectl get nodes
```

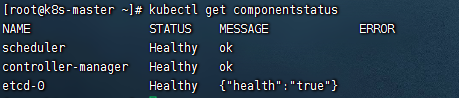
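Newly joined nodes usually show NotReady until flannel is running on them, so it helps to wait for every node to become Ready. The sketch below uses a hypothetical helper, `all_nodes_ready`. It reads `kubectl get nodes --no-headers` output on stdin and assumes STATUS is the second column. A status such as `Ready,SchedulingDisabled` is deliberately counted as not ready.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin and
# succeed only if there is at least one node and every STATUS column is "Ready".
all_nodes_ready() {
  awk '
    { total++; if ($2 != "Ready") { notready++; print "not ready: " $1 > "/dev/stderr" } }
    END { if (total == 0 || notready > 0) exit 1 }
  '
}
```

Usage on the master: `until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done`.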

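Verify the installation

```shell
# Check the health of the core control-plane components
kubectl get componentstatus
# Check node status; a worker node that is NotReady usually becomes Ready within a few minutes
kubectl get nodes
# Check the status of the system Pods
kubectl get pods -n kube-system
```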
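In a long Pod list, the entries that need attention are easy to miss. The sketch below uses a hypothetical helper, `unhealthy_pods`. It filters `kubectl get pods -n kube-system --no-headers` output and prints every Pod that is not Running or has fewer ready containers than total. It assumes the column order NAME READY STATUS RESTARTS AGE, i.e. without `-A`, which would add a leading NAMESPACE column.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin
# (columns NAME READY STATUS RESTARTS AGE) and print "NAME<TAB>STATUS" for each
# Pod that is not Running or not fully ready. Exit status 1 if any were found.
unhealthy_pods() {
  awk '
    {
      split($2, r, "/")   # READY "0/1" -> r[1] = ready containers, r[2] = total
      if ($3 != "Running" || r[1] != r[2]) { print $1 "\t" $3; bad++ }
    }
    END { if (bad > 0) exit 1 }
  '
}
```

Usage: `kubectl get pods -n kube-system --no-headers | unhealthy_pods && echo "all kube-system Pods are healthy"`.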

Test the K8S cluster

```shell
[root@k8s-master ~]# kubectl create deployment nginx --image=nginx
deployment.apps/nginx created
[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort
service/nginx exposed
[root@k8s-master ~]# kubectl get pod,svc
NAME                         READY   STATUS              RESTARTS   AGE
pod/nginx-554b9c67f9-wplnx   0/1     ContainerCreating   0          19s

NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        24m
service/nginx        NodePort    10.99.165.65   <none>        80:30569/TCP   8s
```

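To see more details about the Pods, such as which Node each one was scheduled to, use kubectl get pods,svc -o wide.

Because we used the NodePort type, the service is mapped directly to a port on every host, and users can reach it via any host IP plus that port. The exposed port is picked at random from the range 30000-32767. The nginx service deployed above got port 30569, so test it at http://192.168.163.130:30569 (or the same port on 192.168.163.129 / 192.168.163.131); the default nginx page should load.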
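The port lookup and the test can be scripted. The sketch below uses two hypothetical helpers. `nodeport_from_ports` extracts the node port from a PORT(S) value such as `80:30569/TCP`. `probe_nodeport` requests that port on each node IP with curl and prints the HTTP status code. The node IPs are the ones from this setup. `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` reads the same port directly from the API.

```shell
#!/bin/sh
# Hypothetical helper: "80:30569/TCP" (servicePort:nodePort/protocol) -> "30569"
nodeport_from_ports() {
  p=${1#*:}              # drop "80:" -> "30569/TCP"
  printf '%s\n' "${p%%/*}"   # drop "/TCP" -> "30569"
}

# Hypothetical helper: request http://IP:PORT/ on every node and print "IP STATUS".
probe_nodeport() {
  port=$1
  shift
  for ip in "$@"; do
    # -s: silent, -o /dev/null: discard the body, -w: print only the HTTP status code
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$ip:$port/")
    printf '%s %s\n' "$ip" "$code"
  done
}
```

Usage: `probe_nodeport "$(nodeport_from_ports 80:30569/TCP)" 192.168.163.130 192.168.163.129 192.168.163.131` should print status 200 for each node once the nginx Pod is Running.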

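7. Install the Dashboard

The Dashboard is the web management UI for K8s; the most involved part of installing it is handling the certificates.

Download the Dashboard installation YAML and modify it:

```shell
wget http://mirror.faasx.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml
```

Edit the file:

```shell
vim kubernetes-dashboard.yaml
```

Press i to enter insert mode, then use the arrow keys to scroll to the end of the file, to the kind: Service section: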

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort        # insert this line; mind the alignment and spaces
  ports:
    - port: 443
      nodePort: 30000   # insert this line; if omitted, K8s assigns a port automatically. A manually chosen port must stay within the K8s default range (30000-32767)
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
```
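The same change can also be applied after the Dashboard is deployed, without editing the YAML by hand. The sketch below uses two hypothetical helpers. `valid_nodeport` rejects ports outside the default NodePort range 30000-32767, the kube-apiserver `--service-node-port-range` default. `expose_dashboard_nodeport` sends the change to the existing Service with `kubectl patch`, using the default strategic merge patch, which merges Service ports by their `port` field.

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 is a number inside the default NodePort range.
valid_nodeport() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Hypothetical helper: switch the deployed Dashboard Service to NodePort.
expose_dashboard_nodeport() {
  np=${1:-30000}
  valid_nodeport "$np" || { echo "nodePort $np is outside 30000-32767" >&2; return 1; }
  kubectl -n kube-system patch service kubernetes-dashboard \
    -p "{\"spec\":{\"type\":\"NodePort\",\"ports\":[{\"port\":443,\"targetPort\":8443,\"nodePort\":$np}]}}"
}
```

Usage: `expose_dashboard_nodeport 30000`, then confirm with `kubectl get svc -n kube-system | grep dashboard`.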

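Next, handle the certificates:

```shell
mkdir key && cd key
```

Generate the certificate:

```shell
openssl genrsa -out dashboard.key 2048
```

For the CN I used my own node1, because I access the Dashboard through a NodePort; if you access it through the apiserver, you can use your master node's IP instead. The command below uses 192.168.163.130, the master's IP:

```shell
openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=192.168.163.130'
openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt
```

Create a new certificate Secret:

```shell
kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kube-system
```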
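Note that `openssl x509 -req` without `-days` signs the certificate for only 30 days by default, so the Dashboard certificate above expires after about a month. The sketch below uses a hypothetical helper, `make_dashboard_cert`. It creates the key and a self-signed certificate in a single `openssl req -x509` call with an explicit validity period. The 365-day default is an assumption; choose your own.

```shell
#!/bin/sh
# Hypothetical helper: create dashboard.key and a self-signed dashboard.crt in DIR.
# Usage: make_dashboard_cert DIR CN [DAYS]
make_dashboard_cert() {
  dir=$1 cn=$2 days=${3:-365}
  mkdir -p "$dir" || return 1
  # -x509: write a self-signed certificate instead of a CSR
  # -nodes: leave the private key unencrypted (the Dashboard reads it unattended)
  openssl req -x509 -nodes -newkey rsa:2048 \
    -keyout "$dir/dashboard.key" -out "$dir/dashboard.crt" \
    -days "$days" -subj "/CN=$cn"
}
```

Usage: `make_dashboard_cert key 192.168.163.130 365`, then create the `kubernetes-dashboard-certs` Secret from `key/dashboard.key` and `key/dashboard.crt` as above.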

Install:

```shell
# The YAML file is one directory up
cd ../
kubectl apply -f kubernetes-dashboard.yaml
# Installation may take a few minutes; check the status
kubectl get pod -n kube-system
```

Create the user

1. Create a service account named admin-user:

```shell
vim dashboard-admin.yaml
```

With the following content:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
```

Then create the user:

```shell
kubectl create -f dashboard-admin.yaml
```

2. Bind the admin role directly:

```shell
vim admin-user-role-binding.yaml
```

With the following content:

```yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
```

Create the binding:

```shell
kubectl create -f admin-user-role-binding.yaml
```

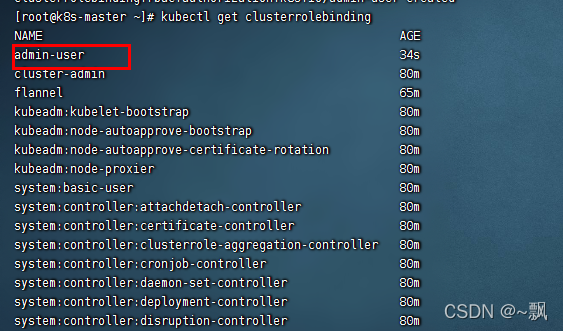
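The two YAML files above can also be replaced by kubectl's imperative `create serviceaccount` and `create clusterrolebinding` commands. This sketch wraps them in a hypothetical helper, `create_dashboard_admin`. The defaults match the names used above.

```shell
#!/bin/sh
# Hypothetical helper: create a ServiceAccount and bind it to cluster-admin.
# Usage: create_dashboard_admin [NAMESPACE] [USER]
create_dashboard_admin() {
  ns=${1:-kube-system}
  user=${2:-admin-user}
  # Equivalent of dashboard-admin.yaml
  kubectl -n "$ns" create serviceaccount "$user" || return 1
  # Equivalent of admin-user-role-binding.yaml (built-in cluster-admin ClusterRole)
  kubectl create clusterrolebinding "$user" \
    --clusterrole=cluster-admin --serviceaccount="$ns:$user"
}
```

Usage: `create_dashboard_admin kube-system admin-user`.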

3. Check whether the binding succeeded:

```shell
kubectl get clusterrolebinding
```

4. Verify the installation

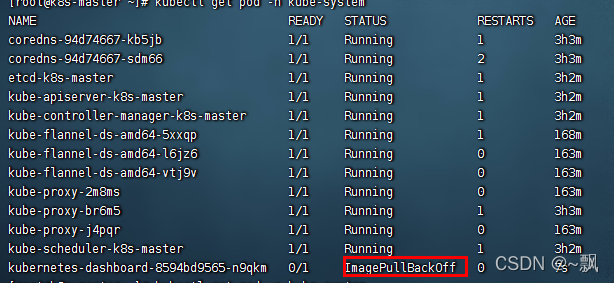

Check the Pod status:

```shell
kubectl get pod -n kube-system | grep dashboard
```

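4.1 Problems I ran into. If your output does not look like the figure above, skip to 4.2.

`Problem encountered`

ImagePullBackOff, ErrImagePull, CrashLoopBackOff: fixing a Dashboard that cannot be accessed

`Common commands for finding the cause` (namespace is usually kube-system unless you changed it; podname is the Pod name)

1. kubectl get pods -A showed the dashboard status: the dashboard Pod was in ImagePullBackOff.

2. kubectl describe pod -n namespace podname showed the details: node2 could not pull the image.

3. kubectl logs -n namespace podname shows the logs.

4. In my case, DNS on the node was not working, so the image could not be pulled: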

https://blog.csdn.net/heliu3/article/details/108770151
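Steps 1-3 above can be combined into one pass over the namespace. The sketch below uses a hypothetical helper, `diagnose_pods`. For every Pod whose STATUS column is not `Running`, it prints the Events section of `kubectl describe pod` and the last 20 log lines. Events are where ImagePullBackOff and ErrImagePull reasons appear.

```shell
#!/bin/sh
# Hypothetical helper: show describe Events and recent logs for non-Running Pods.
diagnose_pods() {
  ns=${1:-kube-system}
  kubectl get pods -n "$ns" --no-headers | awk '$3 != "Running" { print $1 }' |
  while read -r pod; do
    echo "=== $pod ==="
    # </dev/null keeps kubectl from reading the loop's input
    kubectl describe pod -n "$ns" "$pod" </dev/null | sed -n '/^Events:/,$p'
    # Logs may be empty while the image has not been pulled yet
    kubectl logs -n "$ns" "$pod" --tail=20 </dev/null 2>&1
  done
}
```

Usage: `diagnose_pods kube-system`.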

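Fixing images that cannot be pulled automatically

Reference: https://blog.csdn.net/zzq900503/article/details/105796027

There are two approaches:

Option 1 (online): replace the required image with one that can be pulled; only the image: field needs to change.

```shell
vim kubernetes-dashboard.yaml
```

Just change the image name, e.g. for version 1.8.3: anjia0532/kubernetes-dashboard-amd64:v1.8.3

Option 2 (local): pull an image that is reachable with docker and give it a local tag (i.e., rename it) that exactly matches the image: name in the YAML. Then set imagePullPolicy: Never so Kubernetes uses the local image instead of pulling it.

```shell
docker pull anjia0532/kubernetes-dashboard-amd64:v1.8.3
docker tag anjia0532/kubernetes-dashboard-amd64:v1.8.3 k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3
vim kubernetes-dashboard.yaml
```

Just change the image name, e.g. for version 1.8.3: k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3

Set imagePullPolicy: Never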
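With `imagePullPolicy: Never`, the image must already exist on whichever node the Pod is scheduled to, so the pull-and-tag step has to be repeated on every worker. The sketch below uses a hypothetical helper, `preload_image`. It assumes passwordless SSH as root from the master to each node; adjust the user to your setup.

```shell
#!/bin/sh
# Hypothetical helper: pull SRC and tag it as DST on every listed node over SSH.
# Usage: preload_image SRC DST NODE...
preload_image() {
  src=$1 dst=$2
  shift 2
  for node in "$@"; do
    # -n: don't read stdin, so ssh cannot swallow input meant for the caller
    ssh -n "root@$node" "docker pull '$src' && docker tag '$src' '$dst'" || return 1
  done
}
```

Usage: `preload_image anjia0532/kubernetes-dashboard-amd64:v1.8.3 k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3 k8s-node1 k8s-node2`.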

4.2 After quite a struggle, it finally runs

```shell
kubectl get pod --namespace=kube-system

# Check the running status
[root@k8s-master ~]# kubectl get pod -n kube-system | grep dashboard
kubernetes-dashboard-754df9896d-2hbbc   1/1     Running   0          21m

# Check the dashboard service
[root@k8s-master ~]# kubectl get svc -n kube-system | grep dashboard
kubernetes-dashboard   NodePort   10.102.220.69   <none>   443:30000/TCP   22m

# Check the logs
[root@k8s-master ~]# kubectl logs kubernetes-dashboard-754df9896d-2hbbc -n kube-system
2022/03/15 07:32:17 Starting overwatch
2022/03/15 07:32:17 Using in-cluster config to connect to apiserver
2022/03/15 07:32:17 Using service account token for csrf signing
2022/03/15 07:32:17 No request provided. Skipping authorization
```

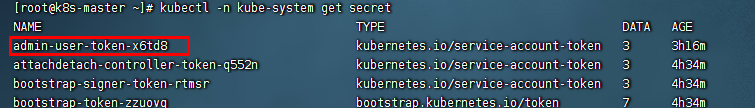

- Get the token for logging in to the Dashboard

```shell
# Find the admin-user-token secret
kubectl -n kube-system get secret
# My secret is admin-user-token-x6td8; print its token
kubectl -n kube-system describe secret admin-user-token-x6td8
# token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXg2dGQ4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5NzQ1MDM0Mi03MWNmLTQ0NzItOWM5Zi03MWQwYTBhYjk4NTIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.nJEx6wHHvkBIJc_P7IkFmnnPME1Of6LLSlPOMqE8dWecB6a6aP4wXzf-0UnmK_xCIr3OY_azJ165Y1RYLAtSaJkDxejjlHOaO0lHzeu6LeCq0jc_V8r8d9ZK5vgib3mpLm9gU9zsMDJ9wDajAz2WFf9uiy-8e4FiMqVCHkzHfW_4GdVGjEHaL8fXxUZcaeN8Jmc2IONlGHAkHcr5k-lnljsk9ZEfneqRTovO5TLGxqYb0kGIWHsD4sgOzYtmnMbKrrNDxXUYR4KSBJujcNaW2ecXYVOrXXDYZUkfhHrn--s_YpmfSO8X4vYtawvLO6lxR0mZmQnuQian0KsnZ0jYtQ
```

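As shown above, the login token for this account has been generated. Copy and save it; you will use it to log in to the Dashboard. Test the login at https://ip:30000, and note that the Dashboard uses HTTPS.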
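Instead of listing all secrets and copying the generated name by hand, you can read the token through the ServiceAccount. In Kubernetes 1.15 each ServiceAccount automatically gets a token Secret, listed in its `.secrets` field. The sketch below uses a hypothetical helper, `sa_token`. It reads that Secret's name and decodes the base64-encoded `.data.token` field.

```shell
#!/bin/sh
# Hypothetical helper: print the decoded token of a ServiceAccount's first token Secret.
# Usage: sa_token [NAMESPACE] [SERVICEACCOUNT]
sa_token() {
  ns=${1:-kube-system}
  sa=${2:-admin-user}
  secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  # The token is stored base64-encoded in the Secret's data
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}
```

Usage: `sa_token kube-system admin-user` prints the same token that `describe secret` shows.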

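Chrome and the browser bundled with Windows 11 refuse to open the page even after clicking "Advanced", because the connection is not private; Firefox still shows a button to continue.

Workaround:

When the "Your connection is not private" page appears, click Advanced. Then type the keyword thisisunsafe directly on the page (there is no input box; it works like a passphrase) and press Enter. If your Chrome version does not let you add an exception by clicking, this adds the request to the security exceptions.

Paste in the token saved above.

You are in right away. Click around freely: the installation is complete!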