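I. Environment preparation

Version information

| Component | Version |
|---|---|
| OS | CentOS Linux release 7.9.2009 (Core) |
| Kernel | 5.4.180-1.el7.elrepo.x86_64 |
| kubeadm | v1.22.7 |
| containerd | 1.4.12 |

Version requirements

When using containerd, a 4.x or later kernel is officially recommended. CentOS 7 ships with a 3.10 kernel by default, so upgrading the kernel is advised. For the upgrade steps, see the earlier article:

Upgrading the kernel on CentOS 7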
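A quick way to confirm whether a node already meets the 4.x recommendation is to compare the major number reported by `uname -r`. The following is a minimal sketch; the function names `kernel_major` and `kernel_ok` are illustrative and not part of any tool used in this article.

```shell
#!/bin/sh
# Sketch: flag kernels older than 4.x (function names are illustrative).

# kernel_major RELEASE: print the major number of a release string such as
# "5.4.180-1.el7.elrepo.x86_64".
kernel_major() {
    echo "${1%%.*}"
}

# kernel_ok RELEASE: succeed when the major version is 4 or higher.
kernel_ok() {
    [ "$(kernel_major "$1")" -ge 4 ]
}

current="$(uname -r)"
if kernel_ok "$current"; then
    echo "kernel $current: meets the 4.x recommendation"
else
    echo "kernel $current: consider upgrading before installing containerd"
fi
```

Running it on every node before the initialization script gives an early warning for machines still on the stock CentOS 7 kernel.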

1.k8s集群高可用拓扑选项

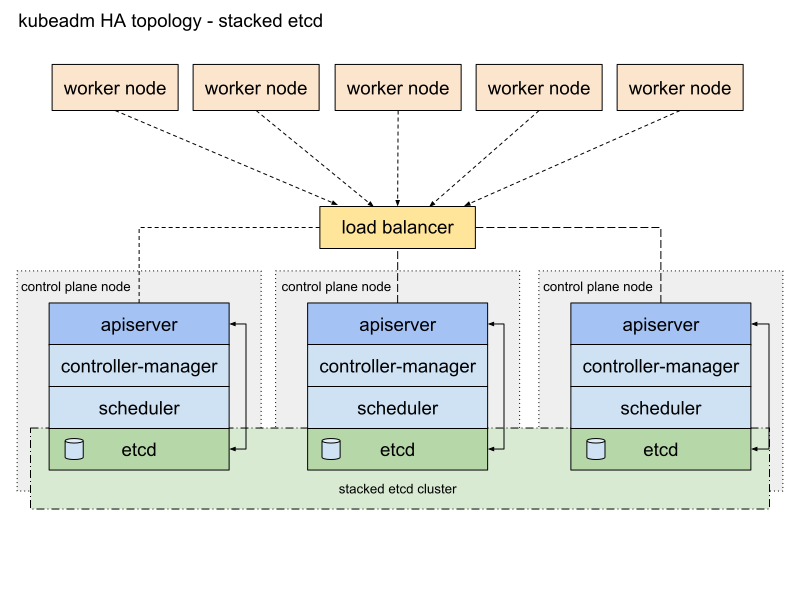

??堆叠拓扑

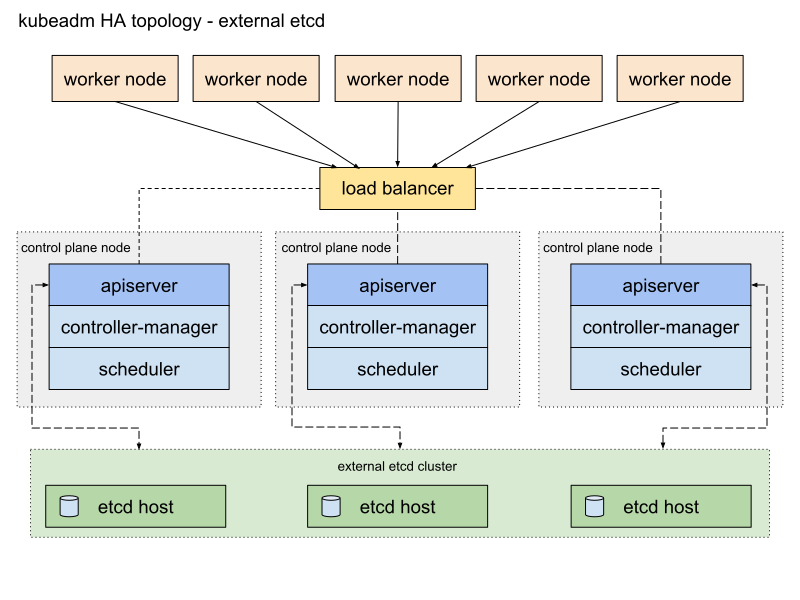

??外部 etcd 拓扑

使用堆叠拓扑,控制平面和 etcd 成员在同一节点上,设置和管理相对简单,缺点在于如果出现单点故障,etcd 成员和控制平面实例都将丢失。

使用外部 etcd 拓扑,etcd 分布式数据存储集群在独立于控制平面节点的其他节点上运行,可用性更高,但是需要两倍于堆叠 拓扑的主机数量。
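The trade-off between the two topologies comes down to simple arithmetic: etcd needs a majority of its members to stay healthy, and the external layout doubles the host count. The sketch below works this out for a few cluster sizes. The helper names (`quorum`, `fault_tolerance`, `hosts_needed`) are made up for illustration, and the majority rule is the general etcd quorum rule rather than something stated in this article.

```shell
#!/bin/sh
# Sketch: quorum and host-count arithmetic (helper names are illustrative).

# quorum N: smallest number of etcd members that must stay healthy.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# fault_tolerance N: how many members can fail while quorum is kept.
fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# hosts_needed TOPOLOGY N: hosts required for N control plane instances
# and N etcd members under the given topology.
hosts_needed() {
    case "$1" in
        stacked)  echo "$2" ;;
        external) echo $(( $2 * 2 )) ;;
        *) echo "unknown topology: $1" >&2; return 1 ;;
    esac
}

for n in 1 3 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")" \
         "stacked_hosts=$(hosts_needed stacked "$n") external_hosts=$(hosts_needed external "$n")"
done
```

With three masters, as used in this article, one node can fail without losing the cluster.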

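2. Node environment initialization (run on all nodes)

Start from clean machines with statically configured IP addresses, then run the following script: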

#!/bin/bash

# Update packages
yum update -y

# Remove firewalld
systemctl stop firewalld
yum remove firewalld -y

# Remove NetworkManager
systemctl stop NetworkManager
yum remove NetworkManager -y

# Synchronize the server clock
yum install chrony -y
systemctl enable --now chronyd
chronyc sources

# Install iptables (simpler to do once the cluster is up)
#yum install -y iptables iptables-services && systemctl enable --now iptables.service

# Disable SELinux
setenforce 0
sed -i '/^SELINUX=/cSELINUX=disabled' /etc/selinux/config
getenforce

# Disable swap
swapoff -a # until the next reboot
sed -i '/ swap / s/^/# /g' /etc/fstab # permanently

# Install other required components and common tools
yum install -y yum-utils zlib zlib-devel openssl openssl-devel \
    net-tools vim wget lsof unzip zip bind-utils lrzsz telnet

# If k8s is being set up on a server that previously ran Docker, consider clearing /etc/sysctl.conf first.
# The command below deletes every line that is not a comment.
# sed -i '/^#/!d' /etc/sysctl.conf

# Install ipvs
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
# On kernels 4.19 and later (including the 5.4 kernel used here), nf_conntrack_ipv4 has been merged into nf_conntrack
modprobe -- nf_conntrack
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules
lsmod | grep -e ip_vs -e nf_conntrack
yum install ipset ipvsadm -y

# Let iptables see bridged traffic

cat <<EOF | tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

sysctl --system

cat <<EOF | tee /etc/sysctl.d/k8s.conf

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

modprobe br_netfilter

lsmod | grep netfilter

sysctl -p /etc/sysctl.d/k8s.conf

# Install containerd

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum list containerd.io --showduplicates

yum install -y containerd.io

mkdir -p /etc/containerd

containerd config default | tee /etc/containerd/config.toml

cat <<EOF | tee /etc/crictl.yaml

runtime-endpoint: "unix:///run/containerd/containerd.sock"

image-endpoint: "unix:///run/containerd/containerd.sock"

timeout: 10

debug: false

pull-image-on-create: false

disable-pull-on-run: false

EOF

# Use the systemd cgroup driver and point the image registries at the Aliyun mirror

sed -i "s#k8s.gcr.io#registry.aliyuncs.com/google_containers#g" /etc/containerd/config.toml

sed -i '/containerd.runtimes.runc.options/a\ \ \ \ \ \ \ \ \ \ \ \ SystemdCgroup = true' /etc/containerd/config.toml

sed -i "s#https://registry-1.docker.io#https://registry.aliyuncs.com#g" /etc/containerd/config.toml

systemctl daemon-reload

systemctl enable containerd

systemctl restart containerd

# Add the Kubernetes yum repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Install kubeadm, kubelet and kubectl

yum list kubeadm --showduplicates

yum install -y kubelet-1.22.7 kubeadm-1.22.7 kubectl-1.22.7 --disableexcludes=kubernetes

# Enable at boot

systemctl daemon-reload

systemctl enable --now kubelet

# kubelet now restarts every few seconds, crash-looping while it waits for instructions from kubeadm

# Command auto-completion

yum install -y bash-completion

source <(crictl completion bash)

crictl completion bash >/etc/bash_completion.d/crictl

source <(kubectl completion bash)

kubectl completion bash >/etc/bash_completion.d/kubectl

source /usr/share/bash-completion/bash_completion
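Once the script has run, it can be worth confirming that the kernel parameters it wrote are really in place, since a typo in `/etc/sysctl.d/k8s.conf` only shows up later as networking problems. The following is a minimal sketch of such a check; `check_k8s_sysctl` is a hypothetical helper name, and it only inspects the file contents, not the live kernel values.

```shell
#!/bin/sh
# Sketch: verify that a sysctl file sets the three keys the script relies on.
# check_k8s_sysctl is an illustrative name, not an existing tool.

check_k8s_sysctl() {
    conf="$1"
    rc=0
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # Accept "key = 1" or "key=1"; the dots in the key act as regex
        # wildcards, which still matches the literal key.
        if grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$conf"; then
            echo "ok: $key"
        else
            echo "missing or not 1: $key"
            rc=1
        fi
    done
    return "$rc"
}
```

A typical call would be `check_k8s_sysctl /etc/sysctl.d/k8s.conf`; a non-zero exit status means at least one key is missing or not set to 1.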

3. Rename the hosts and edit the hosts file

# Set the hostname on each server (use the name that matches that server)

hostnamectl set-hostname k8s-master1

hostnamectl set-hostname k8s-node1

# Update the hosts file (adjust to your environment, then run on all nodes)

cat <<EOF | tee /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.15 k8s-master1

192.168.1.123 k8s-master2

192.168.1.124 k8s-master3

192.168.1.65 k8s-node1

192.168.1.26 k8s-node2

192.168.1.128 k8s-node3

192.168.1.129 k8s-node4

EOF

All of the steps above must be run on every host in the k8s cluster.
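Because the same hosts file has to land on every node, a small check for missing names can save time before moving on. The sketch below prints every expected hostname that has no entry in a given hosts file; `missing_hosts` is an illustrative helper, and the node names passed to it are the ones used in this article.

```shell
#!/bin/sh
# Sketch: report hostnames that are absent from a hosts file.
# missing_hosts is an illustrative name, not an existing tool.

# missing_hosts FILE NAME...: print each NAME that has no entry in FILE.
missing_hosts() {
    file="$1"
    shift
    for name in "$@"; do
        # Look for NAME as a whole field after the address column,
        # skipping comment lines.
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$file" || echo "$name"
    done
}
```

For example, `missing_hosts /etc/hosts k8s-master1 k8s-master2 k8s-master3 k8s-node1 k8s-node2 k8s-node3 k8s-node4` prints nothing when every node is listed.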

4. Install keepalived and haproxy

Configuration files

k8s-master1

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id k8s-master1
    # Add the following
    script_user root
    enable_script_security
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"  # path to the health check script
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state MASTER            # primary
    interface eth0          # NIC name
    virtual_router_id 51
    priority 100            # priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.223       # VIP
    }
    track_script {
        check_haproxy       # the vrrp_script block defined above
    }
}

k8s-master2

! Configuration File for keepalived
global_defs {
    router_id k8s-master2
    # Add the following
    script_user root
    enable_script_security
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"  # path to the health check script
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state BACKUP            # backup
    interface eth0          # NIC name
    virtual_router_id 51
    priority 99             # priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.223       # VIP
    }
    track_script {
        check_haproxy       # the vrrp_script block defined above
    }
}

k8s-master3

! Configuration File for keepalived
global_defs {
    router_id k8s-master3
    # Add the following
    script_user root
    enable_script_security
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"  # path to the health check script
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state BACKUP            # backup
    interface eth0          # NIC name
    virtual_router_id 51
    priority 98             # priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.223       # VIP
    }
    track_script {
        check_haproxy       # the vrrp_script block defined above
    }
}

haproxy configuration (identical on all three masters)

vim /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend kubernetes-apiserver

mode tcp

bind *:16443

option tcplog

default_backend kubernetes-apiserver

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

listen stats

bind *:1080

stats auth admin:awesomePassword

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /admin?stats

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend kubernetes-apiserver

mode tcp

balance roundrobin

server k8s-master1 192.168.1.15:6443 check

server k8s-master2 192.168.1.123:6443 check

server k8s-master3 192.168.1.124:6443 check

Health check script

vim /etc/keepalived/check_haproxy.sh

#!/bin/sh
# If haproxy is not running, try to start it; report if it still is not running.
A=$(ps -C haproxy --no-header | wc -l)
if [ "$A" -eq 0 ]
then
    systemctl start haproxy
    if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]
    then
        killall -9 haproxy
        echo "HAPROXY down"  # add alerting here if needed
        sleep 3600
    fi
fi

Start the services and enable them at boot

systemctl enable --now keepalived

systemctl enable --now haproxy

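II. Deploying the Kubernetes masters

1. Common kubeadm commands

| Command | Purpose |
|---|---|
| kubeadm init | Bootstraps a control plane node |
| kubeadm join | Bootstraps a worker node and joins it to the cluster |
| kubeadm upgrade | Upgrades a Kubernetes cluster to a newer version |
| kubeadm config | Configures a cluster that was initialized with kubeadm v1.7.x or lower for use with kubeadm upgrade |
| kubeadm token | Manages the tokens used by kubeadm join |
| kubeadm reset | Reverts any changes made to a node by kubeadm init or kubeadm join |
| kubeadm certs | Manages Kubernetes certificates |
| kubeadm kubeconfig | Manages kubeconfig files |
| kubeadm version | Prints the kubeadm version |
| kubeadm alpha | Previews a set of features made available for gathering community feedback |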

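2. Deploying with a configuration file (recommended)

Default configuration file

# Print the default configuration
kubeadm config print init-defaults

# List the required images
kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

# Export the default configuration to the current directory
kubeadm config print init-defaults > kubeadm.yaml

Configuration file reference

kubeadm-config.yaml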

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: qjbajd.zp1ta327pwur2k8g
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.15
  bindPort: 6443
nodeRegistration:
  criSocket: /run/containerd/containerd.sock
  name: k8s-master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  extraArgs:
    authorization-mode: "Node,RBAC"
  certSANs:
  - 192.168.1.223
  - 192.168.1.15
  - 192.168.1.123
  - 192.168.1.124
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.22.7
controlPlaneEndpoint: "192.168.1.223:16443"  # virtual IP and haproxy port
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

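Add the certSANs and controlPlaneEndpoint settings, select the IPVS proxy mode, and set the cgroup driver to systemd (kubelet and containerd must use the same cgroup driver, and systemd is the officially recommended choice). The image repository points at the Aliyun mirror. Fill in everything else to match your environment.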
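Since a mismatch between the kubelet and containerd cgroup drivers is easy to miss, a quick file-level comparison before running `kubeadm init` may help. The following is a minimal sketch that only greps the two configuration files for the systemd settings used in this article; `cgroup_drivers_match` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: confirm both config files select the systemd cgroup driver.
# cgroup_drivers_match is an illustrative name, not an existing tool.

# cgroup_drivers_match CONTAINERD_TOML KUBEADM_YAML
cgroup_drivers_match() {
    toml="$1"
    yaml="$2"
    # containerd side: "SystemdCgroup = true" under the runc options.
    if ! grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$toml"; then
        echo "containerd: SystemdCgroup = true not found in $toml"
        return 1
    fi
    # kubelet side: "cgroupDriver: systemd" in the KubeletConfiguration.
    if ! grep -Eq '^[[:space:]]*cgroupDriver:[[:space:]]*systemd[[:space:]]*$' "$yaml"; then
        echo "kubelet: cgroupDriver: systemd not found in $yaml"
        return 1
    fi
    echo "both files select the systemd cgroup driver"
}
```

A typical call would be `cgroup_drivers_match /etc/containerd/config.toml kubeadm-config.yaml`.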

Pull the images in advance

kubeadm config images pull --config kubeadm-config.yaml

3. Initialize the cluster

kubeadm init --config=kubeadm-config.yaml --upload-certs

Partial output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.1.223:16443 --token qjbajd.zp1ta327pwur2k8g \

--discovery-token-ca-cert-hash sha256:d21d770c1bfc059280c005096e5fd0e4133ef0e69ac724980005c9e821b45fe9 \

--control-plane --certificate-key ba37092cbcaa1784e46cb2827bc3603d200c6149db28577e9fe433403126734b

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.223:16443 --token qjbajd.zp1ta327pwur2k8g \

--discovery-token-ca-cert-hash sha256:d21d770c1bfc059280c005096e5fd0e4133ef0e69ac724980005c9e821b45fe9
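The join commands printed above are usually copied by hand to the other nodes, so a truncated token or hash is a common source of join failures. The sketch below checks the two values against the documented kubeadm formats (a token of the form `[a-z0-9]{6}.[a-z0-9]{16}` and a `sha256:` prefix followed by 64 hexadecimal characters). The function names are illustrative, and the check says nothing about whether the token is still valid on the cluster.

```shell
#!/bin/sh
# Sketch: format checks for kubeadm join parameters (names are illustrative).

# valid_token TOKEN: succeed if TOKEN looks like a kubeadm bootstrap token.
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# valid_ca_hash HASH: succeed if HASH looks like a discovery-token-ca-cert-hash.
valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Values taken from the sample output in this article.
token='qjbajd.zp1ta327pwur2k8g'
ca_hash='sha256:d21d770c1bfc059280c005096e5fd0e4133ef0e69ac724980005c9e821b45fe9'

if valid_token "$token" && valid_ca_hash "$ca_hash"; then
    echo "join parameters look well-formed"
else
    echo "join parameters look malformed; copy them again from the kubeadm init output"
fi
```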

Follow the prompts

mkdir -p $HOME/.kube && \

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && \

chown $(id -u):$(id -g) $HOME/.kube/config

Run the join command on the other master nodes

Example:

[root@k8s-master2 ~]# kubeadm join 192.168.1.223:16443 --token qjbajd.zp1ta327pwur2k8g \

> --discovery-token-ca-cert-hash sha256:d21d770c1bfc059280c005096e5fd0e4133ef0e69ac724980005c9e821b45fe9 \

> --control-plane --certificate-key ba37092cbcaa1784e46cb2827bc3603d200c6149db28577e9fe433403126734b

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master2 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.123 192.168.1.223 192.168.1.15 192.168.1.124]

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master2 localhost] and IPs [192.168.1.123 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master2 localhost] and IPs [192.168.1.123 127.0.0.1 ::1]

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

[mark-control-plane] Marking the node k8s-master2 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

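After a master node has joined, also run the following commands on it to create the kubeconfig file, so that kubectl can manage the cluster from that node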

mkdir -p $HOME/.kube && \

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && \

chown $(id -u):$(id -g) $HOME/.kube/config

Run the join command on the worker nodes

Example:

[root@k8s-node1 ~]# kubeadm join 192.168.1.223:16443 --token qjbajd.zp1ta327pwur2k8g \

> --discovery-token-ca-cert-hash sha256:d21d770c1bfc059280c005096e5fd0e4133ef0e69ac724980005c9e821b45fe9

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

4. Deploy the Calico plugin

Deploy the network plugin, installing with the Kubernetes API datastore (50 nodes or fewer)

Version 3.21 is used here. Download:

curl -O https://docs.projectcalico.org/archive/v3.21/manifests/calico.yaml

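With the Calico plugin, NIC names should ideally follow a common pattern such as eth* or eno*. A name like enp0s6 can cause errors in some pods. This tends to come up on physical servers; on virtual machines and cloud hosts the NIC names are usually uniform.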
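To see in advance which nodes might be affected, the interface names under `/sys/class/net` can be compared against the common patterns. This is a minimal sketch: the pattern list (eth* and eno*) simply mirrors the examples in the paragraph above, and `nic_name_common` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: flag NIC names that do not follow the eth*/eno* patterns.
# nic_name_common is an illustrative name, not an existing tool.

# nic_name_common NAME: succeed if NAME looks like ethN or enoN.
nic_name_common() {
    case "$1" in
        eth[0-9]*|eno[0-9]*) return 0 ;;
        *) return 1 ;;
    esac
}

for path in /sys/class/net/*; do
    [ -e "$path" ] || continue      # no interfaces found (glob did not match)
    nic="${path##*/}"
    [ "$nic" = "lo" ] && continue   # skip the loopback device
    if nic_name_common "$nic"; then
        echo "$nic: common naming pattern"
    else
        echo "$nic: uncommon name, check the calico-node pod on this host after deployment"
    fi
done
```

On a running cluster the loop also lists virtual interfaces created by the container runtime and Calico itself, so it is most useful before the plugin is deployed.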

Deploy with apply

kubectl apply -f calico.yaml

Check the pods

kubectl get pods --all-namespaces -o wide

Wait a while, then check the cluster status and the nodes

kubectl get nodes -o wide

Example output

[root@k8s-master1 yaml]# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-76c5bc74-476tl 1/1 Running 0 4m32s 10.244.159.131 k8s-master1 <none> <none>

kube-system calico-node-48zjc 1/1 Running 0 2m51s 192.168.1.124 k8s-master3 <none> <none>

kube-system calico-node-4c4v9 1/1 Running 0 2m45s 192.168.1.65 k8s-node1 <none> <none>

kube-system calico-node-5pz6d 1/1 Running 0 4m33s 192.168.1.15 k8s-master1 <none> <none>

kube-system calico-node-7r4lq 1/1 Running 0 2m38s 192.168.1.129 k8s-node4 <none> <none>

kube-system calico-node-gb95k 1/1 Running 0 3m42s 192.168.1.123 k8s-master2 <none> <none>

kube-system calico-node-hqtgk 1/1 Running 0 2m49s 192.168.1.26 k8s-node2 <none> <none>

kube-system calico-node-s5z6k 1/1 Running 0 2m46s 192.168.1.128 k8s-node3 <none> <none>

kube-system coredns-7f6cbbb7b8-rqrqn 1/1 Running 0 5m54s 10.244.159.130 k8s-master1 <none> <none>

kube-system coredns-7f6cbbb7b8-zlw7s 1/1 Running 0 5m54s 10.244.159.129 k8s-master1 <none> <none>

kube-system etcd-k8s-master1 1/1 Running 5 6m8s 192.168.1.15 k8s-master1 <none> <none>

kube-system etcd-k8s-master2 1/1 Running 0 3m41s 192.168.1.123 k8s-master2 <none> <none>

kube-system etcd-k8s-master3 1/1 Running 0 3m21s 192.168.1.124 k8s-master3 <none> <none>

kube-system kube-apiserver-k8s-master1 1/1 Running 5 6m8s 192.168.1.15 k8s-master1 <none> <none>

kube-system kube-apiserver-k8s-master2 1/1 Running 1 3m41s 192.168.1.123 k8s-master2 <none> <none>

kube-system kube-apiserver-k8s-master3 1/1 Running 1 2m59s 192.168.1.124 k8s-master3 <none> <none>

kube-system kube-controller-manager-k8s-master1 1/1 Running 7 (3m30s ago) 6m1s 192.168.1.15 k8s-master1 <none> <none>

kube-system kube-controller-manager-k8s-master2 1/1 Running 1 3m41s 192.168.1.123 k8s-master2 <none> <none>

kube-system kube-controller-manager-k8s-master3 1/1 Running 1 3m21s 192.168.1.124 k8s-master3 <none> <none>

kube-system kube-proxy-2f55t 1/1 Running 0 2m51s 192.168.1.124 k8s-master3 <none> <none>

kube-system kube-proxy-dcbzb 1/1 Running 0 3m42s 192.168.1.123 k8s-master2 <none> <none>

kube-system kube-proxy-lqkw8 1/1 Running 0 2m47s 192.168.1.128 k8s-node3 <none> <none>

kube-system kube-proxy-lvpnz 1/1 Running 0 2m38s 192.168.1.129 k8s-node4 <none> <none>

kube-system kube-proxy-pwz5m 1/1 Running 0 2m49s 192.168.1.26 k8s-node2 <none> <none>

kube-system kube-proxy-rdfw2 1/1 Running 0 2m46s 192.168.1.65 k8s-node1 <none> <none>

kube-system kube-proxy-vqksb 1/1 Running 0 5m54s 192.168.1.15 k8s-master1 <none> <none>

kube-system kube-scheduler-k8s-master1 1/1 Running 7 (3m30s ago) 6m1s 192.168.1.15 k8s-master1 <none> <none>

kube-system kube-scheduler-k8s-master2 1/1 Running 1 3m41s 192.168.1.123 k8s-master2 <none> <none>

kube-system kube-scheduler-k8s-master3 1/1 Running 1 3m9s 192.168.1.124 k8s-master3 <none> <none>

[root@k8s-master1 yaml]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master1 Ready control-plane,master 6m43s v1.22.7 192.168.1.15 <none> CentOS Linux 7 (Core) 5.4.180-1.el7.elrepo.x86_64 containerd://1.4.12

k8s-master2 Ready control-plane,master 4m16s v1.22.7 192.168.1.123 <none> CentOS Linux 7 (Core) 5.4.180-1.el7.elrepo.x86_64 containerd://1.4.13

k8s-master3 Ready control-plane,master 4m6s v1.22.7 192.168.1.124 <none> CentOS Linux 7 (Core) 5.4.180-1.el7.elrepo.x86_64 containerd://1.4.13

k8s-node1 Ready <none> 3m21s v1.22.7 192.168.1.65 <none> CentOS Linux 7 (Core) 5.4.180-1.el7.elrepo.x86_64 containerd://1.4.12

k8s-node2 Ready <none> 3m23s v1.22.7 192.168.1.26 <none> CentOS Linux 7 (Core) 5.4.180-1.el7.elrepo.x86_64 containerd://1.4.12

k8s-node3 Ready <none> 3m22s v1.22.7 192.168.1.128 <none> CentOS Linux 7 (Core) 5.4.180-1.el7.elrepo.x86_64 containerd://1.4.13

k8s-node4 Ready <none> 3m14s v1.22.7 192.168.1.129 <none> CentOS Linux 7 (Core) 5.4.180-1.el7.elrepo.x86_64 containerd://1.4.13

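All nodes and pods report Ready, so the k8s cluster has been deployed successfully.

III. Deploying common add-ons

1. Deploy metrics-server

Download URL

https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.5.2/components.yaml

If that URL is blocked, an alternative download link:

https://download.csdn.net/download/weixin_44254035/83205018

Edit the YAML file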

spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls  # added: skip kubelet certificate verification
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.5.2  # changed: use the Aliyun mirror
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server

??使用kubectl apply -f部署

kubectl apply -f metrics-0.5.2.yaml

# 查看pod,等待ready

kubectl get pods -n kube-system

若报错,可以查看日志定位问题

kubectl logs pod名称 -n kube-system

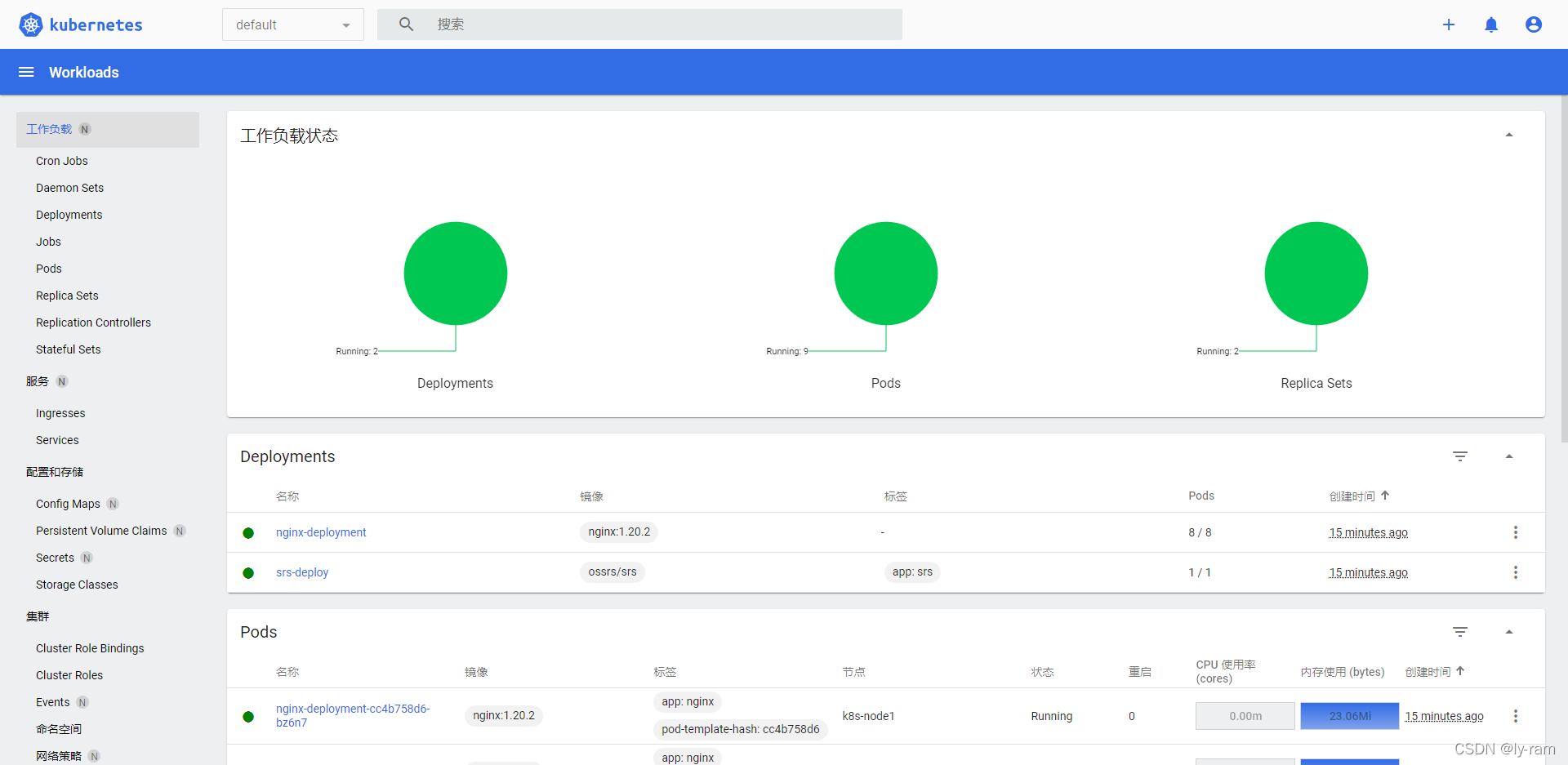

??目前默认的命名空间没有pod在运行,运行nginx、srs测试一下

vim nginx-test.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 8
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.20.2
        ports:
        - containerPort: 80

vim srs-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: srs-deploy
  labels:
    app: srs
spec:
  replicas: 1
  selector:
    matchLabels:
      app: srs
  template:
    metadata:
      labels:
        app: srs
    spec:
      containers:
      - name: srs
        image: ossrs/srs
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 1935
        - containerPort: 1985
        - containerPort: 8080

vim srs-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: srs-origin-service
spec:
  type: NodePort
  selector:
    app: srs
  ports:
  - name: srs-origin-service-1935-1935
    port: 1935
    protocol: TCP
    targetPort: 1935
    nodePort: 31935  # added
  - name: srs-origin-service-1985-1985
    port: 1985
    protocol: TCP
    targetPort: 1985
    nodePort: 31985  # added
  - name: srs-origin-service-8080-8080
    port: 8080
    protocol: TCP
    targetPort: 8080
    nodePort: 30080  # added

Deploy

kubectl apply -f nginx-test.yaml -f srs-deployment.yaml -f srs-service.yaml

Use the kubectl top command

kubectl top nodes

kubectl top pods

Example output

[root@k8s-master1 test]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master1 433m 10% 1367Mi 35%

k8s-master2 356m 8% 1018Mi 26%

k8s-master3 325m 8% 1088Mi 28%

k8s-node1 206m 0% 856Mi 2%

k8s-node2 189m 0% 950Mi 0%

k8s-node3 133m 3% 738Mi 19%

k8s-node4 125m 3% 717Mi 18%

[root@k8s-master1 test]# kubectl top pods

NAME CPU(cores) MEMORY(bytes)

nginx-deployment-cc4b758d6-bz6n7 0m 23Mi

nginx-deployment-cc4b758d6-ct7jg 0m 5Mi

nginx-deployment-cc4b758d6-dpf5d 0m 5Mi

nginx-deployment-cc4b758d6-jw9xf 0m 44Mi

nginx-deployment-cc4b758d6-mfzjh 0m 44Mi

nginx-deployment-cc4b758d6-mg2np 0m 23Mi

nginx-deployment-cc4b758d6-rb7jr 0m 5Mi

nginx-deployment-cc4b758d6-t6b9m 0m 5Mi

srs-deploy-68f79458dc-7jt6l 60m 12Mi

2. Deploy the Dashboard

mkdir -p /home/yaml/dashboard && cd /home/yaml/dashboard

# Download
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml

# Rename
mv recommended.yaml dashboard-recommended.yaml

If that URL is blocked, an alternative download link:

https://download.csdn.net/download/weixin_44254035/83056768

Edit dashboard-recommended.yaml and change the Service type to NodePort

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort  # added
  ports:
  - port: 443
    targetPort: 8443
    nodePort: 30005  # added
  selector:
    k8s-app: kubernetes-dashboard

kubectl apply -f dashboard-recommended.yaml

Create the ServiceAccount and ClusterRoleBinding

vim auth.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

kubectl apply -f auth.yaml

Check the status

kubectl get po,svc -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/dashboard-metrics-scraper-c45b7869d-vx249 1/1 Running 0 69s 10.244.169.133 k8s-node2 <none> <none>

pod/kubernetes-dashboard-764b4dd7-q7cwl 1/1 Running 0 71s 10.244.169.132 k8s-node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dashboard-metrics-scraper ClusterIP 10.104.248.183 <none> 8000/TCP 71s k8s-app=dashboard-metrics-scraper

service/kubernetes-dashboard NodePort 10.103.213.23 <none> 443:30005/TCP 75s k8s-app=kubernetes-dashboard

Get the token needed to access the Kubernetes Dashboard

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-6gzhs

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 6977202e-a4b9-47b6-8a5b-428fc29f44b5

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Im1Kc3J3Vi03VEE3Q1Z4RWZ4U1lybHhkdFEzMlBIYUlQUzd5WkZ6V2I0SFkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTZnemhzIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2OTc3MjAyZS1hNGI5LTQ3YjYtOGE1Yi00MjhmYzI5ZjQ0YjUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.g0AqarDbfOukXv-B9w8U8e-I1RhQTq6C2Z96Ae_6c4OpwJgyTL8yz3MwoEYv3HoGsXQgxGeEWn7lyBe4xIdOBSwQ__u9TRwFr4CLLVlJQCEhSwJUnys3aAg1nbQztk8IJxprl8blcYFwDrumvwVF8gDgJzBU6CD4d_zAk9xXW7tGXFKoZuNww_v4K2YNQUXAIJ4bSunDAJJ5sTtdvgaZty_0lXwgcUzdSxpPDOtkCAAlUt0cPXJZiv-lrRAllrlloTv0Cip50s9MagaHrgkuzmPPkZuXJ-y9XUJuP0D_QoAAJVCynTfgCgTNTksuOilA23NnHT5f60xdQYNrT3ophA
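The token shown above is a JWT, so its middle section can be decoded to confirm which service account it belongs to before pasting it anywhere. The following is a minimal sketch that assumes the usual three-part `header.payload.signature` layout with base64url encoding; `jwt_payload` is an illustrative helper name, and it does not verify the signature.

```shell
#!/bin/sh
# Sketch: print the decoded payload of a JWT (no signature verification).
# jwt_payload is an illustrative name, not an existing tool.

jwt_payload() {
    # Take the second dot-separated field and map base64url to base64.
    payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
    # Restore the padding that base64url omits.
    case $(( ${#payload} % 4 )) in
        2) payload="${payload}==" ;;
        3) payload="${payload}=" ;;
    esac
    printf '%s' "$payload" | base64 -d
}
```

Calling `jwt_payload "<token>"` on a service account token prints a JSON document; for the token in this article it should name the admin-user service account in the kubernetes-dashboard namespace.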

Open in a browser

https://192.168.1.223:30005

Paste the copied token to log in

Next, log in with a kubeconfig file

Get the token

kubectl get secret -n kubernetes-dashboard

NAME TYPE DATA AGE

admin-user-token-6gzhs kubernetes.io/service-account-token 3 6m47s

default-token-df4j6 kubernetes.io/service-account-token 3 7m5s

kubernetes-dashboard-certs Opaque 0 7m4s

kubernetes-dashboard-csrf Opaque 1 7m4s

kubernetes-dashboard-key-holder Opaque 2 7m3s

kubernetes-dashboard-token-xn9kp kubernetes.io/service-account-token 3 7m6s

Use the admin-user-token-** secret

DASH_TOKEN=$(kubectl -n kubernetes-dashboard get secret admin-user-token-6gzhs -o jsonpath='{.data.token}' | base64 -d)

Create the kubeconfig file

cd /etc/kubernetes/pki

Set the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=./ca.crt --server="https://192.168.1.223:16443" --embed-certs=true --kubeconfig=/root/dashboard-admin.conf

Set the user entry

kubectl config set-credentials dashboard-admin --token="$DASH_TOKEN" --kubeconfig=/root/dashboard-admin.conf

Set the context entry

kubectl config set-context dashboard-admin@kubernetes --cluster=kubernetes --user=dashboard-admin --kubeconfig=/root/dashboard-admin.conf

Set the current context

kubectl config use-context dashboard-admin@kubernetes --kubeconfig=/root/dashboard-admin.conf

Download the file

sz /root/dashboard-admin.conf