Reference articles:

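Now that we've covered some basics, let's build an ELK cluster and simulate a realistic business scenario: collecting the logs of a load-balanced setup.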

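Environment

Windows 10 with 8 GB of RAM (a bit tight: memory usage was in the red the whole time, but it still works)

WSL2 (Windows Subsystem for Linux)

Ubuntu 20.04

Docker Desktop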

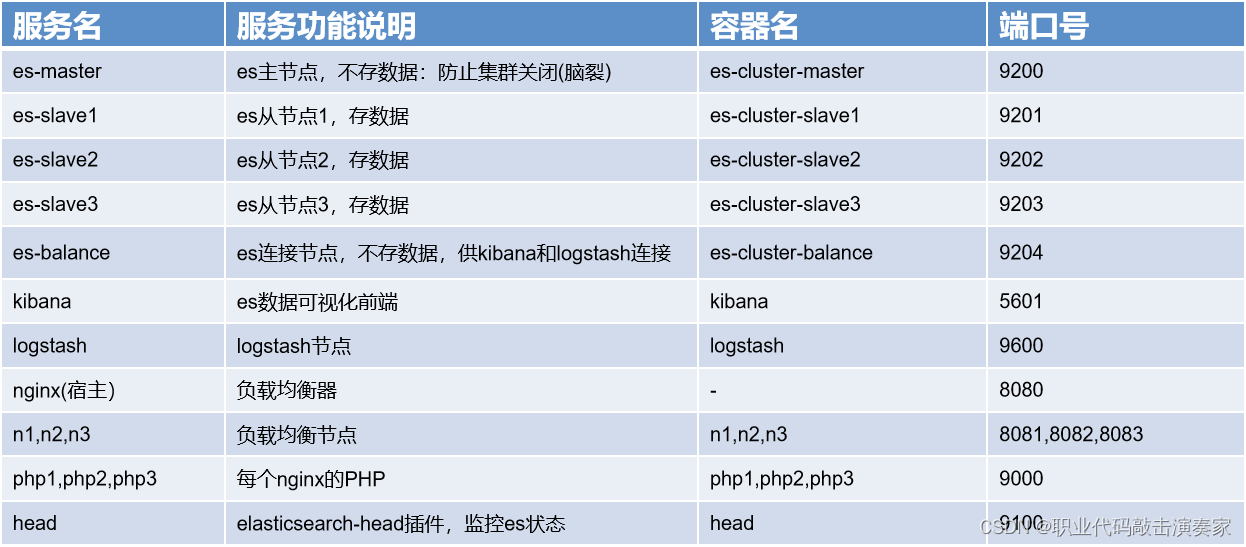

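Installing the ELK cluster and the nginx load-balancing nodes

I followed the expert's article referenced above, with some adjustments (I dropped the Kafka part because I couldn't get a handle on it).

Required services and notes

Note: here ELK is installed with docker-compose, the host nginx is installed inside WSL2 with apt install nginx, and everything else runs in Docker. You can also install each service on its own without docker-compose, which is just a container orchestration tool.

Simulating nginx load balancing

Create a folder in your WSL2 home directory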

mkdir nginx

cd nginx

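Create the following directories and files (n1, n2 and n3 are the data directories of the three nodes)

Note: don't create the log files (ending in .log) yet; they are generated by the requests made later. The contents of the conf folder can be copied out of the configuration directory of any temporary nginx container.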
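To avoid creating the nine directories by hand, a small shell loop can build the layout. This is a sketch that assumes the conf/, logs/ and www/ subdirectory names used in the docker run commands below; BASE is a hypothetical variable that defaults to ~/nginx (the author's path is /home/bcm/nginx).

```shell
#!/usr/bin/env bash
# Build the per-node layout used in this article:
#   nX/conf -> nginx config, nX/logs -> nginx logs, nX/www -> site root (PHP logs go in www/logs)
# BASE is a hypothetical setting; it defaults to ~/nginx.
BASE="${BASE:-$HOME/nginx}"

for i in 1 2 3; do
  mkdir -p "$BASE/n$i/conf" "$BASE/n$i/logs" "$BASE/n$i/www"
done

# Show what was created.
ls -d "$BASE"/n*/*
```

The conf folders can then be seeded from a throwaway container, for example `docker run -d --name tmp-nginx nginx`, then `docker cp tmp-nginx:/etc/nginx/. ~/nginx/n1/conf/` (repeated for n2 and n3), and finally `docker rm -f tmp-nginx`; the name tmp-nginx is only an illustration.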

Creating the PHP and nginx containers

Create a Docker network with a fixed subnet so containers can get static IPs

docker network create --subnet=172.20.0.0/16 webnet

Install and run php-fpm with Docker

docker run --name php1 --net webnet --ip 172.20.0.11 -v /home/bcm/nginx/n1/www:/var/www/html:rw --privileged=true -d php:7.4.3-fpm

docker run --name php2 --net webnet --ip 172.20.0.12 -v /home/bcm/nginx/n2/www:/var/www/html:rw --privileged=true -d php:7.4.3-fpm

docker run --name php3 --net webnet --ip 172.20.0.13 -v /home/bcm/nginx/n3/www:/var/www/html:rw --privileged=true -d php:7.4.3-fpm

Create the three nginx containers

docker run -d --name n1 -p 8081:80 --net webnet -v /home/bcm/nginx/n1/conf:/etc/nginx -v /home/bcm/nginx/n1/logs:/var/log/nginx -v /home/bcm/nginx/n1/www:/usr/share/nginx/html nginx

docker run -d --name n2 -p 8082:80 --net webnet -v /home/bcm/nginx/n2/conf:/etc/nginx -v /home/bcm/nginx/n2/logs:/var/log/nginx -v /home/bcm/nginx/n2/www:/usr/share/nginx/html nginx

docker run -d --name n3 -p 8083:80 --net webnet -v /home/bcm/nginx/n3/conf:/etc/nginx -v /home/bcm/nginx/n3/logs:/var/log/nginx -v /home/bcm/nginx/n3/www:/usr/share/nginx/html nginx

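Edit each container's configuration file, adding the following location block inside the existing server block: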

vim n1/conf/conf.d/default.conf

location ~ \.php$ {
    root /var/www/html/;
    include fastcgi_params;
    fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    fastcgi_pass 172.20.0.11:9000;
    fastcgi_index index.php;
}

vim n2/conf/conf.d/default.conf

location ~ \.php$ {
    root /var/www/html/;
    include fastcgi_params;
    fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    fastcgi_pass 172.20.0.12:9000;
    fastcgi_index index.php;
}

vim n3/conf/conf.d/default.conf

location ~ \.php$ {
    root /var/www/html/;
    include fastcgi_params;
    fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    fastcgi_pass 172.20.0.13:9000;
    fastcgi_index index.php;
}
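The three blocks differ only in the fastcgi_pass address, which follows the fixed IPs given to php1, php2 and php3 (172.20.0.11 to 172.20.0.13). A sketch of a shell function that prints the block for node N; the name php_location is hypothetical.

```shell
#!/usr/bin/env bash
# Print the PHP location block for nginx node N (1, 2 or 3).
# Node N forwards PHP requests to container phpN at 172.20.0.1N:9000 on the webnet network.
php_location() {
  local n="$1"
  # Unquoted heredoc: \$ keeps nginx variables literal, ${n} is expanded by bash.
  cat <<EOF
location ~ \.php\$ {
    root /var/www/html/;
    include fastcgi_params;
    fastcgi_param SCRIPT_FILENAME \$document_root\$fastcgi_script_name;
    fastcgi_pass 172.20.0.1${n}:9000;
    fastcgi_index index.php;
}
EOF
}

php_location 2
```

For example, paste the output of `php_location 2` inside the server block of n2/conf/conf.d/default.conf and reload that container with `docker exec n2 nginx -s reload`.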

Install nginx on the host (inside WSL2)

sudo apt install nginx

Edit the configuration file

sudo vim /etc/nginx/conf.d/default.conf

upstream docker-nginx {
    server 127.0.0.1:8081 weight=10;
    server 127.0.0.1:8082 weight=10;
    server 127.0.0.1:8083 weight=10;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://docker-nginx;
    }
}
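With weight=10 on all three servers the weights cancel out, so requests are spread in plain round-robin order. The bash sketch below models nginx's smooth weighted round-robin selection: on each pick every peer's current weight grows by its configured weight, the largest one wins, and the winner's current weight is reduced by the total. It is a simplified model that assumes all peers are healthy and ignores max_fails, backup servers and the fact that each nginx worker process keeps its own state.

```shell
#!/usr/bin/env bash
# Simplified model of nginx's smooth weighted round-robin for the upstream above.
# Assumes every peer is up; failure handling is not modelled.
peers=(127.0.0.1:8081 127.0.0.1:8082 127.0.0.1:8083)
weights=(10 10 10)
current=(0 0 0)

pick_peer() {
  local total=0 best=0 i
  for i in "${!peers[@]}"; do
    current[i]=$(( current[i] + weights[i] ))   # every peer gains its weight
    total=$(( total + weights[i] ))
    if (( current[i] > current[best] )); then   # first strictly largest wins ties
      best=$i
    fi
  done
  current[best]=$(( current[best] - total ))    # the winner pays back the total
  echo "${peers[best]}"
}

# Called in the current shell (not via $(...)) so the state carries over between picks.
for _ in 1 2 3 4 5 6; do pick_peer; done
```

With weights=(5 1 1) the same function yields 8081, 8081, 8082, 8081, 8083, 8081, 8081: the heavier server is interleaved with the others rather than receiving its share in one burst.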

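Check the configuration: sudo nginx -t

Start the nginx service: sudo service nginx start (use sudo service nginx reload if it is already running)

Create an index.php file in the www directory of n1, n2 and n3: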

<?php
$url = "https://www.yiketianqi.com/free/day?appid=&appsecret=&unescape=1";
$request = curlPost($url);
$logStr = date('Y-m-d H:i:s').'|'.$url.'|'.$request.PHP_EOL;
if(writeLog($logStr)){
    echo json_encode([
        "code"=>0,
        "server"=>"n1",
        "ip"=>getip(),
        "msg" =>$logStr
    ]);
}else{
    echo json_encode([
        "code"=>-1,
        "server"=>"n1",
        "ip"=>getip(),
        "msg"=> "failed!"
    ]);
}

function writeLog($logStr, $file = '', $dir = ''){
    if(empty($dir)){
        $dir = __DIR__."/logs";
    }
    $ret = false;
    if(!is_dir($dir)) {
        @mkdir($dir, 0777, true);
        @chmod($dir, 0777);
    }
    if(is_dir($dir)){
        if (empty($file)) {
            $fileName = $dir . '/' . 'request-result-'. date('Ymd') .'.log';
        } else {
            $fileName = $dir . '/' . $file;
        }
        if(!is_file($fileName)) {
            $ret = @file_put_contents($fileName, $logStr, FILE_APPEND);
            @chmod($fileName, 0777);
        } else {
            $ret = @file_put_contents($fileName, $logStr, FILE_APPEND);
        }
    }
    if($ret){
        return true;
    }
    return false;
}

function curlPost($url,$params=[]){
    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    // Set the timeout
    curl_setopt($ch, CURLOPT_TIMEOUT, 15);
    // Send the request as POST
    curl_setopt($ch, CURLOPT_POST, 1);
    // Attach the POST parameters, if any
    if(!empty($params)){
        curl_setopt($ch, CURLOPT_POSTFIELDS, http_build_query($params));
    }
    curl_setopt($ch, CURLOPT_HTTPHEADER, array('Content-Type:'.'application/json; charset=UTF-8'));
    $output = curl_exec($ch);
    curl_close($ch);
    return $output;
}

function getip() {
    static $ip = '';
    $ip = $_SERVER['REMOTE_ADDR'];
    if(isset($_SERVER['HTTP_CDN_SRC_IP'])) {
        $ip = $_SERVER['HTTP_CDN_SRC_IP'];
    } elseif (isset($_SERVER['HTTP_CLIENT_IP']) && preg_match('/^([0-9]{1,3}\.){3}[0-9]{1,3}$/', $_SERVER['HTTP_CLIENT_IP'])) {
        $ip = $_SERVER['HTTP_CLIENT_IP'];
    } elseif(isset($_SERVER['HTTP_X_FORWARDED_FOR']) AND preg_match_all('#\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}#s', $_SERVER['HTTP_X_FORWARDED_FOR'], $matches)) {
        foreach ($matches[0] AS $xip) {
            if (!preg_match('#^(10|172\.16|192\.168)\.#', $xip)) {
                $ip = $xip;
                break;
            }
        }
    }
    return $ip;
}

In the n2 and n3 copies, change "n1" in the script to "n2" and "n3" respectively.
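The edit for n2 and n3 can also be scripted. A sketch, assuming n1/www/index.php already exists and that "n1" (with quotes) appears in the script only as the server label, which holds for the script above; clone_index is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Copy n1's index.php to n2 and n3, replacing the quoted "n1" server label
# so each response shows which node served it.
# Arg: base directory that contains n1/, n2/ and n3/.
clone_index() {
  local base="$1" i
  for i in 2 3; do
    sed "s/\"n1\"/\"n$i\"/g" "$base/n1/www/index.php" > "$base/n$i/www/index.php"
  done
}
```

Run it as `clone_index ~/nginx` (or `clone_index /home/bcm/nginx` with the author's paths).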

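Open http://127.0.0.1:8080 in a browser and refresh a few times; log files will then appear in the corresponding locations under n1, n2 and n3, ready for Logstash to collect later. (If the nginx index directive does not list index.php, request http://127.0.0.1:8080/index.php instead.)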
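To confirm the load balancing from the command line, the JSON responses can be tallied by their server field. A sketch; count_servers is a hypothetical helper, and it assumes the compact json_encode output of the script above (no spaces around the colon).

```shell
#!/usr/bin/env bash
# Count responses per backend from index.php's JSON output read on stdin.
count_servers() {
  grep -o '"server":"n[0-9]*"' | sort | uniq -c
}
```

For example: `for i in $(seq 1 9); do curl -s http://127.0.0.1:8080/index.php; echo; done | count_servers`. With equal weights the counts should be roughly even, though not necessarily exactly 3/3/3, because each nginx worker process keeps its own round-robin position.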

Installing ELK with docker-compose

Create a folder in your WSL2 home directory

mkdir elk-v7

cd elk-v7

Create the following folders and files

Edit the .env file

vim .env

# Software version (does not work with 8.0 or later; tested)
VERSION=7.17.1
# Host address (look it up with the ip addr command)
HOST_IP=172.31.127.211
# elasticsearch's JVM args setting, for every elasticsearch instance.
# -Xms is the initial heap size es allocates at bootstrap
# -Xmx is the biggest memory es can use.
ES_JVM_OPTS=-Xms256m -Xmx256m
# es-master data mount directory
ES_MASTER_DATA_DIR=./es-data/es-master-data
# es-slave1 data mount directory
ES_SLAVE1_DATA_DIR=./es-data/es-slave1-data
# es-slave2 data mount directory
ES_SLAVE2_DATA_DIR=./es-data/es-slave2-data
# es-slave3 data mount directory
ES_SLAVE3_DATA_DIR=./es-data/es-slave3-data
# es-balance data mount directory
ES_BALANCE_DATA_DIR=./es-data/es-balance-data
# Logstash config directory
LOGSTASH_CONFIG_DIR=./logstash/config
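HOST_IP (like the Elasticsearch addresses in the Logstash files) has to be the WSL2 address rather than 127.0.0.1, because 127.0.0.1 inside a container refers to the container itself. A sketch for pulling that address out of `ip addr` output; first_ipv4 is a hypothetical helper, and eth0 as the WSL2 interface name is an assumption.

```shell
#!/usr/bin/env bash
# Print the first IPv4 address found in `ip -4 addr show` output on stdin.
# "inet " (with the space) skips inet6 lines; the /prefix length is stripped.
first_ipv4() {
  awk '/inet / { split($2, a, "/"); print a[1]; exit }'
}
```

For example `ip -4 addr show eth0 | first_ipv4`, or to write it straight into .env: `sed -i "s/^HOST_IP=.*/HOST_IP=$(ip -4 addr show eth0 | first_ipv4)/" .env`.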

Edit the logstash.conf file

vim logstash/config/logstash.conf

input {
    file {
        path => "/home/bcm/nginx/n1/logs/access.log"
        type => "n1"
        start_position => "beginning"
    }
    file {
        path => "/home/bcm/nginx/n2/logs/access.log"
        type => "n2"
        start_position => "beginning"
    }
    file {
        path => "/home/bcm/nginx/n3/logs/access.log"
        type => "n3"
        start_position => "beginning"
    }
    file {
        path => "/home/bcm/nginx/n1/www/logs/request-result-*.log"
        type => "request1"
        start_position => "beginning"
    }
    file {
        path => "/home/bcm/nginx/n2/www/logs/request-result-*.log"
        type => "request2"
        start_position => "beginning"
    }
    file {
        path => "/home/bcm/nginx/n3/www/logs/request-result-*.log"
        type => "request3"
        start_position => "beginning"
    }
}

output {
    if [type] in ["n1","n2","n3"] {
        elasticsearch {
            action => "index"
            hosts => "172.25.235.46:9204"
            index => "nginx-access-%{+YYYYMMdd}"
        }
    }
    if [type] in ["request1","request2","request3"] {
        elasticsearch {
            action => "index"
            hosts => "172.25.235.46:9204"
            index => "request-result-%{+YYYYMMdd}"
        }
    }
}

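Note: in production you would normally run one Logstash per server. This is only a simulation, so a single Logstash instance collects the log files from all the different directories.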
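The index setting nginx-access-%{+YYYYMMdd} creates one index per day. Logstash formats that date from the event's @timestamp, which is kept in UTC, and since this pipeline has no date filter, @timestamp is the time Logstash read the line rather than the time written in the log. On a machine set to UTC+8 the daily index therefore switches at 08:00 local time. A sketch that mirrors the naming with GNU date; index_name is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Mirror Logstash's "<prefix>-%{+YYYYMMdd}" index naming.
# The date is taken in UTC, like Logstash's @timestamp.
# Args: prefix, epoch seconds (defaults to now). Needs GNU date for -d @N.
index_name() {
  local prefix="$1" ts="${2:-$(date +%s)}"
  echo "${prefix}-$(date -u -d "@$ts" +%Y%m%d)"
}

index_name nginx-access
```

For example, epoch 1700000000 is 2023-11-14 22:13:20 UTC (06:13 on 2023-11-15 in UTC+8), so `index_name request-result 1700000000` gives request-result-20231114. The indices that actually exist can be listed with `curl -s 'http://127.0.0.1:9204/_cat/indices?v'`.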

Edit the logstash.yml file

vim logstash/config/logstash.yml

http.host: 0.0.0.0
xpack.monitoring.elasticsearch.hosts:
  - http://172.25.235.46:9204
xpack.monitoring.enabled: true

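The IP address comes from the ip addr command, and the port is the es-balance service's published port (9204). It must be the same address as HOST_IP in .env. The WSL2 address can change after a restart, so check it again and update .env, logstash.conf and logstash.yml when it does.

Edit the docker-compose.yml file

vim docker-compose.yml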

version: "3"
services:
  es-master:
    image: elasticsearch:${VERSION}
    container_name: es-cluster-master
    environment:
      - cluster.name=es-cluster # cluster name
      - node.name=es-master # node name
      - cluster.initial_master_nodes=es-master # initial master node used to bootstrap the cluster
      - node.master=true # master-eligible node
      - node.data=false # stores no data
      - http.cors.enabled=true # enable CORS (needed by elasticsearch-head)
      - http.cors.allow-origin=*
      - bootstrap.memory_lock=false # do not lock JVM memory
      - ES_JAVA_OPTS=${ES_JVM_OPTS} # JVM heap settings
    ports:
      - "9200:9200"
    expose:
      - "9200"
      - "9300"
    volumes:
      - ${ES_MASTER_DATA_DIR}:/usr/share/elasticsearch/data:rw
  es-slave1:
    image: elasticsearch:${VERSION}
    container_name: es-cluster-slave1
    depends_on:
      - es-master
    environment:
      - cluster.name=es-cluster
      - node.name=slave1
      - node.master=false
      - node.data=true
      - cluster.initial_master_nodes=es-master
      - discovery.seed_hosts=es-master
      - bootstrap.memory_lock=false
      - ES_JAVA_OPTS=${ES_JVM_OPTS}
    ports:
      - "9201:9200"
    expose:
      - "9300"
    volumes:
      - ${ES_SLAVE1_DATA_DIR}:/usr/share/elasticsearch/data:rw
  es-slave2:
    image: elasticsearch:${VERSION}
    container_name: es-cluster-slave2
    depends_on:
      - es-master
    environment:
      - cluster.name=es-cluster
      - node.name=slave2
      - node.master=false
      - node.data=true
      - cluster.initial_master_nodes=es-master
      - discovery.seed_hosts=es-master
      - bootstrap.memory_lock=false
      - ES_JAVA_OPTS=${ES_JVM_OPTS}
    ports:
      - "9202:9200"
    expose:
      - "9300"
    volumes:
      - ${ES_SLAVE2_DATA_DIR}:/usr/share/elasticsearch/data:rw
  es-slave3:
    image: elasticsearch:${VERSION}
    container_name: es-cluster-slave3
    depends_on:
      - es-master
    environment:
      - cluster.name=es-cluster
      - node.name=slave3
      - node.master=false
      - node.data=true
      - cluster.initial_master_nodes=es-master
      - discovery.seed_hosts=es-master
      - bootstrap.memory_lock=false
      - ES_JAVA_OPTS=${ES_JVM_OPTS}
    ports:
      - "9203:9200"
    expose:
      - "9300"
    volumes:
      - ${ES_SLAVE3_DATA_DIR}:/usr/share/elasticsearch/data:rw
  es-balance:
    image: elasticsearch:${VERSION}
    container_name: es-cluster-balance
    depends_on:
      - es-master
    environment:
      - cluster.name=es-cluster
      - node.name=balance
      - node.master=false
      - node.data=false
      - cluster.initial_master_nodes=es-master
      - discovery.seed_hosts=es-master
      - bootstrap.memory_lock=false
      - ES_JAVA_OPTS=${ES_JVM_OPTS}
    ports:
      - "9204:9200"
    expose:
      - "9300"
    volumes:
      - ${ES_BALANCE_DATA_DIR}:/usr/share/elasticsearch/data:rw
  kibana:
    image: kibana:${VERSION}
    container_name: kibana
    depends_on:
      - es-master
    environment:
      - ELASTICSEARCH_HOSTS=http://${HOST_IP}:9204
      - I18N_LOCALE=zh-CN
    ports:
      - "5601:5601"
  logstash:
    image: logstash:${VERSION}
    container_name: logstash
    ports:
      - "9600:9600"
    environment:
      - XPACK_MONITORING_ENABLED=true
    volumes:
      - ${LOGSTASH_CONFIG_DIR}/logstash.conf:/usr/share/logstash/pipeline/logstash.conf:rw
      - ${LOGSTASH_CONFIG_DIR}/logstash.yml:/usr/share/logstash/config/logstash.yml:rw
      # log directories, mounted at the same paths that logstash.conf reads
      - /home/bcm/nginx/n1/logs:/home/bcm/nginx/n1/logs
      - /home/bcm/nginx/n2/logs:/home/bcm/nginx/n2/logs
      - /home/bcm/nginx/n3/logs:/home/bcm/nginx/n3/logs
      - /home/bcm/nginx/n1/www:/home/bcm/nginx/n1/www
      - /home/bcm/nginx/n2/www:/home/bcm/nginx/n2/www
      - /home/bcm/nginx/n3/www:/home/bcm/nginx/n3/www
    depends_on:
      - es-master
      - es-slave1
      - es-slave2
      - es-slave3
      - es-balance

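One more step is needed before starting. Edit the system config file:

sudo vim /etc/sysctl.conf

and add this line:

vm.max_map_count=262144

After saving, apply it with: sudo sysctl -p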
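Elasticsearch enforces its production bootstrap checks once it binds to a non-loopback address, as these containers do, and refuses to start if vm.max_map_count is below 262144. A small check to run before docker-compose up -d; check_max_map_count is a hypothetical helper that reads /proc/sys/vm/max_map_count unless a value is passed in.

```shell
#!/usr/bin/env bash
# Verify vm.max_map_count meets Elasticsearch's minimum of 262144.
# Optional arg: a value to check instead of the live kernel setting.
check_max_map_count() {
  local current="${1:-$(cat /proc/sys/vm/max_map_count)}"
  if (( current >= 262144 )); then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current (need >= 262144)" >&2
    return 1
  fi
}
```

Running `check_max_map_count` with no argument after `sudo sysctl -p` should print the ok line.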

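docker-compose commands:

# Start
docker-compose up -d
# Stop and remove the containers
docker-compose down
# Stop
docker-compose stop
# Start the stopped containers again
docker-compose start
# Rebuild and start
docker-compose up -d --build

Install elasticsearch-head (for monitoring the health of the ES cluster)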

docker run -d --name head -p 9100:9100 mobz/elasticsearch-head:5

If everything has started correctly, open http://127.0.0.1:9100 in a browser to monitor the cluster status, and http://127.0.0.1:5601 to open Kibana and view the logs.
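Besides elasticsearch-head, cluster health can be read from the _cluster/health API. A sketch that extracts the status field without jq; health_status is a hypothetical helper, and its sed pattern assumes the compact, single-line JSON that Elasticsearch returns when ?pretty is not used.

```shell
#!/usr/bin/env bash
# Extract "status" (green / yellow / red) from _cluster/health JSON on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}
```

For example, `curl -s http://127.0.0.1:9200/_cluster/health | health_status` should print green once all nodes have joined (yellow means some replica shards are unassigned), and `curl -s 'http://127.0.0.1:9200/_cat/nodes?v'` lists the nodes, five for the compose file above.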

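Notes:

1. Viewing logs in Kibana takes some configuration: go to Stack Management -> Index Patterns -> Create index pattern and add a pattern that matches the indices (for example nginx-access-* or request-result-*), then open Discover to browse the collected logs.

2. Requests to 127.0.0.1:8080 may fail with permission errors. If so, enter each php-fpm container and run chmod -R 777 /var (for example docker exec php1 chmod -R 777 /var) so the PHP script can create its logs directory under /var/www/html.

Installing a single-node ELK (8.1.0) with Docker

1. Pull the images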

docker pull elasticsearch:8.1.0

docker pull kibana:8.1.0

docker pull logstash:8.1.0

2. Install Elasticsearch

docker run -d --name es -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" -e ES_JAVA_OPTS="-Xms84m -Xmx512m" elasticsearch:8.1.0

Copy the configuration file out of the container

docker cp es:/usr/share/elasticsearch/config/elasticsearch.yml .

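Edit the configuration file (this turns off the 8.x security features, which is only acceptable for a local experiment)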

vim elasticsearch.yml

cluster.name: "docker-cluster"
network.host: 0.0.0.0

xpack.security.enabled: false
xpack.security.enrollment.enabled: false

xpack.security.http.ssl:
  enabled: false
  keystore.path: certs/http.p12

xpack.security.transport.ssl:
  enabled: false
  verification_mode: certificate
  keystore.path: certs/transport.p12
  truststore.path: certs/transport.p12

Copy the edited file back into the container, overwriting the original

docker cp elasticsearch.yml es:/usr/share/elasticsearch/config/elasticsearch.yml

Restart the container: docker restart es
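Elasticsearch (and Kibana, after its own restart later on) takes a while to come back up, so any scripted step that follows a restart should wait for it. A generic retry sketch; retry is a hypothetical helper, and the attempt count and delay in the example are arbitrary.

```shell
#!/usr/bin/env bash
# Re-run a command until it succeeds or the attempts run out.
# Args: attempts, delay in seconds between attempts, then the command and its args.
retry() {
  local attempts="$1" delay="$2" n
  shift 2
  for (( n = 1; n <= attempts; n++ )); do
    "$@" && return 0
    if (( n < attempts )); then sleep "$delay"; fi
  done
  return 1
}
```

For example: `docker restart es && retry 30 2 curl -sf http://127.0.0.1:9200 >/dev/null && echo ready`.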

3. Install Kibana

docker run -d --name kibana -p 5601:5601 kibana:8.1.0

Copy the configuration file out of the container

docker cp kibana:/usr/share/kibana/config/kibana.yml .

vim kibana.yml

server.host: "0.0.0.0"

server.shutdownTimeout: "5s"

elasticsearch.hosts: [ "http://172.20.60.25:9200" ] # Elasticsearch address

monitoring.ui.container.elasticsearch.enabled: true

i18n.locale: "zh-CN"

docker cp kibana.yml kibana:/usr/share/kibana/config/kibana.yml

Restart the container: docker restart kibana

4. Install Logstash

Create the Logstash settings file

vim logstash.yml

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: [ "http://172.20.60.25:9200" ] # ES address from ip addr; not 127.0.0.1, which inside the container means the container itself

Create the pipeline configuration file

vim logstash.conf

input {
    file {
        path => "/home/bcm/nginx/n1/logs/access.log"
        type => "n1"
        start_position => "beginning"
    }
    file {
        path => "/home/bcm/nginx/n2/logs/access.log"
        type => "n2"
        start_position => "beginning"
    }
    file {
        path => "/home/bcm/nginx/n3/logs/access.log"
        type => "n3"
        start_position => "beginning"
    }
}

output {
    if [type] in ["n1","n2","n3"] {
        elasticsearch {
            action => "index"
            hosts => "172.20.60.25:9200"
            index => "nginx-access-%{+YYYYMMdd}"
        }
    }
}

docker run -d --name logstash -p 9600:9600 -v /home/bcm/nginx/n1/logs:/home/bcm/nginx/n1/logs -v /home/bcm/nginx/n2/logs:/home/bcm/nginx/n2/logs -v /home/bcm/nginx/n3/logs:/home/bcm/nginx/n3/logs -v $PWD/logstash.yml:/usr/share/logstash/config/logstash.yml -v $PWD/logstash.conf:/usr/share/logstash/pipeline/logstash.conf -v /home/bcm/nginx/n1/www:/home/bcm/nginx/n1/www -v /home/bcm/nginx/n2/www:/home/bcm/nginx/n2/www -v /home/bcm/nginx/n3/www:/home/bcm/nginx/n3/www logstash:8.1.0
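The -v options mount each host directory at the same path inside the container, so the path => entries in logstash.conf point to the same files on both sides. A sketch that lists those entries and reports any whose directory is missing; check_pipeline_paths is a hypothetical helper, and only the directory part is checked because paths such as request-result-*.log are globs.

```shell
#!/usr/bin/env bash
# List the path => "..." entries of a Logstash pipeline file and check
# that the directory part of each exists. Returns 1 if any is missing.
check_pipeline_paths() {
  local conf="$1" p dir status=0
  while IFS= read -r p; do
    dir=$(dirname "$p")
    if [ -d "$dir" ]; then
      echo "ok      $p"
    else
      echo "missing $dir" >&2
      status=1
    fi
  done < <(sed -n 's/.*path[[:space:]]*=>[[:space:]]*"\([^"]*\)".*/\1/p' "$conf")
  return "$status"
}
```

For example, run `check_pipeline_paths logstash.conf` on the host before starting the container. The www/logs directories only appear after the first request to index.php; inside the container, `docker exec logstash ls /home/bcm/nginx/n1/logs` shows whether a mount is visible.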

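That's it for now. This experiment is only a simulation and is not suitable for a real production environment as-is; production use would need some modifications.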