General (all nodes)

Install basic packages

yum install bash-comp* vim net-tools wget -y

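Set the hostname on each node so that it matches the node's entry in /etc/hosts (node101, node102, node103). For example, on the second node:

hostnamectl set-hostname node102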

Set up host resolution: edit /etc/hosts and add an entry for each node

[root@node1 ~]# vim /etc/hosts
10.168.1.101 node101
10.168.1.102 node102
10.168.1.103 node103
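Editing /etc/hosts by hand on every node is easy to get wrong, so the same entries could also be added with a small idempotent script. The following is only a sketch: `add_host_entry` and `HOSTS_FILE` are hypothetical names, and by default the demo writes to a scratch file rather than the real /etc/hosts.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless a non-comment line already maps NAME.
add_host_entry() {
    ahe_file=$1; ahe_ip=$2; ahe_name=$3
    if ! awk -v n="$ahe_name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$ahe_file" 2>/dev/null; then
        printf '%s %s\n' "$ahe_ip" "$ahe_name" >> "$ahe_file"
    fi
}

# Demo on a scratch file; run as root with HOSTS_FILE=/etc/hosts to apply for real.
HOSTS_FILE=${HOSTS_FILE:-$(mktemp)}
add_host_entry "$HOSTS_FILE" 10.168.1.101 node101
add_host_entry "$HOSTS_FILE" 10.168.1.102 node102
add_host_entry "$HOSTS_FILE" 10.168.1.103 node103
cat "$HOSTS_FILE"
```

Running the script a second time leaves the file unchanged, which makes it safe to reuse on every node.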

Disable the firewall, SELinux, and swap

[root@node1 ~]# systemctl stop firewalld
[root@node1 ~]# systemctl disable firewalld
[root@node1 ~]# setenforce 0
[root@node1 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
[root@node1 ~]# swapoff -a
[root@node1 ~]# sed -i 's/.*swap.*/#&/' /etc/fstab
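One detail of the fstab edit above: the pattern `.*swap.*` also matches lines that are already commented out, so running it twice adds a second `#`. A slightly more careful variant, shown here as a sketch on a sample file (the helper name `comment_swap_lines` and the sample entries are made up for illustration), skips lines that already start with `#`.

```shell
#!/bin/sh
# comment_swap_lines FILE (hypothetical helper)
# Prints FILE with every active swap entry commented out; lines that are
# already comments are left untouched.
comment_swap_lines() {
    sed -e '/^[[:space:]]*#/!s/.*swap.*/#&/' "$1"
}

# Demo on a sample fstab, not the real /etc/fstab.
sample=$(mktemp)
cat > "$sample" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
#/dev/sdb1 swap swap defaults 0 0
EOF
comment_swap_lines "$sample"
```

After `swapoff -a`, `/proc/swaps` should list only its header line; that is one way to confirm swap is really off.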

Configure kernel parameters so that bridged IPv4 traffic is passed to the iptables chains

[root@node1 ~]# cat >> /etc/sysctl.d/k8s.conf <<EOF
> net.bridge.bridge-nf-call-ip6tables = 1
> net.bridge.bridge-nf-call-iptables = 1
> EOF
[root@node1 ~]# sysctl --system
* Applying /usr/lib/sysctl.d/00-system.conf ...
* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...
kernel.yama.ptrace_scope = 0
* Applying /usr/lib/sysctl.d/50-default.conf ...
kernel.sysrq = 16
kernel.core_uses_pid = 1
net.ipv4.conf.default.rp_filter = 1
net.ipv4.conf.all.rp_filter = 1
net.ipv4.conf.default.accept_source_route = 0
net.ipv4.conf.all.accept_source_route = 0
net.ipv4.conf.default.promote_secondaries = 1
net.ipv4.conf.all.promote_secondaries = 1
fs.protected_hardlinks = 1
fs.protected_symlinks = 1
* Applying /etc/sysctl.d/99-sysctl.conf ...
* Applying /etc/sysctl.d/k8s.conf ...
* Applying /etc/sysctl.conf ...
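In the output above, no `net.bridge.*` values are echoed after k8s.conf is applied. On many kernels these keys exist only once the `br_netfilter` module is loaded, so that may be the cause. A small check, sketched below with a hypothetical helper `bridge_nf_state`, reports whether the key is present; the directory argument exists only so the function can be tried against a scratch directory.

```shell
#!/bin/sh
# bridge_nf_state [DIR] (hypothetical helper)
# Reports the current bridge-nf-call-iptables value, or explains that the
# key is missing. DIR defaults to /proc/sys/net/bridge.
bridge_nf_state() {
    bns_dir=${1:-/proc/sys/net/bridge}
    if [ -r "$bns_dir/bridge-nf-call-iptables" ]; then
        printf 'bridge-nf-call-iptables=%s\n' "$(cat "$bns_dir/bridge-nf-call-iptables")"
    else
        echo "missing: try 'modprobe br_netfilter', then 'sysctl --system'"
        return 1
    fi
}

bridge_nf_state || true
```

If the module turns out to be needed, listing `br_netfilter` in a file under /etc/modules-load.d/ is the usual systemd way to load it at boot.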

Configure the Aliyun yum repositories for Kubernetes and docker-ce

[root@master ~]# cat >>/etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
[root@node1 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
[root@node1 ~]# yum repolist

Install docker-ce

Remove the Docker packages that are currently installed

[root@node1 ~]# yum -y remove docker docker-common docker-selinux docker-engine

Install docker-ce

[root@node1 ~]# yum install docker-ce -y
[root@node1 ~]# systemctl start docker
[root@node1 ~]# systemctl enable docker

Install kubelet, kubeadm, and kubectl

[root@node1 ~]# yum install kubelet kubeadm kubectl -y
[root@node1 ~]# systemctl enable kubelet
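The unpinned install above takes whatever version the repository currently offers, while the rest of this guide assumes v1.23.3. One way to keep them aligned is to pin the package versions. The sketch below only prints the command; `pinned_packages` and `K8S_VERSION` are hypothetical names, and it assumes the repository still carries 1.23.3 packages.

```shell
#!/bin/sh
# Version to pin; 1.23.3 matches the images and kubeadm config in this guide.
K8S_VERSION=${K8S_VERSION:-1.23.3}

# pinned_packages VERSION (hypothetical helper)
# Prints yum package specs in name-version form.
pinned_packages() {
    printf 'kubelet-%s kubeadm-%s kubectl-%s\n' "$1" "$1" "$1"
}

# Dry run: print the install command instead of executing it.
echo "yum install -y $(pinned_packages "$K8S_VERSION")"
```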

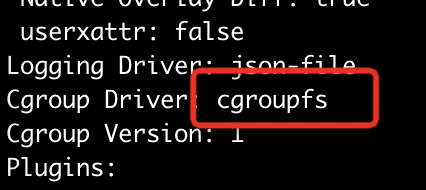

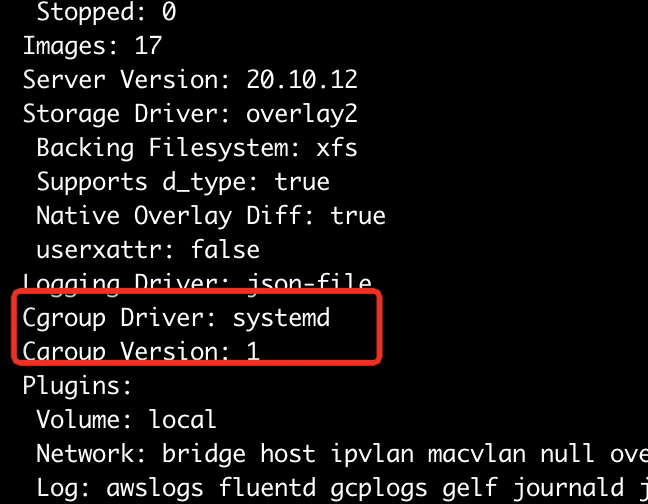

Set Docker's cgroup driver

Check the current driver in the output of docker info, then switch it to systemd:

docker info

$ cat <<EOF >/etc/docker/daemon.json
{
"exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

systemctl restart docker && systemctl enable docker
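The driver matters because kubeadm v1.22 and later configure the kubelet with the systemd cgroup driver by default, and the kubelet and Docker need to agree. After the restart, the active driver can be read back from `docker info`. The following sketch uses a hypothetical helper, `cgroup_driver_from_info`, that extracts the value from the plain-text output.

```shell
#!/bin/sh
# cgroup_driver_from_info (hypothetical helper)
# Reads `docker info` text on stdin and prints the cgroup driver name.
cgroup_driver_from_info() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Only query Docker when the CLI is present; an empty result usually means
# the daemon is not running.
if command -v docker >/dev/null 2>&1; then
    driver=$(docker info 2>/dev/null | cgroup_driver_from_info)
    echo "docker cgroup driver: ${driver:-unknown}"
fi
```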

Download the images on each node

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.3
docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.23.3
docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.23.3
docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.23.3
docker pull registry.aliyuncs.com/google_containers/pause:3.6
docker pull registry.aliyuncs.com/google_containers/etcd:3.5.1-0
docker pull registry.aliyuncs.com/google_containers/coredns:v1.8.6
docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.3 k8s.gcr.io/kube-apiserver:v1.23.3
docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.23.3 k8s.gcr.io/kube-controller-manager:v1.23.3
docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.23.3 k8s.gcr.io/kube-scheduler:v1.23.3
docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.23.3 k8s.gcr.io/kube-proxy:v1.23.3
docker tag registry.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6
docker tag registry.aliyuncs.com/google_containers/etcd:3.5.1-0 k8s.gcr.io/etcd:3.5.1-0
docker tag registry.aliyuncs.com/google_containers/coredns:v1.8.6 k8s.gcr.io/coredns:v1.8.6
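Because every pull and tag follows the same pattern, the list can be generated from a single set of image names, which avoids version typos between the pull and tag lines. This is a sketch: `mirror_commands`, `SRC`, `DST`, and `IMAGES` are hypothetical names, and the function only prints the commands unless its output is piped to a shell.

```shell
#!/bin/sh
SRC=registry.aliyuncs.com/google_containers   # mirror used in this guide
DST=k8s.gcr.io                                # name the guide re-tags to
IMAGES="kube-apiserver:v1.23.3 kube-controller-manager:v1.23.3 \
kube-scheduler:v1.23.3 kube-proxy:v1.23.3 pause:3.6 etcd:3.5.1-0 coredns:v1.8.6"

# mirror_commands (hypothetical helper)
# Prints one "docker pull" and one "docker tag" command per image.
mirror_commands() {
    for img in $IMAGES; do
        echo "docker pull $SRC/$img"
        echo "docker tag $SRC/$img $DST/$img"
    done
}

mirror_commands          # dry run: show what would be executed
# mirror_commands | sh   # uncomment to actually pull and tag
```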

master

kube-vip

Pull the kube-vip image and write a static pod manifest into /etc/kubernetes/manifests, so that Kubernetes automatically deploys a kube-vip pod on each control-plane node.

#!/bin/bash
# Set the VIP address
export VIP=192.168.0.100
export INTERFACE=ens192
ctr image pull docker.io/plndr/kube-vip:0.3.1
ctr run --rm --net-host docker.io/plndr/kube-vip:0.3.1 vip \
    /kube-vip manifest pod \
    --interface $INTERFACE \
    --vip $VIP \
    --controlplane \
    --services \
    --arp \
    --leaderElection | tee /etc/kubernetes/manifests/kube-vip.yaml

kubeadm-conf

Use the virtual IP as the control-plane endpoint: 192.168.0.100:6443

[root@node101 ~]# cat kubeadm-conf/kubeadm-conf.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.23.3
apiServer:
  certSANs:
  - node101
  - node102
  - node103
  - 192.168.0.101
  - 192.168.0.102
  - 192.168.0.103
  - 192.168.0.100
controlPlaneEndpoint: "192.168.0.100:6443"
networking:
  podSubnet: "10.200.0.0/16"
  serviceSubnet: "10.95.0.0/12"

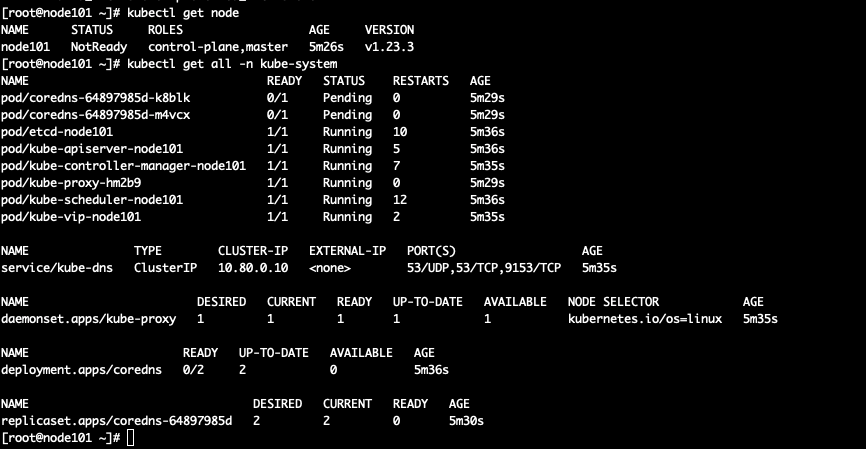

Initialize the first master node

kubeadm init --config=kubeadm-conf/kubeadm-conf.yaml --upload-certs

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.0.100:6443 --token 6yrc7n.8aah1q3pkf2penu5 \
    --discovery-token-ca-cert-hash sha256:7a10a372e5e8fcd6dea3e3017fc2b9cf5f220b513086c884ee9129401eda8783 \
    --control-plane --certificate-key 559f3c60faba1cf0208524bac09852135fc1ae053318fccd581cf3681aceba92

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

  kubeadm join 192.168.0.100:6443 --token 6yrc7n.8aah1q3pkf2penu5 \
    --discovery-token-ca-cert-hash sha256:7a10a372e5e8fcd6dea3e3017fc2b9cf5f220b513086c884ee9129401eda8783

Configure the flannel network

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml
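The flannel manifest ships with a default pod network of 10.244.0.0/16 in its `net-conf.json`, while the kubeadm configuration in this guide sets `podSubnet` to 10.200.0.0/16. The two generally need to agree, so it may be worth rewriting the value before applying the manifest. The sketch below assumes the manifest still stores the range under a `"Network"` key. `set_flannel_network` is a hypothetical helper, and the demo runs on a sample fragment rather than the downloaded file.

```shell
#!/bin/sh
# set_flannel_network FILE CIDR (hypothetical helper)
# Prints FILE with the "Network" value in net-conf.json replaced by CIDR.
set_flannel_network() {
    sed -e "s#\"Network\": \"[^\"]*\"#\"Network\": \"$2\"#" "$1"
}

# Demo on a fragment shaped like the ConfigMap section of the manifest.
sample=$(mktemp)
cat > "$sample" <<'EOF'
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
EOF
set_flannel_network "$sample" 10.200.0.0/16
```

For the real file, the output would be redirected to a new manifest and that file passed to `kubectl apply -f`.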

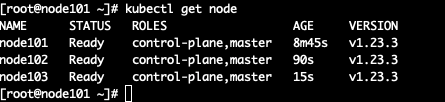

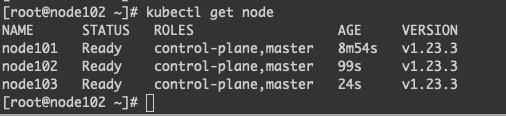

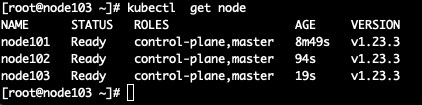

Join the other master nodes to the cluster

kubeadm join 192.168.0.100:6443 --token 6yrc7n.8aah1q3pkf2penu5 \
    --discovery-token-ca-cert-hash sha256:7a10a372e5e8fcd6dea3e3017fc2b9cf5f220b513086c884ee9129401eda8783 \
    --control-plane --certificate-key 559f3c60faba1cf0208524bac09852135fc1ae053318fccd581cf3681aceba92

The cluster can now be accessed from any of the master nodes.

On the node that currently holds it, the virtual IP is visible in the output of `ip a`.

Join the worker nodes to the cluster

kubeadm join 192.168.0.100:6443 --token 6yrc7n.8aah1q3pkf2penu5 \
    --discovery-token-ca-cert-hash sha256:7a10a372e5e8fcd6dea3e3017fc2b9cf5f220b513086c884ee9129401eda8783
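The token and certificate key in these join commands are short-lived: the kubeadm output notes that the uploaded certificates are deleted after two hours, and bootstrap tokens expire after 24 hours by default. When nodes are added later, fresh values can be generated on an existing master. This is a sketch: `control_plane_join_cmd` is a hypothetical helper, and taking the last output line of `upload-certs` as the key is an assumption about its output format.

```shell
#!/bin/sh
# control_plane_join_cmd JOIN_CMD CERT_KEY (hypothetical helper)
# Extends a worker join command into a control-plane join command.
control_plane_join_cmd() {
    printf '%s --control-plane --certificate-key %s\n' "$1" "$2"
}

# Only runs on a node that has kubeadm and the cluster admin config.
if command -v kubeadm >/dev/null 2>&1 && [ -r /etc/kubernetes/admin.conf ]; then
    join_cmd=$(kubeadm token create --print-join-command)
    # Assumption: the new certificate key is the last line of the output.
    cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
    echo "worker:        $join_cmd"
    echo "control plane: $(control_plane_join_cmd "$join_cmd" "$cert_key")"
fi
```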

Check cluster health

[root@node101 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

node101 Ready control-plane,master 15m v1.23.3

node102 Ready control-plane,master 7m49s v1.23.3

node103 Ready control-plane,master 6m34s v1.23.3

node106 Ready <none> 2m52s v1.23.3

[root@node101 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

[root@node101 ~]# kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-64897985d-k8blk 1/1 Running 0 15m

pod/coredns-64897985d-m4vcx 1/1 Running 0 15m

pod/etcd-node101 1/1 Running 10 15m

pod/etcd-node102 1/1 Running 3 7m54s

pod/etcd-node103 1/1 Running 1 6m38s

pod/kube-apiserver-node101 1/1 Running 5 15m

pod/kube-apiserver-node102 1/1 Running 2 7m57s

pod/kube-apiserver-node103 1/1 Running 5 6m43s

pod/kube-controller-manager-node101 1/1 Running 8 (7m42s ago) 15m

pod/kube-controller-manager-node102 1/1 Running 2 7m57s

pod/kube-controller-manager-node103 1/1 Running 2 6m42s

pod/kube-flannel-ds-7x56b 1/1 Running 0 3m1s

pod/kube-flannel-ds-c6tsn 1/1 Running 0 7m58s

pod/kube-flannel-ds-lxpsl 1/1 Running 0 6m43s

pod/kube-flannel-ds-vptcs 1/1 Running 0 9m10s

pod/kube-proxy-2nb48 1/1 Running 0 6m43s

pod/kube-proxy-9729r 1/1 Running 0 3m1s

pod/kube-proxy-hm2b9 1/1 Running 0 15m

pod/kube-proxy-wgcsh 1/1 Running 0 7m58s

pod/kube-scheduler-node101 1/1 Running 13 (7m42s ago) 15m

pod/kube-scheduler-node102 1/1 Running 3 7m58s

pod/kube-scheduler-node103 1/1 Running 3 6m43s

pod/kube-vip-node101 1/1 Running 3 (7m48s ago) 15m

pod/kube-vip-node102 1/1 Running 1 7m58s

pod/kube-vip-node103 1/1 Running 1 6m42s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.80.0.10 <none> 53/UDP,53/TCP,9153/TCP 15m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/kube-flannel-ds 4 4 4 4 4 <none> 9m10s

daemonset.apps/kube-proxy 4 4 4 4 4 kubernetes.io/os=linux 15m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 2/2 2 2 15m

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-64897985d 2 2 2 15m

[root@node101 ~]#

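High-availability test

Use `ip a` to find the node that currently holds the VIP, then reboot that node; the VIP should move to one of the other nodes.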
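To follow the failover without reading the full `ip a` output on every node, the check can be reduced to a one-line test. The sketch below uses a hypothetical helper, `has_vip`, which reads `ip -o -4 addr show` output on stdin; the demo feeds it a hand-written sample line rather than live data.

```shell
#!/bin/sh
# has_vip VIP (hypothetical helper)
# Succeeds when the `ip -o -4 addr show` output on stdin contains VIP.
# The fixed-string match on "inet VIP/" avoids partial-address matches.
has_vip() {
    grep -qF "inet $1/"
}

# Demo with a sample line in the format printed by `ip -o -4 addr show`.
sample='2: ens192    inet 192.168.0.100/32 scope global ens192'
if printf '%s\n' "$sample" | has_vip 192.168.0.100; then
    echo "VIP present"
fi

# On a real node:
#   ip -o -4 addr show | has_vip 192.168.0.100 && echo "VIP is on $(hostname)"
```

Running the real-node form on each master before and after the reboot shows where the VIP has moved.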

References

Creating Highly Available Clusters with kubeadm | Kubernetes

https://github.com/kubernetes/kubeadm/blob/main/docs/ha-considerations.md#keepalived-and-haproxy

GitHub - kube-vip/kube-vip: Kubernetes Control Plane Virtual IP and Load-Balancer