Docker Networking Principles

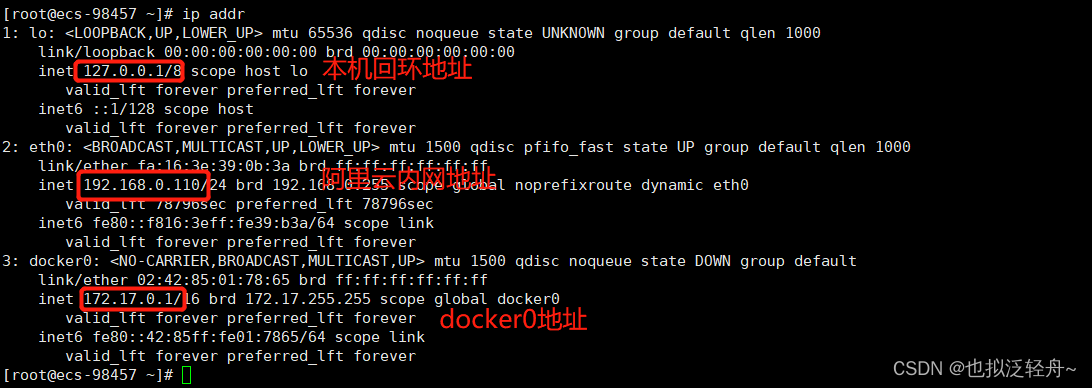

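When we inspect the server's network addresses with the ip addr command, we find three addresses: the loopback interface lo, the server's own NIC (e.g. eth0), and docker0, the bridge Docker creates. Now start a Tomcat container named tomcat01 and look again: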

[root@ecs-98457 ~]# docker run -d -P --name tomcat01 tomcat

Unable to find image 'tomcat:latest' locally

latest: Pulling from library/tomcat

dbba69284b27: Pull complete

9baf437a1bad: Pull complete

6ade5c59e324: Pull complete

b19a994f6d4c: Pull complete

43c0aceedb57: Pull complete

24e7c71ec633: Pull complete

612cf131e488: Pull complete

dc655e69dd90: Pull complete

efe57b7441f6: Pull complete

8db51a0119f4: Pull complete

Digest: sha256:263f93ac29cb2dbba4275a4e647b448cb39a66334a6340b94da8bf13bde770aa

Status: Downloaded newer image for tomcat:latest

1c2ff81d2e3f14b7088a6be103063d08d0bc6b97d5a6008bd612d11744df5b15

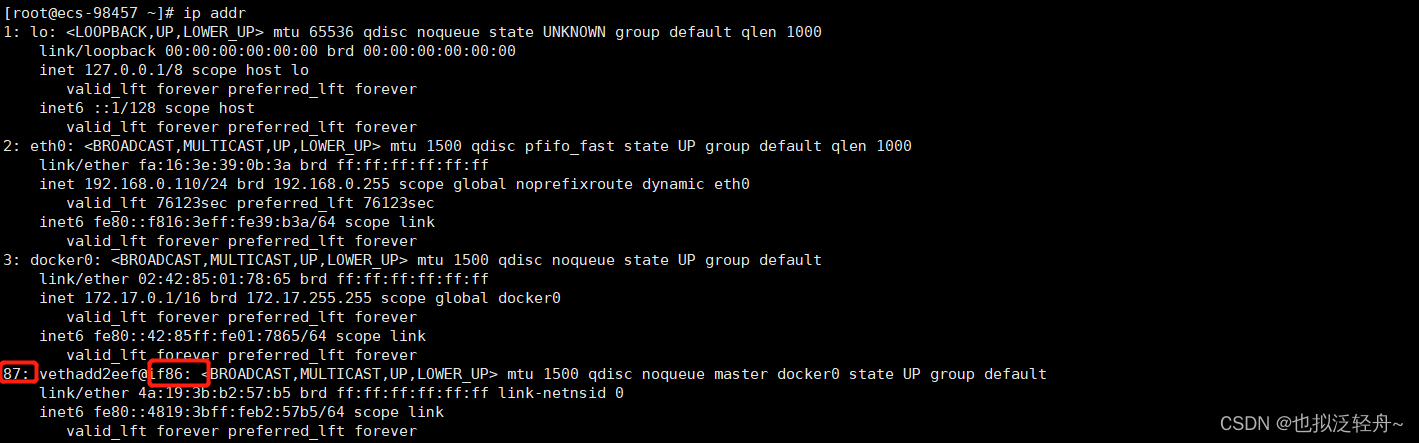

[root@ecs-98457 ~]# ip addr

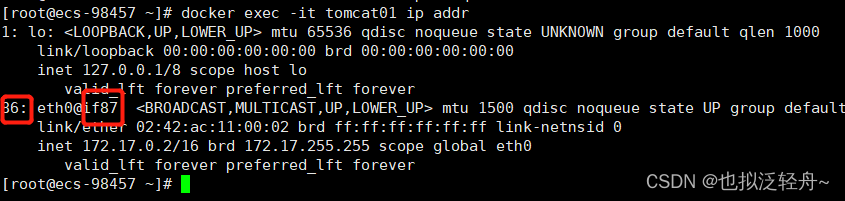

[root@ecs-98457 ~]# docker exec -it tomcat01 ip addr

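Comparing the two outputs, the interface newly added on the host corresponds to the container's interface, and the host can ping the container directly: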

[root@ecs-98457 ~]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.045 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.044 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.046 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.045 ms

64 bytes from 172.17.0.2: icmp_seq=5 ttl=64 time=0.045 ms

64 bytes from 172.17.0.2: icmp_seq=6 ttl=64 time=0.057 ms

64 bytes from 172.17.0.2: icmp_seq=7 ttl=64 time=0.045 ms

64 bytes from 172.17.0.2: icmp_seq=8 ttl=64 time=0.047 ms

64 bytes from 172.17.0.2: icmp_seq=9 ttl=64 time=0.045 ms

^C

--- 172.17.0.2 ping statistics ---

9 packets transmitted, 9 received, 0% packet loss, time 7999ms

rtt min/avg/max/mdev = 0.044/0.046/0.057/0.008 ms

[root@ecs-98457 ~]#

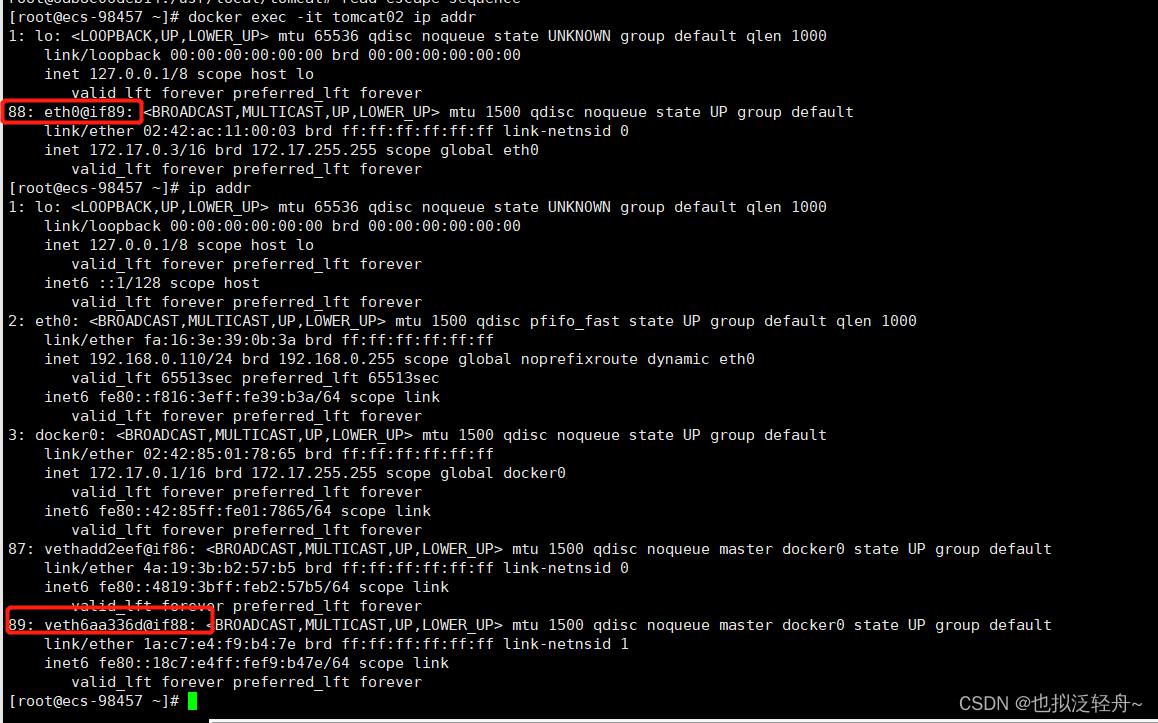

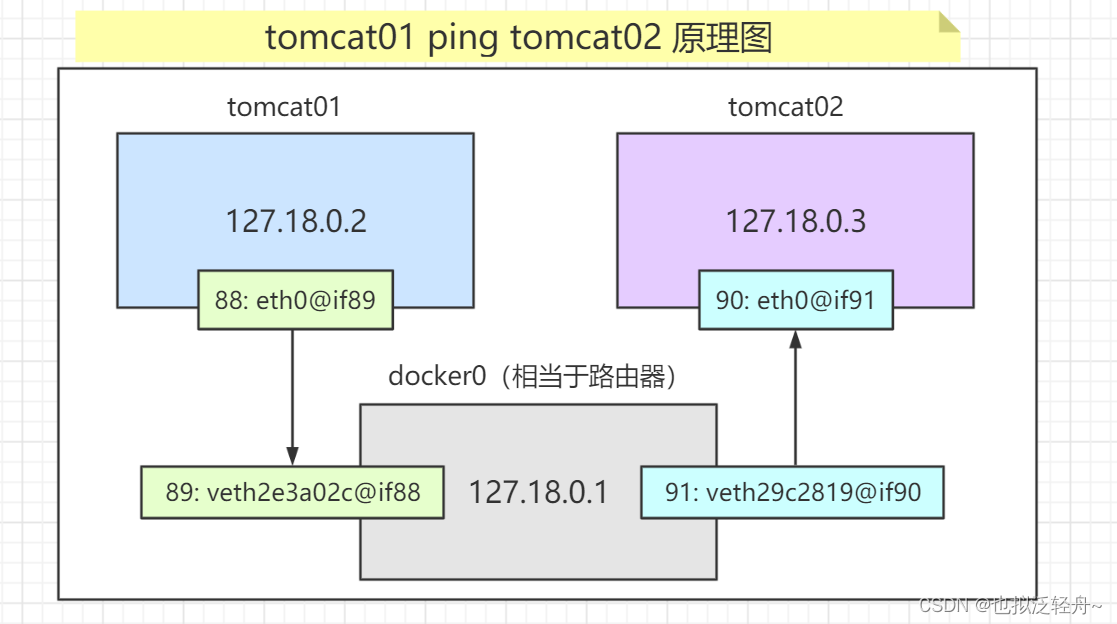

Next, start tomcat02:

[root@ecs-98457 ~]# docker run -d -P --name tomcat02 tomcat

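Each new container adds network interfaces in pairs (86/87, 88/89). This is the veth-pair technique: a veth pair is two virtual network interfaces that always come as a pair, with each end attached to a network protocol stack and the two ends connected to each other. tomcat01's interface 86 is paired with host interface 87, and tomcat02's interface 88 is paired with host interface 89.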

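The veth pair acts as a bridge between virtual network devices: its host-side end is attached to docker0, which is what allows the host to ping the container's internal IP address.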
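The pairing can be read from the interface names themselves: in ip addr output, a header such as 86: eth0@if87 means "interface 86, named eth0, whose peer has index 87". Below is a minimal POSIX-shell sketch that extracts the peer index from such a line; the function name veth_peer_index is hypothetical, and it only handles the N: name@ifM: form shown in this article.

```shell
# veth_peer_index: hypothetical helper. Given one interface header line from
# `ip addr` / `ip link`, e.g. "86: eth0@if87: <BROADCAST,...>", print the
# index of the peer interface (the number after "@if").
veth_peer_index() {
  local name
  name=${1#*: }        # drop the leading "86: "   -> "eth0@if87: <...>"
  name=${name%%:*}     # drop everything from ":"  -> "eth0@if87"
  case $name in
    *@if*) printf '%s\n' "${name##*@if}" ;;   # -> "87"
    *) return 1 ;;                             # not a paired interface (e.g. lo)
  esac
}
```

On a live system the peer index is also exposed through sysfs, so docker exec tomcat01 cat /sys/class/net/eth0/iflink should print the host-side index even in an image without the ip command; ip link on the host then shows which veth interface carries that index.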

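Can tomcat01 and tomcat02 ping each other? Yes. Both containers are connected through their veth pairs to the same bridge, docker0, and both addresses are in docker0's subnet (172.17.0.0/16), so they can reach each other directly.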
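"Same subnet" here means the two addresses agree on the network bits selected by the prefix length (/16 for docker0, i.e. the first two octets). A minimal POSIX-shell sketch of that check; ip_to_int and same_subnet are hypothetical helper names, and the sketch assumes well-formed dotted-quad input and a 64-bit shell arithmetic.

```shell
# ip_to_int: hypothetical helper. Convert "a.b.c.d" to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1            # split on dots into $1..$4 (function-local)
  echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
}

# same_subnet IP1 IP2 PREFIXLEN: hypothetical helper. Exit status 0 when both
# addresses share the same network part under the given prefix length.
same_subnet() {
  local mask n1 n2
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))   # e.g. /16 -> 0xFFFF0000
  n1=$(ip_to_int "$1")
  n2=$(ip_to_int "$2")
  [ $(( n1 & mask )) -eq $(( n2 & mask )) ]
}
```

For example, same_subnet 172.17.0.2 172.17.0.3 16 succeeds, while same_subnet 172.17.0.2 192.168.0.2 16 fails.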

# tomcat02 can ping tomcat01

[root@ecs-98457 ~]# docker exec -it tomcat02 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.127 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.109 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.100 ms

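Note that this tomcat image does not include the ip command, so running ip addr inside the container fails:

[root@ecs-98457 ~]# docker exec -it tomcat01 ip addr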

OCI runtime exec failed: exec failed: container_linux.go:380: starting container process caused: exec: "ip": executable file not found in $PATH: unknown

[root@ecs-98457 ~]# docker exec -it tomcat01

"docker exec" requires at least 2 arguments.

See 'docker exec --help'.

Usage: docker exec [OPTIONS] CONTAINER COMMAND [ARG...]

Run a command in a running container

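Solution:

Run the following commands in order:

① Enter the container: docker exec -it tomcat01 /bin/bash

② Refresh the package index: apt update

③ Install the ip command, which is provided by the iproute2 package: apt install -y iproute2

(net-tools, installed with apt install net-tools, provides the older ifconfig and netstat commands rather than ip; in the session below it was already present.)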

[root@ecs-98457 ~]# docker exec -it tomcat01 /bin/bash

root@1c2ff81d2e3f:/usr/local/tomcat# apt update

Get:1 http://security.debian.org/debian-security bullseye-security InRelease [44.1 kB]

Hit:2 http://deb.debian.org/debian bullseye InRelease

Get:3 http://deb.debian.org/debian bullseye-updates InRelease [39.4 kB]

Fetched 83.5 kB in 3s (28.4 kB/s)

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

1 package can be upgraded. Run 'apt list --upgradable' to see it.

root@1c2ff81d2e3f:/usr/local/tomcat# apt install -y iproute2

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

libatm1 libbpf0 libcap2 libcap2-bin libelf1 libmnl0 libpam-cap libxtables12

Suggested packages:

iproute2-doc

The following NEW packages will be installed:

iproute2 libatm1 libbpf0 libcap2 libcap2-bin libelf1 libmnl0 libpam-cap libxtables12

0 upgraded, 9 newly installed, 0 to remove and 1 not upgraded.

Need to get 1394 kB of archives.

After this operation, 4686 kB of additional disk space will be used.

Get:1 http://deb.debian.org/debian bullseye/main amd64 libelf1 amd64 0.183-1 [165 kB]

Get:2 http://deb.debian.org/debian bullseye/main amd64 libbpf0 amd64 1:0.3-2 [98.3 kB]

Get:3 http://deb.debian.org/debian bullseye/main amd64 libcap2 amd64 1:2.44-1 [23.6 kB]

Get:4 http://deb.debian.org/debian bullseye/main amd64 libmnl0 amd64 1.0.4-3 [12.5 kB]

Get:5 http://deb.debian.org/debian bullseye/main amd64 libxtables12 amd64 1.8.7-1 [45.1 kB]

Get:6 http://deb.debian.org/debian bullseye/main amd64 libcap2-bin amd64 1:2.44-1 [32.6 kB]

Get:7 http://deb.debian.org/debian bullseye/main amd64 iproute2 amd64 5.10.0-4 [930 kB]

Get:8 http://deb.debian.org/debian bullseye/main amd64 libatm1 amd64 1:2.5.1-4 [71.3 kB]

Get:9 http://deb.debian.org/debian bullseye/main amd64 libpam-cap amd64 1:2.44-1 [15.4 kB]

Fetched 1394 kB in 1min 52s (12.4 kB/s)

debconf: delaying package configuration, since apt-utils is not installed

Selecting previously unselected package libelf1:amd64.

(Reading database ... 12729 files and directories currently installed.)

Preparing to unpack .../0-libelf1_0.183-1_amd64.deb ...

Unpacking libelf1:amd64 (0.183-1) ...

Selecting previously unselected package libbpf0:amd64.

Preparing to unpack .../1-libbpf0_1%3a0.3-2_amd64.deb ...

Unpacking libbpf0:amd64 (1:0.3-2) ...

Selecting previously unselected package libcap2:amd64.

Preparing to unpack .../2-libcap2_1%3a2.44-1_amd64.deb ...

Unpacking libcap2:amd64 (1:2.44-1) ...

Selecting previously unselected package libmnl0:amd64.

Preparing to unpack .../3-libmnl0_1.0.4-3_amd64.deb ...

Unpacking libmnl0:amd64 (1.0.4-3) ...

Selecting previously unselected package libxtables12:amd64.

Preparing to unpack .../4-libxtables12_1.8.7-1_amd64.deb ...

Unpacking libxtables12:amd64 (1.8.7-1) ...

Selecting previously unselected package libcap2-bin.

Preparing to unpack .../5-libcap2-bin_1%3a2.44-1_amd64.deb ...

Unpacking libcap2-bin (1:2.44-1) ...

Selecting previously unselected package iproute2.

Preparing to unpack .../6-iproute2_5.10.0-4_amd64.deb ...

Unpacking iproute2 (5.10.0-4) ...

Selecting previously unselected package libatm1:amd64.

Preparing to unpack .../7-libatm1_1%3a2.5.1-4_amd64.deb ...

Unpacking libatm1:amd64 (1:2.5.1-4) ...

Selecting previously unselected package libpam-cap:amd64.

Preparing to unpack .../8-libpam-cap_1%3a2.44-1_amd64.deb ...

Unpacking libpam-cap:amd64 (1:2.44-1) ...

Setting up libatm1:amd64 (1:2.5.1-4) ...

Setting up libcap2:amd64 (1:2.44-1) ...

Setting up libcap2-bin (1:2.44-1) ...

Setting up libmnl0:amd64 (1.0.4-3) ...

Setting up libxtables12:amd64 (1.8.7-1) ...

Setting up libelf1:amd64 (0.183-1) ...

Setting up libpam-cap:amd64 (1:2.44-1) ...

debconf: unable to initialize frontend: Dialog

debconf: (No usable dialog-like program is installed, so the dialog based frontend cannot be used. at /usr/share/perl5/Debconf/FrontEnd/Dialog.pm line 78.)

debconf: falling back to frontend: Readline

Setting up libbpf0:amd64 (1:0.3-2) ...

Setting up iproute2 (5.10.0-4) ...

debconf: unable to initialize frontend: Dialog

debconf: (No usable dialog-like program is installed, so the dialog based frontend cannot be used. at /usr/share/perl5/Debconf/FrontEnd/Dialog.pm line 78.)

debconf: falling back to frontend: Readline

Processing triggers for libc-bin (2.31-13+deb11u3) ...

root@1c2ff81d2e3f:/usr/local/tomcat# apt-get update

Hit:1 http://security.debian.org/debian-security bullseye-security InRelease

Hit:2 http://deb.debian.org/debian bullseye InRelease

Hit:3 http://deb.debian.org/debian bullseye-updates InRelease

Reading package lists... Done

root@1c2ff81d2e3f:/usr/local/tomcat# apt install net-tools

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

net-tools is already the newest version (1.60+git20181103.0eebece-1).

0 upgraded, 0 newly installed, 0 to remove and 1 not upgraded.

root@1c2ff81d2e3f:/usr/local/tomcat# read escape sequence

[root@ecs-98457 ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

86: eth0@if87: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@ecs-98457 ~]#

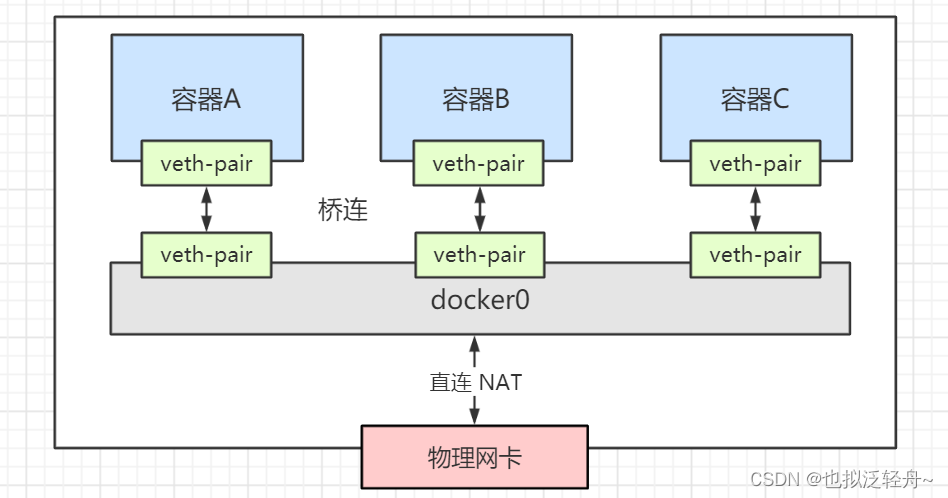

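Conclusion: containers can ping each other.

When no network is specified, all containers are attached to docker0, which forwards traffic between them, and Docker assigns each container an available IP address from docker0's subnet.

Docker uses Linux bridging: on the host, docker0 is the bridge that Docker containers connect to.

All network interfaces in Docker are virtual, so forwarding is efficient. When a container is deleted, its veth pair is deleted with it.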
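To see which containers are attached to docker0 and which addresses Docker handed out, the default bridge network can be inspected with a Go template. A minimal sketch wrapping that call; the function name list_network_containers is hypothetical, while the --format option and the Containers, Name and IPv4Address fields come from docker network inspect's own output.

```shell
# list_network_containers: hypothetical helper. Prints "name ip/prefix" for
# each container attached to a Docker network (default: "bridge", i.e. docker0).
list_network_containers() {
  docker network inspect \
    --format '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{println}}{{end}}' \
    "${1:-bridge}"
}
```

On this host it would be expected to list tomcat01 with 172.17.0.2/16; after docker rm -f tomcat01 the entry disappears, and the matching host-side veth interface no longer shows up in ip addr.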

Connecting containers with --link

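Suppose we write a microservice that connects to its database by IP address. If the database's IP address changes, the connection breaks and the service becomes unavailable. If the service could reach the database by container name instead, this problem would go away.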

On the default bridge, however, pinging tomcat02 by container name from tomcat01 fails:

[root@ecs-98457 ~]# docker exec -it tomcat01 ping tomcat02

ping: tomcat02: Name or service not known

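How can this be solved? One option is to name the container to connect to with --link when creating a new container; the new container can then ping that container by name: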

[root@ecs-98457 ~]# docker run -d -P --name tomcat03 --link tomcat01 tomcat

1cc06da893c10d4ef1bdf7e590860b44cf55a0c506b57b64dda44551ef06d816

[root@ecs-98457 ~]# docker exec -it tomcat03 ping tomcat01

PING tomcat01 (172.17.0.2) 56(84) bytes of data.

64 bytes from tomcat01 (172.17.0.2): icmp_seq=1 ttl=64 time=0.127 ms

64 bytes from tomcat01 (172.17.0.2): icmp_seq=2 ttl=64 time=0.103 ms

64 bytes from tomcat01 (172.17.0.2): icmp_seq=3 ttl=64 time=0.103 ms

However, pinging in the reverse direction fails:

[root@zsr ~]# docker exec -it tomcat01 ping tomcat03

ping: tomcat03: Name or service not known

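How it works: --link adds an entry to tomcat03's local hosts file (/etc/hosts) that maps the container name tomcat01 to its IP address. tomcat01 gets no such entry for tomcat03, which is why the link only works in one direction.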
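The entry can be checked with docker exec tomcat03 cat /etc/hosts, which should contain a line mapping tomcat01 to its address (typically followed by the container's short ID). A static lookup against such a file boils down to matching a name in the fields after the IP; below is a simplified sketch of that, where the function name hosts_lookup is hypothetical and details of the real resolver (such as case-insensitive matching) are ignored.

```shell
# hosts_lookup NAME [FILE]: hypothetical helper. Print the IP of the first line
# in a hosts-format file (default /etc/hosts) that lists NAME as a hostname or
# alias. Exit status 1 when the name is not found.
hosts_lookup() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '
    /^[ \t]*#/ { next }                          # skip comment lines
    { for (i = 2; i <= NF; i++)                  # fields after the IP address
        if ($i == n) { print $1; found = 1; exit } }
    END { exit !found }
  ' "$file"
}
```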

Custom networks

# List all networks

[root@ecs-98457 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

57bcc505a110 bridge bridge local

52709fbbc538 host host local

e6a87210f6a8 none null local

[root@ecs-98457 ~]#

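Network modes

bridge: bridged networking (the default)

none: no networking configured

host: host mode, the container shares the host's network stack

container: the container joins another container's network namespace (rarely used!)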
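Each mode is selected with the --net option of docker run. A minimal sketch; the function name run_with_mode is hypothetical, and the container names in the usage note are made up for illustration.

```shell
# run_with_mode NAME MODE: hypothetical helper. Start a detached tomcat
# container NAME in network MODE: bridge | host | none | container:<other>.
run_with_mode() {
  docker run -d --name "$1" --net "$2" tomcat
}
```

For example, run_with_mode t-host host or run_with_mode t-shared container:tomcat01. The sketch leaves out -P because in host mode the container already listens on the host's ports and published ports are ignored.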

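When we start a container, the option --net bridge is applied implicitly; this is docker0, the default network. As mentioned above, --link lets containers on it reach each other by name, but it is cumbersome and not recommended.

# --net bridge is the default, so these two commands are equivalent (run only one: both use the name mytomcat)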

docker run -d -P --name mytomcat tomcat

docker run -d -P --name mytomcat --net bridge tomcat

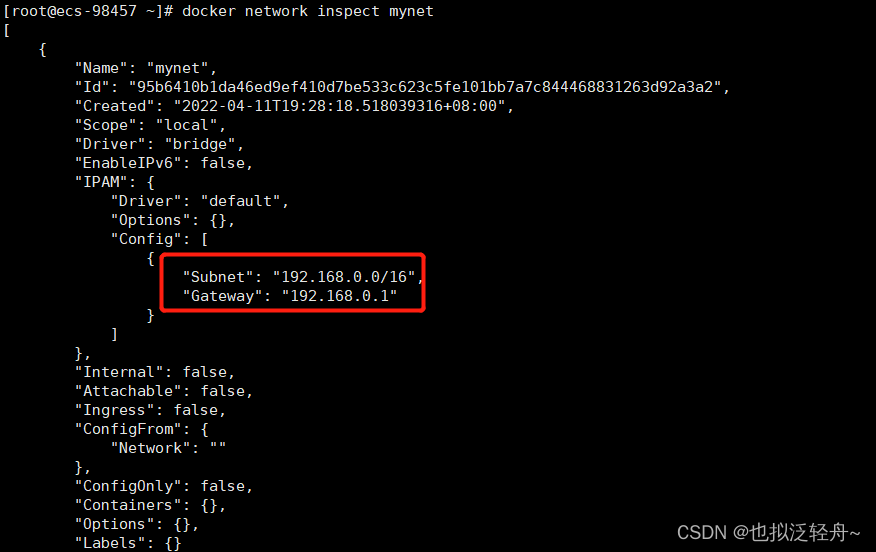

# Create a network mynet with the bridge driver, subnet 192.168.0.0/16 and gateway 192.168.0.1

[root@ecs-98457 ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

95b6410b1da46ed9ef410d7be533c623c5fe101bb7a7c844468831263d92a3a2

[root@ecs-98457 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

57bcc505a110 bridge bridge local

52709fbbc538 host host local

95b6410b1da4 mynet bridge local

e6a87210f6a8 none null local

Inspect the custom network's details:

[root@ecs-98457 ~]# docker network inspect mynet

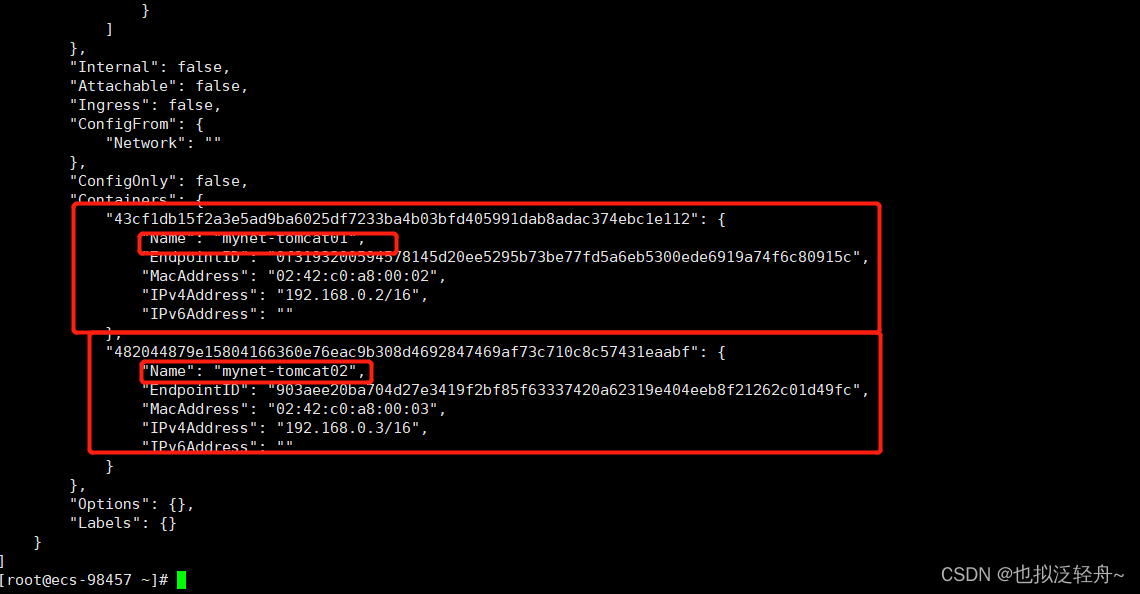
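Rather than reading the whole JSON, the subnet and gateway can be pulled out with a template over the IPAM.Config list that docker network inspect reports. A sketch; the function name show_network_ipam is hypothetical.

```shell
# show_network_ipam NETWORK: hypothetical helper. Print "subnet gateway" for
# each IPAM configuration entry of a Docker network.
show_network_ipam() {
  docker network inspect \
    --format '{{range .IPAM.Config}}{{.Subnet}} {{.Gateway}}{{println}}{{end}}' \
    "$1"
}
```

For the mynet network created above, show_network_ipam mynet should print 192.168.0.0/16 192.168.0.1.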

Start two containers attached to the custom network mynet:

[root@ecs-98457 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

tomcat latest b00440a36b99 9 days ago 680MB

[root@ecs-98457 ~]# docker run -d -P --name mynet-tomcat01 --net mynet tomcat

43cf1db15f2a3e5ad9ba6025df7233ba4b03bfd405991dab8adac374ebc1e112

[root@ecs-98457 ~]# docker run -d -P --name mynet-tomcat02 --net mynet tomcat

482044879e15804166360e76eac9b308d4692847469af73c710c8c57431eaabf

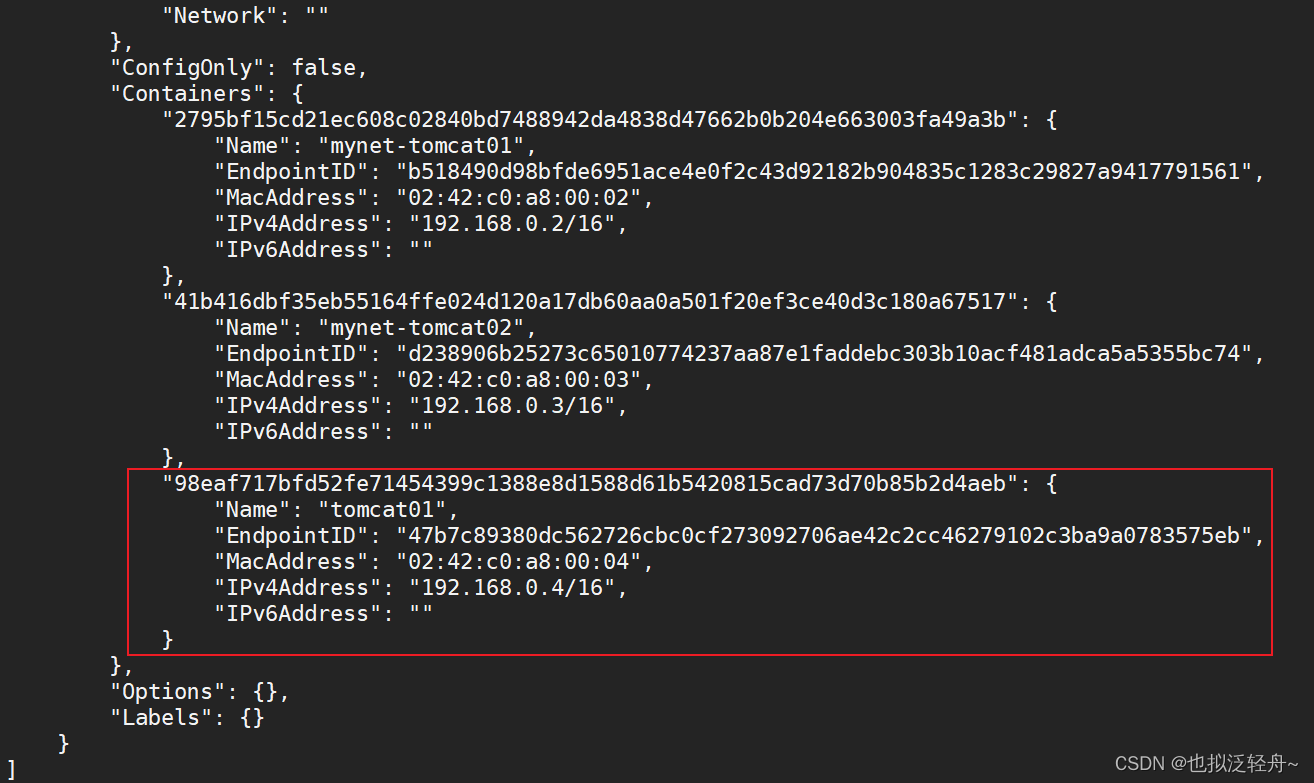

[root@ecs-98457 ~]# docker network inspect mynet

Testing ping again shows that the custom network fixes docker0's shortcoming: containers can reach each other directly by container name.

[root@ecs-98457 ~]# docker exec -it mynet-tomcat01 ping mynet-tomcat02

PING mynet-tomcat02 (192.168.0.3) 56(84) bytes of data.

64 bytes from mynet-tomcat02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.094 ms

64 bytes from mynet-tomcat02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.094 ms

64 bytes from mynet-tomcat02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.158 ms
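On a user-defined network, names are answered by Docker's embedded DNS server, which containers see as nameserver 127.0.0.11 in their /etc/resolv.conf. A sketch that performs the same name lookup ping does, without sending any packets; the function name resolve_from is hypothetical, and it assumes the image ships getent (Debian-based images such as tomcat do).

```shell
# resolve_from CONTAINER NAME: hypothetical helper. Resolve NAME using the
# resolver inside CONTAINER and print "ip name" on success.
resolve_from() {
  docker exec "$1" getent hosts "$2"
}
```

For example, resolve_from mynet-tomcat01 mynet-tomcat02 should print mynet-tomcat02's address on mynet, while the same lookup from tomcat01 on the default bridge fails.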

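Use case:

Give each cluster (e.g. Redis, MySQL) its own network with its own subnet, which keeps the clusters isolated from each other and keeps each one secure and healthy.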
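For instance, a Redis cluster and a MySQL cluster could each get their own bridge network. A sketch; the function name create_bridge_network is hypothetical, and the network names and subnets in the usage note are made up for illustration.

```shell
# create_bridge_network NAME SUBNET GATEWAY: hypothetical helper. Create a
# bridge network with an explicit subnet and gateway.
create_bridge_network() {
  docker network create --driver bridge --subnet "$2" --gateway "$3" "$1"
}
```

For example, create_bridge_network redis-net 172.38.0.0/16 172.38.0.1 and create_bridge_network mysql-net 172.39.0.0/16 172.39.0.1. The subnets must not overlap with each other or with existing networks such as docker0's 172.17.0.0/16, otherwise Docker refuses to create the network.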

Connecting networks

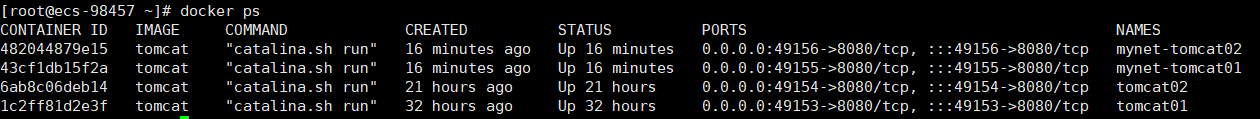

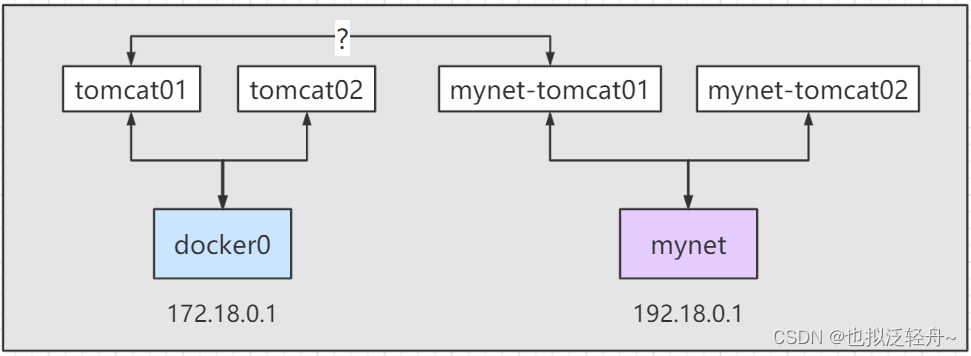

We have now started four containers: tomcat01 and tomcat02 on the default docker0 network, and mynet-tomcat01 and mynet-tomcat02 on the custom network mynet.

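Can tomcat01 reach mynet-tomcat01 at this point? No: the two containers are attached to different bridge networks with different subnets, and Docker does not forward traffic between separate bridge networks. A test attempt:

# docker exec -it 192.18.0.2 ping 172.18.0.2

Error: No such container: 192.18.0.2

(That command passes an IP address where a container name is expected, so Docker only reports that no such container exists. The intended test is docker exec -it tomcat01 ping mynet-tomcat01, which fails because tomcat01 is not attached to mynet and cannot resolve that name.)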

So how can tomcat01 reach mynet-tomcat01? Use the docker network connect command:

# Connect tomcat01 to the mynet network

# docker network connect mynet tomcat01

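Inspecting mynet again shows that connecting simply adds tomcat01 to the mynet network: one container now has two IP addresses, much like an Alibaba Cloud server with a public IP and a private IP, both of which are reachable.

After connecting, the ping test succeeds: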

# docker exec -it tomcat01 ping mynet-tomcat01

PING mynet-tomcat01 (192.168.0.2) 56(84) bytes of data.

64 bytes from mynet-tomcat01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.091 ms

64 bytes from mynet-tomcat01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.100 ms

^C

--- mynet-tomcat01 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1ms

rtt min/avg/max/mdev = 0.091/0.095/0.100/0.010 ms
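After docker network connect, tomcat01 has one endpoint per network. A sketch that lists them from docker inspect; the function name list_container_networks is hypothetical, and docker network disconnect mynet tomcat01 would undo the connection.

```shell
# list_container_networks CONTAINER: hypothetical helper. Print "network ip"
# for every network the container is attached to.
list_container_networks() {
  docker inspect \
    --format '{{range $net, $ep := .NetworkSettings.Networks}}{{$net}} {{$ep.IPAddress}}{{println}}{{end}}' \
    "$1"
}
```

For tomcat01 this should now show two lines: bridge with 172.17.0.2 and mynet with an address from 192.168.0.0/16.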