
Socket creation

SYSCALL_DEFINE3(socket, int, family, int, type, int, protocol)

{

return __sys_socket(family, type, protocol);

}

System call chain: __sys_socket -> sock_create -> __sock_create

int __sock_create(struct net *net, int family, int type, int protocol,

struct socket **res, int kern)

{

...

// allocate the socket object

sock = sock_alloc();

// look up the protocol family's operations table

pf = rcu_dereference(net_families[family]);

// call the protocol family's create function

err = pf->create(net, sock, protocol, kern);

}

Next, look at the functions registered for the PF_INET protocol family:

static const struct net_proto_family inet_family_ops = {

.family = PF_INET,

.create = inet_create,

.owner = THIS_MODULE,

};

//sock_register - add a socket protocol handler

int sock_register(const struct net_proto_family *ops)

{

spin_lock(&net_family_lock);

if (rcu_dereference_protected(net_families[ops->family],

lockdep_is_held(&net_family_lock)))

err = -EEXIST;

else {

rcu_assign_pointer(net_families[ops->family], ops);

err = 0;

}

spin_unlock(&net_family_lock);

...

return err;

}

static int __init inet_init(void)

{

...

(void)sock_register(&inet_family_ops);

}
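The register-then-dispatch pattern above can be sketched in user space. This is only an illustration under simplifying assumptions, not kernel code: the names demo_family, demo_register, demo_create, demo_inet_create and the DEMO_NPROTO limit are hypothetical, and the locking and RCU that the kernel uses are left out.

```c
#include <errno.h>
#include <stddef.h>

#define DEMO_NPROTO 8            /* hypothetical table size */

/* Per-family operations, loosely modelled on struct net_proto_family. */
struct demo_family {
    int family;
    int (*create)(int protocol);
};

/* One slot per family, like net_families[] (no locking or RCU here). */
static const struct demo_family *demo_families[DEMO_NPROTO];

/* Register a family; fails with -EEXIST if the slot is already taken. */
int demo_register(const struct demo_family *ops)
{
    if (ops->family < 0 || ops->family >= DEMO_NPROTO)
        return -EINVAL;
    if (demo_families[ops->family])
        return -EEXIST;
    demo_families[ops->family] = ops;
    return 0;
}

/* Dispatch to the family's create callback, as __sock_create does. */
int demo_create(int family, int protocol)
{
    if (family < 0 || family >= DEMO_NPROTO || !demo_families[family])
        return -EAFNOSUPPORT;
    return demo_families[family]->create(protocol);
}

/* Stand-in for inet_create: simply returns the requested protocol. */
int demo_inet_create(int protocol)
{
    return protocol;
}

const struct demo_family demo_inet_ops = {
    .family = 2,                 /* same numeric value as PF_INET on Linux */
    .create = demo_inet_create,
};
```

Calling demo_create(2, 6) before demo_register(&demo_inet_ops) fails with -EAFNOSUPPORT; after registration the same call reaches demo_inet_create.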

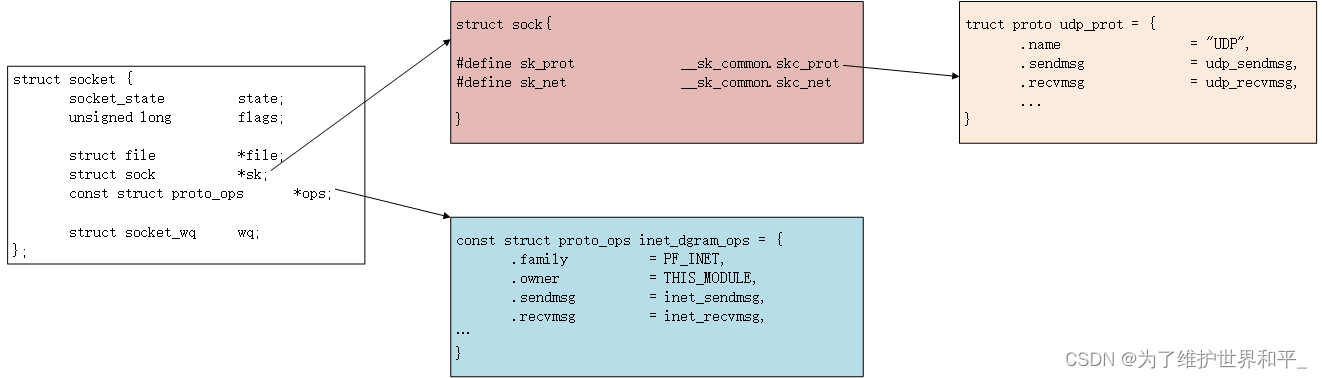

For AF_INET, the create function that runs is inet_create. For a SOCK_STREAM socket it finds inet_stream_ops and tcp_prot and sets them on socket->ops and sock->sk_prot respectively.

void sock_init_data(struct socket *sock, struct sock *sk)

{

sk->sk_state_change = sock_def_wakeup;

sk->sk_data_ready = sock_def_readable;

sk->sk_write_space = sock_def_write_space;

sk->sk_error_report = sock_def_error_report;

sk->sk_destruct = sock_def_destruct;

...

}

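When the softirq receives a packet, it wakes the process waiting on the sock by calling the sk_data_ready function pointer, which sock_init_data sets to sock_def_readable.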
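The callback mechanism can be sketched as a function pointer that is installed when the object is initialised and invoked later by the receive path. This is a simplified user-space illustration; demo_rx_sock, demo_def_readable, demo_rx_init and demo_deliver are hypothetical names, and the callback only counts invocations instead of waking a process.

```c
#include <stddef.h>

struct demo_rx_sock {
    int queued;                                   /* packets waiting to be read */
    int wakeups;                                  /* how often the callback ran */
    void (*data_ready)(struct demo_rx_sock *sk);  /* plays the role of sk_data_ready */
};

/* Default callback, standing in for sock_def_readable. */
void demo_def_readable(struct demo_rx_sock *sk)
{
    sk->wakeups++;   /* the kernel would wake sleeping readers here */
}

/* Install the default callback, as sock_init_data does at creation time. */
void demo_rx_init(struct demo_rx_sock *sk)
{
    sk->queued = 0;
    sk->wakeups = 0;
    sk->data_ready = demo_def_readable;
}

/* Receive path: queue the packet, then notify through the pointer. */
void demo_deliver(struct demo_rx_sock *sk)
{
    sk->queued++;
    sk->data_ready(sk);
}
```

Because the receive path only goes through the pointer, a different callback can be substituted without touching demo_deliver.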

tcp_recvmsg

int tcp_recvmsg(struct sock *sk, struct msghdr *msg, size_t len, int nonblock,

int flags, int *addr_len)

{

do {

skb_queue_walk(&sk->sk_receive_queue, skb) {

...

}

if (copied >= target) {

release_sock(sk);

lock_sock(sk);

} else {

sk_wait_data(sk, &timeo, last);

}

...

} while (len > 0);

...

}

skb_queue_walk iterates over the receive queue to pick up the data.
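The idea of walking a queue of segments and copying until the caller's buffer is full can be sketched in user space as follows. This is an assumption-laden simplification: demo_seg and demo_copy_from_queue are hypothetical names, the queue is a plain singly linked list, and segments are not removed after copying, so it does not model sequence numbers or partial consumption the way tcp_recvmsg does.

```c
#include <stddef.h>
#include <string.h>

/* One queued segment; a simplified stand-in for struct sk_buff. */
struct demo_seg {
    const char *data;
    size_t len;
    struct demo_seg *next;
};

/*
 * Walk the queue from the head and copy up to `len` bytes into `out`.
 * Returns the number of bytes copied; a caller that got fewer bytes
 * than its target would go on to wait for more data.
 */
size_t demo_copy_from_queue(const struct demo_seg *head, char *out, size_t len)
{
    size_t copied = 0;

    for (const struct demo_seg *seg = head; seg && copied < len; seg = seg->next) {
        size_t chunk = seg->len;

        if (chunk > len - copied)
            chunk = len - copied;   /* do not overrun the user buffer */
        memcpy(out + copied, seg->data, chunk);
        copied += chunk;
    }
    return copied;
}
```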

When there is not enough data, sk_wait_data takes care of the wait queue:

#define DEFINE_WAIT_FUNC(name, function) \

struct wait_queue_entry name = { \

.private = current, \

.func = function, \

.entry = LIST_HEAD_INIT((name).entry), \

}

static inline wait_queue_head_t *sk_sleep(struct sock *sk)

{

BUILD_BUG_ON(offsetof(struct socket_wq, wait) != 0);

return &rcu_dereference_raw(sk->sk_wq)->wait;

}

int sk_wait_data(struct sock *sk, long *timeo, const struct sk_buff *skb)

{

DEFINE_WAIT_FUNC(wait, woken_wake_function);

int rc;

add_wait_queue(sk_sleep(sk), &wait);

sk_set_bit(SOCKWQ_ASYNC_WAITDATA, sk);

rc = sk_wait_event(sk, timeo, skb_peek_tail(&sk->sk_receive_queue) != skb, &wait);

sk_clear_bit(SOCKWQ_ASYNC_WAITDATA, sk);

remove_wait_queue(sk_sleep(sk), &wait);

return rc;

}

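The DEFINE_WAIT_FUNC macro does three things:

- it defines a wait queue entry named wait;
- it registers the callback function in the entry's .func member;
- it stores the current process descriptor, current, in the entry's .private member.

sk_sleep returns the wait queue head (wait_queue_head_t) that belongs to the sock object.

add_wait_queue inserts the newly defined entry wait into the sock object's wait queue.

sk_wait_event gives up the CPU, and the process goes to sleep.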
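The relationship between a wait queue head, its entries, and the per-entry callback can be sketched with a small linked list. This is a user-space illustration only: all demo_ names are hypothetical, there is no locking or scheduling, and the "task" is just an int flag that the callback sets.

```c
#include <stddef.h>

struct demo_wait_entry;
typedef int (*demo_wake_fn)(struct demo_wait_entry *entry);

/* Simplified stand-in for struct wait_queue_entry. */
struct demo_wait_entry {
    void *private_data;            /* the waiting task in the kernel */
    demo_wake_fn func;             /* called on wakeup */
    struct demo_wait_entry *next;
};

/* Simplified stand-in for wait_queue_head_t. */
struct demo_wait_head {
    struct demo_wait_entry *first;
};

/* Link an entry at the front of the queue, in the spirit of add_wait_queue. */
void demo_add_wait(struct demo_wait_head *head, struct demo_wait_entry *entry)
{
    entry->next = head->first;
    head->first = entry;
}

/* Unlink an entry if it is queued, in the spirit of remove_wait_queue. */
void demo_remove_wait(struct demo_wait_head *head, struct demo_wait_entry *entry)
{
    struct demo_wait_entry **pp = &head->first;

    while (*pp && *pp != entry)
        pp = &(*pp)->next;
    if (*pp)
        *pp = entry->next;
}

/* Call every entry's callback; returns how many entries reported a wakeup. */
int demo_wake_up(struct demo_wait_head *head)
{
    int woken = 0;

    for (struct demo_wait_entry *e = head->first; e; e = e->next)
        woken += e->func(e);
    return woken;
}

/* Example callback: marks the "task" (an int flag here) as runnable. */
int demo_mark_runnable(struct demo_wait_entry *entry)
{
    *(int *)entry->private_data = 1;
    return 1;
}
```

The waker never needs to know who is sleeping: it walks the list and lets each entry's callback decide how to wake its owner.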

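Softirq side

In softirq context (the ksoftirqd kernel thread), after a packet is received:

- if it is a TCP packet, tcp_v4_rcv is called;

- if the packet belongs to a connection in the ESTABLISHED state, the data is extracted and placed on the receive queue of the corresponding socket;

- finally, sk_data_ready is called to wake the user process.

The source walk-through below starts at tcp_v4_rcv.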

tcp_v4_rcv

int tcp_v4_rcv(struct sk_buff *skb)

{

th = (const struct tcphdr *)skb->data; // TCP header

iph = ip_hdr(skb); // get the IP header

lookup:

sk = __inet_lookup_skb(&tcp_hashinfo, skb, __tcp_hdrlen(th), th->source,

th->dest, sdif, &refcounted);

// the socket is not currently locked by a user-context process

if (!sock_owned_by_user(sk)) {

skb_to_free = sk->sk_rx_skb_cache;

sk->sk_rx_skb_cache = NULL;

// receive the data

ret = tcp_v4_do_rcv(sk, skb);

}

...

}

int tcp_v4_do_rcv(struct sock *sk, struct sk_buff *skb)

{

struct sock *rsk;

// handle packets for a connection in the ESTABLISHED state

if (sk->sk_state == TCP_ESTABLISHED) { /* Fast path */

struct dst_entry *dst = sk->sk_rx_dst;

...

tcp_rcv_established(sk, skb);

return 0;

}

// handle packets in states other than ESTABLISHED

}

static int __must_check tcp_queue_rcv(struct sock *sk, struct sk_buff *skb,

bool *fragstolen)

{

...

if (!eaten) {

// append the received data to the tail of the socket's receive queue

__skb_queue_tail(&sk->sk_receive_queue, skb);

skb_set_owner_r(skb, sk);

}

}

void tcp_data_ready(struct sock *sk)

{

...

// wake the waiting process from softirq context

sk->sk_data_ready(sk);

}

// main receive function

void tcp_rcv_established(struct sock *sk, struct sk_buff *skb)

{

// put the received data on the socket's receive queue

eaten = tcp_queue_rcv(sk, skb, &fragstolen);

// wake the process waiting on the socket

tcp_data_ready(sk);

...

}

sock_def_readable

sk_data_ready was set to sock_def_readable when the socket was created:

void sock_def_readable(struct sock *sk)

{

struct socket_wq *wq;

rcu_read_lock();

// get the wait queue

wq = rcu_dereference(sk->sk_wq);

// is any process sleeping on this socket's wait queue?

if (skwq_has_sleeper(wq))

// wake the processes on the wait queue

wake_up_interruptible_sync_poll(&wq->wait, EPOLLIN | EPOLLPRI |

EPOLLRDNORM | EPOLLRDBAND);

sk_wake_async(sk, SOCK_WAKE_WAITD, POLL_IN);

rcu_read_unlock();

}

Recall that at the end of recvfrom, DEFINE_WAIT_FUNC was used to add the wait queue entry associated with the current process to the wait queue under sock->sk_wq.

void __wake_up_locked_sync_key(struct wait_queue_head *wq_head,

unsigned int mode, void *key)

{

__wake_up_common(wq_head, mode, 1, WF_SYNC, key, NULL);

}

#define wake_up_interruptible_sync_poll_locked(x, m) \

__wake_up_locked_sync_key((x), TASK_INTERRUPTIBLE, poll_to_key(m))

__wake_up_common performs the actual wakeup of the waiting process.

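Summary

- When the application calls socket(), it enters kernel mode and the kernel creates the socket objects.

- recv enters kernel mode and checks the receive queue; if no data has arrived, it blocks the current process.

- The softirq context processes the incoming packet and places the data on the socket's receive queue.

- It then uses the socket object to find the process blocked on its wait queue and wakes it up.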

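Note that this path costs two process context switches:

1) every time a process waits for data on a socket, it is taken off the CPU and another process is switched in;

2) once the data is ready, the sleeping process is woken up and switched back in.

Each switch takes roughly 3 to 5 us, so in a network-I/O-intensive application the CPU spends a lot of time on work that does nothing useful.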
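To get a feel for the numbers, the cost can be estimated with simple arithmetic. The function below is a hypothetical helper, and the rate of 10,000 blocking receives per second used in the note after it is an assumed workload, not a measurement.

```c
/*
 * Microseconds of CPU time spent on context switches per second,
 * assuming every blocking receive costs two switches (sleep + wakeup).
 */
long demo_switch_cost_us(long blocking_recvs_per_sec, long cost_per_switch_us)
{
    const long switches_per_recv = 2;

    return blocking_recvs_per_sec * switches_per_recv * cost_per_switch_us;
}
```

With 10,000 blocking receives per second and 4 us per switch, this gives 10,000 × 2 × 4 = 80,000 us, that is 80 ms of every second (about 8% of one core) spent on switching alone.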

More efficient network I/O models: select, poll, epoll

Reference

https://course.0voice.com/v1/course/intro?courseId=2&agentId=0