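keepalived

Introduction

- keepalived

- keepalived is a service used in cluster management to keep a cluster highly available (HA). Like heartbeat, it guards against single points of failure.

- How keepalived works

keepalived is built on the VRRP protocol. When a backup stops receiving VRRP packets, it concludes that the master is down, and a new master is elected among the backups according to VRRP priority. This keeps the cluster highly available.

- VRRP itself is a protocol from the data-communications (networking) field.

VRRP stands for Virtual Router Redundancy Protocol.

VRRP can be seen as a protocol for making routers highly available. N routers that provide the same function form a router group with one master and several backups. The master holds a VIP (virtual IP) that serves clients; other machines on the router's LAN use this VIP as their default route. The master sends multicast advertisements. When the backups stop receiving VRRP packets, they consider the master down and elect a new master by VRRP priority. This keeps the router highly available.

- keepalived has three main modules: core, check, and vrrp. The core module is the heart of keepalived; it starts and maintains the main process and loads and parses the global configuration file. The check module performs health checks and covers the common check methods. The vrrp module implements the VRRP protocol.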
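As a rough illustration of the election rule described above, the following shell sketch picks a master from a list of nodes. The node names, the three-column input format, and the elect_master function are assumptions made for this example. Real VRRP runs inside keepalived and also breaks priority ties by IP address, which is ignored here.

```shell
#!/usr/bin/env bash
# Toy model of the VRRP election rule: among the nodes that are still
# advertising ("up"), the one with the highest priority becomes master.
# Input on stdin, one node per line: <name> <priority> <up|down>
# (hypothetical format, not something keepalived itself reads).
elect_master() {
  awk 'BEGIN { best = -1 }
       $3 == "up" && $2 + 0 > best { best = $2 + 0; name = $1 }
       END { if (name != "") print name }'
}

# Both nodes healthy: the higher priority wins.
printf '%s\n' 'director1 100 up' 'director2 50 up' | elect_master
# The master stops advertising: the backup takes over.
printf '%s\n' 'director1 100 down' 'director2 50 up' | elect_master
```

The first call prints director1 and the second prints director2, which mirrors the failover tested later in this post.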

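Lab requirements

- Two web servers

Two virtual machines with a standard Nginx installation, started and tested.

IP addresses

web server1 : 192.168.94.140

web server2 : 192.168.94.141

- Two keepalived servers

Install keepalived and nginx, configure keepalived for high availability and nginx as a reverse proxy and load balancer, then test.

IP addresses

keepalived server1 : 192.168.94.130

keepalived server2 : 192.168.94.131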

目的

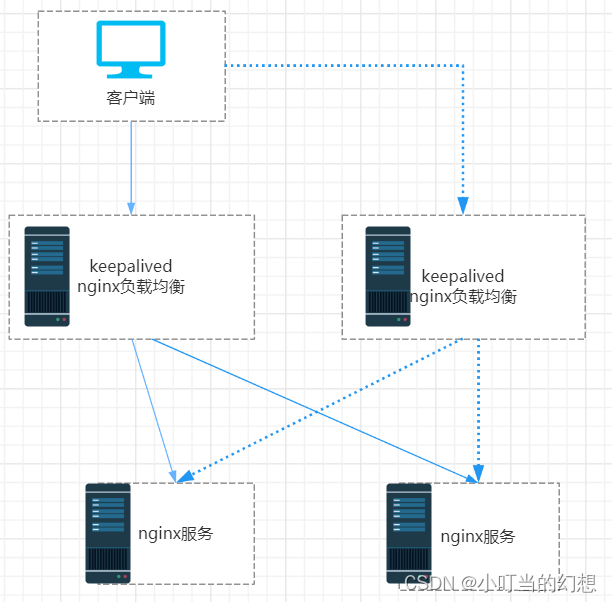

使用四台centos7的虚拟机实现keepalived的高可用

当一台做nginx负载均衡的机器宕机的时候另一台备用服务器开始代替宕掉的服务器进行工作

如下面拓扑图(虚线代表备用路线)

Configuration

Web server configuration

Permanently disable the firewall and SELinux.
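The post does not list the commands for this step. A minimal sketch for CentOS 7 follows, assuming firewalld is the active firewall, GNU sed is available, and the SELinux configuration lives in /etc/selinux/config. The function names are made up for this example.

```shell
#!/usr/bin/env bash
# Rewrite the SELINUX= line of an SELinux config file so that SELinux
# stays disabled after the next reboot. The file path is a parameter so
# the function can be tried on a copy first.
set_selinux_disabled() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Run as root on each machine. Defined here but not called automatically.
disable_firewall_and_selinux() {
  systemctl stop firewalld       # stop the firewall now
  systemctl disable firewalld    # keep it off after a reboot
  setenforce 0                   # switch SELinux to permissive for this boot
  set_selinux_disabled /etc/selinux/config
}
```

Turning both off is acceptable in a throwaway lab like this one. On a real server, opening port 80 and allowing VRRP traffic would be the safer route.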

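- Install nginx on the web servers

Both machines need the EPEL repository, because the stock CentOS 7 repositories do not include nginx.

    yum -y install epel-release

    yum -y install nginx

- Start the nginx service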

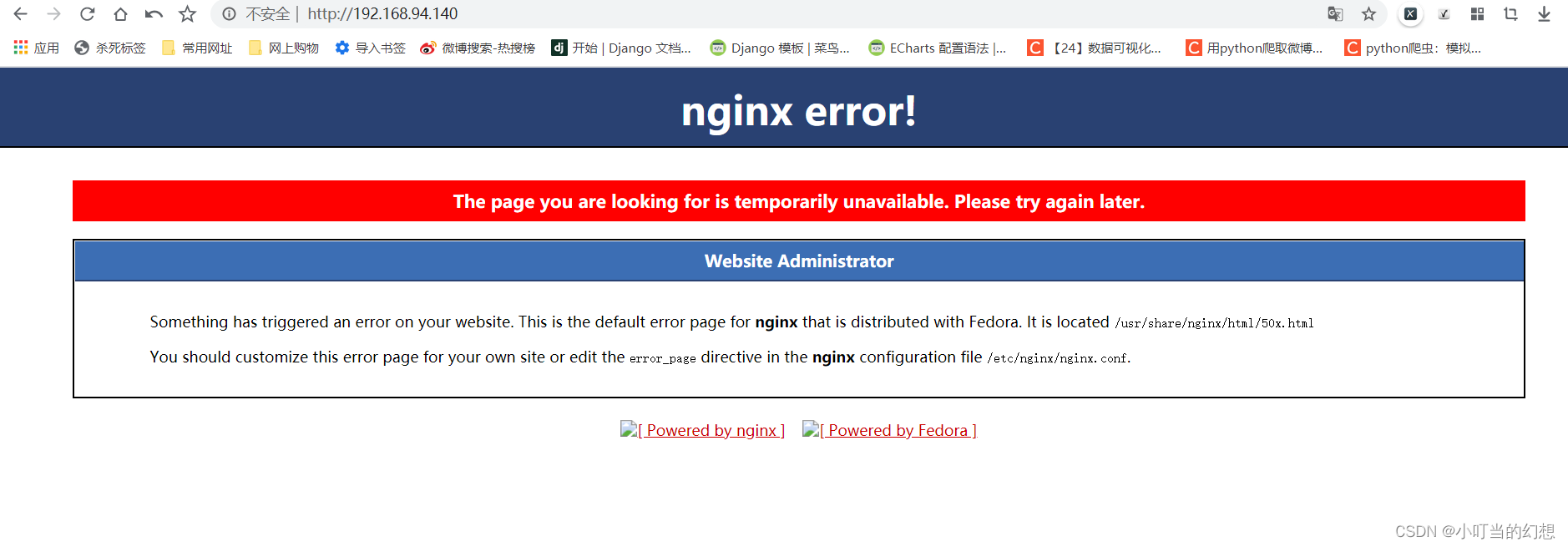

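Start nginx on both machines and check that it works:

    systemctl start nginx

Mine did not behave normally (see the screenshot), because my VM was not a clean install. If yours works, skip the troubleshooting.

- Troubleshooting

The page shows that nginx is running, so the likely cause is leftover nginx configuration on this VM.

Check the error log:

    cat /var/log/nginx/error.log

It shows:

    2022/06/17 16:02:44 [error] 1713#1713: *2 connect() failed (113: No route to host) while connecting to upstream, client: 192.168.94.1, server: localhost, request: "GET /favicon.ico HTTP/1.1", upstream: "http://192.168.94.139:80/favicon.ico", host: "192.168.94.140", referrer: "http://192.168.94.140/"

The log gives the cause: I had used this nginx for load balancing before and had changed its configuration, so it was still proxying to an upstream (192.168.94.139) that is unreachable.

Verify the guess by listing the drop-in configuration files:

    [root@web1 ~]# ls /etc/nginx/conf.d/
    proxy.conf  upstream.conf

Both files are there, which confirms the guess. Delete them, or rename them so they no longer end in .conf, then restart nginx so that it reloads its configuration.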
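The rename-then-restart step can be scripted. The sketch below is one way to do it; the disable_conf_files function and the .disabled suffix are choices made for this example, not something nginx requires.

```shell
#!/usr/bin/env bash
# Rename every *.conf file in a directory to *.conf.disabled so that
# nginx's "include /etc/nginx/conf.d/*.conf" no longer picks it up.
disable_conf_files() {
  local dir=$1 f
  for f in "$dir"/*.conf; do
    [ -e "$f" ] || continue      # the glob matched nothing
    mv -- "$f" "$f.disabled"
  done
}

# Hypothetical usage on the web server, as root:
#   disable_conf_files /etc/nginx/conf.d
#   nginx -t && systemctl restart nginx   # test the config, then restart
```

Renaming rather than deleting keeps the old load-balancing files around in case they are needed again.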

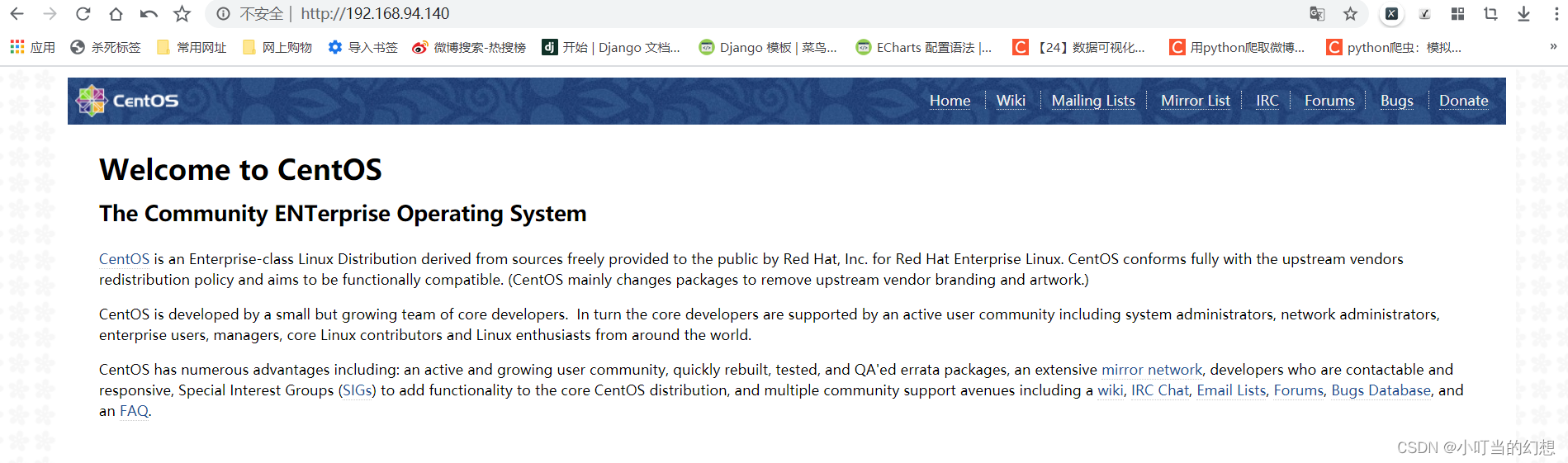

显示正常了

??我为什么要找/etc/nginx/conf.d下的文件,这是nginx服务定义的,你可以看主配置文件里的介绍

??而且我一般不怎么动主配置文件,来自菜鸡的不自信。error因人而异、只是分享不喜勿杠[root@web1 nginx]# cat /etc/nginx/nginx.conf有下面内容,意思是从

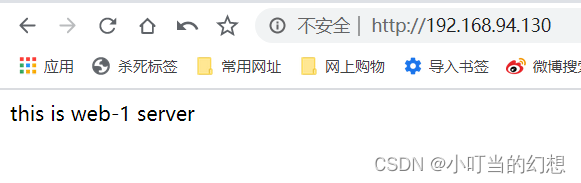

/etc/nginx/conf.d/目录中添加加载模块化配置文件# Load modular configuration files from the /etc/nginx/conf.d directory. # See http://nginx.org/en/docs/ngx_core_module.html#include # for more information. include /etc/nginx/conf.d/*.conf;- 重写自带的index.html以便于区分是哪台web server 提供的服务

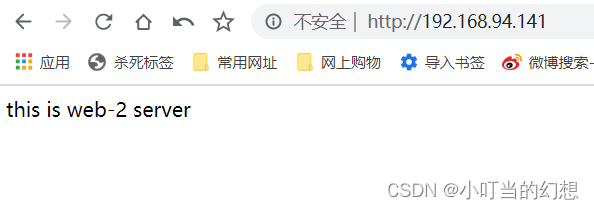

On web1:

    [root@web1 nginx]# echo "this is web-1 server" > /usr/share/nginx/html/index.html

On web2:

    [root@web2 ~]# echo "this is web-2 server" > /usr/share/nginx/html/index.html

How do you know that index.html lives under /usr/share/nginx/html/, or that the file you edited is the one nginx actually serves?

Read the configuration file and the build information.

    [root@web1 nginx]# cat /etc/nginx/nginx.conf

Among other things it contains:

    error_log /var/log/nginx/error.log;  # error log file
    pid /run/nginx.pid;                  # pid file
    root /usr/share/nginx/html;          # document root

    [root@web1 nginx]# nginx -V
    nginx version: nginx/1.20.1
    built by gcc 4.8.5 20150623 (Red Hat 4.8.5-44) (GCC)
    built with OpenSSL 1.1.1g FIPS 21 Apr 2020 (running with OpenSSL 1.1.1k FIPS 25 Mar 2021)
    TLS SNI support enabled
    configure arguments: --prefix=/usr/share/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --http-client-body-temp-path=/var/lib/nginx/tmp/client_body --http-proxy-temp-path=/var/lib/nginx/tmp/proxy --http-fastcgi-temp-path=/var/lib/nginx/tmp/fastcgi --http-uwsgi-temp-path=/var/lib/nginx/tmp/uwsgi --http-scgi-temp-path=/var/lib/nginx/tmp/scgi --pid-path=/run/nginx.pid --lock-path=/run/lock/subsys/nginx --user=nginx --group=nginx --with-compat --with-debug --with-file-aio --with-google_perftools_module --with-http_addition_module --with-http_auth_request_module --with-http_dav_module --with-http_degradation_module --with-http_flv_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_image_filter_module=dynamic --with-http_mp4_module --with-http_perl_module=dynamic --with-http_random_index_module --with-http_realip_module --with-http_secure_link_module --with-http_slice_module --with-http_ssl_module --with-http_stub_status_module --with-http_sub_module --with-http_v2_module --with-http_xslt_module=dynamic --with-mail=dynamic --with-mail_ssl_module --with-pcre --with-pcre-jit --with-stream=dynamic --with-stream_ssl_module --with-stream_ssl_preread_module --with-threads --with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_FORTIFY_SOURCE=2 -fexceptions -fstack-protector-strong --param=ssp-buffer-size=4 -grecord-gcc-switches -specs=/usr/lib/rpm/redhat/redhat-hardened-cc1 -m64 -mtune=generic' --with-ld-opt='-Wl,-z,relro -specs=/usr/lib/rpm/redhat/redhat-hardened-ld -Wl,-E'

The nginx -V output does not name the path of the index file, but it does show the install prefix, the error log path, the configuration file path, the nginx user and group, and so on.

You deal with these parameters directly when you build nginx from source.

Enough talk; here is the result.

Configuring keepalived and nginx

Do the following on the servers 192.168.94.130 and 192.168.94.131.

- Install keepalived and nginx

Enable both services at boot:

    systemctl enable keepalived
    systemctl enable nginx

On the two servers that will do the load balancing, write the nginx load-balancing configuration as files under /etc/nginx/conf.d/.

vim proxy.conf

    server {
        listen 80;
        server_name localhost;
        access_log /var/log/nginx/host.access.log main;
        location / {
            proxy_pass http://index;
            proxy_redirect default;
            proxy_set_header Host $http_host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header REMOTE-HOST $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

vim upstream.conf

    upstream index {
        server 192.168.94.140:80 weight=1 max_fails=2 fail_timeout=2;
        server 192.168.94.141:80 weight=2 max_fails=2 fail_timeout=2;
    }

Put the same configuration files on the other load balancer.

You can copy them over with scp. I wrote the files on the 131 machine, so the copy looks like this:

    [root@backup ~]# scp upstream.conf 192.168.94.130:/etc/nginx/conf.d/
    [root@backup ~]# scp proxy.conf 192.168.94.130:/etc/nginx/conf.d/

Restart nginx on both servers (130 and 131) so that the new configuration is loaded.

测试访问

方法:

??

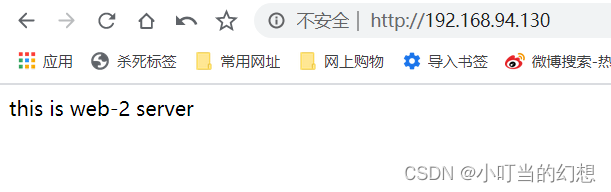

??浏览器直接访问两台负载均衡服务器的ip地址,并不断刷新

??

预测结果:

??

??两个ip地址都能够访问到上游服务器里的nginx web服务也就是140和141的nginx服务并且经过不断刷新访问两个上游服务器的次数比例为1:2,这是根据负载均衡的服务器upstream.conf配置文件里的weight权重决定的

??

结果:

??

正常
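Refreshing the browser by hand works, but the 1:2 ratio is easier to see when the responses are counted. The sketch below is one way to do that with curl. The tally_responses helper is made up for this example, and the request count of 30 is arbitrary.

```shell
#!/usr/bin/env bash
# Count how many times each distinct response line was seen.
# Reads response bodies on stdin and prints "<count> <body>" per backend.
tally_responses() {
  sort | uniq -c | awk '{ n = $1; $1 = ""; sub(/^ +/, ""); print n, $0 }'
}

# Hypothetical usage against one load balancer (30 requests):
#   for i in $(seq 1 30); do curl -s http://192.168.94.130/; done | tally_responses
```

With weights of 1 and 2, roughly a third of the responses should come from web-1 and two thirds from web-2. The exact counts depend on how nginx schedules the requests.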

- Configure the keepalived service

Write the configuration file.

Back up the configuration file on both machines first, so that a mistake can be undone:

    [root@master ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
    [root@backup ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

Master:

    ! Configuration File for keepalived
    global_defs {
        router_id director1         # director2 on the backup
    }
    vrrp_instance VI_1 {
        state MASTER                # master or backup role
        interface ens33             # interface the VIP is bound to
        virtual_router_id 80        # same on every node in the cluster
        priority 100                # 50 on the backup
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.94.150/24       # the VIP (virtual IP), which floats between nodes
        }
    }

Backup:

    global_defs {
        router_id director2
    }
    vrrp_instance VI_1 {            # instance name, same on both machines
        state BACKUP                # this node is the backup
        interface ens33             # heartbeat interface
        nopreempt                   # set on the backup: do not preempt
        virtual_router_id 80        # virtual router ID, same on master and backup
        priority 50                 # lower than the master
        advert_int 1                # advertisement interval in seconds
        authentication {            # password authentication
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.94.150/24
        }
    }

Restart keepalived:

    systemctl restart keepalived

Check that the master now has the extra virtual IP 192.168.94.150 and that the backup does not:

    [root@master ~]# systemctl restart keepalived
    [root@master ~]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:5c:99:6f brd ff:ff:ff:ff:ff:ff
        inet 192.168.94.130/24 brd 192.168.94.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet 192.168.94.150/24 scope global secondary ens33
           valid_lft forever preferred_lft forever

    [root@backup ~]# systemctl restart keepalived
    [root@backup ~]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:2c:b5:86 brd ff:ff:ff:ff:ff:ff
        inet 192.168.94.131/24 brd 192.168.94.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever

Test:

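Method:

Take the master down and see whether the virtual IP floats over to the backup.

Expected result:

It does, and the upstream nginx service remains reachable.

Preparation:

Check that the services on the master and the backup are running normally.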

[root@backup ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since 日 2022-06-19 09:30:05 CST; 19min ago

Process: 998 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 1048 (keepalived)

CGroup: /system.slice/keepalived.service

├─1048 /usr/sbin/keepalived -D

├─1050 /usr/sbin/keepalived -D

└─1051 /usr/sbin/keepalived -D

[root@backup ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since 日 2022-06-19 09:30:05 CST; 26min ago

Process: 998 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 1048 (keepalived)

CGroup: /system.slice/keepalived.service

├─1048 /usr/sbin/keepalived -D

├─1050 /usr/sbin/keepalived -D

└─1051 /usr/sbin/keepalived -D

6月 19 09:45:38 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:38 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:38 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:38 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:43 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:43 backup Keepalived_vrrp[1051]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on ens33 for 192.168.94.150

6月 19 09:45:43 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:43 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:43 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:45:43 backup Keepalived_vrrp[1051]: Sending gratuitous ARP on ens33 for 192.168.94.150

[root@backup ~]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)

Active: active (running) since 日 2022-06-19 09:48:30 CST; 8min ago

Process: 22308 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 22306 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Process: 22305 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 22310 (nginx)

CGroup: /system.slice/nginx.service

├─22310 nginx: master process /usr/sbin/nginx

└─22312 nginx: worker process

6月 19 09:48:30 backup systemd[1]: Starting The nginx HTTP and reverse proxy server...

6月 19 09:48:30 backup nginx[22306]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

6月 19 09:48:30 backup nginx[22306]: nginx: configuration file /etc/nginx/nginx.conf test is successful

6月 19 09:48:30 backup systemd[1]: Started The nginx HTTP and reverse proxy server.

[root@master ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since 日 2022-06-19 09:57:34 CST; 31s ago

Process: 57840 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 57841 (keepalived)

CGroup: /system.slice/keepalived.service

├─57841 /usr/sbin/keepalived -D

├─57842 /usr/sbin/keepalived -D

└─57843 /usr/sbin/keepalived -D

6月 19 09:57:35 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:35 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:35 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:35 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:40 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:40 master Keepalived_vrrp[57843]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on ens33 for 192.168.94.150

6月 19 09:57:40 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:40 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:40 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

6月 19 09:57:40 master Keepalived_vrrp[57843]: Sending gratuitous ARP on ens33 for 192.168.94.150

[root@master ~]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)

Active: active (running) since 日 2022-06-19 09:48:04 CST; 10min ago

Process: 46804 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 46802 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCESS)

Process: 46800 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 46808 (nginx)

CGroup: /system.slice/nginx.service

├─46808 nginx: master process /usr/sbin/nginx

├─46812 nginx: worker process

└─46813 nginx: worker process

6月 19 09:48:04 master systemd[1]: Starting The nginx HTTP and reverse proxy server...

6月 19 09:48:04 master nginx[46802]: nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

6月 19 09:48:04 master nginx[46802]: nginx: configuration file /etc/nginx/nginx.conf test is successful

6月 19 09:48:04 master systemd[1]: Started The nginx HTTP and reverse proxy server.

Check the IP addresses:

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5c:99:6f brd ff:ff:ff:ff:ff:ff

inet 192.168.94.130/24 brd 192.168.94.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.94.150/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe5c:996f/64 scope link

valid_lft forever preferred_lft forever

[root@backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2c:b5:86 brd ff:ff:ff:ff:ff:ff

inet 192.168.94.131/24 brd 192.168.94.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe2c:b586/64 scope link

valid_lft forever preferred_lft forever

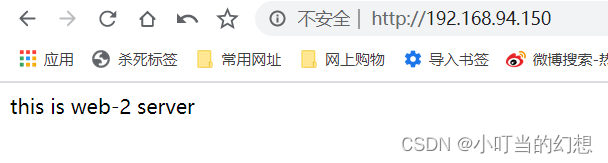

Stop the keepalived service on the master, which simulates the machine going down.

Then check the IP addresses again.

[root@master ~]# systemctl stop keepalived

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5c:99:6f brd ff:ff:ff:ff:ff:ff

inet 192.168.94.130/24 brd 192.168.94.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe5c:996f/64 scope link

valid_lft forever preferred_lft forever

[root@backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2c:b5:86 brd ff:ff:ff:ff:ff:ff

inet 192.168.94.131/24 brd 192.168.94.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.94.150/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe2c:b586/64 scope link

valid_lft forever preferred_lft forever

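Result:

The virtual IP floated from the master to the backup, which is what keeps the service highly available.

The upstream service still responds normally.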
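Instead of reading the ip a output by eye, the check for which node holds the virtual IP can be scripted. This is a small sketch; the has_vip function is a name invented for this example, and it only inspects text in the format that ip addr prints.

```shell
#!/usr/bin/env bash
# Succeed (exit 0) if the given IPv4 address appears as an "inet" entry
# in "ip addr" output read from stdin, and fail (exit 1) otherwise.
has_vip() {
  awk -v vip="$1" '
    $1 == "inet" { split($2, part, "/"); if (part[1] == vip) found = 1 }
    END { exit !found }'
}

# Hypothetical usage on either node:
#   ip addr show dev ens33 | has_vip 192.168.94.150 && echo "this node holds the VIP"
```

Running it on both nodes before and after stopping keepalived on the master should show the VIP on exactly one of them each time.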

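This post is only meant as a guide to the approach; please point out any mistakes.

The errors you run into will vary with your setup.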