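keepalived: A Product with No Real Substitute

High-availability cluster technology

Installing keepalived

Implementing VRRP with keepalived

High availability for LVS with keepalived

High availability for other applications with keepalived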


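1 High-Availability Clusters

- LB: Load Balance, load-balancing clusters

  - LVS/HAProxy/nginx (http/upstream, stream/upstream)

- HA: High Availability, high-availability clusters

  - Databases, Zookeeper, Redis

  - KeepAlived: a general-purpose high-availability cluster tool

  - SPoF: Single Point of Failure; HA removes single points of failure

- HPC: High Performance Computing, high-performance clusters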

1.2 System Availability

SLA: Service-Level Agreement, an agreement or contract accepted by both a service provider and its customers that covers the quality, level, and performance of the service

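1.4 Achieving High Availability

Raise system availability by lowering MTTR (Mean Time To Repair, the average time needed to restore service after a failure)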
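Availability is commonly expressed as MTBF / (MTBF + MTTR), so a shorter MTTR raises availability directly. The sketch below assumes that standard formula; MTBF (Mean Time Between Failures), the function name `availability`, and the sample figures are illustrative and do not come from the text above.

```shell
#!/usr/bin/env bash
# availability MTBF_HOURS MTTR_HOURS
# Prints the availability as a percentage with four decimals,
# using A = MTBF / (MTBF + MTTR).
availability() {
    awk -v mtbf="$1" -v mttr="$2" \
        'BEGIN { printf "%.4f\n", mtbf / (mtbf + mttr) * 100 }'
}

# Same failure rate, shorter repair time: availability goes up.
availability 1000 10   # each repair takes 10 hours
availability 1000 1    # each repair takes 1 hour
```

With one failure per 1000 hours, cutting the repair time from 10 hours to 1 hour moves the result from roughly 99.0% to roughly 99.9%.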

Solution: build redundancy

- Active/standby

- Dual active ###suitable when traffic is not particularly heavy

- active ---> HEARTBEAT ---> passive

- active <---> HEARTBEAT <---> active

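1.5 High-Availability Technologies

What is made highly available is the service; it only becomes highly available with the help of several supporting components

1.5.1 HA Service

Resources: the "components" that make up a highly available service, for example the VIP, the service process, and shared storage

- Number of passive nodes

- Resource switchover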

1.5.2 Shared Storage

- NAS (Network Attached Storage): a shared file system accessed over the network

- SAN (Storage Area Network): block-level shared storage accessed over the network

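1.5.3 Network Partition

Network high availability (duplicated links, routers, and switches) was the early approach to high availability

Isolation device: fence

node: STONITH = Shoot The Other Node In The Head (force the node offline or cut its power)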

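1.5.4 Two-Node Cluster

Auxiliary devices: arbitration devices such as a ping node or a quorum disk

- Failover: when the primary node of a resource fails, the resource is moved to another node

- Failback: after the failed primary node has been repaired and brought back online, the resource that was moved away is switched back to it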

1.5.5 HA Cluster实现方案

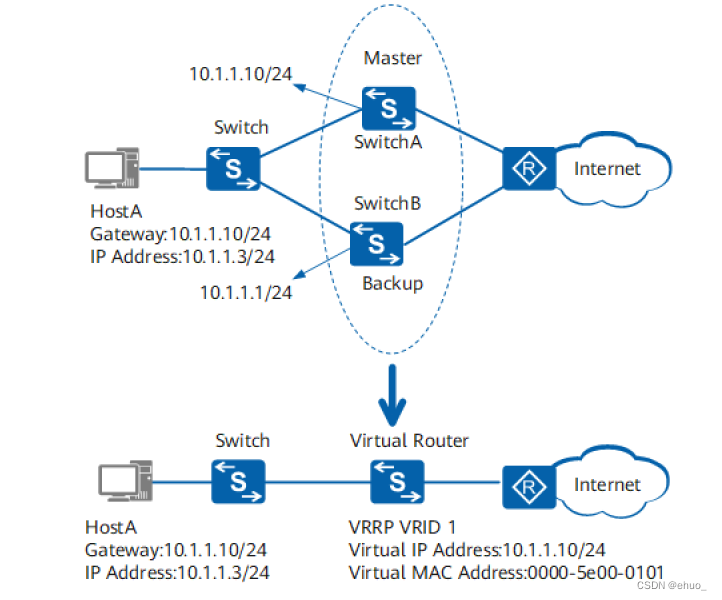

VRRP:Virtual Router Redundancy Protocol

虚拟路由冗余协议,解决静态网关单点风险

- 物理层:路由器、三层交换机

- 软件层:keepalived

1.5.6 VRRP

1.5.6.1 VRRP Implemented in Network-Layer Hardware

Reference links:

https://support.huawei.com/enterprise/zh/doc/EDOC1000141382/19258d72/basic-concepts-of-vrrp

https://wenku.baidu.com/view/dc0afaa6f524ccbff1218416.html

https://wenku.baidu.com/view/281ae109ba1aa8114431d9d0.html

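1.5.6.2 VRRP Terminology

- Virtual router: Virtual Router

- Virtual router identifier: VRID (1-255), uniquely identifies a virtual router (one externally visible service)

- VIP: Virtual IP (one or several)

- VMAC: virtual MAC, a single MAC address shared by the members

- Physical routers:

  - master: the primary device

  - backup: the standby device

  - priority: the priority

VRRP mechanisms

Advertisements: heartbeat, priority, and so on, sent periodically

Operating modes: preemptive and non-preemptive

Authentication

- No authentication

- Simple text authentication: pre-shared key

Working modes:

- Master/backup: a single virtual router

- Master/master: master/backup (virtual router 1) plus backup/master (virtual router 2)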

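2 Keepalived Architecture and Installation

2.1 Introduction to Keepalived

Keepalived is a software implementation of the VRRP protocol, originally designed to make the IPVS service highly available

Functions:

- Moves addresses between nodes using the VRRP protocol

- Generates IPVS rules (defined in advance in the configuration file) on the node that holds the VIP

- Health-checks every RS of the IPVS cluster

- Runs user-defined scripts through its script interface to influence cluster behavior, which is how services such as nginx and haproxy are supported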

2.2 keepalived Architecture

https://keepalived.org/doc/

http://keepalived.org/documentation.html

![image](https://img-blog.csdnimg.cn/a14591ce6ee34c7186c5a8be4754f39c.png)

- User-space core components:

  - vrrp stack: VIP advertisements

  - checkers: health checks of the Real Servers

  - system call: runs scripts when the VRRP protocol changes state

  - SMTP: mail component

  - IPVS wrapper: generates IPVS rules

  - Netlink Reflector: network interface

  - WatchDog: monitors the processes

- Control component: the parser for keepalived.conf, which applies the Keepalived configuration

- I/O multiplexer: keepalived's own thread abstraction, optimized for networking

- Memory management component: gives access to common memory-management functions (allocation, reallocation, release, and so on)

2.3 Preparing the Environment

![image](https://img-blog.csdnimg.cn/50eefc580b1b4c4a9c2ecfc70e521f2b.png)

Two nodes: their clocks must be synchronized (ntp or chrony)

Disable the firewall and SELinux

2.4 Keepalived Files

Package name: keepalived

Main program: /usr/sbin/keepalived

Main configuration file: /etc/keepalived/keepalived.conf

Sample configuration files: /usr/share/doc/keepalived/

Unit file: /lib/systemd/system/keepalived.service

Environment file for the unit file:

/etc/sysconfig/keepalived (CentOS)

/etc/default/keepalived (Ubuntu)

Note: CentOS 7 has a bug that may cause the following

systemctl restart keepalived #the new configuration may not take effect

systemctl stop keepalived;systemctl start keepalived #the process may fail to stop and has to be killed

2.5 Installing Keepalived

2.5.1 Installing from Packages

#CentOS

[root@10 ~]# yum -y install keepalived

yum info keepalived #show detailed package information

#Ubuntu

[root@ubuntu2004 ~]#apt -y install keepalived

root@ubuntu2004:~# apt list keepalived

Listing... Done

keepalived/focal-updates,now 1:2.0.19-2ubuntu0.2 amd64 [installed]

N: There are 2 additional versions. Please use the '-a' switch to see them.

root@ubuntu2004:~#

#On Ubuntu the configuration file is missing by default

dpkg -L keepalived #list the packaged files to find the sample configuration

root@ubuntu2004:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.sample /etc/keepalived/keepalived.conf

#Core VRRP functionality: the VIPs from the sample configuration appear on eth0

root@ubuntu2004:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:8a:24:a5 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.100/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.200.11/32 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.200.12/32 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.200.13/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe8a:24a5/64 scope link

valid_lft forever preferred_lft forever

#Building from source

[root@ubuntu2004 ~]#apt update

[root@ubuntu2004 ~]#apt -y install make gcc ipvsadm build-essential pkg-config automake autoconf libipset-dev libnl-3-dev libnl-genl-3-dev libssl-dev libxtables-dev libip4tc-dev libip6tc-dev libipset-dev libmagic-dev libsnmp-dev libglib2.0-dev libpcre2-dev libnftnl-dev libmnl-dev libsystemd-dev

root@ka1:~# wget https://keepalived.org/software/keepalived-2.2.7.tar.gz

root@ka1:~# tar xf keepalived-2.2.7.tar.gz

root@ka1:~# ls

init4.sh keepalived-2.2.7 keepalived-2.2.7.tar.gz reset_v6.sh snap

root@ka1:~# cd keepalived-2.2.7

#The --disable-fwmark option disables the iptables rules and keeps the VIP from becoming unreachable; without it, iptables rules are enabled by default

root@ka1:~/keepalived-2.2.7# ./configure --prefix=/usr/local/keepalived --disable-fwmark

root@ka1:~/keepalived-2.2.7# make -j 2 && make install

root@ka1:~/keepalived-2.2.7# ls /usr/local/keepalived/

bin etc sbin share

root@ka1:~/keepalived-2.2.7# tree /usr/local/keepalived/

#Show the version information

root@ka1:~/keepalived-2.2.7# /usr/local/keepalived/sbin/keepalived -v

Keepalived v2.2.7 (01/16,2022)

root@ka1:~/keepalived-2.2.7# cp keepalived/keepalived.service /lib/systemd/system/

#The service cannot start yet because the configuration file is missing

root@ka1:~/keepalived-2.2.7# ls /usr/local/keepalived/etc/keepalived/

keepalived.conf.sample samples

root@ka1:~/keepalived-2.2.7# cp /usr/local/keepalived/etc/keepalived/keepalived.conf.sample /usr/local/keepalived/etc/keepalived/keepalived.conf

root@ka1:~/keepalived-2.2.7# mv /usr/local/keepalived/etc/keepalived/keepalived.conf /usr/local/keepalived/etc/

#Check the file at its new path

root@ka1:~/keepalived-2.2.7# cat /usr/local/keepalived/etc/keepalived.conf

root@ka2:~# systemctl daemon-reload

root@ka2:~# systemctl enable --now keepalived.service

root@ka1:~# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/lib/systemd/system/keepalived.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2022-07-14 17:30:00 CST; 3min 26s ago

Docs: man:keepalived(8)

man:keepalived.conf(5)

man:genhash(1)

https://keepalived.org

Main PID: 77346 (keepalived)

Tasks: 3 (limit: 2237)

Memory: 2.0M

CGroup: /system.slice/keepalived.service

├─77346 /usr/local/keepalived/sbin/keepalived -f /usr/local/keepalived/etc/keepalived.conf --dont-fork -D

├─77362 /usr/local/keepalived/sbin/keepalived -f /usr/local/keepalived/etc/keepalived.conf --dont-fork -D

└─77363 /usr/local/keepalived/sbin/keepalived -f /usr/local/keepalived/etc/keepalived.conf --dont-fork -D

Jul 14 17:30:07 ka1.ehuo.org Keepalived_healthcheckers[77362]: HTTP_CHECK on service [192.168.200.2]:tcp:1358 failed after 3 retries.

Jul 14 17:30:07 ka1.ehuo.org Keepalived_healthcheckers[77362]: Removing service [192.168.200.2]:tcp:1358 from VS [10.10.10.2]:tcp:1358

Jul 14 17:30:07 ka1.ehuo.org Keepalived_healthcheckers[77362]: Lost quorum 1-0=1 > 0 for VS [10.10.10.2]:tcp:1358

Jul 14 17:30:07 ka1.ehuo.org Keepalived_healthcheckers[77362]: Adding sorry server [192.168.200.200]:tcp:1358 to VS [10.10.10.2]:tcp:1358

Jul 14 17:30:07 ka1.ehuo.org Keepalived_healthcheckers[77362]: Removing alive servers from the pool for VS [10.10.10.2]:tcp:1358

Jul 14 17:30:26 ka1.ehuo.org Keepalived_healthcheckers[77362]: smtp fd 10 returned write error

Jul 14 17:30:27 ka1.ehuo.org Keepalived_healthcheckers[77362]: smtp fd 11 returned write error

Jul 14 17:30:27 ka1.ehuo.org Keepalived_healthcheckers[77362]: smtp fd 12 returned write error

root@ka1:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:33:8c:a4 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.103/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe33:8ca4/64 scope link

valid_lft forever preferred_lft forever

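#The VIPs do not appear on this node. If every node held them at once, the addresses would conflict

Only one node may own and advertise the VIPs at a time; shut down the machine that currently holds them to see them move

root@ka2:~# mkdir /etc/keepalived

root@ka2:~# mv /usr/local/keepalived/etc/keepalived.conf /etc/keepalived/

root@ka2:~# vim /lib/systemd/system/keepalived.service

#Remove the -f option together with the old configuration file path

root@ka2:~# systemctl daemon-reload

root@ka2:~# systemctl restart keepalived.service

#The VIP shown by ip a floats: if one machine goes down, it moves to the other

vim /etc/keepalived/keepalived.conf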

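2.6 KeepAlived Configuration

2.6.1 Structure of the Configuration File

Configuration file

/etc/keepalived/keepalived.conf

Sections of the configuration file

GLOBAL CONFIGURATION

Global definitions: mail settings, router_id, VRRP settings, multicast address, and so on

VRRP CONFIGURATION

VRRP instance(s): one definition per VRRP virtual router

LVS CONFIGURATION

Virtual server group(s)

Virtual server(s): the VS and RS of an LVS cluster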

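2.6.2 Configuration Syntax

2.6.2.1 Global Configuration

global_defs {

router_id LVS_DEVEL #router ID; using the host name is recommended so nodes can be told apart

vrrp_skip_check_adv_addr #skip the address check for adverts that come from the same master as the previous advert; checking every advert costs performance

vrrp_strict #automatically enables iptables firewall rules, which by default make the VIP unreachable; leaving this out is recommended

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 230.1.1.1 #multicast address, range 224.0.0.0 to 239.255.255.255, default 224.0.0.18

vrrp_iptables #when enabled together with vrrp_strict, no firewall rules are added; not needed if vrrp_strict is not configured

}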
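Because vrrp_mcast_group4 only makes sense with an address inside 224.0.0.0 to 239.255.255.255, it can help to validate the value before writing it into the configuration. The following is a minimal sketch of such a check; the function name `is_multicast_v4` is hypothetical and is not part of keepalived.

```shell
#!/usr/bin/env bash
# is_multicast_v4 ADDR
# Returns 0 when ADDR is a dotted-quad IPv4 address inside
# 224.0.0.0 - 239.255.255.255, otherwise 1.
is_multicast_v4() {
    case $1 in
        ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;   # empty, stray characters, empty octets
    esac
    old_ifs=$IFS
    IFS=.
    set -- $1                                   # split the address into its octets
    IFS=$old_ifs
    [ $# -eq 4 ] || return 1
    for octet in "$@"; do
        [ ${#octet} -le 3 ] && [ "$octet" -le 255 ] || return 1
    done
    [ "$1" -ge 224 ] && [ "$1" -le 239 ]
}

for addr in 230.1.1.1 224.0.0.18 10.0.0.100; do
    if is_multicast_v4 "$addr"; then
        echo "$addr: usable as a multicast group"
    else
        echo "$addr: not a multicast address"
    fi
done
```

The loop checks the address used in this document, the VRRP default group, and an ordinary unicast address for contrast.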

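2.6.2.2 Configuring a Virtual Router

vrrp_instance VI_1 { #<String> is the VRRP instance name, usually the name of the service

state MASTER #nominal only: the initial state of this node in the virtual router, MASTER or BACKUP

interface eth0 #physical interface bound to this virtual router, e.g. eth0, bond0, br0; it need not be the interface that carries the VIP

virtual_router_id 66 #virtual router ID; must be identical on every node of the same cluster

priority NUM #what actually decides the master: this node's priority in the virtual router, range 1-254, different on every keepalived node

advert_int 1 #interval between VRRP advertisements, default 1s

authentication { #authentication

auth_type PASS #AH is IPSEC authentication (not recommended), PASS is a simple password (recommended)

auth_pass 123456 #pre-shared key, only the first 8 characters are used; must be the same on all keepalived nodes of the virtual router

}

virtual_ipaddress { #virtual IPs; production setups may list hundreds of addresses

10.0.0.100 dev eth0 label eth0:1 #interface label for the VIP

}

}

Packet capture

[root@10 ~]# tcpdump -i eth0 -nn host 230.1.1.1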

2.7 Enabling Keepalived Logging

root@ka1:~# cat /usr/local/keepalived/etc/sysconfig/keepalived

# Options for keepalived. See `keepalived --help' output and keepalived(8) and

# keepalived.conf(5) man pages for a list of all options. Here are the most

# common ones :

#

# --vrrp -P Only run with VRRP subsystem.

# --check -C Only run with Health-checker subsystem.

# --dont-release-vrrp -V Dont remove VRRP VIPs & VROUTEs on daemon stop.

# --dont-release-ipvs -I Dont remove IPVS topology on daemon stop.

# --dump-conf -d Dump the configuration data.

# --log-detail -D Detailed log messages.

# --log-facility -S 0-7 Set local syslog facility (default=LOG_DAEMON)

#

KEEPALIVED_OPTIONS="-D"

Writing to a dedicated log file

vim /etc/rsyslog.d/6-keepalived.conf

local6.* /var/log/keepalived.log

vim /usr/local/keepalived/etc/sysconfig/keepalived

KEEPALIVED_OPTIONS="-D -S 6"

#-D enables detailed messages; -S 6 selects syslog facility local6

systemctl restart keepalived.service rsyslog.service

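2.8 Splitting Keepalived into Sub-Configuration Files

In a complex production environment /etc/keepalived/keepalived.conf grows too large to manage easily; the configuration of each cluster, for example its VIP settings, can be moved into its own sub-configuration file

The include directive pulls in sub-configuration files

include /path/file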
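One possible layout keeps only global settings in the main file and places one file per cluster under conf.d. The sketch below writes that layout into a scratch directory so nothing on the host is touched. The `CONF_ROOT` variable and the router_id value are illustrative assumptions, and the wildcard in the include line relies on keepalived accepting glob patterns there.

```shell
#!/usr/bin/env bash
# Build the layout under a scratch directory by default.
# Assumption: set CONF_ROOT=/etc/keepalived (as root) to apply it for real.
CONF_ROOT=${CONF_ROOT:-$(mktemp -d)}
mkdir -p "$CONF_ROOT/conf.d"

# Main file: global settings plus the include line.
cat > "$CONF_ROOT/keepalived.conf" <<EOF
global_defs {
    router_id ka1.ehuo.org
}

include $CONF_ROOT/conf.d/*.conf
EOF

# One sub-configuration file per cluster / VIP.
cat > "$CONF_ROOT/conf.d/www.ehuo.org.conf" <<'EOF'
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 66
    priority 100
    advert_int 1
    virtual_ipaddress {
        10.0.0.100 dev eth0 label eth0:1
    }
}
EOF

echo "configuration written to $CONF_ROOT"
```

Adding another cluster then means dropping one more file into conf.d instead of editing the main file.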

3 Implementing VRRP with Keepalived

journalctl -u keepalived.service

On Ubuntu the configuration file is missing by default

The node gains a VIP address; keepalived itself does not expose a service port

Configuration file

VRRP address failover

Three settings must match on all nodes:

multicast address, password, virtual router ID

Three settings differ between nodes:

state, router_id, priority

global_defs {

router_id LVS_DEVEL #router ID; using the host name is recommended so nodes can be told apart

vrrp_skip_check_adv_addr #skip the address check for adverts from the same master; checking every advert costs performance

#vrrp_strict #automatically enables iptables firewall rules, which by default make the VIP unreachable; leaving this out is recommended

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 230.1.1.1

}

vrrp_instance VI_1 {

state MASTER #nominal only

interface eth0

virtual_router_id 66 #virtual router ID; must be identical on every node of the same cluster

priority NUM #what actually decides the master

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

tcpdump -i eth0 -nn host 230.1.1.1

![image](https://img-blog.csdnimg.cn/2acd5b36a34b47648a344ba2b34a049e.png)

unicast_src_ip 10.0.0.101

unicast_peer {

10.0.0.102

}

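3.2 Preemptive and Non-Preemptive Modes

The default is preemptive mode (preempt): when a higher-priority host comes back online it takes the master role from the lower-priority host, which causes a brief network disruption. Non-preemptive mode (nopreempt) is recommended instead: a higher-priority host that recovers does not take the master role back from the lower-priority host

Note: in non-preemptive mode, if the original host goes down the VIP moves to the new host; if the new host later goes down as well, the VIP still moves back to the original host

Note: to disable VIP preemption, state must be set to BACKUP on every Keepalived server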
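The difference between the two modes can be written down as a small decision function. This is a simplified model of the behavior described above, not keepalived's actual code: the function name `takes_over` and its arguments are hypothetical, and tie-breaking between equal priorities is left out.

```shell
#!/usr/bin/env bash
# takes_over LOCAL_PRIO MASTER_PRIO [MODE]
# MASTER_PRIO is the priority seen in the adverts currently being received,
# or the word "none" when no master is advertising.
# MODE is "preempt" (the default behavior) or "nopreempt".
# Returns 0 when this node should become master, 1 otherwise.
takes_over() {
    local_prio=$1
    master_prio=$2
    mode=${3:-preempt}
    [ "$master_prio" = none ] && return 0      # nobody is master: always take over
    [ "$mode" = nopreempt ] && return 1        # a master is alive: leave it alone
    [ "$local_prio" -gt "$master_prio" ]       # preempt only with a higher priority
}

# A recovered node with priority 100 sees a master advertising priority 80.
takes_over 100 80 preempt   && echo "preempt: take the VIP back"
takes_over 100 80 nopreempt || echo "nopreempt: stay backup"
# The current master disappears, so even a nopreempt node takes over.
takes_over 100 none nopreempt && echo "no master: take over"
```

The last call mirrors the first note above: nopreempt only prevents taking the role from a live master, it does not stop a node from taking over once the master is gone.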

#ha1 configuration

vrrp_instance VI_1 {

state BACKUP #BACKUP on both nodes

interface eth0

virtual_router_id 66

priority 100 #higher priority

advert_int 1

nopreempt #add this line to enable non-preemptive mode

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

#ha2 configuration

vrrp_instance VI_1 {

state BACKUP #BACKUP on both nodes

interface eth0

virtual_router_id 66

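priority 80 #lower priority

advert_int 1

#nopreempt #in production ka2 stays preemptive, so this line is not added; otherwise no switchover to the other node would happen even when ka1's priority drops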

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

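3.2.2 Delayed Preemption: preempt_delay

In delayed preemption mode, a higher-priority host that comes back does not reclaim the VIP immediately; it waits for the configured delay first

preempt_delay # #delay preemption by # seconds, range 0-1000, default 0 (no delay)

Note: every keepalived server must have state BACKUP, and vrrp_strict must not be enabled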

#ka1 configuration

vrrp_instance VI_1 {

state BACKUP #BACKUP on both nodes

interface eth0

virtual_router_id 66

priority 100 #higher priority

advert_int 1

preempt_delay 60 #delayed preemption: wait 60s before reclaiming the VIP

#ka2 configuration

vrrp_instance VI_1 {

state BACKUP #BACKUP on both nodes

interface eth0

virtual_router_id 66

priority 80 #lower priority

advert_int 1

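3.3 Unicast VIP Configuration

By default keepalived hosts advertise to each other over multicast, which can add load to the network; switching to unicast reduces that traffic

Note: unicast cannot be used when vrrp_strict is enabled

#In the vrrp_instance block on every node, set the IP of the peer host; an address on a dedicated heartbeat network is recommended rather than the service network

unicast_src_ip <IPADDR> #source IP for the unicast advertisements

unicast_peer {

<IPADDR> #IP of the peer host that receives the unicast advertisements

......

}

#master host configuration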

root@ka1:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 66

priority 100

advert_int 1

nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

unicast_src_ip 10.0.0.103 #this node's own address

unicast_peer {

10.0.0.104 #peer address

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

#slave host configuration

root@ka2:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 66

priority 80

advert_int 1

#nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

unicast_src_ip 10.0.0.104 #this node's own address

unicast_peer {

10.0.0.103 #peer address

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

![image](https://img-blog.csdnimg.cn/8418374959a54385963caa961ce692b7.png)

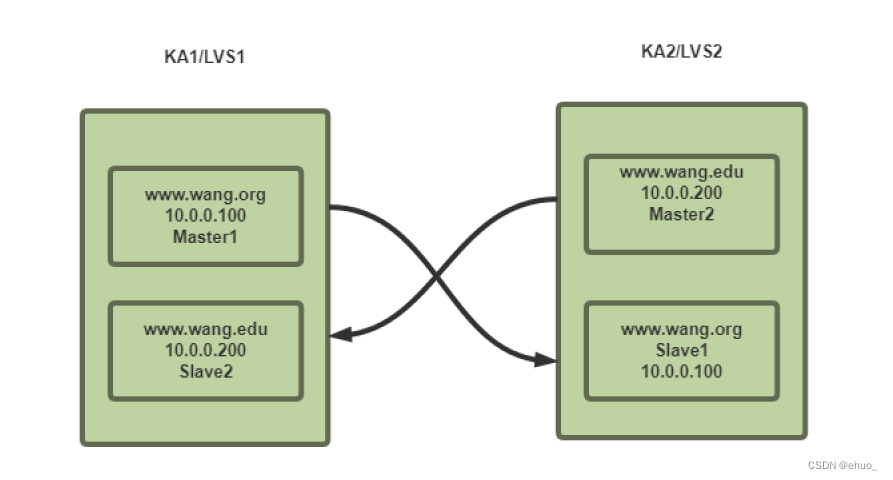

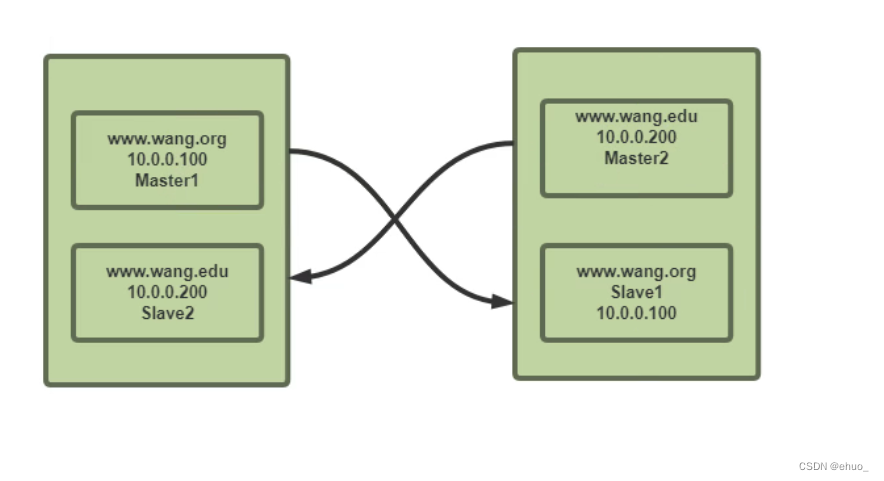

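3.5 Master/Master Dual-Active Keepalived

In the master/slave single-master architecture only one Keepalived host serves traffic at any time: that host is busy while the other sits idle, so utilization is low. A master/master dual-active architecture addresses this.

Master/Master dual-active architecture:

Two or more VIPs run on different keepalived servers, so the servers handle web traffic in parallel and server utilization improves

In short, each node carries two copies of the instance configuration, one per VIP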

root@ka1:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 66

priority 100

advert_int 1

nopreempt #per 3.2, this only takes effect when state is BACKUP

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

root@ka1:/etc/keepalived/conf.d# cat www.ehuo.edu.conf

vrrp_instance VI_2 {

state BACKUP #backup for this VIP

interface eth0

virtual_router_id 88 #different VRID: this is a separate virtual router, not the same cluster

priority 80 #lower priority

advert_int 1

nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:2 #second VIP on its own interface label

}

}

root@ka2:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 66

priority 80

advert_int 1

#nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

root@ka2:/etc/keepalived/conf.d# cat www.ehuo.edu.conf

vrrp_instance VI_2 {

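state MASTER #master for this VIP

interface eth0

virtual_router_id 88 #different VRID: this is a separate virtual router, not the same cluster

priority 100 #higher priority

advert_int 1

nopreempt #per 3.2, this only takes effect when state is BACKUP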

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:2 #second VIP on its own interface label

}

}

#Test

root@ka1:/etc/keepalived/conf.d# hostname -I

10.0.0.103 10.0.0.100

root@ka1:/etc/keepalived/conf.d# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:33:8c:a4 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.103/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.100/32 scope global eth0:1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe33:8ca4/64 scope link

valid_lft forever preferred_lft forever

root@ka2:/etc/keepalived/conf.d# hostname -I

10.0.0.104 10.0.0.200

root@ka2:/etc/keepalived/conf.d# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:4d:e7:36 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.104/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.200/32 scope global eth0:2

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe4d:e736/64 scope link

valid_lft forever preferred_lft forever

3.6 Multi-Master Architecture

3.6.2 Example: Three Masters and Six Backups on Three Nodes

![image](https://img-blog.csdnimg.cn/cb3fc760ba0b46d59a2481ab751a8a01.png)

#Node 1 (ka1):

virtual_router_id 1 , Vrrp instance 1 , MASTER, priority 100

virtual_router_id 2 , Vrrp instance 2 , BACKUP, priority 80

virtual_router_id 3 , Vrrp instance 3 , BACKUP, priority 60

#Node 2 (ka2):

virtual_router_id 1 , Vrrp instance 1 , BACKUP, priority 60

virtual_router_id 2 , Vrrp instance 2 , MASTER, priority 100

virtual_router_id 3 , Vrrp instance 3 , BACKUP, priority 80

#Node 3 (ka3):

virtual_router_id 1 , Vrrp instance 1 , BACKUP, priority 80

virtual_router_id 2 , Vrrp instance 2 , BACKUP, priority 60

virtual_router_id 3 , Vrrp instance 3 , MASTER, priority 100
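The priorities above follow a rotation: each node is master (100) for the instance with its own number and drops by 20 for every step further along the ring. A small sketch can reproduce the table; the function name `priority_for` and the fixed step of 20 are illustrative assumptions taken from the numbers in this example.

```shell
#!/usr/bin/env bash
# priority_for NODE INSTANCE [NODES] [STEP]
# Prints the priority of node NODE for instance INSTANCE when priorities
# rotate around a ring of NODES nodes, dropping STEP per position.
priority_for() {
    node=$1
    instance=$2
    nodes=${3:-3}
    step=${4:-20}
    offset=$(( (instance - node + nodes) % nodes ))
    echo $(( 100 - step * offset ))
}

for n in 1 2 3; do
    for i in 1 2 3; do
        prio=$(priority_for "$n" "$i")
        if [ "$prio" -eq 100 ]; then state=MASTER; else state=BACKUP; fi
        echo "ka$n: virtual_router_id $i, VI_$i, $state, priority $prio"
    done
done
```

With this rotation every instance has exactly one node at each priority level, so the VIP always has a well-defined first and second fallback.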

4 High Availability for IPVS

4.1 IPVS Configuration

4.1.1 Structure of a Virtual Server Configuration

KLVS

![image](https://img-blog.csdnimg.cn/b7f6e3fd4af4428da17d244779e6d81f.png)

Each virtual server is one IPVS cluster

It is defined with the following syntax

virtual_server IP PORT {

...

real_server{

...

}

real_server{

...

}

}

4.1.2 Virtual Server Definition Formats

virtual_server IP PORT #virtual server IP address and port

virtual_server fwmark int #IPVS firewall mark, for a load-balancing cluster based on firewall marks

virtual_server group string #use a virtual server group

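4.1.4 Virtual Server Configuration

virtual_server IP PORT {

delay_loop <INT> #interval between health checks of the backend servers

lb_algo rr|wrr|lc|wlc|lblc|sh|dh #scheduling method

lb_kind NAT|DR|TUN #cluster type, must be upper case

persistence_timeout <INT> #persistent connection duration

protocol TCP|UDP|SCTP #service protocol, usually TCP

sorry_server <IPADDR> <PORT> #fallback server used when all RS have failed

real_server <IPADDR> <PORT> { #RS IP and port

weight <INT> #RS weight

notify_up <STRING>|<QUOTED-STRING> #script run when the RS comes up

notify_down <STRING>|<QUOTED-STRING> #script run when the RS goes down

HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK { ... } #health check method for this host

}

}

#Note: each closing brace must be on its own line; two on one line, such as }}, cause an error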

4.1.5 Application-Layer Checks

Application-layer checks: HTTP_GET|SSL_GET

HTTP_GET|SSL_GET {

url {

path <URL_PATH> #URL to monitor

status_code <INT> #response code that counts as healthy, usually 200

}

connect_timeout <INTEGER> #timeout of the check request, comparable to haproxy's timeout server

nb_get_retry <INT> #number of retries

delay_before_retry <INT> #delay before each retry

connect_ip <IP ADDRESS> #RS IP address to send the health check to

connect_port <PORT> #RS port to send the health check to

bindto <IP ADDRESS> #source address used for the health check

bind_port <PORT> #source port used for the health check

}

4.1.6 TCP Checks

Transport-layer check: TCP_CHECK

TCP_CHECK {

connect_ip <IP ADDRESS> #RS IP address to send the health check to

connect_port <PORT> #RS port to send the health check to

bindto <IP ADDRESS> #source address used for the health check

bind_port <PORT> #source port used for the health check

connect_timeout <INTEGER> #timeout of the check request, comparable to haproxy's timeout server

}
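A TCP check only asks whether a connection to the RS port can be established within the timeout. The sketch below imitates that test from a shell, which is handy for confirming that a real server is reachable before blaming the keepalived configuration. It relies on bash's /dev/tcp pseudo-device and the coreutils `timeout` command; the function name `tcp_check` is hypothetical, and this is not how keepalived implements TCP_CHECK internally.

```shell
#!/usr/bin/env bash
# tcp_check HOST PORT [TIMEOUT_SECONDS]
# Returns 0 when a TCP connection to HOST:PORT is established within the
# timeout (default 5 seconds), non-zero otherwise.
tcp_check() {
    # $0 and $1 inside the inner bash are HOST and PORT.
    timeout "${3:-5}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example (not executed here): check the first real server from this document.
# if tcp_check 10.0.0.15 80 5; then echo "RS up"; else echo "RS down"; fi
```

The host, port, and timeout arguments correspond to connect_ip, connect_port, and connect_timeout in the TCP_CHECK block above.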

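4.2 Practical Examples

4.2.1 Example: Single-Master LVS-DR

Prepare the web servers and use a script to bind the VIP to the lo interface of each web server

#Prepare two backend RS hosts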

[root@10 ~]# cat lvs_dr_rs.sh

vip=10.0.0.100

mask='255.255.255.255'

dev=lo:1

rpm -q httpd &> /dev/null || yum -y install httpd &>/dev/null

service httpd start &> /dev/null && echo "The httpd Server is Ready!"

echo "`hostname -I`" > /var/www/html/index.html

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig $dev $vip netmask $mask #broadcast $vip up

#route add -host $vip dev $dev

echo "The RS Server is Ready!"

;;

stop)

ifconfig $dev down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "The RS Server is Canceled!"

;;

*)

echo "Usage: $(basename $0) start|stop"

exit 1

;;

esac

[root@10 ~]# bash lvs_dr_rs.sh start

The httpd Server is Ready!

The RS Server is Ready!

[root@10 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 10.0.0.100/32 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:45:df:fe brd ff:ff:ff:ff:ff:ff

inet 10.0.0.15/24 brd 10.0.0.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe45:dffe/64 scope link

valid_lft forever preferred_lft forever

#Test direct access to both RS hosts

[root@192 ~]# curl 10.0.0.15

10.0.0.15

[root@192 ~]# curl 10.0.0.25

10.0.0.25

Configuring keepalived

#keepalived configuration on host 103

root@ka1:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 66

priority 100

advert_int 1

nopreempt #per 3.2, this only takes effect when state is BACKUP

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

virtual_server 10.0.0.100 80 { #VIP and port

delay_loop 6 #interval between health checks of the backend servers

lb_algo rr #scheduling method

lb_kind DR #cluster type, must be upper case

#persistence_timeout 3 #persistent connection duration

protocol TCP #service protocol, usually TCP

real_server 10.0.0.15 80 { #RS IP and port

weight 1 #RS weight

TCP_CHECK {

connect_timeout 5 #timeout of the check request, comparable to haproxy's timeout server

nb_get_retry 3 #number of retries

delay_before_retry 3 #delay before each retry

connect_port 80 #RS port to send the health check to

}

}

real_server 10.0.0.25 80 { #second RS, same checks

weight 1

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

#keepalived configuration on host 104

root@ka2:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 66

priority 80

advert_int 1

#nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

}

virtual_server 10.0.0.100 80 { #VIP and port

delay_loop 6 #interval between health checks of the backend servers

lb_algo rr #scheduling method

lb_kind DR #cluster type, must be upper case

#persistence_timeout 3 #persistent connection duration

protocol TCP #service protocol, usually TCP

real_server 10.0.0.15 80 { #RS IP and port

weight 1 #RS weight

TCP_CHECK {

connect_timeout 5 #timeout of the check request, comparable to haproxy's timeout server

nb_get_retry 3 #number of retries

delay_before_retry 3 #delay before each retry

connect_port 80 #RS port to send the health check to

}

}

real_server 10.0.0.25 80 { #second RS, same checks

weight 1

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

Access test results

[root@192 ~]# curl 10.0.0.25

10.0.0.25

[root@192 ~]# curl 10.0.0.100

10.0.0.15

[root@192 ~]# curl 10.0.0.100

10.0.0.25

[root@192 ~]# curl 10.0.0.100

10.0.0.15

[root@192 ~]# curl 10.0.0.100

10.0.0.25

root@ka1:~# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.100:80 rr

-> 10.0.0.15:80 Route 1 0 0

-> 10.0.0.25:80 Route 1 0 0

root@ka2:~# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.100:80 rr

-> 10.0.0.15:80 Route 1 0 0

-> 10.0.0.25:80 Route 1 0 0

root@ka2:~#

Operations on the backend servers

#Add the second VIP on both RS hosts, 10.0.0.15 and 10.0.0.25

[root@10 ~]# ip a a 10.0.0.200/32 dev lo label lo:2

[root@10 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 10.0.0.100/32 scope global lo:1

valid_lft forever preferred_lft forever

inet 10.0.0.200/32 scope global lo:2

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:1a:f8:e8 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.25/24 brd 10.0.0.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

#Install nginx on 10.0.0.15 and 10.0.0.25 and add virtual hosts

[root@10 ~]# yum -y install nginx

[root@10 ~]# systemctl enable --now nginx.service

#Edit the sub-configuration files

[root@10 ~]# cd /etc/nginx/conf.d/

[root@10 conf.d]# cat www.ehuo.org.conf

server {

listen 80;

server_name www.ehuo.org;

root /data/site1;

}

[root@10 conf.d]# cat www.ehuo.edu.conf

server {

listen 80;

server_name www.ehuo.edu;

root /data/site2;

}

#Pages

[root@10 conf.d]# mkdir /data/site{1,2}

[root@10 conf.d]# cat /data/site1/index.html

www.ehuo.org

10.0.0.15

[root@10 conf.d]# cat /data/site2/index.html

www.ehuo.edu

10.0.0.15

[root@10 conf.d]# systemctl restart nginx.service

#Test the pages

[root@10 ~]# curl -Hhost:www.ehuo.edu 10.0.0.15

www.ehuo.edu

10.0.0.15

[root@10 ~]# curl -Hhost:www.ehuo.org 10.0.0.15

www.ehuo.org

10.0.0.15

#Add the VIP address

[root@10 ~]# ip a a 10.0.0.200/32 dev lo label lo:2

#Repeat the same steps on 10.0.0.25

keepalived configuration

#Configuration on ka1

root@ka1:/etc/keepalived/conf.d# cat www.ehuo.edu.conf

vrrp_instance VI_2 {

state BACKUP #backup for this VIP

interface eth0

virtual_router_id 88 #different VRID: this is a separate virtual router, not the same cluster

priority 80 #lower priority

advert_int 1

nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:2 #second VIP on its own interface label

}

}

virtual_server 10.0.0.200 80 { #the VIP is what changes here

delay_loop 6

lb_algo rr

lb_kind DR

protocol TCP

real_server 10.0.0.15 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 10.0.0.25 80 {

weight 1

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

#Configuration on ka2

root@ka2:/etc/keepalived/conf.d# cat www.ehuo.edu.conf

vrrp_instance VI_2 {

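state MASTER #master for this VIP

interface eth0

virtual_router_id 88 #different VRID: this is a separate virtual router, not the same cluster

priority 100 #higher priority

advert_int 1

nopreempt #per 3.2, this only takes effect when state is BACKUP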

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:2 #second VIP on its own interface label

}

}

virtual_server 10.0.0.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

protocol TCP

real_server 10.0.0.15 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 10.0.0.25 80 {

weight 1

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

#Remember to restart the service

systemctl restart keepalived.service

#Check

root@ka1:~# hostname -I

10.0.0.103 10.0.0.100

root@ka1:/etc/keepalived/conf.d# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.100:80 rr

-> 10.0.0.15:80 Route 1 0 0

-> 10.0.0.25:80 Route 1 0 0

TCP 10.0.0.200:80 rr

-> 10.0.0.15:80 Route 1 0 0

-> 10.0.0.25:80 Route 1 0 0

root@ka2:/etc/keepalived/conf.d# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.100:80 rr

-> 10.0.0.15:80 Route 1 0 0

-> 10.0.0.25:80 Route 1 0 0

TCP 10.0.0.200:80 rr

-> 10.0.0.15:80 Route 1 0 0

-> 10.0.0.25:80 Route 1 0 0

root@ka2:/etc/keepalived/conf.d# hostname -I

10.0.0.104 10.0.0.200

#Test

[root@192 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.100 www.ehuo.org

10.0.0.200 www.ehuo.edu

[root@192 ~]# curl www.ehuo.org

www.ehuo.org

10.0.0.15 :1

[root@192 ~]# curl www.ehuo.org

www.ehuo.org

10.0.0.15

[root@192 ~]# curl www.ehuo.org

www.ehuo.org

10.0.0.15 :1

[root@192 ~]# curl www.ehuo.org

www.ehuo.org

10.0.0.15

[root@192 ~]# curl www.ehuo.edu

www.ehuo.edu

10.0.0.15

[root@192 ~]# curl www.ehuo.edu

www.ehuo.edu

10.0.0.25 :2

The test results show that the dual-master setup is working.

Use dual-master with care in production: performance drops somewhat!

5 High Availability for Other Applications with VRRP Script

With VRRP Script, keepalived can call external helper scripts to monitor resources and adjust priorities dynamically based on the results, which extends high availability to other applications

Reference configuration file

/usr/share/doc/keepalived/keepalived.conf.vrrp.localcheck

![image](https://img-blog.csdnimg.cn/9743d37223d94076a7871381784cf426.png)

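5.1 VRRP Script Configuration

A script can change the priority.

Two steps:

- Define the script

  - vrrp_script: a script that probes whether the service is healthy and adjusts the priority when it is not. Once the application is found to be in an abnormal state, the weight of the MASTER node is reduced below that of the SLAVE node, so the VIP moves to the SLAVE node

When a keepalived_script user exists, the script runs as that user; otherwise it runs as root by default

Note: the block that defines the script must be placed before the vrrp_instance block that calls it

vrrp_script <SCRIPT_NAME> {

script <string>| <quoted-string> #a non-zero exit status triggers the options set in this block

}

- Call the script

  - track_script: calls a script defined with vrrp_script to monitor a resource; it is placed inside the VRRP instance and refers to a previously defined vrrp_script

track_script {

SCRIPT_NAME_1

SCRIPT_NAME_2

}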

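5.1.1 Defining a VRRP Script

vrrp_script <script_name> { #defines a check script; configured outside global_defs

script <string> | <quoted-string> #shell command or path to a script

interval <INT> #interval between runs in seconds, default 1

timeout <INT> #timeout

weight <INTEGER:-254..254> #default 0. With a negative value, a non-zero exit status adds the value to the node's priority and so lowers it (fall). With a positive value, a zero exit status adds the value to the node's priority and so raises it (rise). Negative values are the usual choice

fall <INTEGER> #number of consecutive failures before the script is considered failed; 2 or more is recommended

rise <INTEGER> #number of consecutive successes before a failed script is considered healthy again

user USERNAME [GROUPNAME] #user and group that run the check script

init_fail #start in the failed state and switch to healthy after a successful check

}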
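The weight rule is easy to get backwards, so here is a sketch that computes the priority a node would advertise for a given base priority, weight, and script exit status. It models only the rule described for weight above and ignores fall and rise counting; the function name `effective_priority` is hypothetical, and the clamp to 1-254 is an assumption based on the valid priority range.

```shell
#!/usr/bin/env bash
# effective_priority BASE WEIGHT SCRIPT_EXIT_STATUS
# Prints the priority advertised after applying the vrrp_script weight.
effective_priority() {
    base=$1
    weight=$2
    rc=$3
    prio=$base
    if [ "$weight" -lt 0 ] && [ "$rc" -ne 0 ]; then
        prio=$(( base + weight ))      # failure with a negative weight lowers it
    elif [ "$weight" -gt 0 ] && [ "$rc" -eq 0 ]; then
        prio=$(( base + weight ))      # success with a positive weight raises it
    fi
    # Assumption: keep the result inside the valid 1-254 range.
    [ "$prio" -lt 1 ] && prio=1
    [ "$prio" -gt 254 ] && prio=254
    echo "$prio"
}

effective_priority 100 -30 1   # check fails on a node with priority 100
effective_priority 100 -30 0   # check succeeds: priority unchanged
```

For a master at priority 100 with weight -30, a failing check gives 70, which falls below a backup at priority 80 and so hands the VIP over.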

5.1.2 Calling a VRRP Script

vrrp_instance VI_1 {

...

track_script {

<script_name>

}

}

5.2 Example: Switching Master and Backup Roles with a Script

root@ka1:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_script check_down {

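script "[ ! -f /etc/keepalived/down ]" #succeeds only while /etc/keepalived/down does not exist

interval 1 #interval between runs

weight -30 #when /etc/keepalived/down exists the test returns non-zero and the priority drops by 30

fall 3 #3 consecutive failures mark the check as failed

rise 2 #2 consecutive successes mark it as healthy again

timeout 2 #timeout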

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 66

priority 100

advert_int 1

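nopreempt # only effective when state is BACKUP; with state MASTER keepalived ignores it, which is why ka1 takes the VIP back in the capture below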

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

track_script {

check_down # call the script defined above

}

}

root@ka2:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_script check_down {

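script "[ ! -f /etc/keepalived/down ]" # negated test: returns 0 only while /etc/keepalived/down does not exist

interval 1 # check interval in seconds

weight -30 # lower the priority by 30 when the script fails

fall 3 # mark as failed after 3 consecutive failures

rise 2 # mark as successful after 2 consecutive successes

timeout 2 # timeout in seconds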

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 66

priority 80

advert_int 1

#nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

track_script {

check_down

}

}
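
Both nodes track the same check_down script, so it helps to see what the test expression actually returns. A minimal sketch, using a file in a temporary directory as a stand-in for /etc/keepalived/down (the shell function names check_down and report are only illustrative):

```shell
#!/bin/bash
# Same test as in the vrrp_script block, with the file path passed as an argument.
check_down() {
    [ ! -f "$1" ]
}

# Print how the exit status would be interpreted by the tracking rule.
report() {
    if check_down "$1"; then
        echo "exit 0: check passes, priority unchanged"
    else
        echo "exit non-zero: check fails, weight -30 applies once 'fall' is reached"
    fi
}

dir=$(mktemp -d)       # stand-in for /etc/keepalived
report "$dir/down"     # file absent
touch "$dir/down"
report "$dir/down"     # file present
rm -rf "$dir"
```

Creating the file is therefore a simple manual switch for taking a node out of service, and removing it puts the node back.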

root@ka1:/etc/keepalived/conf.d# touch /etc/keepalived/down

[root@10 site1]# tcpdump -i eth0 -nn host 230.1.1.1

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

17:01:17.177396 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 100, authtype simple, intvl 1s, length 20

17:01:18.180175 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 100, authtype simple, intvl 1s, length 20

17:03:22.408867 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 70, authtype simple, intvl 1s, length 20

17:03:23.409418 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 70, authtype simple, intvl 1s, length 20

17:03:24.096425 IP 10.0.0.104 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 80, authtype simple, intvl 1s, length 20

17:03:25.099180 IP 10.0.0.104 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 80, authtype simple, intvl 1s, length 20

17:03:26.099789 IP 10.0.0.104 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 80, authtype simple, intvl 1s, length 20
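
The capture shows 10.0.0.103 dropping from priority 100 to 70, after which 10.0.0.104 (priority 80) starts advertising. A rough sketch of that comparison, assuming that the node with the highest advertised priority becomes master; tie-breaking between equal priorities is ignored, and pick_master is a made-up name:

```shell
#!/bin/bash
# Hypothetical helper: read "address priority" pairs on stdin
# and print the address with the highest priority.
pick_master() {
    sort -k2,2nr | head -n 1 | awk '{print $1}'
}

# Before "touch /etc/keepalived/down": 100 against 80.
printf '%s\n' "10.0.0.103 100" "10.0.0.104 80" | pick_master
# After the check has failed: 100 - 30 = 70 against 80.
printf '%s\n' "10.0.0.103 $((100 - 30))" "10.0.0.104 80" | pick_master
```

The first call prints 10.0.0.103 and the second prints 10.0.0.104, matching the order of senders in the capture.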

# Test: delete the file

root@ka1:/etc/keepalived/conf.d# rm -f /etc/keepalived/down

[root@10 conf.d]# tcpdump -i eth0 -nn host 230.1.1.1

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

17:26:44.903392 IP 10.0.0.104 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 80, authtype simple, intvl 1s, length 20

17:26:45.906646 IP 10.0.0.104 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 80, authtype simple, intvl 1s, length 20

...

17:26:53.920666 IP 10.0.0.104 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 80, authtype simple, intvl 1s, length 20

17:26:54.525103 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 100, authtype simple, intvl 1s, length 20

17:26:55.527525 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 100, authtype simple, intvl 1s, length 20

17:26:56.529230 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 100, authtype simple, intvl 1s, length 20

17:26:57.530452 IP 10.0.0.103 > 230.1.1.1: VRRPv2, Advertisement, vrid 66, prio 100, authtype simple, intvl 1s, length 20

![image](https://img-blog.csdnimg.cn/b767179a40b44d219c8d41bab98346a0.png)

5.5 Hands-on example: HAProxy high availability

![image](https://img-blog.csdnimg.cn/c69e8437558b47b08e1806c353414a32.png)

# First configure haproxy on both ka1 and ka2

yum -y install haproxy # CentOS/RHEL

apt install haproxy -y # Ubuntu/Debian

root@ka1:~# cat /etc/haproxy/haproxy.cfg

listen status

stats enable

bind 0.0.0.0:9999

stats uri /haproxy_status

listen www.ehuo.org

bind 10.0.0.100:80

server 10.0.0.15 10.0.0.15:80 check

server 10.0.0.25 10.0.0.25:80 check

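# Enable the kernel parameter on both ka1 and ka2 so that haproxy can bind 10.0.0.100 even on the node that does not currently hold the VIP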

[root@ka1,2 ~]#vim /etc/sysctl.conf

net.ipv4.ip_nonlocal_bind = 1

[root@ka1,2 ~]#sysctl -p

# Create the script

root@ka1:/etc/keepalived/conf.d# cat check_haproxy.sh

#!/bin/bash

#

#**********************************************************************************************

#Author: ehuo

#QQ: 787152176

#Date: 2022-07-16

#FileName: check_haproxy.sh

#URL: 787152176@qq.com

#Description: The test script

#Copyright (C): 2022 All rights reserved

#*********************************************************************************************

killall -0 haproxy || systemctl restart haproxy # self-healing: try a restart first and fail over only if haproxy cannot be started; not particularly rigorous!

root@ka1:/etc/keepalived/conf.d# chmod +x check_haproxy.sh
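
As the comment in the script admits, restarting inside the check is not very rigorous. One possible stricter variant is sketched below under assumptions: the function name check_or_restart and the CHECK_CMD, RESTART_CMD and RECHECK_DELAY variables are invented here so that the logic can be tried without haproxy installed. By default they fall back to the same killall -0 and systemctl restart commands used above.

```shell
#!/bin/bash
# Sketch of a stricter health check (all names are hypothetical).
# CHECK_CMD and RESTART_CMD default to the commands from check_haproxy.sh.
CHECK_CMD=${CHECK_CMD:-"killall -0 haproxy"}
RESTART_CMD=${RESTART_CMD:-"systemctl restart haproxy"}

check_or_restart() {
    # Healthy: report success immediately.
    $CHECK_CMD >/dev/null 2>&1 && return 0
    # Unhealthy: try one restart and ignore its own exit status.
    $RESTART_CMD >/dev/null 2>&1 || true
    sleep "${RECHECK_DELAY:-1}"
    # The exit status of the re-check is what the caller sees.
    $CHECK_CMD >/dev/null 2>&1
}
```

To use it as a vrrp_script, the file would end with a call to check_or_restart so that its status becomes the script's exit status. The restart plus the delay has to finish within the timeout configured in the vrrp_script block, otherwise the run counts as a failure anyway.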

root@ka1:/etc/keepalived/conf.d# cat www.ehuo.org.conf

vrrp_script check_haproxy {

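script "/etc/keepalived/conf.d/check_haproxy.sh" # path to the script

interval 1 # check interval in seconds

weight -30 # lower the priority by 30 when the script fails

fall 3 # mark as failed after 3 consecutive failures

rise 2 # mark as successful after 2 consecutive successes

timeout 2 # timeout in seconds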

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 66

priority 100

advert_int 1

nopreempt # only effective when state is BACKUP; keepalived ignores it with state MASTER

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.100 dev eth0 label eth0:1

}

track_script {

check_haproxy # call the script defined above

}

}

#

while :; do curl www.ehuo.org ; sleep 1 ;done
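
While the loop above runs, stopping haproxy on the master makes the failover visible. A small sketch that also counts failed requests, which gives a rough idea of how long the VIP was unreachable. The names probe and PROBE_CMD are hypothetical; the curl options -s, -f, -o and --max-time are standard.

```shell
#!/bin/bash
# Sketch: run a probe N times and report how many attempts failed.
# PROBE_CMD is a hypothetical override; by default it requests the site used in the text.
PROBE_CMD=${PROBE_CMD:-"curl -sf -o /dev/null --max-time 1 www.ehuo.org"}

probe() {
    local count=$1 delay=${2:-1} failed=0 i
    for ((i = 0; i < count; i++)); do
        # Count the attempt as failed when the probe exits non-zero.
        $PROBE_CMD || failed=$((failed + 1))
        sleep "$delay"
    done
    echo "failed $failed of $count requests"
}
```

For example, `probe 60` sends one request per second for about a minute and prints the number of failures at the end.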

![image](https://img-blog.csdnimg.cn/05db18560dfd4078bacb01edb974b295.png)

vim check_haproxy.sh

killall -0 haproxy # check health only

ipvsadm

ipvsadm -A -t 10.0.0.100:80 -s rr

ipvsadm -a -t 10.0.0.100:80 -r <RIP>:80 -m # -m: NAT (masquerading); use -g instead for DR (gateway) mode

Health checks and high availability

Default keepalive client: monitoring from a browser

5.6 Hands-on example: high availability for MySQL dual-master mode

![image](https://img-blog.csdnimg.cn/96577b1c8f1c4eccb0be191e7ce12af9.png)

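6 Comprehensive hands-on case

- Compile and install the latest HAProxy LTS release, and compile and install Keepalived

- Enable HAProxy multithreading, with the thread count equal to the number of CPU cores and the threads bound to CPU cores

- Because there are many services, and to avoid configuration mistakes, use one configuration file per service and keep them all under /etc/haproxy/conf.d

- Use ACLs to load-balance multiple domains on a single IP; two domains are served: www.wang.org and www.wang.net

- Set up MySQL master-slave replication and use HAProxy as a layer-4 reverse proxy for MySQL

- For www.wang.org, build on HAProxy+Nginx+Tomcat+MySQL and run the Java application Jpress

- For www.wang.net, build on HAProxy+Nginx+PHP+MySQL+Redis, run the PHP application phpMyAdmin, and store sessions centrally in Redis for session persistence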
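
For the multithreading item in the list above, one possible starting point is to derive the settings from the CPU count. The sketch below only prints two lines intended for the global section of haproxy.cfg. nbthread and cpu-map are real HAProxy keywords, but the helper name haproxy_thread_settings is invented, and the exact cpu-map form should be checked against the documentation of the HAProxy version that gets installed.

```shell
#!/bin/bash
# Hypothetical helper: print thread settings for the HAProxy global section.
#   $1 = number of CPU cores (defaults to the output of nproc)
haproxy_thread_settings() {
    local cpus=${1:-$(nproc)}
    echo "nbthread $cpus"
    # Bind thread N of process 1 to CPU N-1.
    echo "cpu-map auto:1/1-$cpus 0-$((cpus - 1))"
}

haproxy_thread_settings 4
# prints:
#   nbthread 4
#   cpu-map auto:1/1-4 0-3
```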

![image](https://img-blog.csdnimg.cn/e51b07f60f97453ab88c5b47bec61637.png)